Red Hat has announced a signed agreement to acquire Neural Magic, a developer of software and algorithms that accelerate generative AI (gen AI) inference workloads. According to Red Hat, Neural Magic’s expertise in inference performance engineering and its commitment to open source align with Red Hat’s vision of high-performing AI workloads that map directly to customer-specific use cases and data across the hybrid cloud.

Neural Magic may not be familiar to the HPC-AI market. It was spun out of MIT in 2018 to build performant inference software for deep learning. Neural Magic is known for its DeepSparse runtime, a CPU inference package that takes advantage of sparsity to accelerate neural network inference.

Less Can Be Better

DeepSparse (GitHub) makes the most of CPU infrastructure (it favors CPUs with AVX-512) to run computer vision (CV), natural language processing (NLP), and large language models (LLMs). The DeepSparse engine is designed to execute neural networks so that data moving between layers stays in the CPU cache. This optimization rests on the observation that neural network layers do not need to be dense (many connections) but can instead be sparse (like the human brain). Sparse layers can be computed quickly because many of their weights are zero, and multiplications by zero need no CPU or GPU cycles.
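To make the "zero weights cost nothing" point concrete, here is a minimal Python sketch, assuming a toy layer stored in compressed sparse row (CSR) form. It counts the multiplications performed by a dense and a sparse matrix-vector product. The function names are hypothetical, and the sketch says nothing about how DeepSparse itself schedules work or keeps data in cache.

```python
from typing import List, Tuple


def to_csr(dense: List[List[float]]) -> Tuple[List[float], List[int], List[int]]:
    """Store only the nonzero weights in compressed sparse row (CSR) form."""
    values: List[float] = []
    col_idx: List[int] = []
    row_ptr: List[int] = [0]
    for row in dense:
        for j, w in enumerate(row):
            if w != 0.0:
                values.append(w)
                col_idx.append(j)
        row_ptr.append(len(values))  # row i spans values[row_ptr[i]:row_ptr[i + 1]]
    return values, col_idx, row_ptr


def dense_matvec(dense: List[List[float]], x: List[float]) -> Tuple[List[float], int]:
    """Multiply every weight, zeros included; return (result, multiply count)."""
    out, mults = [], 0
    for row in dense:
        acc = 0.0
        for w, xj in zip(row, x):
            acc += w * xj
            mults += 1
        out.append(acc)
    return out, mults


def csr_matvec(values: List[float], col_idx: List[int], row_ptr: List[int],
               x: List[float]) -> Tuple[List[float], int]:
    """Multiply only the stored nonzero weights; zero weights are never touched."""
    out, mults = [], 0
    for i in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[i], row_ptr[i + 1]):
            acc += values[k] * x[col_idx[k]]
            mults += 1
        out.append(acc)
    return out, mults


if __name__ == "__main__":
    # Hypothetical 4x4 layer with 75% of its weights set to zero.
    W = [
        [0.0, 2.0, 0.0, 0.0],
        [0.0, 0.0, 0.0, -1.0],
        [3.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 0.5, 0.0],
    ]
    x = [1.0, 2.0, 3.0, 4.0]
    y_dense, n_dense = dense_matvec(W, x)
    y_sparse, n_sparse = csr_matvec(*to_csr(W), x)
    print(y_dense == y_sparse, n_dense, n_sparse)  # prints: True 16 4
```

Both products give the same answer, but the sparse version does a quarter of the multiplications. Real sparse kernels add vectorization and memory-layout tricks on top of this basic idea.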

Creating sparse networks can be done in three ways:

- Pruning involves training a dense network and then systematically removing the weights that contribute least to the network’s output (i.e., weights that are close to zero).
- Sparse training integrates sparsity directly into the training process, encouraging weights to move toward zero.
- Scheduled sparsity gradually increases the level of sparsity during training.
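A minimal sketch of the first and third approaches, assuming weights held in a plain Python list: magnitude pruning zeros out the smallest-magnitude weights, and a schedule raises the target sparsity over training steps. The cubic ramp and the names `magnitude_prune` and `scheduled_sparsity` are illustrative assumptions, not Neural Magic’s recipes.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(round(sparsity * len(weights)))
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def scheduled_sparsity(step: int, start: int, end: int,
                       final: float, initial: float = 0.0) -> float:
    """Ramp sparsity from `initial` to `final` between training steps `start` and `end`.

    Assumed cubic ramp: sparsity rises quickly at first and levels off near `final`.
    """
    if step <= start:
        return initial
    if step >= end:
        return final
    progress = (step - start) / (end - start)
    return final + (initial - final) * (1.0 - progress) ** 3


if __name__ == "__main__":
    w = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2, -0.02, 0.5]  # hypothetical weights
    for step in (0, 25, 50, 75, 100):
        s = scheduled_sparsity(step, start=0, end=100, final=0.75)
        # In real training, optimizer updates would happen between pruning steps.
        print(step, round(s, 3), magnitude_prune(w, s))
```

In a real training loop the optimizer would update the surviving weights between pruning steps, giving the network a chance to recover accuracy before the next increase. That recovery time is the usual motivation for ramping sparsity gradually rather than pruning in one shot.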

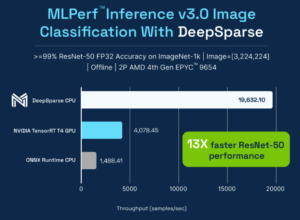

While sparsity provides a computational speed-up and the ability to run on CPUs at GPU speeds, it is not without issues. The amount of sparsity directly affects model accuracy, and too much sparsity degrades the network’s performance. When the right sparsity level is found, however, the results can be impressive. The image below shows MLPerf results for DeepSparse on CPUs (2P AMD Epyc 9654) and a GPU (Nvidia T4): the CPUs ran 13 times faster than the (low-powered) GPU.

Even if a GPU were 10 times faster than the T4, DeepSparse would still keep pace. The result is even more significant because data centers and clouds have far more spare CPU cycles than spare GPU cycles.

Comparison of CPU (2P Epyc 9654) and GPU (Nvidia T4) benchmarks from MLPerf Inference v3.0 Datacenter results. MLPerf ID: 3.0-1472 (Open), 3.0-0110 (Closed), 3.0-1474 (Open). (Source: Neural Magic)

Then There Is vLLM

Developed at UC Berkeley, vLLM (Virtual Large Language Model) is a community-driven open source project (GitHub) for open model serving (how gen AI models infer and solve problems), with support for all key model families, advanced inference acceleration research, and diverse hardware backends, including AMD GPUs, AWS Neuron, Google TPUs, Intel Gaudi, Nvidia GPUs, and x86 CPUs. Neural Magic has contributed extensively to the vLLM project.

vLLM was first introduced in the paper “Efficient Memory Management for Large Language Model Serving with PagedAttention” by Kwon et al. The paper identified inefficiencies in how current LLM serving systems manage key-value (KV) cache memory, which can result in slow inference and a high memory footprint. vLLM aims to optimize the serving and execution of LLMs through efficient memory management techniques. Its key features are:

- Optimized memory management that maximizes utilization of the available hardware without running into memory bottlenecks when running LLMs.
- Dynamic batching that adjusts batch sizes and sequences to better fit the memory and compute capacity of the hardware.
- A modular design that allows easy integration with various hardware accelerators and scaling across multiple devices or clusters.
- Efficient use of CPU, GPU, and memory resources.
- Seamless integration with existing machine learning frameworks and libraries.
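The central PagedAttention idea from the Kwon et al. paper is that each sequence’s KV cache is stored in fixed-size blocks that need not be contiguous, with a per-sequence block table translating token positions to physical blocks. The toy Python class below sketches that bookkeeping under simplifying assumptions (one shared block pool, one KV slot per token). The class and method names are hypothetical and do not reflect vLLM’s actual code.

```python
from typing import Dict, List, Tuple


class PagedKVCache:
    """Toy KV-cache block manager in the spirit of PagedAttention (not vLLM's code)."""

    def __init__(self, num_blocks: int, block_size: int) -> None:
        self.block_size = block_size
        self.free_blocks: List[int] = list(range(num_blocks))  # shared physical pool
        self.block_tables: Dict[str, List[int]] = {}  # seq -> physical block ids
        self.lengths: Dict[str, int] = {}  # seq -> tokens stored so far

    def append_token(self, seq_id: str) -> None:
        """Reserve KV space for one more token, allocating a block only when needed."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length % self.block_size == 0:  # last block is full, or no block yet
            if not self.free_blocks:
                raise MemoryError("no free KV blocks; a scheduler would queue or preempt")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def physical_slot(self, seq_id: str, pos: int) -> Tuple[int, int]:
        """Translate a token position into (physical block id, offset in block)."""
        block = self.block_tables[seq_id][pos // self.block_size]
        return block, pos % self.block_size

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)

    def wasted_slots(self) -> int:
        """Reserved but unused slots: at most block_size - 1 per live sequence."""
        return sum(len(t) * self.block_size - self.lengths[s]
                   for s, t in self.block_tables.items())


if __name__ == "__main__":
    cache = PagedKVCache(num_blocks=8, block_size=4)
    for _ in range(6):
        cache.append_token("a")  # 6 tokens -> 2 blocks
    for _ in range(3):
        cache.append_token("b")  # 3 tokens -> 1 block
    print(cache.block_tables, cache.wasted_slots(), len(cache.free_blocks))
    # prints: {'a': [7, 6], 'b': [5]} 3 5
    cache.free("a")
    print(len(cache.free_blocks))  # prints: 7
```

Because blocks are allocated only as tokens arrive, each live sequence wastes at most `block_size - 1` slots instead of reserving memory for its maximum possible length up front. The memory saved this way is what lets a server batch more requests together.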

Like DeepSparse, vLLM focuses on optimization and performance. And while a pile of GPUs might be better, vLLM, like DeepSparse, does not depend on them: it supports diverse hardware backends, including CPUs.

Back to the Agreement

Red Hat believes AI workloads must run wherever customer data lives across the hybrid cloud; this makes flexible, standardized, and open platforms and tools necessary, as they enable organizations to select the environments, resources, and architectures that best align with their unique operational and data needs. The plan is to make gen AI more accessible to more organizations through the open innovation of vLLM.

Given this brief technical background, Red Hat’s interest in Neural Magic makes sense. With Neural Magic’s technology and performance engineering expertise, Red Hat can accelerate its vision for AI’s future. To address the challenges of wide-scale enterprise AI, Red Hat plans to use open source innovation to further democratize access to AI’s transformative power by providing:

- Open source-licensed models, from 1B to 405B parameters, that can run anywhere they are needed across the hybrid cloud: in corporate data centers, on multiple clouds, and at the edge.
- Fine-tuning capabilities that make it easier for organizations to customize LLMs to their private data and use cases with a stronger security footprint.
- Inference performance engineering expertise, resulting in greater operational and infrastructure efficiencies.
- A partner and open source ecosystem and support structures that enable broader customer choice, from LLMs and tooling to certified server hardware and underlying chip architectures.

vLLM as an Open Pathway to Extend Red Hat AI

Neural Magic brings its expertise with the vLLM and DeepSparse technologies to build an open, enterprise-grade inference stack that enables customers to optimize, deploy, and scale LLM workloads across hybrid cloud environments with full control over infrastructure choice, security policies, and model lifecycle. Neural Magic also conducts model optimization research, builds LLM Compressor (a unified library for optimizing LLMs with state-of-the-art sparsity and quantization algorithms), and maintains a repository of pre-optimized models ready to deploy with vLLM.
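LLM Compressor’s own API is not shown here. As a generic illustration of what weight quantization does, the sketch below applies symmetric per-tensor INT8 quantization to a small, already-pruned weight list. The function names and the per-tensor scheme are assumptions chosen for brevity; production tools typically use finer-grained schemes.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map 8-bit integers back to approximate floating-point weights."""
    return [scale * v for v in q]


if __name__ == "__main__":
    w = [0.0, 0.42, -1.27, 0.0, 0.05, 0.9]  # hypothetical, already-pruned weights
    q, scale = quantize_int8(w)
    w_hat = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(w, w_hat))
    print(q, scale, max_err)
```

In this simple scheme sparsity and quantization compose cleanly: symmetric quantization maps zero exactly to zero, so pruned weights stay pruned, and the reconstruction error for each weight is at most half the scale.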

Brian Stevens, CEO of Neural Magic, describes the synergy between the two companies as follows: “Open source has proven time and again to drive innovation through the power of community collaboration. At Neural Magic, we’ve assembled some of the industry’s top talent in AI performance engineering with a singular mission of building open, cross-platform, ultra-efficient LLM serving capabilities. Joining Red Hat is not only a cultural match, but will benefit companies large and small in their AI transformation journeys.”

This article first appeared on sister site HPCwire.