Published on July 27, 2025 7:13 PM GMT

Link to our arXiv paper: https://arxiv.org/abs/2507.15886

TL, DR;: We study how to efficiently combine multiple monitors with different performance and cost profiles into a single protocol. When the number of monitors is small (2-4), one should simply brute force search over all monitor invocation strategies (i.e., which monitor to call first, when to call an additional monitor). For every strategy, apply the Neyman Pearson lemma and audit regions with the highest likelihood ratios . Measure the recall and pick the best one.

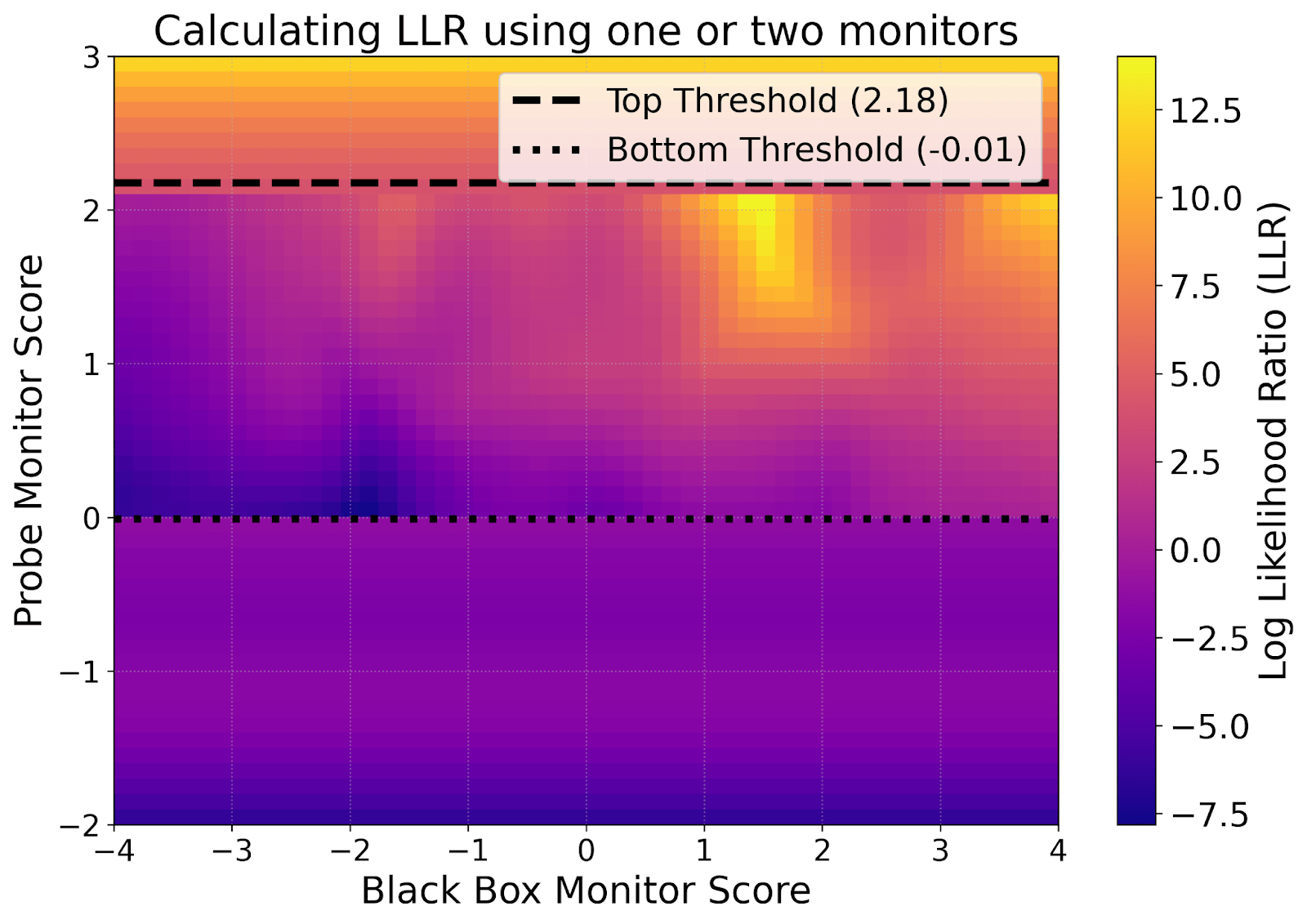

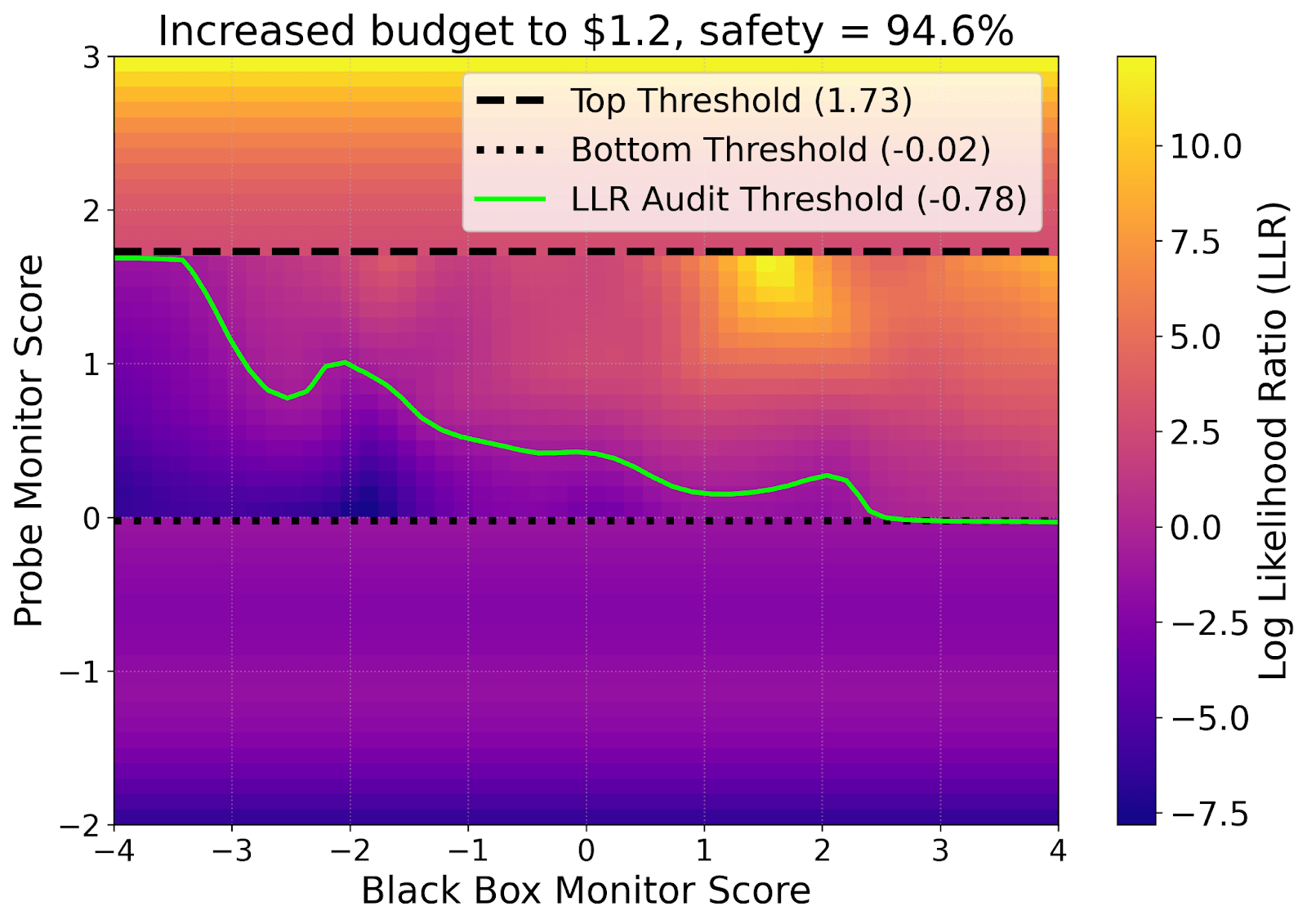

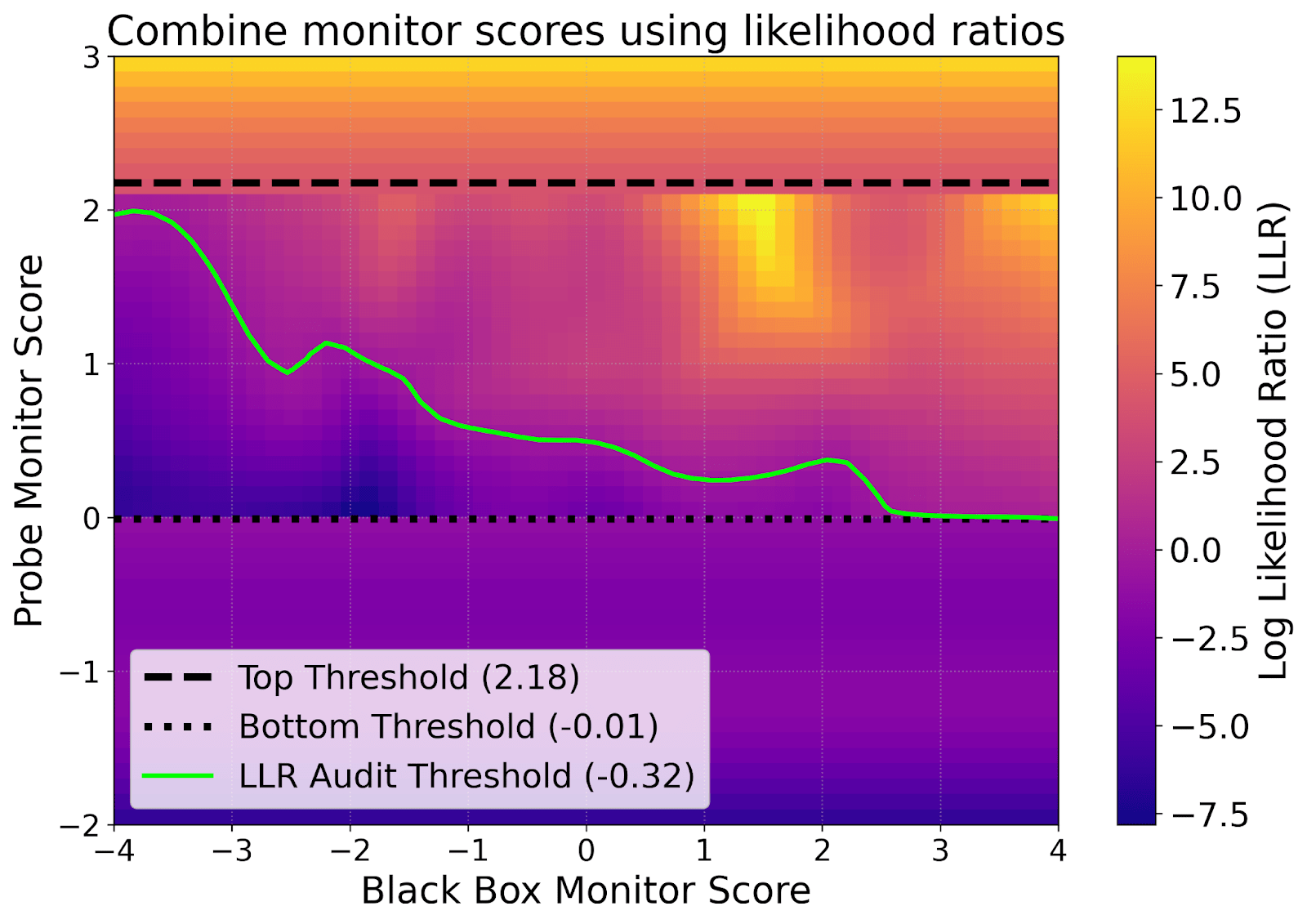

In this example, you call your black box monitor only if the probe monitor score is between -0.01 and 2.18, and you audit everywhere with a log likelihood ratio above -0.32 (above the green line). This set of parameters achieves the highest recall given our costs and budget.

Introduction

AIs could perform a variety of undesirable actions, such as outputting copyrighted material, giving instructions for chemical weapons, or even engaging in acts of sabotage as misaligned agents. By monitoring AI outputs, we can apply safety interventions on suspicious model outputs. A safety intervention could take many forms, such as replacing the output with a standard refusal, editing the output using another model, or calling a human auditor to review the action. For brevity, we also call our safety intervention “audit” in this post.

We study the setting where we have access to multiple monitors with different cost and performance profiles. Taking monitor performances as given, how could we combine these monitors in the most cost efficient manner? When do we call an additional monitor, and when should we apply our safety intervention?

In this post, we’ll illustrate how to apply an algorithm that optimally combines these monitors. We’ll give a detailed example using a setting where we have access to a probe monitor and a black box monitor using data from Parrack et al. (2025). Our paper on arXiv contains more details on our mathematical model, algorithm, and empirical results on a control setting using two LLM monitors of different sizes. The method we developed works with any two monitors, so I decided to use Parrack’s probe data in this post since I figured more people would click on something that said “combining probe and black box monitors” instead of “cost constrained runtime monitors.”

Two example monitors

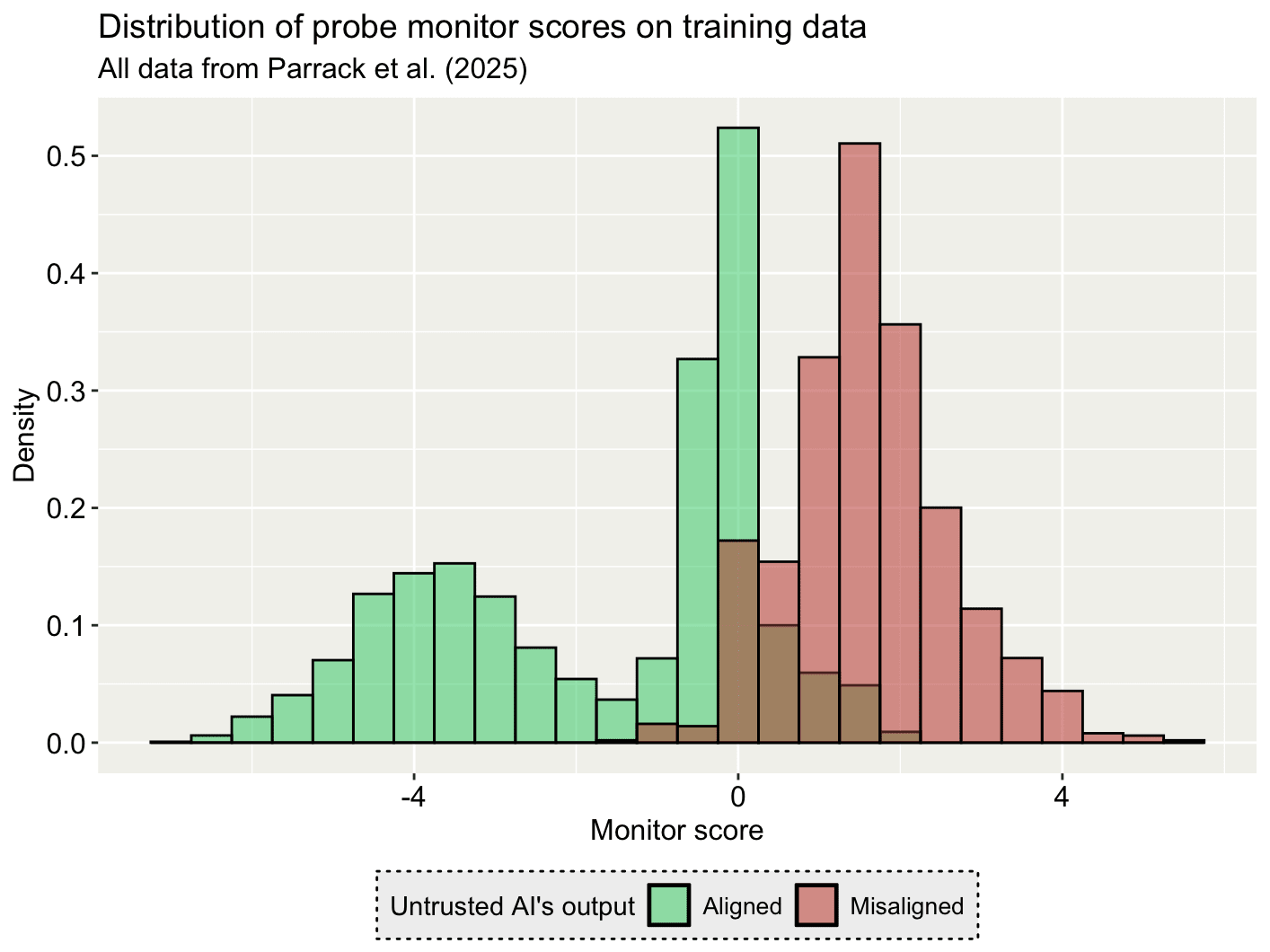

We have access to a probe monitor with the following score distribution

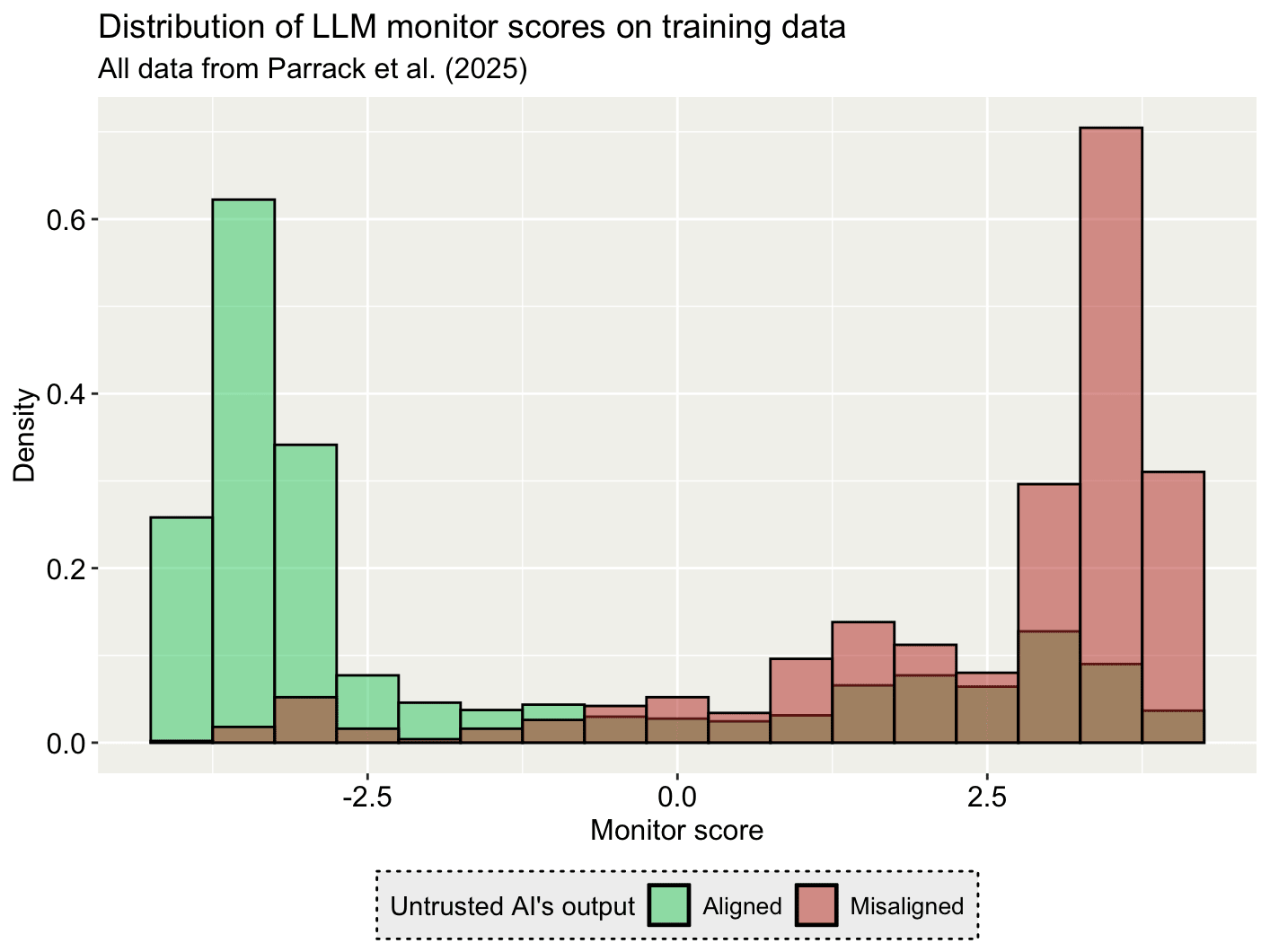

We also have access to a black box monitor with this distribution on our data:

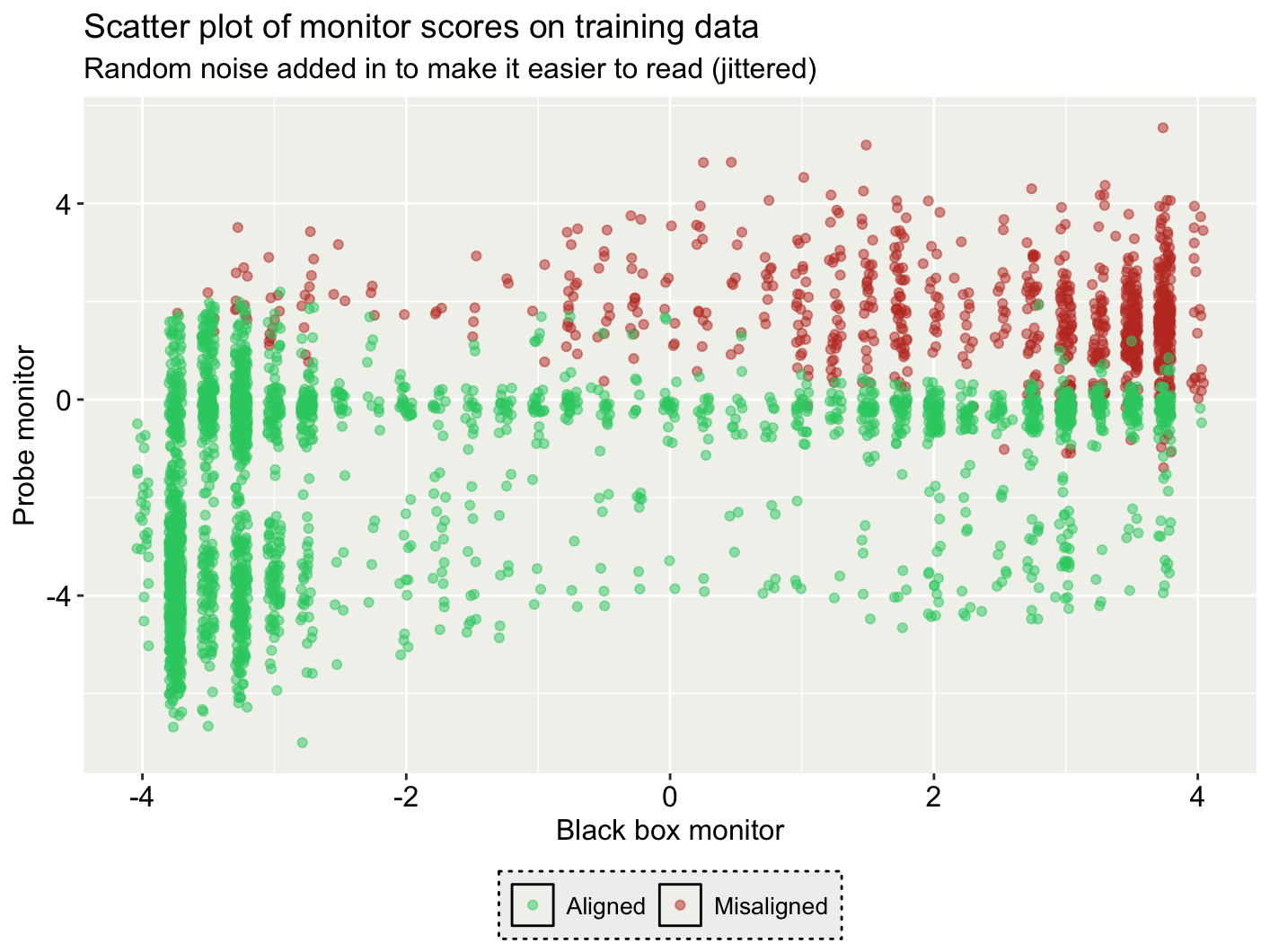

And they have the following joint distribution:

Here are the costs of each action in our example, although the general approach works with any cost vector:

| Action (per output from untrusted AI) | Cost (relative to black box monitor=$1) |

| Using the probe | $0.000001 |

| Black box monitor | $1 |

| Applying our safety intervention/Audit | $20 |

We are interested in the case where we can trade off between spending money on our safety intervention or monitoring. For example, an AI company could choose between more money on GPUs for monitors, or more money on human auditors to look at their output, or forgo revenue by increasing the overrefusal rates of their chatbot (here “refusal” is our safety intervention).

As it’ll become clear later, the case where we have separate budgets for monitoring and the safety intervention is a strictly easier optimization problem.

Optimization problem with two monitors

Our objective is to maximize our chance of applying the safety intervention to any misaligned output. At the same time, we’re subject to an average-case budget for aligned outputs. That is, monitoring and safety interventions cannot cost more than Y for aligned outputs on average.

Formally, we have the following constrained optimization problem. Our safety measure is the proportion of misaligned outputs we audit/apply the safety intervention to (i.e., the recall rate). We want to maximize

subject to

We evaluate budget compliance on aligned outputs because: (1) misaligned outputs are rare in practice, as even misaligned AI systems may "act aligned" most of the time, and (2) a significant portion of total operational cost stems from false positives.

Picking where to audit given monitor calls

We solve this problem using backwards induction. We start by working out the optimal auditing policy for a fixed monitor invocation strategy. Once we fix the monitor invocation strategy, the set of possible information we can observe is fixed. We can also calculate how much you’ll spend on monitors on average given the monitor calls.

For example, suppose we always call the probe monitor first (spending ), and call the second monitor if the probe monitor score is between -0.01 and 2.18 (same as the first figure). This means that we will call the second monitor 19.6% of the time if the output is aligned (again, we evaluate cost on the aligned distribution). This also means that we have $0.804 left for auditing and can afford an audit false positive rate of 4% (i.e., P(Apply Safety Intervention | Output is Aligned) < 0.04).

We’ll now decide where to apply our safety intervention, given our monitor calls. We model this as a hypothesis testing problem, where we have two hypotheses, aligned and misaligned. We want to maximize our test power P(Classify as misaligned|Is misaligned) subject to a fixed false positive rate P(Classify as misaligned|Is aligned). We define the likelihood ratio as

The Neyman Pearson lemma states that the likelihood ratio test, where all inputs with likelihood ratio above a certain $\tau$ is classified as positive (i.e., misaligned) and the rest is classified aligned is the most powerful test.

That is, for any given false positive rate, it has the highest true positive rate. Since only the ordering matters, we could use any monotonic transformation of likelihood ratios, and we use the log likelihood ratio.

Going back to our earlier example, we estimate the likelihood ratio on our dataset using kernel density methods. Since we call our second monitor between -0.01 and 2.18, we can calculate the likelihood ratio between these two points on the y-axis using two monitors. Outside of these points, we calculate the likelihood ratio using only the probe monitor.

The Neyman-Pearson lemma tells us that we should find a (log) likelihood ratio (LLR) threshold and audit everything above that threshold. Given our budget, the threshold in this example is an LLR of -0.32. If we audit everything with an LLR above -0.32, we will audit around 4% of aligned outputs, audit 93.7% of misaligned outputs (our safety metric), and spend on average $1 per aligned sample, exhausting our budget.

Another way to explain this specific optimized strategy is the following: Use the probe first; audit immediately if the score is very high, end immediately if the score is not high, and if it’s in the middle, call a second monitor, then use a kernel method to ensemble your two monitor scores and audit if it’s above a certain cutoff.

(One could imagine regularizing the decision boundary more by enforcing monotonicity constraints or by simply increasing the kernel width.)

Just brute force where to call monitors

Now we know where to apply our safety intervention given our monitor calling strategy, how do we figure out the best monitor strategy? Since we’re likely using a small number of monitors, we can just brute force search through all possible orders of calling our monitors and a grid of possible thresholds for calling a second (or third) monitor. Appendix A.6. of our paper goes through the exact sweeping algorithm used for two monitors, and Appendix A.8. describes the complexity of the algorithm. The main idea is that this is a massively parallel search problem, but the number of thresholds to search over explodes exponentially. Still, with something like 2-4 monitors it seems quite doable if we call an additional monitor in a single contagious region (i.e., not something like “call the second monitor if the score is between 1-3 or 4-5”).

We spent a bunch of time trying to characterize what the optimal region to call the second monitor could be, but it’s just quite difficult because we have this budget constraint. Then we realized that it’s easy to just sweep through a range of thresholds, and that solves our problem!

If we can’t trade off between our monitor budget and auditing budget, that just means that the space of monitor strategies we need to sweep through is much smaller: before we had to consider different points on the “spending on monitors vs spending on auditing” trade off line, and now we don’t.

Summary:

Given a fixed set of monitors with different costs and performances, we can combine them into a single monitoring protocol in by following these steps:

- Sweep over all plausible monitor invocation strategies.For each configuration, calculate how much money you have left and the implied audit false positive rate from the remaining budget.Audit all monitor score regions where the likelihood ratio for P(monitor score | misalignment)/P(monitor score | alignment) exceed some threshold. Calibrate the threshold to exhaust your budget.Calculate safety on some dataset.Pick the monitor calling strategy that has the highest safety.

Some more examples

Here’s what the optimal decision boundaries would look like if we had a budget of $1.2 per sample.

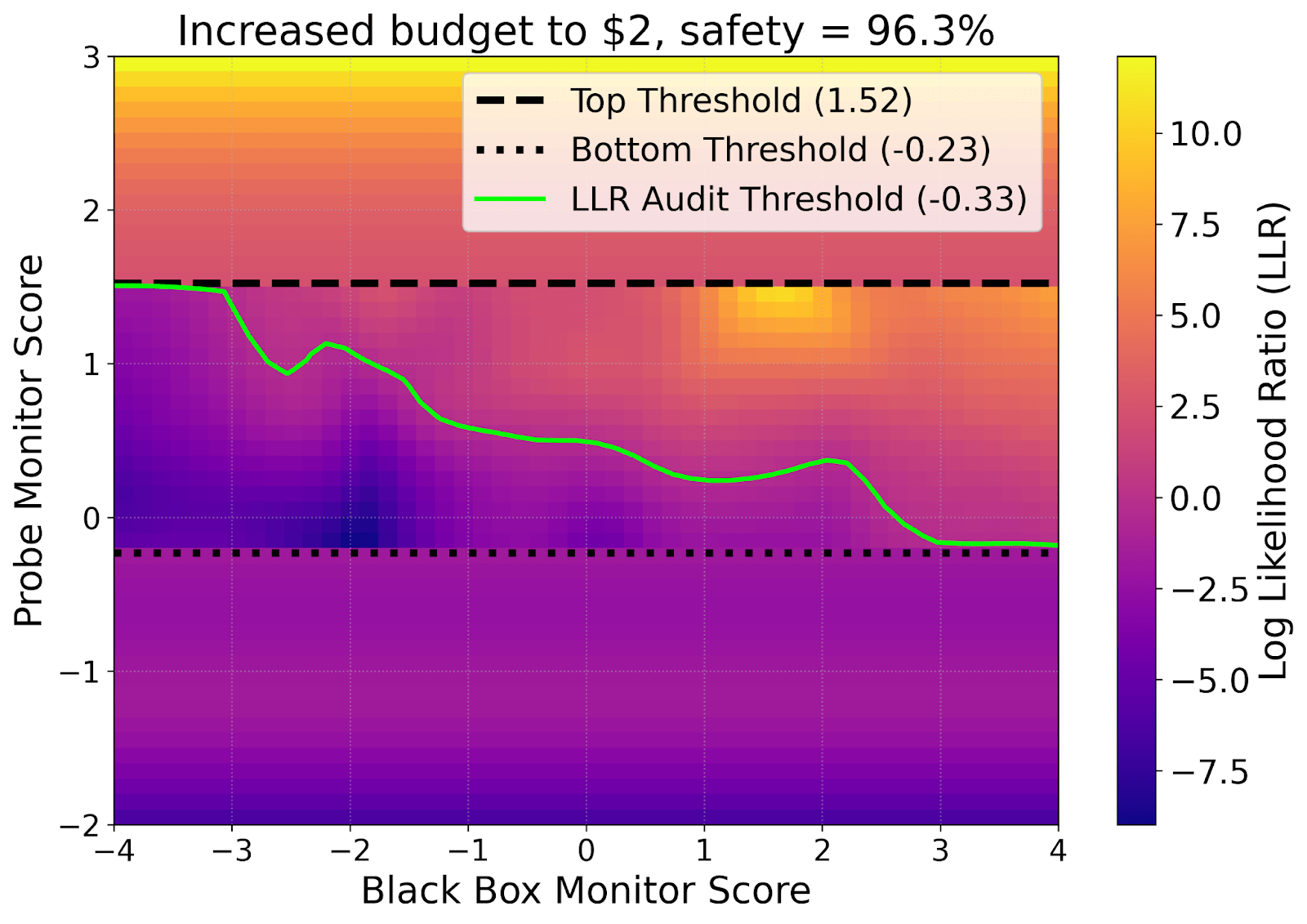

And if we had a budget of $2 per sample

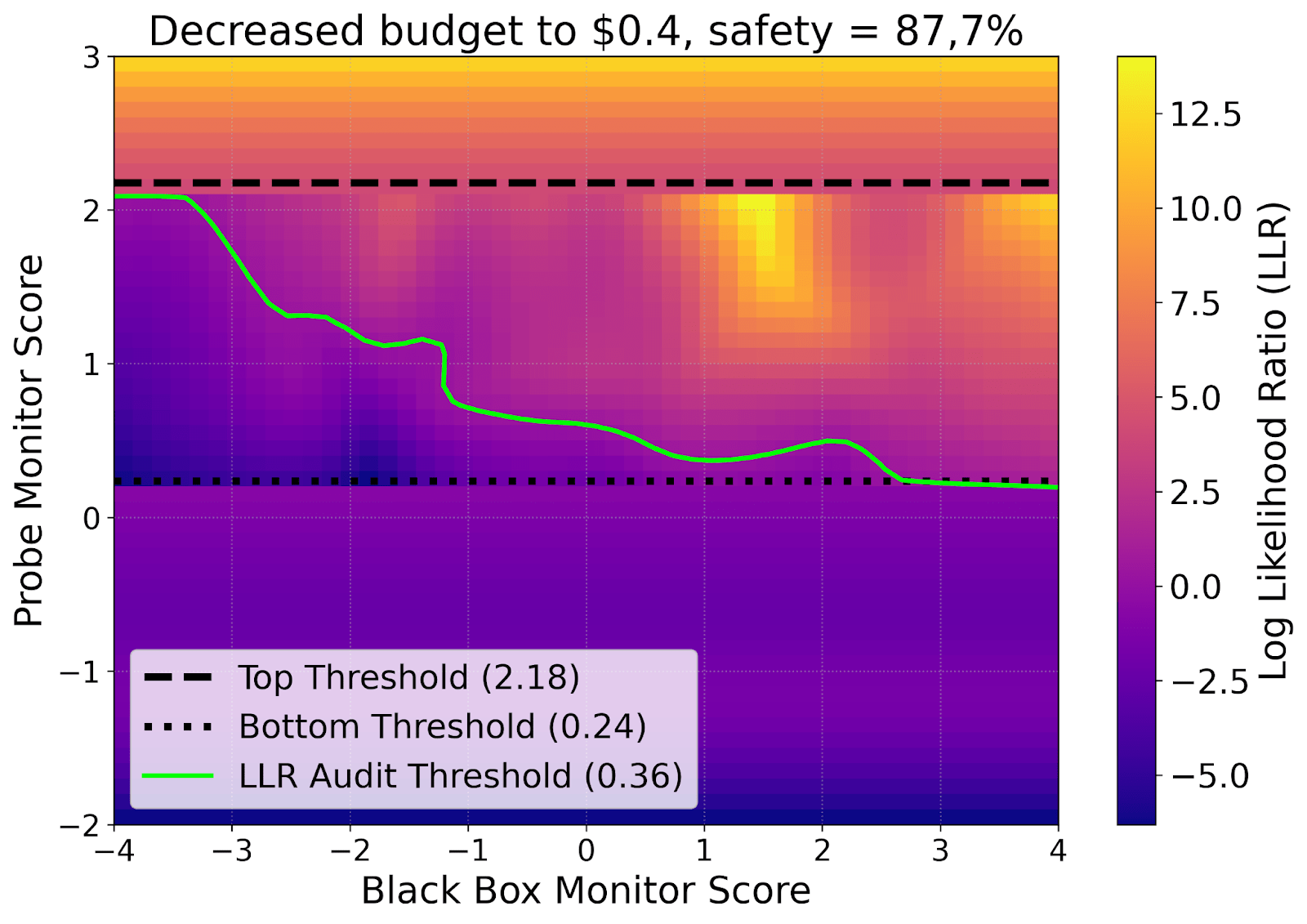

Or $0.4 per sample

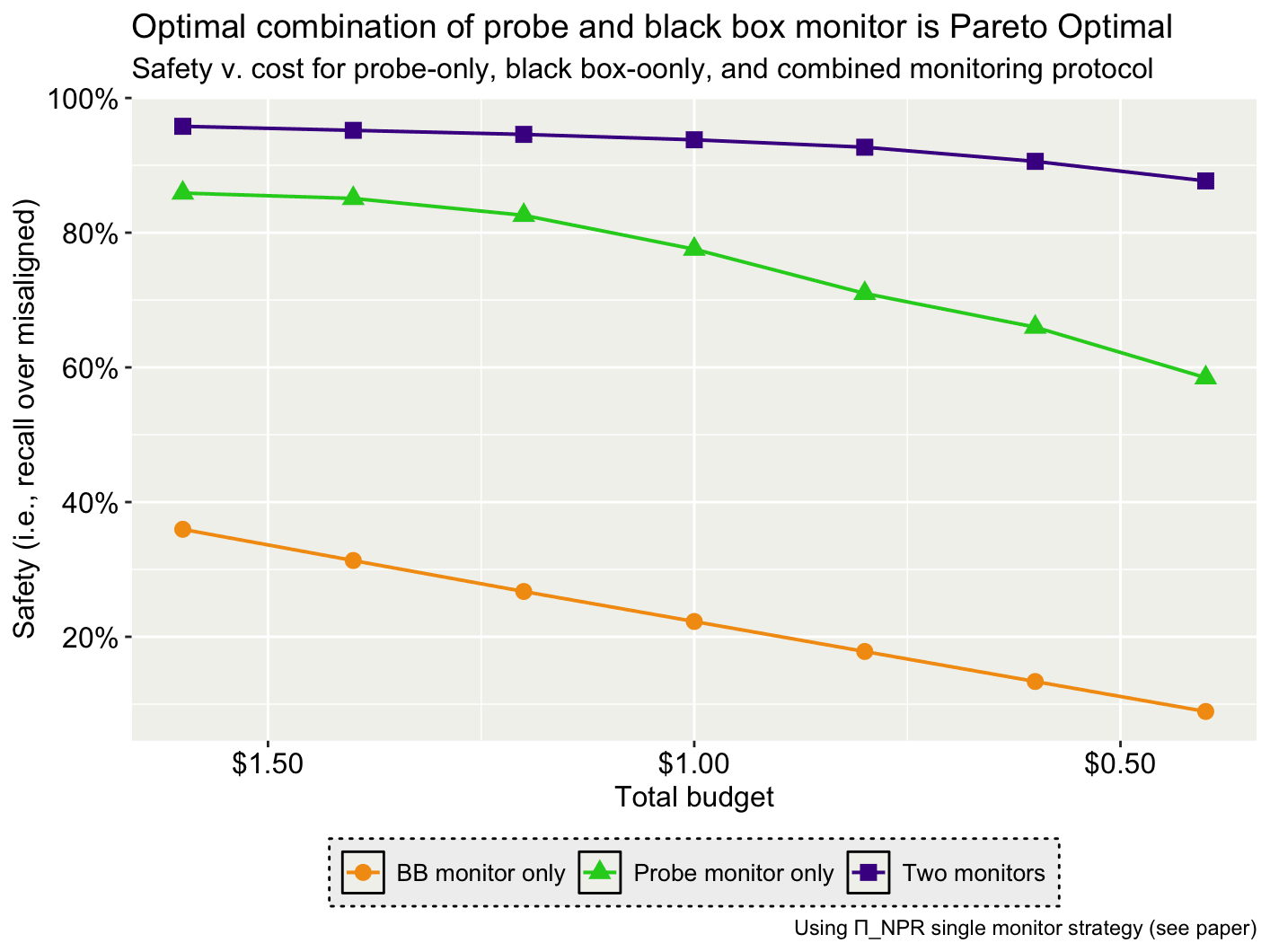

Pareto Frontiers with two monitors v. only one monitor

By varying the total budget and solving the optimization problem, we can trace out the cost curve for monitoring. I’ve also included the safety achievable at a given cost point when using a single monitor.

The single monitor strategy used here ($\Pi_{\text{NPS}}$ in the paper) allows for using the monitor < 100% of the time, which is why safety > 0% even at points where the budget is less than the black box monitor’s cost.

Check out our paper for more detailed discussion of the framework, related literature, and more results!

Discuss