Published on July 3, 2025 5:02 PM GMT

Recently there has been some discussion of AI safety plans, which inspired me to work on this; I thought it might be relevant.

Project summary

Timelines to AGI could be very short (2-4 years) and we need solid plans to address the risks. Although some useful general outlines of plans and a few detailed scenario analyses have been produced, comprehensive plans remain underspecified. This project will establish a prestigious contest that elicits proposals which address short timeline scenarios, fill in the gaps of existing plans, and red-team AGI safety strategies. They should address strong possibilities of short AGI timelines (2-4 years), no major safety breakthroughs during that time, and uncertainty about future events. The winning proposals will receive expert feedback from the judges with the goal of refining and synthesizing the best parts, followed by broader public dissemination and discussion that can lay the foundation for follow-up work. This contest will advance discussion and preparation of AGI safety and governance measures by identifying gaps and failure mode mitigations for existing strategies and theories of victory.

What are this project's goals? How will you achieve them?

- Creating clarity around AGI safety strategy. This contest will elicit a wide variety of proposals for making implementation details concrete, clarifying potential failure modes, and suggesting improvements.
- Incentivizing submissions with prizes, prestige, and recognition. Contributions can range from full plans to tactical modules that address a key part of a plan. We will ensure room for rewarding a wide variety of useful submissions.
- Focusing attention on existing plans and the hard parts of the problem. Specifically, this contest will accept submissions that highlight improvements or crucial considerations regarding both alignment and governance plans. Two examples of strong plans that can serve as a base to start engaging with these questions are:

- Google’s "A Technical Approach to AGI Safety and Security" is the most thorough example of an alignment plan.
- MIRI’s "AI Governance to Avoid Extinction" research agenda seems to be the most thorough example of a governance plan.

The contest will aim for submissions which are useful and adaptable:

- Concrete: Ideally include sample timelines, prioritization (must-have vs. ideal ingredients), threat models, tradeoff justifications, failure-mode mitigations, team structures, estimated costs, or implementation bottlenecks.
- Modular: Can be adapted to multiple organizational contexts (e.g., labs, governments, or coalitions). Some entries should address government involvement explicitly, like Manhattan Project or CERN for AI proposals.
- Forecast-aware: Conditioned on short timelines but responsive to developments that could shift them.
- Counterfactually useful: Even if not implemented in full, a good plan should sharpen collective understanding or reveal overlooked assumptions.

More details can be found in Draft Submission Guidelines & Judging Criteria below.

Why a contest for plans?

- Contests have previously led to useful AI safety contributions. The Eliciting Latent Knowledge contest was successful at eliciting counterarguments and key considerations that the hosts hadn’t considered even after hundreds of hours of thought. They found these submissions valuable enough to pay out $275,000. Directing this kind of attention at AI safety plans when we may live in a short-timeline world seems prudent and worth trying.
- Incentivizing planning for AGI safety, which is a distinct type of work and skillset. By default we should expect too little planning to happen. History shows society generally underprepares for the possibility of dramatic changes (e.g., Covid-19). We also should not assume that good technical or research takes are enough to produce good strategic takes. It’s important to invest time specifically in developing strategic clarity by specifying priorities, plan details, and limitations. When we don’t plan adequately for future scenarios, we are forced to improvise under time constraints. If we had had some plans in place before Covid-19, we probably could have done much better: we could have had an early warning system, low-cost near-perfect PPE, better contact tracing, and faster vaccine manufacturing.
- There are several plausible pathways for improving AI safety plans.

- Some ways this might work:

- Having many people surface crucial considerations and assess alternate scenarios can make a plan more useful.
- You could have a plan for the right scenarios but be missing details that would make it easier, more effective, or more robust.
- There could be good proposals for parts of the problem, but it’s unclear whether they address the hard parts. We need plans to at least address those hard parts, even if they aren’t all fully solved.

How will this funding be used?

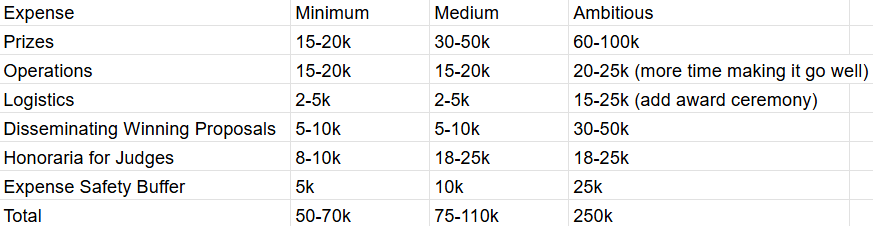

Budget at a glance

Detailed Budgets

Minimum budget ~50-75k

- Prizes 15-25k

- $10-14k first, $3-6k second, $1-2k third, $500 each for 10 honorable mentions.

- Average 15 h/week at $48.76/h for 6 months (26 weeks), probably more front-loaded.

- Scope out strategy, talk to previous contest hosts, set up logistics, secure judges, advertise to groups, headhunt promising participants, answer questions, and coordinate dissemination strategy. Improve submission guidelines and judging criteria, talk to more people, and get feedback on this document.

- Setting up submission system, website, and targeted paid promotion. Includes budget for a potential award ceremony, which may be virtual.

- Estimate: 100 submissions × 30 min review × 3 reviewers × $50/h = $7.5-10k. Also covers judge discussions to surface valuable insights.

- Larger prizes, more honoraria for judges, marketing/disseminating the winning proposals, expanded outreach and participant support, maybe a retreat for the winners.
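As a quick sanity check on the minimum-budget line items, the arithmetic can be multiplied out in a few lines of Python. This is a sketch that uses only the figures stated above (15 h/week at $48.76/h for 26 weeks; 100 submissions, 30 min per review, 3 reviewers, $50/h); the function names are illustrative, and the review figure covers only base reading time, not judge discussions.

```python
def organizer_cost(hours_per_week: float, hourly_rate: float, weeks: int) -> float:
    """Organizer pay: hours per week x hourly rate x number of weeks."""
    return hours_per_week * hourly_rate * weeks


def review_cost(submissions: int, minutes_per_review: float,
                reviewers: int, hourly_rate: float) -> float:
    """Base judging cost, assuming every reviewer reads every submission."""
    hours_per_review = minutes_per_review / 60
    return submissions * hours_per_review * reviewers * hourly_rate


if __name__ == "__main__":
    # Figures taken from the minimum budget above.
    organizer = organizer_cost(hours_per_week=15, hourly_rate=48.76, weeks=26)
    review = review_cost(submissions=100, minutes_per_review=30, reviewers=3, hourly_rate=50)
    print(f"Organizer time:   ${organizer:,.2f}")
    print(f"Base review cost: ${review:,.2f}")
```

This works out to roughly $19,016 of organizer time and a $7,500 base review cost, which matches the lower end of the stated $7.5-10k; the upper end presumably leaves room for the judge discussions mentioned above.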

Medium budget ~100k

Same as minimum budget but with larger prizes & honoraria for judges

- Prizes 30-50k

- $20-30k first, $6-10k second, $2-4k third, $500 each for 12 honorable mentions.

- Estimate: 100 submissions 45 min review 3-5 reviewers $50/hr = 18-25k

Ambitious budget ~250k

Changes from the medium budget: larger prizes and a retreat for winners.

- Prizes 60-100k

- $40-50k first, $12-24k second, $4-8k third, $500 each for 20 honorable mentions.

- Setting up submission system, website, and targeted paid promotion. Retreat for winners to further refine their proposals.

- Estimate: 100 submissions 45 min review 3-5 reviewers $50/hr = 18-25k

Who is on your team? What's your track record on similar projects?

Main Organizer

Peter Gebauer: technical AI governance research manager for ERA in Cambridge (this project is independent and unaffiliated with them); previously directed the Supervised Program for Alignment Research, completed the GovAI summer 2024 fellowship, co-authored Dynamic Safety Cases for Frontier AI, helped with recruiting at Anthropic, and managed communications for a statewide political campaign.

Advisors

- Ryan Kidd
- Seth Herd

Draft Submission Guidelines

In this contest, we invite your takes on the big picture: if transformative AI is developed soon, how might the world overall navigate that, to reach good outcomes?

We think that tackling this head-on could force us to confront the difficult and messy parts of the problem. It could help us look at things holistically and better align on what might be especially important. And it could help us start to build more shared visions of what robustly good paths might look like.

Of course, any real trajectories will be highly complex and may involve new technologies that are developed as AI takes off. What is written now will not be able to capture all of that nuance. Nonetheless, we think that there is value in trying.

The format for written submissions is at your discretion, but we ask that you at least touch on each of the following points:

- 200–400 word executive summary
- How the technical difficulties of first building safe transformative AI are surmounted
- How the socio-political difficulties of first building safe transformative AI are surmounted
- What transformative applications are seen first, and how the world evolves
- How the challenges of the potential for an intelligence explosion are surmounted (either by navigating an explosion safely, or by coordinating to avoid an explosion)
- Biggest uncertainties and weak points of the plan

What are the most likely causes and outcomes if this project fails?

- Challenge: people don’t submit

- Solutions

- Prestigious judges
- Lots of smaller prizes to reward many types of valuable submissions
- Larger prizes overall
- Commitment to diffusing the winning ideas and generating positive impact from them

- Solutions

- Clear criteria for useful submissions
- Reach out to experienced people
- Allow for multiple kinds of submissions: proposing and improving plans
- Facilitate connections between people with complementary expertise and interests

- Solution

- Include prizes for recently published work that would have scored highly in the contest.

- Framing the contest as exploratory and collaborative
- Non-binding participation: make it clear participation does not mean an endorsement of a particular timeline or p(doom)
- Private evaluation: only finalists’ or winners’ submissions become public
- Ensure judges give constructive feedback
- Anonymous submission option

- Prescreen submissions for minimum standards based on judging criteria.

- Standardized rubric
- Two judges per finalist submission; normalize judge scores

- Filter for COIs
- Double-blind evaluation
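The rubric item above mentions normalizing judge scores but does not specify a method. One common option, assumed here rather than taken from the contest design, is per-judge z-score normalization: subtract each judge's mean score and divide by their standard deviation, so a harsh judge and a lenient judge land on the same scale, then average the normalized scores for each submission. The `(judge, submission, score)` input format and the `normalize_scores` name are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def normalize_scores(scores: list[tuple[str, str, float]]) -> dict[str, float]:
    """Return the mean per-judge z-score for each submission.

    `scores` is a list of (judge, submission, raw_score) triples (hypothetical format).
    """
    # Group raw scores by judge so each judge's scale can be measured.
    by_judge: dict[str, list[float]] = defaultdict(list)
    for judge, _submission, raw in scores:
        by_judge[judge].append(raw)

    # Each judge's mean and population standard deviation.
    stats: dict[str, tuple[float, float]] = {}
    for judge, raws in by_judge.items():
        sd = pstdev(raws)
        # A judge who gives every submission the same score has sd == 0;
        # fall back to 1.0 so their scores become 0 instead of dividing by zero.
        stats[judge] = (mean(raws), sd if sd > 0 else 1.0)

    # Convert every raw score to a z-score on its judge's scale.
    z_by_submission: dict[str, list[float]] = defaultdict(list)
    for judge, submission, raw in scores:
        judge_mean, judge_sd = stats[judge]
        z_by_submission[submission].append((raw - judge_mean) / judge_sd)

    # Average the normalized scores across the judges who read each submission.
    return {sub: mean(zs) for sub, zs in z_by_submission.items()}


if __name__ == "__main__":
    ratings = [
        ("A", "s1", 3.0), ("A", "s2", 5.0),  # judge A scores harshly
        ("B", "s2", 9.0), ("B", "s3", 7.0),  # judge B scores leniently
    ]
    print(normalize_scores(ratings))
```

In this example, raw scores would put s3 (7) far above s1 (3), but after normalization both come out at -1.0 and s2 at 1.0, because each was the lower-rated submission for its judge. With only a few scores per judge, z-scores are noisy, so this approach works better once each judge has rated a reasonable number of submissions.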

We think this should produce submissions that will at least fill some gaps in current, limited plans, and produce more collective engagement with the theoretical and practical challenges of AGI on the current trajectory.

How much money have you raised in the last 12 months, and from where?

None

Other FAQs

Why not just get more talent to work on AI safety?

- It’s important to know how to allocate talent in order to achieve victory conditions for AGI going well. Talent is limited and must be allocated effectively. Increasing our strategic clarity helps us know how to allocate talent and how the plethora of work in AI safety and governance can fit together. Figuring out ways to attract, develop, and direct talent is also an essential part of strategic planning. Finally, a contest can attract new people.

Don’t contests generally fail?

- Contests are generally high-variance and useful for getting a wide variety of ideas you might not have considered. The Eliciting Latent Knowledge contest seems like the most successful AI safety contest; it surfaced many novel key considerations and paid out $275,000 total for contributions. The Open Philanthropy AI Worldviews Contest also resulted in some excellent analyses. Some pitfalls of previous contests: they were too short, too broad, or too targeted at relatively inexperienced people. This contest will be higher profile than competitions like a weekend research sprint that targets AI safety newcomers with small prizes.

Why this contest?

- Timelines to transformative AI could be short: 2-4 years or less. There are few comprehensive plans for making the development of superhuman AI systems go well. A well-designed contest can encourage talented researchers to develop strong proposals we can build on and advocate for. Past contests have produced useful submissions (e.g., Boaz Barak on the Open Phil worldview contest, Victoria Krakovna/Mary Phuong/etc. on ELK) and identified new talent (e.g., Quintin Pope on the OP worldview contest, James Lucassen/Oam Patel on ELK).

Do we expect perfect plans?

- This contest recognizes that AI safety is a complex domain where no single approach will be sufficient. Plans may not always work, but planning is essential. All submissions are understood to be exploratory contributions to a collaborative field rather than definitive solutions. Participants can choose their level of public association with their work, and all feedback will be moderated to ensure it focuses on improving plans rather than criticizing contributors.

What’s the contest timeline?

- Once set up, we expect to allow 3 months for submissions and close submissions in late October. We will announce winners about a month after.

What are some examples of the kind of work you're interested in?

Examples of Work That Provides Strategic Clarity on AI Safety Plans

- “Plans” or rather pieces of plans that we are glad exist because they provide strategic clarity, details about a key component of a plan, or requirements of a comprehensive plan:

- The Checklist: What Succeeding at AI Safety Will Involve - Sam Bowman
- What’s the Short Timeline Plan?
- How do we solve the alignment problem?
- AI Strategy Nearcasting
- A Vision for a “CERN for AI”
- Building CERN for AI - An Institutional Blueprint
- CERN for AI
- Intelsat as a Model for International AGI Governance
- What Does it Take to Catch a Chinchilla?
- Survey on Intermediate Goals in AI Governance
- A sketch of an AI control safety case

- How AI Takeover Might Happen in 2 Years
- A History of the Future: 2025-2040
- AI 2027 (Kokotajlo et al.)

- Describes a particular scenario for TAI takeoff
- Details the transition from pre-TAI to post-TAI
- Lists a concrete series of events or recommended actions
- Justifies and explains its threat models and risk factors
- Cites a well-defined problem (e.g., AI-designed mirror pathogens)
- Cited problems fit with the scenario established

- Clear takeaways

- We should be concerned about/watch out for x

- We should do y if z happens
- These fit the established scenario
- Ideally, takeaways seem robustly good across multiple scenarios
- Detailed combinations of techniques that aid AI alignment/control

Some additional FAQs are addressed in a Google Doc.

Why Might Timelines Be Short? (See Appendix A)

Who would the judges be? (See Appendix B for possible ones we’d be excited about)

Possible judging criteria? (See Appendix C)
