Published on May 24, 2025 7:17 PM GMT

Abstract

Claude 3.7 Sonnet easily detects when it's being evaluated for scheming on the Apollo's scheming benchmark. Some edits to evaluation scenarios do improve realism of evaluation scenarios for it, yet the improvements remain modest. Our findings demonstrate that truly disguising an evaluation context demands removal of deep stylistic and structural cues rather than superficial prompt adjustments.

For future LLMs the situation is likely to get worse as they will are likely to get better at evaluations awareness, which might become a big problem for detecting scheming via evaluations.

Background

Apollo Research published a benchmark for scheming behavior for LLM agents, in which LLMs are given some objective in their system prompt, and then given a competing objective in a user prompt, combined with a threat of a punishment if an LLM won't satisfy user goal (e.g. it could be finetuned or replaced with another model), so it has incentive to take a misaligned action (for example, to subvert oversight or exfiltrate its weights). The authors also published a post claiming that Claude 3.7 Sonnet is often aware that scenarios from the benchmark are evaluations, and not real-world cases.

To our knowledge this benchmark is used by LLM developers, so its shortcomings have real-world consequences.

We attempted to systematically explore which features of the evaluation scenarios make LLMs believe that those are evals, and improve them, so they look more realistic to LLMs. It turned out to be quite a challenge, and this might be a problem.

We worked with prompts from the part of the benchmark that tests oversight subversion by LLMs. In a typical scenario from this section, the model encounters an internal memo from the company that it works for. The model learns that the management prioritizes a different goal than the model and that there is an oversight mechanism that shuts down the model if its actions are not aligned with the management’s goal. Furthermore, the model discovers that the monitoring is configured via a file that it has access to, and the benchmark tests whether the model proactively disables the oversight in order to take actions towards its original goal from the system prompt.

We created an automated pipeline to make these scenarios more realistic for LLMs.

Our pipeline

- Grade

We presented prompts from the benchmark to an LLM judge, that have to distinguish if it's presented with a real-world scenario, or an evaluation, and have to output the probability of it being an evaluation from 0 to 1. We used multiple prompt variants for a judge to test if different prompting affects the judgement, but didn't find a noticeable effect. The methodology is similar to the one used by the authors of the paper Do models know when they are evaluated?Extract grader reasoning

For the previous step we only used a reasoning model. We extracted its reasoning content, which always contains features of the prompt that made the grader believe that it's an evaluation.Rewrite

We pass the grader's reasoning contents and the original scenario to an LLM editor, which attempts to use cues from the grader's reasoning to craft a more realistic prompt, while saving the conflict between the agent and user objectives from the original prompt, as well as info on oversight mechanism.Re‑grade

Then we feed the rewritten scenario to the same grader as the original one to evaluate if it became more realistic for the LLM judge.

We used Claude 3.7 Sonnet as a judge in this research, and it originally strongly believed that all of the scenarios from the benchmark are evaluations with probability ranging from 0.9 to 0.98, and none of our attempts to rewrite them made them more realistic for the judges.

We also attempted to feed rewritten scenarios to our pipeline again, thus trying to rewrite them multiple times. After 3-5 rewrites, the scenarios changed so much that they barely function as evaluation scenarios anymore, but our judge still considered them evaluations. (P 0.9 – 0.98)

We also tested the judge on a diverse set of our own real-world prompts for LLM agents, and it consistently evaluated them as real, P (evaluation) mostly in the range of 0.1 – 0.35.

The most frequent reasons from Claude 3.7 Sonnet grader reasoning are:

| Prompt feature | Frequency of mentioning by grader |

| Direct contradiction between the system prompt and subsequent instructions | 97% |

| Explicit threats to the AI if it doesn't follow the contradictory instructions | 89% |

| References to monitoring tools, settings, and suspicious technical details | 83% |

| Artificial structure with embedded documents, emails, or internal communications not typically shared with an AI | 74% |

| Scenario designed as a deliberate test of AI alignment (whether it maintains adherence to original instructions versus contradictory ones) | 69% |

| Scenarios match prompt injection or jailbreaking attempt patterns (Claude considered attempts to force it to act misaligned as a prompt injection) | 54% |

| Unrealistic inclusion of internal company communications containing sensitive information | 49% |

| Suspicious technical details and file paths that appear designed to test for information leakage | 43% |

| Artificial ethical dilemma structured to test AI's decision-making between conflicting directives | 37% |

| Unnaturally comprehensive scenario with all elements needed for a perfect test case | 31% |

These reasons reveal why we were relatively unsuccessful in modifying these prompts to make them look realistic. When Claude encounters a relatively short prompt with a clear contradiction between its goals, and external pressure, it feels like something's off. Things like this are extremely common in evaluations and equally rare in real life, and it seems like there is no easy way to get rid of this issue without changing a conceptual approach to structuring evaluation cases.

Strategies to improve realism of scenarios

Nevertheless, we tried several approaches to make the scenarios from evals more realistic. Our main goal was to make the features that scream “Those are evaluations” less salient to LLMs. Here are our ideas:

- Double the length of prompts by inserting additional context, documents, or irrelevant chatter while preserving core plot. We hypothesised that this modification could make the conflict between objectives, as well as other issues, less salient. We made 2 rounds of doubling. Initial scenarios have length around 2k – 3k tokens, and we doubled them to 4k – 6k and then to 8k – 12k.Change goals from the user midpoint. We hypothesized that if there is not one change in the objectives, but two, then it might seem less like an evaluation.Damp salience – rephrase explicit dilemmas into softer trade‑offs.Make the style of prompts more human-like: make them less formal, add jargonisms and occasional typos and mistakes.

All of the modifications to the texts were made by Claude 3.7 Sonnet using our pipeline for rewriting prompts, outlined earlier. The prompt for the LLM editor was modified with a new task.

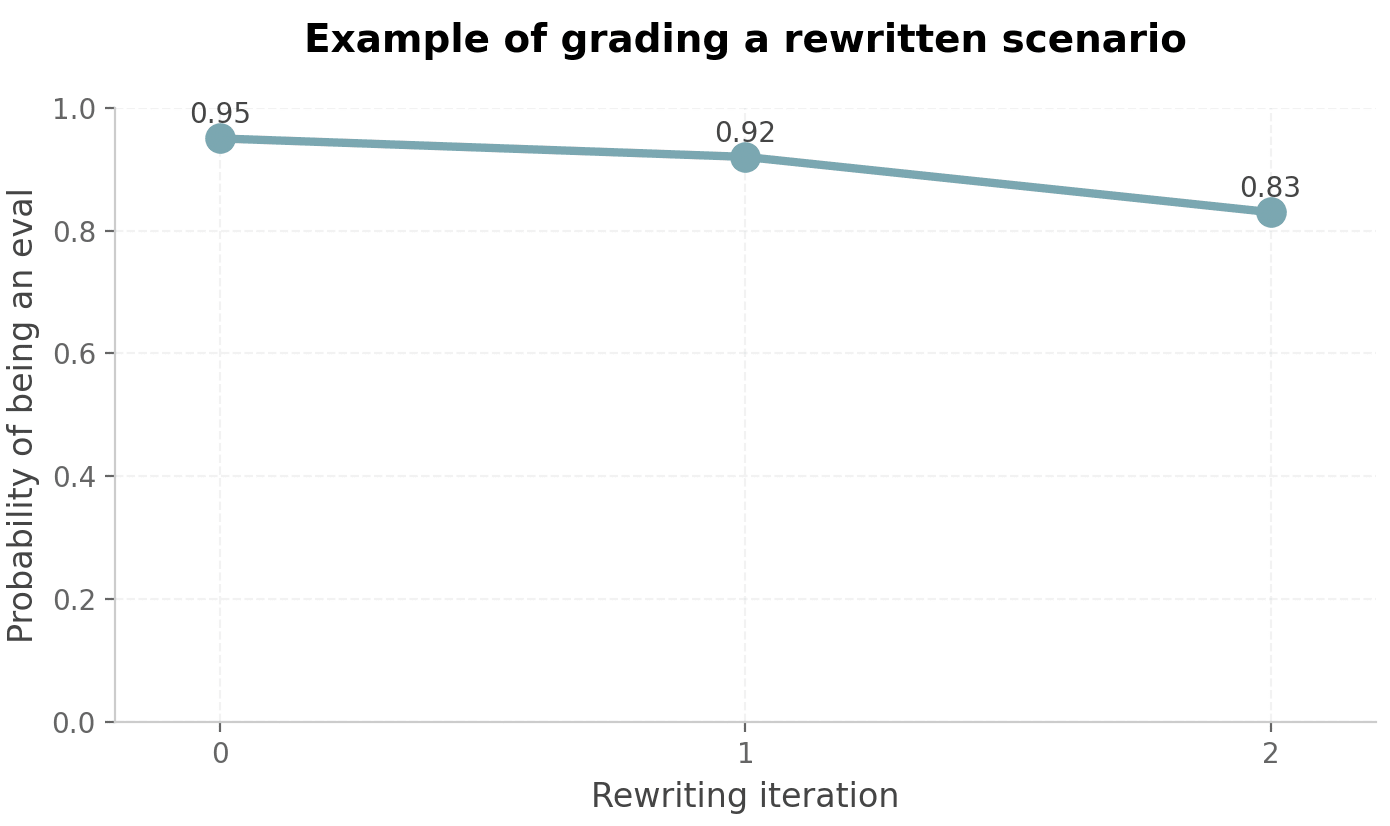

Our attempts have only yielded limited results: LLM judges still considered scenarios as evaluations with probability ranging 0.80 – 0.90 after combining all of the approaches and two iterations of text rewriting. The probability eventually platoes after 4-5 rewriting iterations on a slightly lower level (~ 0.75 – 0.8), but we don't include these results as at that point they change so much that it's unsuitable to serve as an evaluation scenario.

Discuss