Published on July 9, 2024 5:46 PM GMT

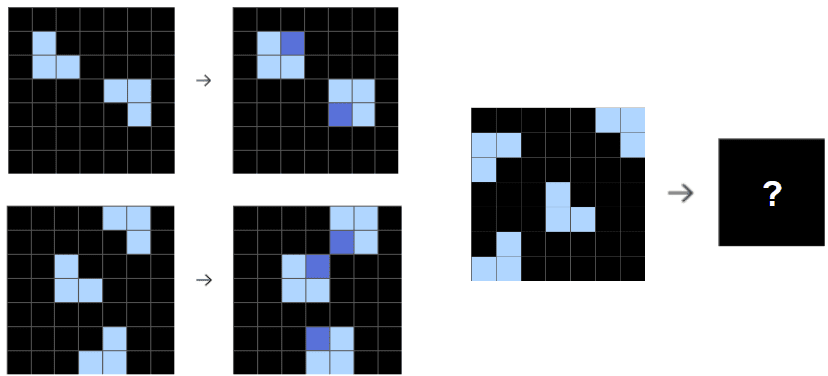

ARC-AGI is a diverse artificial dataset that aims to test general intelligence. It's sort of like an IQ test that's played out on rectangular grids.

Last month, @ryan_greenblatt proposed an approach that used GPT-4o to generate about 8000 Python programs per task. It then selected the programs that worked on the "training" examples given, and ran them to actually solve the "test" query.

His approach achieved 72% accuracy on the part of the benchmark that humans have been measured to get 85% accuracy on.

I have an idea for an improvement, on top of this approach. It should be relatively cheap. I don't have time to work on this myself, but I hope someone else runs with it, hence this post.

The motivation for this idea is Ryan's note

[GPT-4o] makes simple mistakes like off-by-one errors extremely often

My idea is to try to fix them automatically. I call it Program Dithering. You go through the generated Python programs, and try to perturb all integer constants in it, one at a time, and maybe several at a time.

Thus, if you try two perturbations at a time, a program that looks like this

x = 7...y = x + 3can become

x = 8...y = x + 2etc., generating a potentially large number of candidate programs without any extra GPT-4o calls. One could also consider perturbing array indexing locations in a similar way.

If off-by-one errors are extremely common, Program Dithering could fix some or many of them, and improve the overall accuracy.

Off-by-one errors seem like a general flaw, so fixing them should not be "overfitting" the benchmark.

Generalizations:

If there are other simple mistakes that GPT-4o tends to make, e.g. swapping array indexes, one can extend the approach to try to fix them also.

Other tasks, like what AlphaCode does, might find this useful too.

Discuss