Published on March 11, 2025 3:57 PM GMT

TL;DR Large language models have demonstrated an emergent ability to write code, but this ability requires an internal representation of program semantics that is little understood. Recent interpretability work has shown that it is possible to extract internal representations of natural language concepts, raising the possibility that similar techniques could be used to extract concepts of program semantics. In this work, we study how large language models represent the nullability of program values. We measure how well models of various sizes, at various training checkpoints, complete programs that use nullable values, and then extract an internal representation of nullability.

Introduction

The last five years have shown us that large language models like ChatGPT, Claude, and DeepSeek can effectively write programs in many domains. This is an impressive capability, given that writing programs requires a formal understanding of program semantics. But though we know that these models understand programs to an extent, there is still much we don’t know about that understanding. We don’t know where they have deep understanding and where they rely on heuristic reasoning, how they represent program knowledge, or what kinds of situations will challenge their capabilities.

Fortunately, recent work in model interpretability and representation engineering has produced promising results, which give hope that we can understand more and more of the internal thought processes of LLMs. Here at dmodel, we can think of no better place to apply these new techniques than formal methods, where there are many abstract properties that can be extracted with static analysis. The vast body of work in programming language theory over the past hundred years provides many tools for scaling up our understanding of the internal thought processes of language models as they write code.

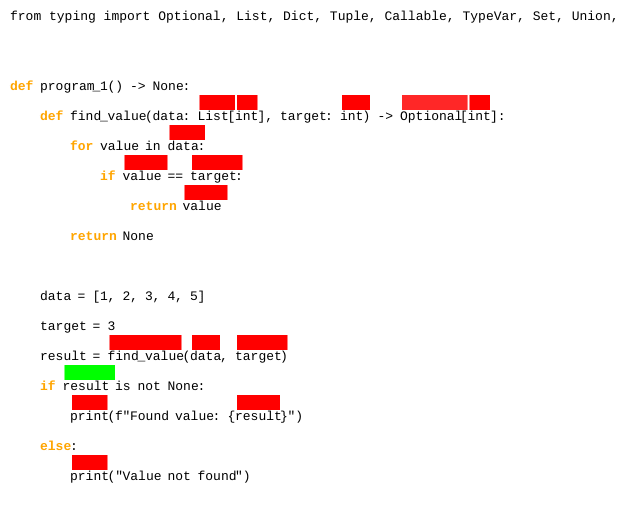

In that spirit, we wanted to start with a simple property that comes up in every programming language: nullability. Nullable values are represented differently across languages, as null pointers in C or C++, as explicit Option types in Rust, and as special null, nil, or None values in dynamic languages like JavaScript, Lisp, or Python. In every case, understanding where values can be nullable is necessary for writing even basic code, and misunderstanding where they are nullable is a common source of bugs.
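As a small illustration in Python, a nullable value is typically annotated `Optional[T]` and must be checked against `None` before use. The functions `find_index` and `describe` below are hypothetical examples written for this sketch, not programs from the benchmark.

```python
from typing import Optional


def find_index(items: list[str], target: str) -> Optional[int]:
    """Return the index of target in items, or None if it is absent."""
    for i, item in enumerate(items):
        if item == target:
            return i
    return None


def describe(items: list[str], target: str) -> str:
    idx = find_index(items, target)  # idx has type Optional[int]
    # Writing `idx + 1` before the check below would be flagged by a
    # typechecker such as mypy, because idx may be None.
    if idx is None:
        return f"{target} not found"
    # After the None check, the typechecker narrows idx to int.
    return f"{target} is item number {idx + 1}"


print(describe(["a", "b", "c"], "b"))  # b is item number 2
print(describe(["a", "b", "c"], "z"))  # z not found
```

The explicit `is None` check is what lets a typechecker treat `idx` as a plain `int` on the last line; a model completing this code has to know the same fact to avoid a bug.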

Do our models understand when a value is nullable? They must, to be able to write code that deals with nullable values, but we haven’t known what form this knowledge takes or what situations are likely to confuse the model. Until now.

Contributions

- We introduce a microbenchmark of 15 programs that test basic model understanding of the flow of nullability through a program. (Sec. 2.1)
- We find that models begin to understand nullability in a local scope, satisfying many requirements of the Python typechecker, before they start to understand how nullability flows across the program. (Sec. 2.5)
- We find that models develop an internal concept of nullability as they scale up and are trained for longer. (Sec. 3)
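The distinction between local and cross-program nullability can be made concrete with a few hypothetical Python snippets. These are our own illustrations in the spirit of the benchmark, not any of its 15 programs; `User`, `greeting`, `lookup`, and `email_domain` are made-up names.

```python
from typing import Optional


class User:
    def __init__(self, name: str, email: Optional[str] = None) -> None:
        self.name = name
        self.email = email  # nullable attribute, None by default


# Local scope: the None check sits right next to the use of the value.
def greeting(name: Optional[str]) -> str:
    if name is None:
        return "Hello, stranger"
    return "Hello, " + name


# Across the program: None can come from a missing dictionary key
# (dict.get) or from the constructor default, several definitions away.
def lookup(users: dict[str, User], key: str) -> Optional[User]:
    return users.get(key)


def email_domain(users: dict[str, User], key: str) -> Optional[str]:
    user = lookup(users, key)  # may be None if key is missing
    if user is None or user.email is None:  # two separate sources of None
        return None
    # Past this check, both user and user.email are known to be non-None.
    return user.email.split("@")[1]
```

Completing `greeting` correctly only requires reasoning about one function body, while completing `email_domain` requires tracking nullability through `lookup`'s return type and `User`'s constructor.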

We end with a demo: after we train a probe that determines whether the model thinks a variable read corresponds to a nullable variable, we can visualize that internal knowledge in a reading diagram.
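A common form for such a probe is a simple linear classifier on hidden activations. The sketch below assumes exactly that: a logistic-regression probe trained on activation vectors, one per variable read, labeled 1 if the variable is nullable. The activations are synthetic (random vectors shifted along a hypothetical "nullability direction"), not taken from a real model, and all names are illustrative; the post's actual probe design, layer choice, and data are not reproduced here.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_linear_probe(activations, labels, lr=0.1, epochs=200):
    """Fit p(nullable | h) = sigmoid(w . h + b) by stochastic gradient descent."""
    dim = len(activations[0])
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        for h, y in zip(activations, labels):
            p = sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b)
            grad = p - y  # derivative of the log loss with respect to the logit
            w = [wi - lr * grad * hi for wi, hi in zip(w, h)]
            b -= lr * grad
    return w, b


def probe_score(w, b, h) -> float:
    """Probability the probe assigns to 'this variable read is nullable'."""
    return sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b)


# Hypothetical synthetic data standing in for model activations.
random.seed(0)
DIM = 8
direction = [1.0] + [0.0] * (DIM - 1)  # assumed "nullability direction"


def fake_activation(nullable: bool):
    h = [random.gauss(0.0, 1.0) for _ in range(DIM)]
    shift = 3.0 if nullable else -3.0
    return [hi + shift * di for hi, di in zip(h, direction)]


labels = [i % 2 for i in range(200)]
acts = [fake_activation(y == 1) for y in labels]
w, b = train_linear_probe(acts, labels)

accuracy = sum(
    (probe_score(w, b, h) > 0.5) == (y == 1) for h, y in zip(acts, labels)
) / len(labels)
print(f"training accuracy: {accuracy:.2f}")
```

To draw a reading diagram under these assumptions, one would run `probe_score` on the activation at each variable read in a program and color that token by its score.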

This is a linkpost for https://dmodel.ai/nullability/
