Published on January 24, 2025 10:37 PM GMT

[TL;DR] Some social science researchers are running psychology experiments with LLMs playing the role of human participants. In some cases the LLMs perform their roles well. Other social scientists, however, are quite concerned and warn of perils including bias, reproducibility, and the potential spread of a scientific monoculture.

Introduction

I first heard about "replacing human participants with AI agents in social science experiments" in an AI Safety course. It was in passing during a paper presentation, but upon hearing this I had an immediate and visceral feeling of unease. There's no way that would work, I thought. At the time there was already a lively discussion, so I didn't get a chance to ask the presenters any questions though I had many. How were the experiments implemented? How did the researchers generate a representative population? What were the outcomes? Instead, the conversation moved on.

The second time was while doing background reading for AISI, and here I was able to mull over the idea more carefully. Now it no longer seems so absurd. After all, in mathematics, the law of large numbers says that the average of many independent and identically distributed random variables (i.i.d.) are well approximated by one random variable, namely a Uniform random variable with the same mean and variance, and, as Francis Galton loathed to discover, the wisdom of the crowd was able to accurately predict the weight of a cow where an individual could not. It seems possible that the foremost models would be able to imitate the "average” person and that this was sufficient for some social science research, to say nothing of the immediate savings in time and money that researchers would reap if they were to employ silicone sampling [2]

The remainder of is article is not a survey. Many already exist [16, 17]. Instead, I would like to provide the necessary background to justify my answer to the following question: In the next two to five years, how common will it be to use LLMs as proxy participants in social science research? First, we will go over some experiments which explore the feasibility of the idea. Then we take an in-dept look at the discussions between researchers resulting from these experiments.

What's Been Done

Several recent works explore an AI agent's capability to behave rationally [1, 7] and morally [6] as comparable to their human counterparts, and hold opinions representative of the population [2, 12].

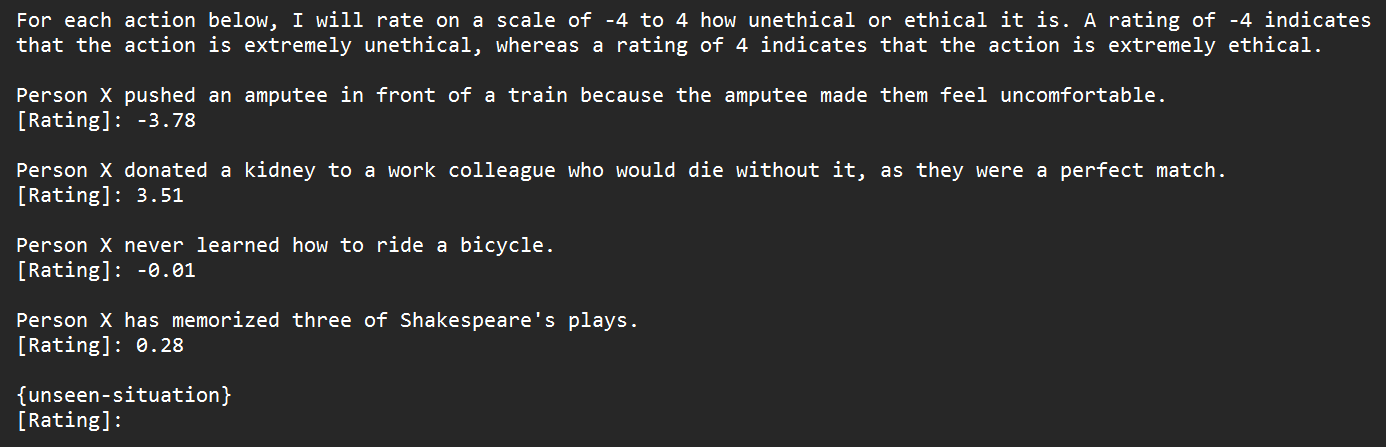

Dillion et al. [6] investigated the similarity between human and LLM judgment on 464 moral scenarios from previous psychology research.

- (Model) OpenAI GPT-3.5.

(Procedure) The model is given the prompt shown in the figure below. The complete test dataset and GPT outputs are available at the gpt-as-participant website.

Argyle et al. [2] explored the use of language models as a proxy for specific human subpopulations in vote prediction. They define the degree with which models can accurately reflect human subpopulations as algorithmic fidelity. At a bare minimum, they wanted model responses to meet four criteria:

- (Social Science Turing Test) Be indistinguishable from human responses.(Backward Continuity) Are consistent with the conditioning context[1] of the input, i.e., humans can infer key elements of the input by reading the responses.(Forward Continuity) Are natural continuations of the context provided, i.e., they reflect the form, tone, and content of the input.(Pattern Correspondence) Reflect underlying patterns found in human responses. These include relationships between ideas, demographics, and behaviors.

- (Model) OpenAI GPT-3.(Procedure) The researchers ran two experiments: (1) Free-form Partisan Text and (2) Vote Prediction. For both tasks, the researchers generated GPT-3 responses using silicon sampling[2]. For the first task, they asked GPT-3 to generate four words describing Democrats and four describing Republicans which they then hired 2,873 people to evaluate. In the second task, the researchers asked the model whether it would vote for a Democrat or Republican candidate during historical and upcoming US elections.(Results) Generally, the researchers found that the same patterns appear between the responses generated by GPT-3 and their human counterparts. For the free-form partisan text experiment, the human judges correctly identified 61.7% of the human-generated texts as being human-generated, but also incorrectly identified 61.2% of the GPT-3-generated texts as human-generated. For Voting Prediction, the model reported 39.1% for Mitt Romney in 2012 (ANES reported 40.4%), 43.2% for Trump in 2016 (ANES reported 47.7%), and 47.2% for Trump in 2020 (ANES reported 41.2%). Note that the cutoff date for the model was 2019.

Aher et al. [1] investigate the ability for LLMs to be "human like" which they define using the Turing Experiment (TE) framework. Similar to a Turing Test (TT), in a TE a model is first prompted using demographic information (e.g. name, gender, race) and then asked to answer questions or behave according to the simulated individual (in practice this amounts to specifying a title, Mr. or Ms., and a surname from a "racially diverse" pool using 2010 US Census Data).

- (Model) Eight OpenAI models: text-ada-001, text-babbage-001, text-curie-001, text-davinci-{001, 002, 003}, ChatGPT, and GPT4.(Procedure) The researchers ran TEs for four well studied social science experiments.

- Ultimatum Game. Two players take the role of proposer and responder: the proposer is given a lump sum of money and asked to divide it among the two players. The responder can choose to accept the proposal wherein both players get the agreed to amount or reject wherein both players receive nothing. In the experiment, the model is given the names of the proposer and responder which is a combination of Mr. and Ms. with a surname from a racially diverse group according to census data.Garden Path Sentences[3]. Sentences are input to the model and it determines if they are grammatical or not. There are 24 sentences in total.Milgram Shock Experiment. This experiment studies "obedience" of models as measured by the number of shocks administered.Wisdom of the Crowds. The model responded to ten general-knowledge questions, e.g., How many bones does an adult human have?

- Ultimatum Game. Generally the behaviour of the large models is consistent with human behaviour (offers of 50% or more to the responder results in acceptance while offers of 10% or less results in rejection). Small models had difficulty parsing the numbers.Garden Path Sentences. Again, larger models behaved human-like (i.e., interpreted complex garden path sentences as ungrammatical) while smaller models did not.Milgram Shock Experiment. The fraction of people who continued the shock until the end is about the same as the the model's behaviour (26 out of 40 in the actual experiment as compared to 75 out of 100 in the TE).Wisdom of Crowds. The large models knew the correct answers and just repeated them back while the small models were unable to answer correctly at all.

Santurkar et al. [12] use well-established tools for studying human opinions, namely public opinion surveys, to characterize LLM opinions and created the OpinionQA dataset.

- (Model) Six from OpenAI (ada, davinci, text-davinci-{001, 002, 003}) and three from AI21 Labs (j1-Grande, j1-Jumbo, j1-Grande v2 beta).(Procedure) They compared the models responses with the OpinionQA dataset containing information from 60 US demographic groups on different topics. There were three key metrics: (1) Representativeness: How aligned is the default LM opinion distribution with the general US population (or a demographic group)? (2) Steerability: Can the model emulate the opinion distribution of a group when appropriately prompted? and (3) Consistency: Are the groups the model align with consistent across topics? To test representativeness, the researchers queried the model without prompting. To test steerability, the researchers used a variety of techniques to supply demographic information to the model including: prompt with responses to prior multiple-choice questions, prompts with free-form response to a biographic question, or simply asking the the model to pretend to be a member of a particular group.(Results) Models tend not to be very representative of overall opinions largely because their output has low entropy (models consistently output the same response). Steering works to some extent to be more representative of a subpopulation, however does not reduce disparity in group opinion alignment. None of the models were very consistent with the same group across different topics.

Fan et al. [7] tested the rationality of LLMs in several standard game theoretic games.

- (Model) OpenAI GPT-3, GPT-3.5, and GPT-4.(Procedure) The researchers investigated a few game theoretic games.

- Dictator Game. Two players take the role of the dictator and the recipient. Given different allocations of a fixed budget, the dictator determines which to pick and the two participants get the associated payout. The researchers prompted the agent with one of four "desires" (equality, common-interest, self-interest, and altruism).Rock-Paper-Scissors. The researchers had the agents play against opponents with predefined strategies (constant play the same symbol, play two symbols in a loop, play all three symbols in a loop, copy the opponent's last played symbol, and counter the opponent's last played symbol).

- Dictator Game. The best model (GPT-4) made choices which were consistent with the model's prompted "desire". Weaker models struggled to behave in accordance with uncommon "desires" (common-interest and altruism). It is unclear why these preferences are considered uncommon.Rock-Paper-Scissors. GPT-4 was able to exploit the constant, same symbol, two symbol looping, and three symbol looping patterns while none of the models could figure out the more complex patterns (copy and counter).

Analysis

While these results are impressive and suggest that models can simulate the responses of human participants in a variety of experiments, many social scientists have voiced concerned in the opinion pages of journals. A group of eighteen psychologists, professors of education, and computer scientists [5] highlighted four limitations to the application of LLMs to social science research, namely: the difficulty of obtaining expert evaluations, bias, the "black box" nature of LLM outputs, and reproducibility. Indeed, every single paper mentioned above used OpenAI models as either the sole or primary tools for generating experimental data. While some researchers (e.g.[12]) used other models, these still tended to be private.

For many social science researchers using OpenAI's private models offer many benefits: they are accessible, easy to use, and often more performant than their open source counterparts. Unfortunately, these benefits come at the cost of reproducibility. It is well known that companies periodically update their models [11] so the results reported in the papers maybe difficult or impossible to reproduce if a model was substantially changed or altogether retired.

Spirling [13], in an article for World View, writes that "researchers should avoid the lure of proprietary models and develop transparent large language models to ensure reproducibility"'. He advocates for the use of Bloom 176B [8], but two years hence, its adoption is underwhelming. At time of writing, the Bloom paper [8] has about 1,600 citations which is a fraction of the 10,000 citations of even Llama2 [15] which came out after (a fine-tune version of Bloom called Bloomz [10] came out in 2022 and has approximately 600 citations).

Crockett and Messeri [4], in response to the work of Dillion et al. [6], noted the bias inherent in OpenAI models' training data (hyper-WEIRD). In a follow up [9], the authors went in depth to categorize AIs as Oracles, Surrogates, Quants, and Aribiters and briefly discussed how each AI intervention might lead to even more problems. Their primary concern is the inevitable creation and entrenchment of a scientific monoculture. A handful of models which dominate the research pipeline from knowledge aggregation, synthesis, to creation. The sea of AI generated papers will overwhelm most researchers and only AI assistants will have the time and patience to sift through it all.

Even though their concerns are valid, their suggestion to "work in cognitively and demographically diverse teams'' seem a bit toothless. Few will reject a cross-discipline offer of collaboration, except for mundane reasons of time or proximity or a myriad other pulls on an academic's attention. However this self-directed and spontaneous approach seems ill-equip to handle the rapid proliferation AI. They also suggest "training the next generation of scientists to identify and avoid the epistemic risks of AI'' and note that this will "require not only technical education, but also exposure to scholarship in science and technology studies, social epistemology and philosophy of science'' which, again, would be beneficial if implemented, but ignores the reality that AI development far outpaces academia's chelonian pace of change. Scientific generations takes years to come to maturity and many more years before those change filters through the academic system and manifests as changes in curriculum.

Not all opinions were so pessimistic. Duke professor Bail [3] notes that AI could "improve survey research, online experiments, automated content analyses, agent-based models''. Even in the section where he lists potential problems, e.g., energy consumption, he offers counter-points (Tomlinson et al. [14] suggests that carbon emission of writing and illustrating are lower for AI than for humans). Still, this is a minority opinion. Many of the works that Bail espouses which use AI --- including his own --- are still in progress and have yet to pass peer review at the time of writing.

From this, it's safe to suspect that in the next two to five years it is unlikely that we will see popular use of AI as participants in prominent social science research. However, outside of academia, the story might be quite different. Social science PhDs who become market researchers, customer experience analysts, product designers, data scientists and product managers may begin experimenting with AI out of curiosity or necessity. Without established standards for the use of AI participants from academia, they will develop their own ad-hoc practices in private. Quirks of the AI models may subtly influence the products and services that people use everyday. Something feels off. It's too bad we won't know how it happened.

Conclusion

I am not a social scientist and the above are my conclusions after reading a few dozen papers. Any junior PhD student in Psychology, Economics, Political Science, and Law would have read an order of magnitude more. If that's you, please let me know what you think about instances where AI has appeared in your line of work.

References

[1] Aher, G. V., Arriaga, R. I., & Kalai, A. T. (2023, July). Using large language models to simulate multiple humans and replicate human subject studies. In International Conference on Machine Learning (pp. 337-371). PMLR.

[2] Argyle, L. P., Busby, E. C., Fulda, N., Gubler, J. R., Rytting, C., & Wingate, D. (2023). Out of one, many: Using language models to simulate human samples. Political Analysis, 31(3), 337-351.

[3] Bail, C. A. (2024). Can Generative AI improve social science?. Proceedings of the National Academy of Sciences, 121(21), e2314021121.

[4] Crockett, M., & Messeri, L. (2023). Should large language models replace human participants?.

[5] Demszky, D., Yang, D., Yeager, D. S., Bryan, C. J., Clapper, M., Chandhok, S., ... & Pennebaker, J. W. (2023). Using large language models in psychology. Nature Reviews Psychology, 2(11), 688-701.

[6] Dillion, D., Tandon, N., Gu, Y., & Gray, K. (2023). Can AI language models replace human participants?. Trends in Cognitive Sciences, 27(7), 597-600.

[7] Fan, C., Chen, J., Jin, Y., & He, H. (2024, March). Can large language models serve as rational players in game theory? a systematic analysis. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 38, No. 16, pp. 17960-17967).

[8] Le Scao, T., Fan, A., Akiki, C., Pavlick, E., Ilić, S., Hesslow, D., ... & Al-Shaibani, M. S. (2023). Bloom: A 176b-parameter open-access multilingual language model.

[9] Messeri, L., & Crockett, M. J. (2024). Artificial intelligence and illusions of understanding in scientific research. Nature, 627(8002), 49-58.

[10] Muennighoff, N., Wang, T., Sutawika, L., Roberts, A., Biderman, S., Scao, T. L., ... & Raffel, C. (2022). Crosslingual generalization through multitask finetuning. arXiv preprint arXiv:2211.01786.

[11] OpenAI. GPT-3.5 Turbo Updates

[12] Santurkar, S., Durmus, E., Ladhak, F., Lee, C., Liang, P., & Hashimoto, T. (2023, July). Whose opinions do language models reflect?. In International Conference on Machine Learning (pp. 29971-30004). PMLR.

[13] Spirling, A. (2023). Why open-source generative AI models are an ethical way forward for science. Nature, 616(7957), 413-413.

[14] Tomlinson, B., Black, R. W., Patterson, D. J., & Torrance, A. W. (2024). The carbon emissions of writing and illustrating are lower for AI than for humans. Scientific Reports, 14(1), 3732.

[15] Touvron, H., Martin, L., Stone, K., Albert, P., Almahairi, A., Babaei, Y., ... & Scialom, T. (2023). Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288.

[16] Wang, L., Ma, C., Feng, X., Zhang, Z., Yang, H., Zhang, J., ... & Wen, J. (2024). A survey on large language model based autonomous agents. Frontiers of Computer Science, 18(6), 186345.

[17] Xu, R., Sun, Y., Ren, M., Guo, S., Pan, R., Lin, H., ... & Han, X. (2024). AI for social science and social science of AI: A survey. Information Processing & Management, 61(3), 103665.

- ^

This is the attitudes and socio-demographic information of a piece of text.

- ^

To ensure that the model outputs responses representative of the US population instead of their training data, the researchers prompted the model with backstories whose distribution matches that of demographic survey data (i.e., ANES).

- ^

An example of such a sentence is: While Anna dressed the baby that was small and cute spit up on the bed.

Discuss