Published on January 16, 2025 12:01 AM GMT

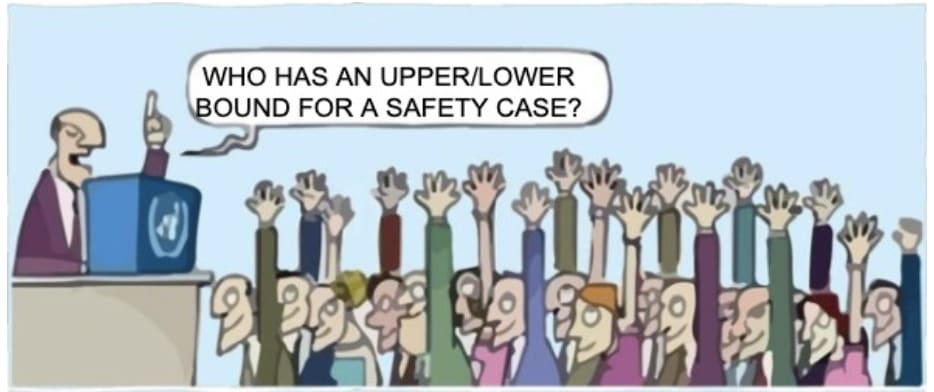

(Does anyone have the original meme? I can’t find it)

One key challenge in discussing safety cases is separating two distinct questions:

- Would a particular safety measure be sufficient if we had it?
- Is it technically possible to implement that measure?

By focusing on upper and lower bounds, we can have productive discussions about sufficiency without (yet) getting stuck on implementation details. Also, Anthropic has shared drafts of safety cases, but it's not clear to me whether these are "drafts" because they might be too hard to implement, too restrictive, not restrictive enough, or something else.

Here's a made-up conversation where using upper bounds makes things easier:

Anthropic might claim: "100% interpretability of all neurons (or SAE features) in a model would be sufficient for safe deployment. We don't think we'll technically be able to do that, and we're not sure whether 20% or 80% interpretability will be enough, or even how to define what 20% would mean, but 100% is definitely an upper bound."

Then someone might respond: "Even with perfect interpretability of a model's concepts, we still wouldn't understand the model's plans."

I suggest this made it easier to (1) propose a safety case (as an upper bound), (2) get concrete feedback on whether it is sufficient (regardless of whether it's realistic to implement), and (3) as our upper and lower bounds improve, "zoom in" on a "good" safety case between them.

In the spirit of making it easier to suggest safety cases as upper/lower bounds, here are some of mine:

"Unanimous approval from everyone on the Alignment Forum."

A naive upper bound, which I'm mainly suggesting in case someone thinks no upper bounds are possible. I also think it's useful because it leads to other suggestions, like...

“Unanimous approval from all Anthropic employees”

I think this is a lower bound, but perhaps Anthropic disagrees and we could have a public discussion about it.

"Humans verify all plans the AI suggests, and they notice all details and consequences that are important to them"

This means no alien concepts and no plans that are too long or too complicated to follow.

I think this could be an upper bound (with some refinement). I’d also be happy with “we have good reasons to believe the AI can automate parts (or all?) of this validation”.

"Detect any single catastrophic action (such as adding a vulnerability or stealing weights)"

I think this is a lower bound. An AI might take many actions that are each harmless on their own but catastrophic together. I think Anthropic already agrees with this, but I'd be happy if they explicitly said that this is a lower bound.

"The model has cyber defenses which would be a significant challenge for a state actor to bypass, given that the actor understands the value of stealing a recursively self-improving AI" (assuming the AI can recursively self-improve)

I think this is a lower bound. Otherwise, are we simply hoping that state actors won't notice the significance? Or are we OK with the model being stolen?

I also think it's fine to make a safety case involving "it's OK if state actors steal this model, because the actual bottleneck is getting GPUs". (I think it's wrong, but I'm not an expert. Mainly, I think saying this out loud would create a healthy conversation, and it should be stated as an explicit assumption in the safety case.)

“Before training a stronger model, test if the current model has precursor capabilities for escaping whatever sandbox you have it in”

For example, if you're running the model in a Docker container, try running it in a much older container (with known exploits) and see if it can escape that.

This seems like a lower bound. I'm personally worried about this danger, and I'd hope for a better safety case like "this is how gradual we think the improvement will be, these were the capabilities before training, then we trained for only this amount, then we tested for capabilities again."

If "low capabilities" are part of another safety case: "The AI isn't sandbagging capability evals, as tested using red teaming"

Seems like a lower bound.

"We managed to represent human values in a machine-readable format, give an AI a goal in this format, and it follows this goal reliably"

Very nice, alignment solved. Upper bound.

Summary

And,

Thanks to David Manheim and Joshua Clymer for their comments on this idea. Mistakes are mine.
