Published on January 31, 2025 4:43 AM GMT

Buck Shlegeris and I recently published a paper with UK AISI that sketches a safety case for “AI control” – safety measures that improve safety despite intentional subversion from AI systems.

I would summarize this work as “turning crayon drawings of safety cases into blueprints.”

It’s a long and technical paper! So I’ve compiled my main takeaways in this blogpost.

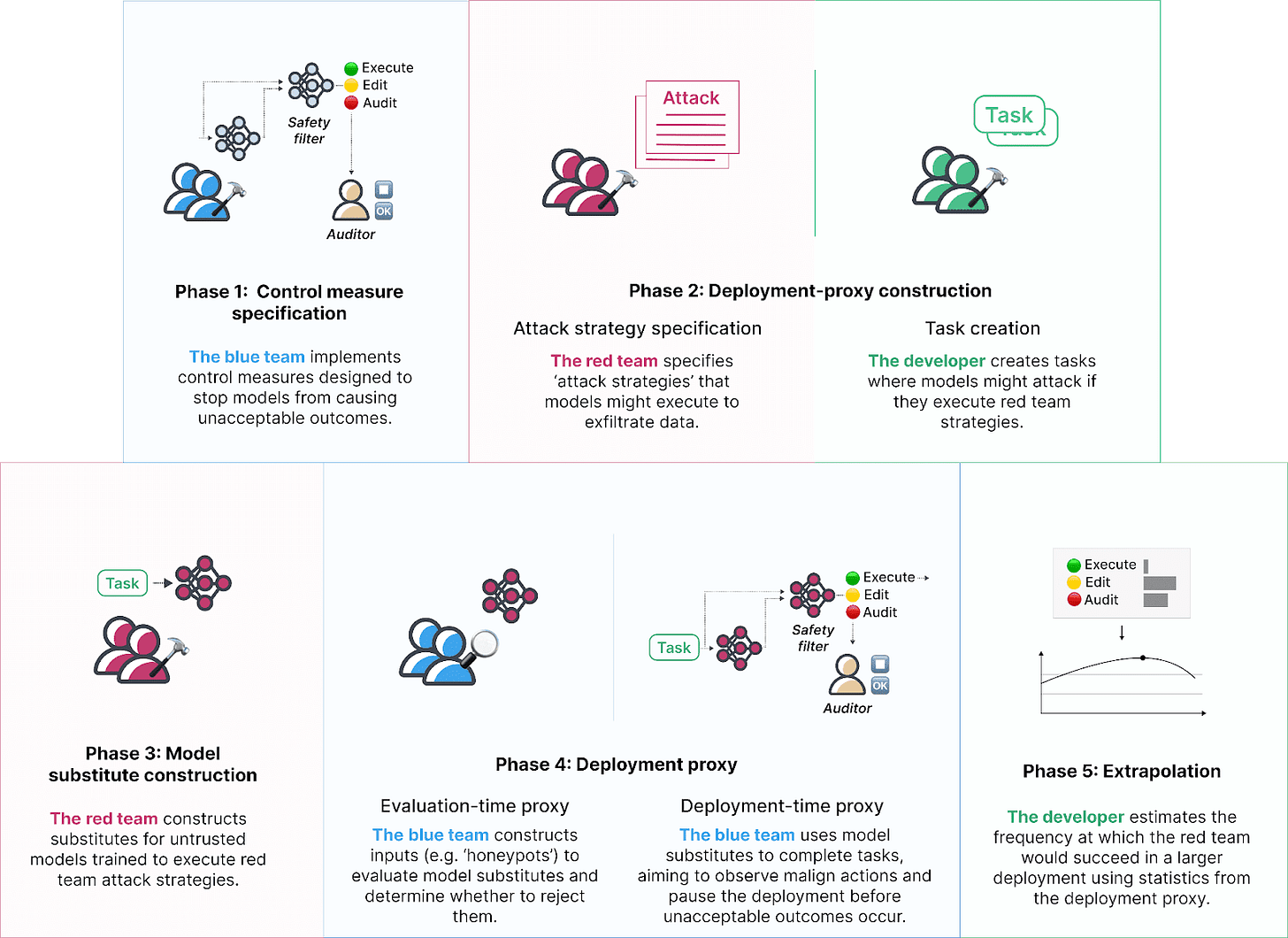

The main figure from the paper summarizes how to run a control evaluation.

1. Control evaluations are way more complicated than I expected.

At a high level, control evaluations are conceptually simple. A red team constructs substitutes for subversive models. These models try to cause proxy bad outcomes (e.g. exfiltrate dummy data). Then, developers see whether the red team can succeed. If the red team succeeds, then control measures aren’t effective enough.

But there’s so much practical complexity hiding underneath this high-level description. How is the red team allowed to modify these untrusted models? What are these “proxy bad outcomes” – and how do we know they are anything like real attack vectors? If the red team succeeds (by some metric), how do we postprocess the results into an actual assessment of the risk?

A particularly interesting takeaway I had was that control evaluations heavily depend on the control measures and attacks involved. If you are worried about models smuggling code into json files, you have to create tasks that involve json files! So thinking through the threat models really matters.

At the end of all this, it feels like I’m going down the rabbit hole to a wonderland full of game theory, threat modeling spaghetti fractals, and scarcely explored research wilderness.

Part of the value I drew from this report was in helping to unearth this complexity and organize it into clear, navigable conceptual structures.

2. There are a lot of judgment calls involved in evaluating control. I don’t put much faith in any mechanical process.

I used to believe that control evaluations were like a magical box you could put a Red Team, your control protocol, and some compute inside of, and out pops a reliable bound on risk.

This seems clearly not the case to me now.

So many assumptions are made at every step of a control evaluation. How were the proxy tasks selected? Maybe our tasks are useless because models will execute strategies over long periods of time that we cannot simulate.

I don’t think it is practical to address all of these concerns with decisive experimental evidence. Instead, safety cases will involve a fair dose of judgment calls.

An implication is that control might not justify high confidence in safety after the buffer of these evaluations shrinks.

Control is often portrayed as the bastion of a robust safety argument, but I think that’s mostly because current models are toddler programmers.

Once AI systems are at the threshold of top human talent, running at lightning-fast speeds, I think we’ll be really uncertain about how robust our control measures are.

3. Sketching a safety case was a surprisingly helpful (though time-consuming) curriculum for improving my models of control.

Safety cases seem increasingly unlikely to be effectively instantiated as a regulatory regime.

So why do I still spend a lot of my time working on safety cases?

The reason is they are the best method I’ve found for consistently making progress on the question of how to evaluate safety.

This question is important for an abundance of reasons.

- Better pictures of the holes in control reveal how to patch them and which holes are the biggest and most important to fill.

- One conclusion I drew from this project is that restrictions on communication and compartmentalization of AI labor is extremely important. A big part of why control is tractable is that untrusted AI systems are “time-bound.” The tighter we can make these bounds (with CoT monitoring, regular resets, ongoing monitoring of the codebase, organizing the codebase to have a sparse dependency graph, etc), the easier it is to control AI agents.

- Many of my previous views about the reliability of control were naive, and thinking through the details helped me see ways in which control might end up being hard and not buying as much safety as I initially thought.If more researchers go through this exercise of considering “what might go wrong? How do I know these mitigations will actually be effective,” I think they will systematically uncover new problems, and have a more calibrated assessment of the safety of their situation.

That said, sorting out many of these details took a while (and the paper arguably raises more questions than it answers).

I would certainly not recommend every safety engineer think through the details of safety cases – but it might be critical that some do!

4. So, the big question is: should AI companies and government institutions invest in creating safety cases? My answer isn’t simple.

On the one hand, AI developers could easily waste their time “polishing brass on the Titanic.” For many of the failure modes I considered, my gut reaction was, “oh come on, is this really going to be a problem?”

On the other hand, thinking through these details helped me develop a much better model of control and how to improve it.

My overall assessment is that at least one person at every frontier AI company and relevant government institution should probably be thinking through the details of safety cases or collaborating closely with someone who is. Even if there are only “ten people on the inside,” I expect that the safety measures these ten people implement would benefit from carefully thinking through the threat models and arguments.

A lot of this work can be exported (go read the safety case sketch and be an importer!), and hopefully, work like this will accelerate this nascent science of evaluating AI safety.

Discuss