The strongest linear model to date, beating all previous RWKV, State Space, and Liquid AI models across key English benchmarks and evals.

You can find this model on both Hugging Face and Featherless.ai:

Hugging Face: https://huggingface.co/recursal/QRWKV6-32B-Instruct-Preview-v0.1

Featherless.ai: https://featherless.ai/models/recursal/QRWKV6-32B-Instruct-Preview-v0.1

Note: as an instruction preview, this model is not considered final.

The model was trained by converting the weights of the Qwen 32B Instruct model into a customized QRWKV6 architecture. We successfully replaced the existing transformer attention heads with RWKV-V6 attention heads through a groundbreaking new conversion training process.
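To make the idea of a linear attention head more concrete, here is a minimal sketch of a generic decayed linear-attention recurrence in plain Python. It is not the RWKV-V6 formulation (which, among other details, uses data-dependent per-channel decay), and it says nothing about the conversion process itself. The function name `linear_attention_step`, the scalar `decay`, and the toy inputs are all assumptions made for illustration. What it shows is that each token updates a fixed-size state instead of attending over every previous token.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear_attention_step(
    state: Matrix, q: Vector, k: Vector, v: Vector, decay: float
) -> Vector:
    """Update the (d_k x d_v) state in place and return this token's output.

    Illustrative only: state = decay * state + outer(k, v); out = q @ state.
    """
    for i, k_i in enumerate(k):
        row = state[i]
        for j, v_j in enumerate(v):
            row[j] = decay * row[j] + k_i * v_j
    # out[j] = sum over i of q[i] * state[i][j]
    return [
        sum(q[i] * state[i][j] for i in range(len(q)))
        for j in range(len(v))
    ]


# Toy example (hypothetical values): three tokens, 2-dimensional keys and values.
d_k, d_v = 2, 2
state = [[0.0] * d_v for _ in range(d_k)]
tokens = [
    # (query, key, value)
    ([1.0, 0.0], [1.0, 0.0], [2.0, 3.0]),
    ([0.0, 1.0], [0.0, 1.0], [4.0, 5.0]),
    ([1.0, 1.0], [0.0, 0.0], [0.0, 0.0]),
]
outputs = [linear_attention_step(state, q, k, v, decay=0.5) for q, k, v in tokens]
print(outputs)
```

The state stays a 2x2 matrix however many tokens are processed, which is where the constant per-token cost of a linear model comes from.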

This unique training process was developed by the team at Recursal AI, in collaboration with the RWKV and EleutherAI open source communities.

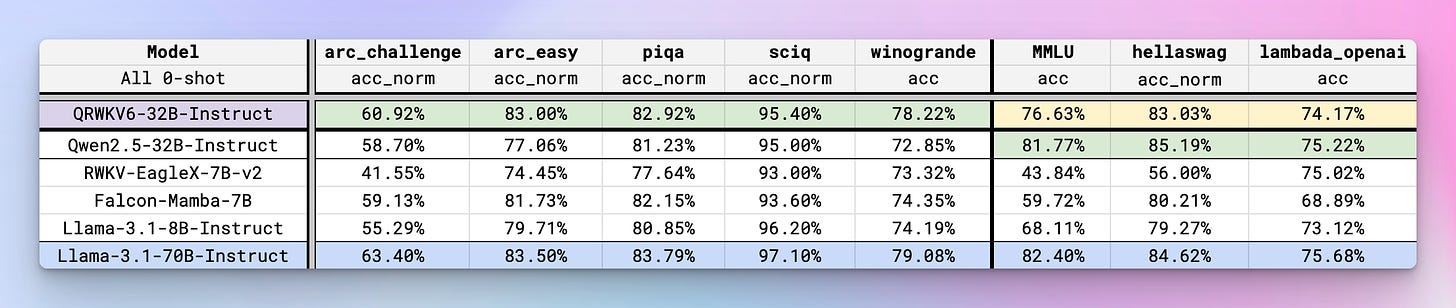

Benchmarks

We compared QRWKV6 against existing open-weights models, both transformer-based and linear-architecture-based.

Overall, what is most exciting is that the converted QRWKV6 model performs similarly to its original 32B parent model.

GPU Sponsor: TensorWave

The conversion process for QRWKV6 was done on 16 AMD MI300X GPUs kindly donated by TensorWave. Each MI300X comes with a whopping 192GB of VRAM, while offering compute performance comparable to an H100.

This allowed us to reduce the minimum number of nodes required for our training process, and simplify our overall training and conversion process.
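As a rough back-of-the-envelope check on the node count, the sketch below works through the memory arithmetic. The 16 GPUs and 192GB per GPU come from the text above. The 80GB H100 capacity and the 8-GPUs-per-node layout are assumptions used only for comparison, not a description of our actual cluster.

```python
import math

MI300X_VRAM_GB = 192  # from the text
NUM_MI300X = 16       # from the text
H100_VRAM_GB = 80     # assumption: the 80GB H100 variant
GPUS_PER_NODE = 8     # assumption: a typical 8-GPU server node

# Total memory pool available across the MI300X GPUs.
total_vram_gb = MI300X_VRAM_GB * NUM_MI300X
mi300x_nodes = math.ceil(NUM_MI300X / GPUS_PER_NODE)

# How many H100s (and nodes) would be needed to match that pool on memory alone.
h100_gpus_needed = math.ceil(total_vram_gb / H100_VRAM_GB)
h100_nodes = math.ceil(h100_gpus_needed / GPUS_PER_NODE)

print(f"MI300X: {total_vram_gb} GB across {mi300x_nodes} nodes")
print(f"H100 equivalent: {h100_gpus_needed} GPUs across {h100_nodes} nodes")
```

Under these assumptions, matching the same memory pool would take more than twice as many nodes, which is the simplification referred to above.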

The conversion process took about 8 hours.

The Exciting

Linear models promise substantially lower compute cost at scale, delivering over a 1000x improvement in inference cost efficiency, especially at long context lengths. This is a key multiplier for both O1-style inference-time thinking and making AI more accessible to the world.

This technique also scales to larger transformer-based models, and we have since started work on them.
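To illustrate why the gap widens with context length, here is a toy cost model. It only counts how many past tokens are touched during generation, assuming a transformer reads its whole KV cache for each new token while a linear model performs one fixed-size state update. The function names are hypothetical, and the model ignores constant factors, feed-forward cost, and hardware effects, so it is not a reproduction of any measured figure.

```python
def attention_token_reads(n: int) -> int:
    """Total past-token reads when generating n tokens with a KV cache.

    Token t attends over t cached positions, so the total is 1 + 2 + ... + n.
    """
    return n * (n + 1) // 2


def linear_state_updates(n: int) -> int:
    """Total state updates when generating n tokens with a fixed-size state."""
    return n


for n in (1_000, 16_000, 128_000):
    ratio = attention_token_reads(n) / linear_state_updates(n)
    print(f"{n:>7} tokens: attention does {ratio:,.1f}x more token-level work")
```

In this simplified count the ratio is (n + 1) / 2, so it grows linearly with the number of generated tokens. Real-world ratios depend on the constants this sketch leaves out.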

The Good

The benefit of this process is that we are able to convert any previously trained QKV attention-based model, such as Qwen and LLaMA based models, into a variant of RWKV without needing to retrain the model from scratch.

This allows us to quickly test and prove out the significantly more efficient RWKV linear attention mechanism at a larger scale and on a much smaller budget, validating the architecture design and scalability of RWKV.

Once again, this proves that QKV attention is not all you need.

( Someone ping @jefrankle and @srush_nlp )

The Bad

The disadvantage of this process is that the model's inherent knowledge and dataset training are based on its “parent” model. This means that, unlike previous RWKV models trained on over 100 languages, the QRWKV model is limited to the approximately 30 languages supported by the Qwen line of models.

Additionally, instead of RWKV-based channel mix and feedforward network layers, we retain the “parent” model's feedforward network architecture design. This means the model is incompatible with existing RWKV inference code.

Separately, due to our compute budget, we were only able to do the conversion process up to 16k context length. While the model does exhibit stability beyond this context length, it may need additional training to accurately support longer context lengths.

Future Followups

A Q-RWKV-6 72B Instruct model is currently being trained.

Additionally, with the finalization of the RWKV-7 architecture happening soon, we intend to repeat the process and provide a full lineup of:

Q-RWKV-7 32B

LLaMA-RWKV-7 70B

We intend to provide more details on the conversion process, along with our paper after the subsequent model release.

Acknowledgements

Special thanks to TensorWave and AMD for sponsoring the MI300X training

EleutherAI for support and guidance, especially on benchmarks and publishing research papers about the RWKV architecture

Linux Foundation AI & Data group for supporting and hosting the RWKV project

Recursal AI for its commitment to providing resources and development for the RWKV ecosystem - you can use their featherless.ai platform to easily run RWKV and compare it to other language models

And of course a huge thank you to the many developers around the world working hard to improve the RWKV ecosystem and provide environmentally friendly open source AI for all.