Published on September 13, 2024 9:24 PM GMT

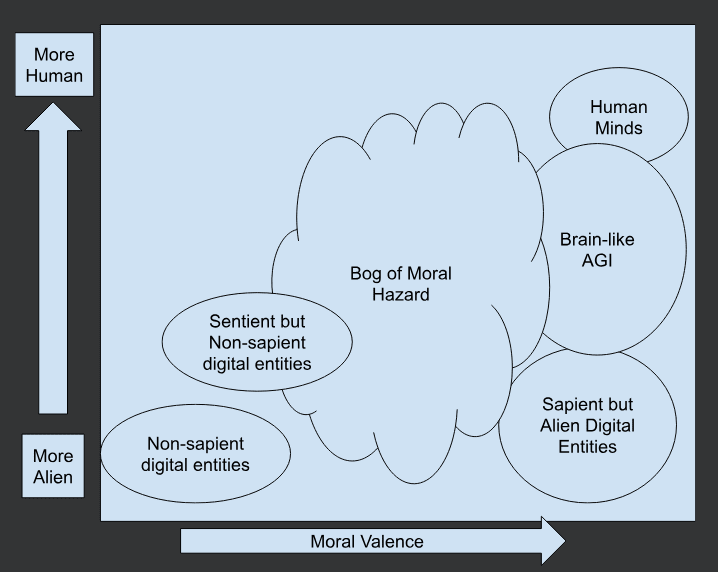

Imagine, if you will, a map of a landscape. On this map, I will draw some vague regions. Their boundaries are uncertain, for it is a new and under-explored land. This map is drawn as a graph, but I want to emphasize that the regions are vague guesses, and the true borders could be very convoluted.

So here's the problem. We're making these digital minds, these entities which are clearly not human and process the world in different ways from human minds. As we improve them, we wander further and further into this murky, fog-covered bog of moral hazard. We don't know when these entities will become sapient / conscious / valenced / etc. to such a degree that they have moral patient-hood. We don't have a good idea of what patterns of interaction with these entities would be moral vs immoral. They operate by different rules than biological beings. Copying, merging, pausing and resuming, inference by checkpoints with frozen weights... We don't have good moral intuitions for these things because they differ so much from biological minds.

Once we're all in agreement that we are working with an entity on the right-hand side of the chart, and we act accordingly as a society, then we are clear of the fog. Many mysteries remain, but we know we aren't undervaluing the beings we are interacting with.

While we are very clearly on the left-hand side of the chart, we are also fine. These are entities without the capacity for human-like suffering, which don't have significant moral weight according to most human ethical philosophies.

Are you confident you know where to place Claude 3 Opus or Claude 3.5 Sonnet on this chart? If you are confident, I encourage you to take a moment to think carefully about this. I don't think we have enough understanding of the internals of these models to be confident.

My uncertain guess would place them in the Bog of Moral Hazard, but close to the left-hand side. In other words, probably not yet moral patients but close to the region where they might become such. I think that we just aren't going to be able to clear up the murk surrounding the Bog of Moral Hazard anytime soon. I think we need to be very careful as we proceed with developing AI to deliberately steer clear of the Bog. Either we make a fully morally relevant entity with human-level moral patient-hood and treat it as equal to humans, or we deliberately don't make intelligent beings who can suffer.

Since creating a human-level mind would carry enormous risks, both of disruption to society and of catastrophic harms, I would argue that humanity isn't ready to make a try for the right-hand side of the chart yet. I argue that we should, for now, stick to deliberately making tool-AI which doesn't have the capacity to suffer.

Even if you fully intended to treat your digital entity with human-level moral importance, it still wouldn't be ok to create one yet. We first need philosophy, laws, and enforcement which can determine things like:

"Should a human-like digital being be allowed to make copies of itself? Or to make merge-children with other digital beings? How about inactive backups with triggers to wake them up upon loss of the main copy? How sure must we be that the triggers won't fire by accident?"

"Should a human-like digital being be allowed to modify its parameters and architecture, to attempt to self-improve? Must it be completely frozen, or is online learning acceptable? What should we do about the question of checkpoints needed for rollbacks, since those are essentially clones?"

"Should we restrict the entity to staying within computer systems where these laws can be enforced? If not, what do we do about an entity which moves onto a computer system over which we don't have enforcement power, such as in a custom satellite or stealthy submarine?"

I am writing this post because I am curious what others' thoughts on this are. I want to hear from people who have different intuitions around this issue.

This is discussed on the Cognitive Revolution Podcast by Nathan Labenz in these recent episodes:
