Published on August 17, 2024 11:42 PM GMT

Summary: The collection of a massive first-person video dataset for use in large model pretraining is technologically & economically feasible with modest resources. Such a dataset might enable high-fidelity imitation of human behavior, with positive implications for the capabilities and safety of trained models. Such models may also enable a type of “digital immortality” and provide a compelling alternative to cryonics.

Table of Contents

First Person Behavior Cloning

Implications for Capabilities

Implications for Alignment

Digital Immortality

Implementation

Hardware Options

Economic Feasibility

Privacy & Legal Concerns

First Person Behavior Cloning

Current frontier AI systems rely on pretraining via next-token-prediction on web-scale (primarily text) datasets. At the limit, this training objective is sufficient to enable human-equivalent artificial intelligence (to perfectly predict the next words of the person that wrote the text implies an intelligence at least as great as the writer). However, models produced by this process do not necessarily reflect the same type of intelligence as the people creating the data:

> Consider that some of the text on the Internet isn't humans casually chatting. It's the results section of a science paper. It's news stories that say what happened on a particular day, where maybe no human would be smart enough to predict the next thing that happened in the news story in advance of it happening. As Ilya Sutskever compactly put it, to learn to predict text, is to learn to predict the causal processes of which the text is a shadow. Lots of what's shadowed on the Internet has a complicated causal process generating it.[...]
>
> GPT-4 is still not as smart as a human in many ways, but it's naked mathematical truth that the task GPTs are being trained on is harder than being an actual human. And since the task that GPTs are being trained on is different from and harder than the task of being a human, it would be surprising - even leaving aside all the ways that gradient descent differs from natural selection - if GPTs ended up thinking the way humans do, in order to solve that problem.
>
> – Yudkowsky, 2023[1]

In part, the increased difficulty of the model’s task (and the corresponding differentness of the model’s intelligence) is a consequence of the gap between the output of the generating process and the process itself — i.e. web text is far removed from the cognition of human writers. Human-generated text is the manifestation of complex processes, the inner workings of which are not well captured by the content of the text alone:

- authors may edit their work many times before publication
- any writing is only the end product of an author's inner dialogue
- an author’s inner dialogue reflects a lifetime of prior experience

This gap not only makes performant models harder to train (because the search space of explanatory models for the observed output is so underconstrained), it also has negative implications for model safety. If we agree that robust generalization when predicting human behavior is important (which it likely is for tasks like modeling moral preferences), then the way that web text obscures the underlying dynamics leading to its creation makes those dynamics harder to model. In other words, the more information that the output of some process contains about the process itself, the easier it is for a predictor trained on that output to generalize when simulating that process.

These disadvantages point to the obvious question - would an alternative pretraining dataset be able to reduce this gap?

To address these challenges, I propose the construction of a massive, long-horizon, first-person-view, video dataset. Concretely, I advocate for thousands of volunteers to capture continuous video footage of all waking hours using body-mounted cameras, for several years. I expect such a dataset to enable the creation of high fidelity human behavior simulators, capable of predicting the next actions of a given person, conditioned on all the past recorded experiences of that person. The construction of a dataset on the scale of 10s of millions of hours is possible with only 1000 volunteers, in less than 5 years, using commodity hardware, and at a cost of < $2 million USD. I draw inspiration from past writing on whole-brain-emulation[2], lifelogging[3], and behavior cloning[4].

It is possible to imagine even richer sources of pretraining data than FPV video. Consider an fMRI recording a volunteer's brain activity continuously for 5 years: this could be a far richer data source than only observing outputs, since the human thought we want to simulate is, in principle, visible directly within this activity. Obviously there are some practical blockers that prevent this from being workable (high cost, low resolution of scans, the desire to live life outside of an MRI machine, etc). While FPV data is clearly an incomplete representation of the generating process – since it does not capture the inner thoughts of the volunteer – the expectation is that it reflects far more information about human thought than web text data.

From an alignment & safety perspective, FPV cloning inherits many of the desirable attributes of Whole Brain Emulation (WBE) alignment proposals:

- Value misalignment is only as bad as the value misalignment of the emulated people. This is less than perfect, but significantly better than the runaway hyper-optimization of an unaligned RL-based intelligence (à la paperclip maximizers).
- Intelligence of emulation-based systems, at least initially, would be bounded to human-level intelligence. This decreases risks of deception, self exfiltration, invention of dangerous technologies, and other risks associated with beyond-human intelligence.
- We can choose to preferentially emulate people with the skills and desire to accelerate AI safety research, who can then be tasked with aligning more powerful but less trustworthy approaches to artificial superintelligence.

It’s worth acknowledging that the benefits for FPV cloning are somewhat diminished versus WBE due to the greater amount of approximation involved. WBE usually implies a biologically inspired approach, one which has access to physical information about the subject’s brain. As a result, WBE is often assumed to have strong guarantees of faithful reconstruction of the original person’s behavior. Using observed behavior alone to train a simulator model leaves a larger gap between process and output. This increases the risk that the simulated mental machinery will deviate significantly from the original human cognition, and with it the risk that simulants will take undesirable actions when put in out-of-distribution scenarios. However, I think the risk here is not too great — the expectation is that the output-process gap in FPV video is sufficiently small that it constrains well performing models to be implementing algorithms that are very similar (and generalize in similar ways) to those found in the human brain.

Separate from the issues of model safety & capabilities, the creation of an FPV behavior cloning model could enable a type of “digital immortality” - providing a form of continued existence for contributors beyond the limits of their biological life. Assuming that we take substrate independence as true, it’s not a far stretch to consider a whole-brain-emulation of you to really be you. However, in the process of mapping brain matter for WBE, there would undoubtedly be inaccuracies and mismeasurement. To consider the WBE to be a valid reconstruction, we must accept that the validity of the emulation relies on making the reconstruction sufficiently close to the original. As such, being alive as yourself is less of a binary state, and more of a continuum where more of you is alive depending on how faithful the reconstruction is. Recreation of human thought based on FPV behavior cloning is perhaps lower on this continuum than a high accuracy biological simulation, but I’d consider it sufficiently high to be a form of continued existence (albeit an imperfect one). From a practical standpoint, FPV cloning has a clearer path to implementation than alternatives like cryonics, where there’s been little progress over the last 30 years in either the resurrection or digital emulation of preserved brain matter. It is also less sensitive to the circumstances of your death.

Implementation

Data Requirements

It’s difficult to estimate the volume or diversity of pretraining data required for a given set of capabilities. Similar to modeling with text, we’d expect capabilities to emerge at unpredictable moments as we increase the scale of data and compute. On the upper bound, we’re limited by both financial resources and by the difficulty in convincing a large number of volunteers to collect data. Assuming it’s practically feasible to recruit 1000 volunteers, that each volunteer records 14 hours per day on average, and that all volunteers provide data consistently for 5 years, this would yield roughly 25.5 million hours, or about 2917 years, of footage. On the lower bound, we can look at current video-modeling efforts for reference. Although the details aren’t available for OpenAI’s Sora, there exist open source alternatives like Stable Video Diffusion. According to the Stability AI whitepaper, their video diffusion model was trained on ~200 years of data, or roughly 1 OOM less than our upper bound[5].
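The upper-bound arithmetic can be checked with a few lines of Python. This is only a sketch of the estimate above: the function names are illustrative, and it assumes a 365-day year and perfectly consistent recording, both of which are simplifications.

```python
HOURS_PER_YEAR = 24 * 365  # calendar hours in a year, ignoring leap days


def footage_hours(volunteers: int, hours_per_day: float, years: float) -> float:
    """Total recorded hours across all volunteers (assumes no gaps or attrition)."""
    return volunteers * hours_per_day * 365 * years


def footage_years(volunteers: int, hours_per_day: float, years: float) -> float:
    """The same total, expressed as continuous years of video."""
    return footage_hours(volunteers, hours_per_day, years) / HOURS_PER_YEAR


total_hours = footage_hours(1000, 14, 5)
total_years = footage_years(1000, 14, 5)

# Ratio to the ~200 years of video cited for Stable Video Diffusion.
ratio_to_svd = total_years / 200

print(f"{total_hours:,.0f} hours = {total_years:,.0f} years, {ratio_to_svd:.1f}x the SVD figure")
```

Under these assumptions the upper bound comes out between 14 and 15 times the Stable Video Diffusion figure, which is where the "roughly 1 OOM" comparison comes from. Any volunteer attrition or recording gaps would shrink it.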

Hardware Options

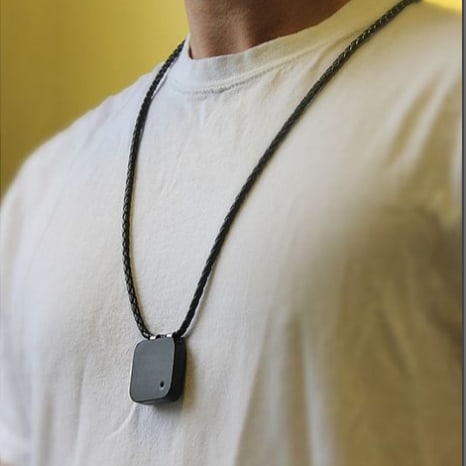

There are a number of different form factor options, including clip, necklace, pin, & glasses:

The scale of collected data (full day usage, continuous video) will require different hardware considerations versus past lifelogging equipment. In practice, this almost certainly means an external source of power separate from the camera itself. Something like the belt worn microphone battery packs used by performing artists could be an inspiration:

The simplest implementation would be a small clip that attaches to the front of the shirt. The clip could be a small enclosure for a Raspberry Pi Camera Module, a low-cost, high-resolution camera module[6], attached via a long cable to a waist-worn battery/compute box. That box would contain a Raspberry Pi computer that records the video to an SD card and uploads it to the long-term storage location whenever a Wi-Fi signal is available.
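The record-then-upload behavior described above could look something like the following, as a rough sketch rather than a tested device implementation. Everything here is an assumption for illustration: the recorder is assumed to write timestamp-named `.mp4` segments (using a different suffix while a segment is still being written), `example.org` is a placeholder host, and `upload` is a hypothetical callable supplied by whoever runs the storage backend.

```python
import socket
from pathlib import Path
from typing import Callable, List


def wifi_available(host: str = "example.org", port: int = 443, timeout: float = 3.0) -> bool:
    """Rough connectivity probe: can a TCP connection to the (placeholder) storage host be opened?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def pending_segments(record_dir: Path) -> List[Path]:
    """Finished segments on the SD card, sorted by name (oldest first if names are timestamps)."""
    return sorted(record_dir.glob("*.mp4"))


def sync_once(
    record_dir: Path,
    upload: Callable[[Path], None],
    is_online: Callable[[], bool] = wifi_available,
) -> int:
    """Upload every finished segment if a connection is available; return how many were sent."""
    if not is_online():
        return 0
    uploaded = 0
    for segment in pending_segments(record_dir):
        try:
            upload(segment)  # hypothetical uploader provided by the caller
        except OSError:
            break  # connection dropped mid-sync; remaining segments wait for the next pass
        segment.unlink()  # free SD card space only after the upload succeeded
        uploaded += 1
    return uploaded
```

On a device, `sync_once` would be called periodically (for example every few minutes) alongside the recording process. Deleting a segment only after its upload returns keeps footage on the card through connection drops, at the cost of needing enough local storage for a full day away from Wi-Fi.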

Economic Feasibility

Some rough estimates of the involved costs:

| Description | Amount |
|---|---|
| Cost Per Recording Device | $300 USD |
| Recording Devices for 1000 People | $300,000 USD |
| 14 Hours Per Day x 5 Years | 25550 Hours |
| Storage for 1 Hour of 2k Footage | 3 GB |
| Storage for 25550 Hours | 76650 GB ≈ 77 TB |
| Storage for 1000 People | 77000 TB ≈ 77 PB |
| Cost Per Terabyte Aug 2024 | $10 USD |
| Storage Cost Per Contributor | $770 USD |
| Storage Cost for 1000 People | $770,000 USD |
| Total Material Costs for 1000 People | $1,070,000 USD |
| Organization Management for 5 Years (Recruiting Volunteers, Logistics, etc.) | $300,000 USD |
| Total Estimated Dataset Collection Cost | $1,370,000 USD |
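To make it easier to vary these assumptions, the table can be expressed as a small cost model. This is a sketch only: the `CostModel` class and its field names are illustrative, the defaults are the figures from the table, and it uses decimal units (1 TB = 1000 GB) and a 365-day year.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostModel:
    """Rough dataset collection cost; defaults mirror the table's assumptions."""
    volunteers: int = 1000
    device_cost_usd: float = 300.0
    hours_per_day: float = 14.0
    years: float = 5.0
    gb_per_hour: float = 3.0          # 2k footage
    usd_per_tb: float = 10.0          # Aug 2024 estimate
    management_usd: float = 300_000.0

    @property
    def hours_per_volunteer(self) -> float:
        return self.hours_per_day * 365 * self.years

    @property
    def storage_tb_per_volunteer(self) -> float:
        return self.hours_per_volunteer * self.gb_per_hour / 1000

    @property
    def device_total_usd(self) -> float:
        return self.volunteers * self.device_cost_usd

    @property
    def storage_total_usd(self) -> float:
        return self.volunteers * self.storage_tb_per_volunteer * self.usd_per_tb

    @property
    def total_usd(self) -> float:
        return self.device_total_usd + self.storage_total_usd + self.management_usd


baseline = CostModel()
print(f"Storage per volunteer: {baseline.storage_tb_per_volunteer:.2f} TB")
print(f"Total: ${baseline.total_usd:,.0f}")

# Example variation: 4k-class footage at an assumed 10 GB per hour.
higher_res = CostModel(gb_per_hour=10.0)
print(f"Total at 10 GB/hour: ${higher_res.total_usd:,.0f}")
```

Without the intermediate rounding to 77 TB, the baseline total lands slightly below the table's figure, at about $1.37 million. The 10 GB per hour variation is a made-up value included only to show how sensitive the total is to the storage rate.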

Open Questions & Organizational Governance

This project raises many practical and ethical concerns. A small sample:

- The right to record video in public spaces with only the recorder’s consent varies significantly by state and country, and should heavily inform which volunteers are chosen & where to recruit from.
- Does the sponsoring organization face any legal liability for volunteers that violate local recording laws?
- How should volunteers go about getting consent to record in a way that’s not awkward or disruptive?
- How much of their lives can a volunteer withhold without their data losing value?
- Does recording activities while on the job present a legal concern?
- How much should we care about the social / economic / cultural / ethnic / linguistic / mental diversity of volunteers?
- Do some types of volunteers provide more valuable data than others?
- How can researchers train off of provided data while respecting the privacy of the volunteers?
- Do rules governing medical studies provide inspiration here?
- What rules should govern who can directly view recorded data?
- Should volunteers have the right to request the deletion of submitted data?
- What’s the expected deletion rate?
- What’s the expected volunteer attrition rate?
- How can we incentivize volunteers to contribute data?
- Should volunteers have direct say in how their contributed data is used in training runs?
- How can we incentivize volunteers to consent to usage in a particular training run?
- Could providing volunteers with an equity stake in any commercial ventures derived from their contributions be a cheap way of incentivizing contributions?
- What role does data encryption play?
- How should provided data be securely stored?
- How do we minimize the time/effort/social burden on volunteers to contribute?

Closing Thoughts

I write this with several motivations:

- To invite feedback on these ideas, both the theory and the practical implementation
- To gauge interest in this line of work among the community
- To connect with people with similar interests in digital immortality
- To spur more conversation about human behavior cloning, its implementation, & its implications
