Published on August 13, 2024 5:00 PM GMT

This is a snapshot of a new page on the AI Impacts Wiki.

We’ve made a list of arguments[1] that AI poses an existential risk to humanity. These are intended as summaries: they may not contain the necessary detail to be compelling, or to satisfyingly represent the position. We’d love to hear how you feel about them in the comments and polls.

Competent non-aligned agents

Summary:

Humans will build AI systems that are 'agents', i.e. they will autonomously pursue goals

Humans won’t figure out how to make systems with goals that are compatible with human welfare and realizing human values

Such systems will be built or selected to be highly competent, and so gain the power to achieve their goals

Thus the future will be primarily controlled by AIs, who will direct it in ways that are at odds with long-run human welfare or the realization of human values

Selected counterarguments:

It is unclear that AI will tend to have goals that are bad for humans

There are many forms of power. It is unclear that a competence advantage will ultimately trump all others in time

This argument also appears to apply to human groups such as corporations, so we need an explanation of why those are not an existential risk

People who have favorably discussed[2] this argument (specific quotes here): Paul Christiano (2021), Ajeya Cotra (2023), Eliezer Yudkowsky (2024), Nick Bostrom (2014[3]).

See also: Full wiki page on the competent non-aligned agents argument

Second species argument

Summary:

Human dominance over other animal species is primarily due to humans having superior cognitive and coordination abilities

Therefore if another 'species' appears with abilities superior to those of humans, that species will become dominant over humans in the same way

AI will essentially be a 'species' with superior abilities to humans

Therefore AI will dominate humans

Selected counterarguments:

Human dominance over other species is plausibly not due to the cognitive abilities of individual humans, but rather because of human ability to communicate and store information through culture and artifacts

Intelligence in animals doesn't appear to generally relate to dominance. For instance, elephants are much more intelligent than beetles, and it is not clear that elephants have dominated beetles

Differences in capabilities don't necessarily lead to extinction. In the modern world, more powerful countries arguably control less powerful countries, but they do not wipe them out and most colonized countries have eventually gained independence

People who have favorably discussed this argument (specific quotes here): Joe Carlsmith (2024), Richard Ngo (2020), Stuart Russell (2020[4]), Nick Bostrom (2015).

See also: Full wiki page on the second species argument

Loss of control via inferiority

Summary:

AI systems will become much more competent than humans at decision-making

Thus most decisions will probably be allocated to AI systems

If AI systems make most decisions, humans will lose control of the future

If humans have no control of the future, the future will probably be bad for humans

Selected counterarguments:

Humans do not generally seem to become disempowered by possession of software that is far superior to them, even if it makes many 'decisions' in the process of carrying out their will

In the same way that humans avoid being overpowered by companies, even though companies are more competent than individual humans, humans can track AI trustworthiness and have AI systems compete for them as users. This might substantially mitigate untrustworthy AI behavior

People who have favorably discussed this argument (specific quotes here): Paul Christiano (2014), Ajeya Cotra (2023), Richard Ngo (2024).

See also: Full wiki page on loss of control via inferiority

Loss of control via speed

Summary:

Advances in AI will produce very rapid changes, in available AI technology, other technologies, and society

Faster changes reduce the ability of humans to exert meaningful control over events, because humans need time to make non-random choices

The pace of relevant events could become so fast as to allow for negligible relevant human choice

If humans are not continually involved in choosing the future, the future is likely to be bad by human lights

Selected counterarguments:

The pace at which humans can participate is not fixed. AI technologies will likely speed up processes for human participation.

It is not clear that advances in AI will produce very rapid changes.

People who have favorably discussed this argument (specific quotes here): Joe Carlsmith (2021).

See also: Full wiki page on loss of control via speed

Human non-alignment

Summary:

People who broadly agree on good outcomes within the current world may, given much more power, choose outcomes that others would consider catastrophic

AI may empower some humans or human groups to bring about futures closer to what they would choose

By the first premise, that may be catastrophic according to the values of most other humans

Selected counterarguments:

Human values might be reasonably similar (possibly after extensive reflection)

This argument applies to anything that empowers humans. So it fails to show that AI is unusually dangerous among desirable technologies and efforts

People who have favorably discussed this argument (specific quotes here): Joe Carlsmith (2024), Katja Grace (2022), Scott Alexander (2018).

See also: Full wiki page on the human non-alignment argument

Catastrophic tools

Summary:

There appear to be non-AI technologies that would pose a risk to humanity if developed

AI will markedly increase the speed of development of harmful non-AI technologies

AI will markedly increase the breadth of access to harmful non-AI technologies

Therefore AI development poses an existential risk to humanity

Selected counterarguments:

It is not clear that developing a potentially catastrophic technology makes its deployment highly likely

New technologies that are sufficiently catastrophic to pose an extinction risk may not be feasible soon, even with relatively advanced AI

People who have favorably discussed this argument (specific quotes here): Dario Amodei (2023), Holden Karnofsky (2016), Yoshua Bengio (2024).

See also: Full wiki page on the catastrophic tools argument

Powerful black boxes

Summary:

So far, humans have developed technology largely through understanding relevant mechanisms

AI systems developed in 2024 are created via repeatedly modifying random systems in the direction of desired behaviors, rather than being manually built, so the mechanisms the systems themselves ultimately use are not understood by human developers

Systems whose mechanisms are not understood are more likely to produce undesired consequences than well-understood systems

If such systems are powerful, then the scale of undesired consequences may be catastrophic

Selected counterarguments:

It is not clear that developing technology without understanding mechanisms is so rare. We have historically incorporated many biological products into technology, and improved them, without deep understanding of all involved mechanisms

Even if this makes AI more likely to be dangerous, that doesn't mean the harms are likely to be large enough to threaten humanity

See also: Full wiki page on the powerful black boxes argument

Multi-agent dynamics

Summary:

Competition can produce outcomes undesirable to all parties, through selection pressure for the success of any behavior that survives well, or through high stakes situations where well-meaning actors' best strategies are risky to all (as with nuclear weapons in the 20th Century)

AI will increase the intensity of relevant competitions

Selected counterarguments:

It's not clear in which direction AI will push the large number of competitive situations in the world

People who have favorably discussed this argument (specific quotes here): Robin Hanson (2001)

See also: Full wiki page on the multi-agent dynamics argument

Large impacts

Summary:

AI development will have very large impacts, relative to the scale of human society

Large impacts generally raise the chance of large risks

Selected counterarguments:

That AI will have large impacts is a vague claim, so it is hard to tell if it is relevantly true. For instance, 'AI' is a large bundle of technologies, so it might be expected to have large impacts. Many other large bundles of things will have 'large' impacts, for instance the worldwide continued production of electricity, relative to its ceasing. However, we do not consider electricity producers to pose an existential risk for this reason

Minor changes frequently have large impacts on the world (e.g. according to the butterfly effect). By this reasoning, perhaps we should never leave the house

People who have favorably discussed this argument (specific quotes here): Richard Ngo (2019)

See also: Full wiki page on the large impacts argument

Expert opinion

Summary:

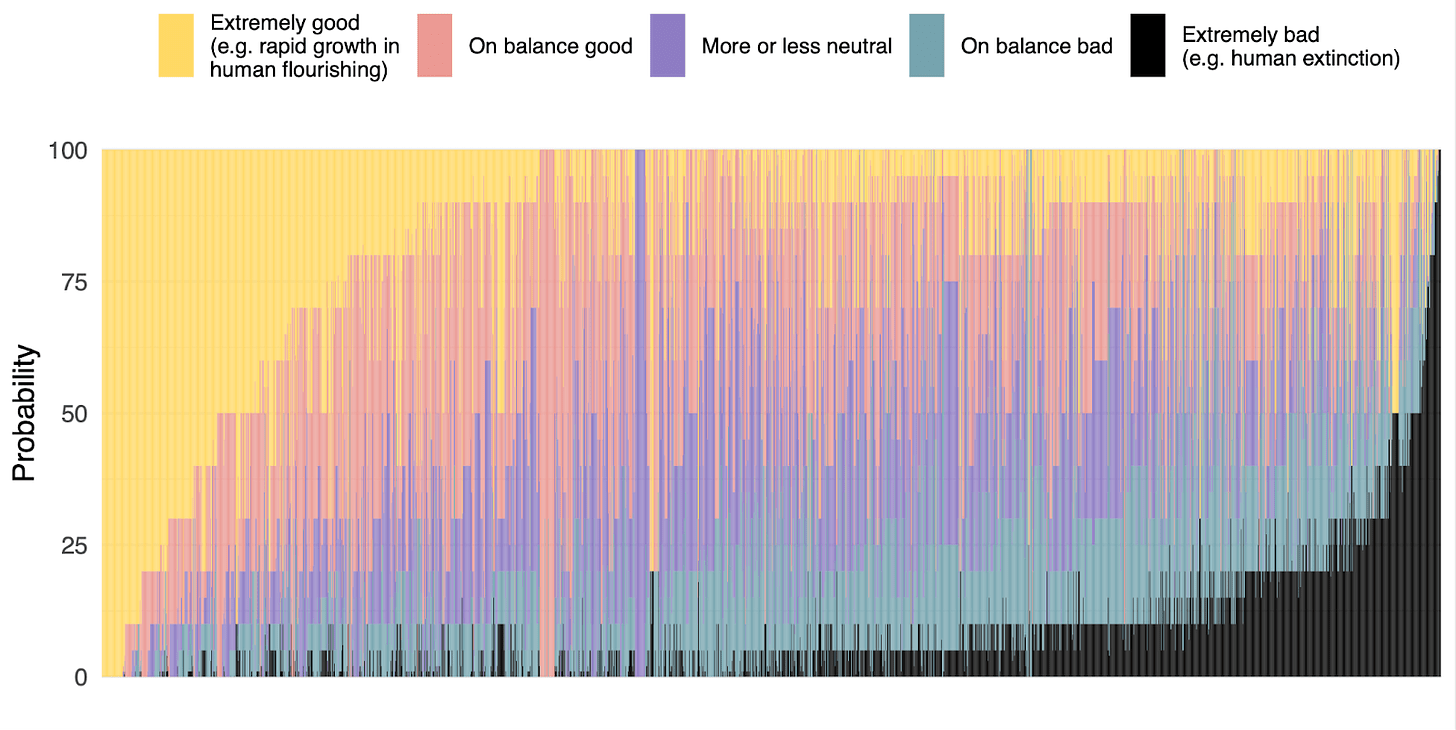

The people best placed to judge the extent of existential risk from AI are AI researchers, forecasting experts, experts on AI risk, relevant social scientists, and some others

Median members of these groups frequently put substantial credence (e.g. 0.4% to 5%) on human extinction or similar disempowerment from AI

Selected counterarguments:

Most of these groups do not have demonstrated skill at forecasting, and to our knowledge none have demonstrated skill at forecasting speculative events more than 5 years into the future

This is a snapshot of an AI Impacts wiki page. For an up-to-date version, see the wiki.

[1] Each 'argument' here is intended to be a different line of reasoning; however, they are often not pointing to independent scenarios or using independent evidence. Some arguments attempt to reason about the same causal pathway to the same catastrophic scenarios, but rely on different concepts. Furthermore, 'line of reasoning' is a vague construct, and different people may consider different arguments here to be equivalent, for instance depending on what other assumptions they make or the relationship between their understanding of concepts.

[2] Nathan Young puts 80% probability on the individual having endorsed the respective argument at the time of the quote. They may endorse it whilst considering another argument stronger or more complete.

[3] Superintelligence, Chapter 8

[4] Human Compatible: Artificial Intelligence and the Problem of Control
