Source: Xueqiu App, author: 米格星球的小星星 (https://xueqiu.com/7517920899/299799282)

Foreign media: Nvidia’s New AI Chip Delayed Due to Design Flaws: Information

Chip-maker informed Microsoft about the delays this week

Big shipments of the Blackwell series delayed until Q1 2025

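An hour ago, Bloomberg, The Information and other major global media outlets finally published reports on the delay of Nvidia's cards!

The key points are almost identical to Morgan Stanley's earlier read (an Nvidia design problem / a fix already exists / a delay of no more than four months / the shipment schedule from December onward is unaffected / demand is strong).

Because this is simply the fact!

Their source is Microsoft! In the middle of this week, Nvidia notified Microsoft and one other cloud provider of the delay.

The key points are as follows: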

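1) Design flaw: Nvidia's upcoming AI chips will be delayed by three months or more, a snafu that could affect customers such as Meta Platforms, Google and Microsoft, which have collectively ordered tens of billions of dollars' worth of the chips.

2) TSMC engineers discovered flaws while preparing for mass production.

The GB200 chip contains two connected Blackwell GPUs and a Grace central processing unit. The problem involves a processor die (a piece of silicon that holds the chip's circuits) that connects the two Blackwell GPUs. (We are not sure about this: our own sources indicate the issue is a standard cell, a flip-flop, that is unstable at high temperatures, though some have pointed out that the two sources may be describing the same problem.)

3) The B-series chips cannot be delivered, so servers are affected. The production and delivery of Nvidia NVLink server racks is affected because the companies working on the servers must wait for new chip samples before they can finalize the server rack design.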

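4) The good news is that demand remains very strong, consistent with what we found in our research on Meta and OpenAI.

Google has already ordered more than 400,000 GB200 chips!

Meta also placed an order worth at least $10 billion, while Microsoft increased the size of its order by 20% in recent weeks, though its total order size could not be learned. According to a person with direct knowledge of the order, Microsoft plans to have 55,000 to 65,000 GB200 chips ready for OpenAI by the first quarter of 2025.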

Nvidia Corp.’s upcoming artificial intelligence chips will be delayed due to design flaws, The Information reports, citing two unidentified people who help produce the chip and its server hardware.

The chips may be delayed by three months or more, which could affect Nvidia’s customers including Meta Platforms Inc., Google LLC and Microsoft Corp.

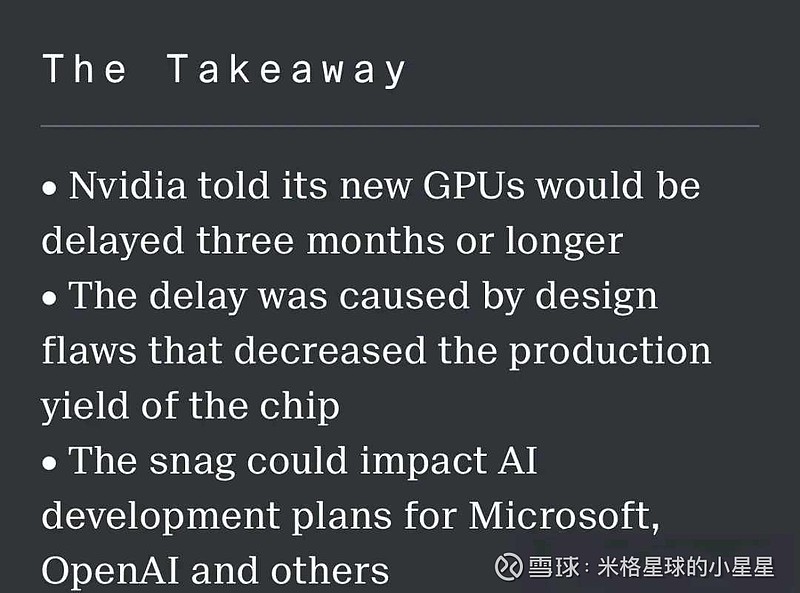

Nvidia’s upcoming artificial intelligence chips will be delayed by three months or more due to design flaws, a snafu that could affect customers such as Meta Platforms, Google and Microsoft that have collectively ordered tens of billions of dollars worth of the chips, according to two people who help produce the chip and server hardware for it.

Nvidia this week told Microsoft, one of its biggest customers, and another large cloud provider about a delay involving the most advanced AI chip in its new Blackwell series of chips, according to a Microsoft employee and another person with direct knowledge.

Nvidia unveiled Blackwell in March and CEO Jensen Huang said in May that the company planned to ship large numbers of Blackwells later this year. That was before design problems arose unusually late in the production process. Nvidia is conducting new test production runs with its chip manufacturer, Taiwan Semiconductor Manufacturing Company, to sort out the kinks, the people involved with the Blackwell chip said.

As a result, big shipments aren’t expected until the first quarter. After receiving chips, it typically takes cloud providers about three months to get large clusters of them up and running, according to someone who works on these types of data centers.

The design and production snag adds to the concerns hanging over Nvidia, which the U.S. Department of Justice is now investigating over complaints from rivals about alleged anti-competitive behavior. The company is still in a strong position, as the performance of its chips is far ahead of those of its competitors.

Shareholder expectations for the Blackwell chips are running high. An analyst at KeyBanc Capital Markets projected that the Blackwell chips could drive Nvidia’s data center revenue to more than $200 billion in 2025 from $47.5 billion in 2024. (Such estimates may not account for the new delay.)

“We will see a lot of Blackwell revenue this year,” Huang said in a May earnings call with analysts.

Nvidia’s AI server chips, known as graphics processing units, have been the lifeblood of conversational and video AI from developers such as OpenAI. They’ve also helped cloud providers such as Microsoft juice sales from renting out the chips to other developers.

If the upcoming AI chips, known as the B100, B200 and GB200, are delayed three months or more, it may prevent some customers from operating large clusters of the chips in their data centers in the first quarter of 2025, as they had planned.

The biggest customers, including Microsoft, OpenAI and Meta, plan to use the new chips to develop future generations of large language models, the software behind ChatGPT, the Meta AI assistant and other automation features.

These companies said they need many times more computing power to achieve big leaps in the performance of their software so it can give better answers to complex queries, automate multi-step tasks or generate realistic video. They expect Nvidia’s next AI chips to enable such leaps, especially when they are grouped together in a cluster, also known as a supercomputer.

An Nvidia spokesperson wouldn’t comment about its statements to customers about the delay but said customers are testing samples of Blackwell chips and “production is on track to ramp” later this year.

Spokespeople for Microsoft, Google, Amazon Web Services and Meta declined to comment. A TSMC spokesperson didn’t respond to a request for comment sent after office hours.

Nvidia’s biggest customers have big plans for GB200 chips in particular. Over the past week, Google, Meta and Microsoft have disclosed unprecedented increases in spending on data centers and AI chips, temporarily lifting Nvidia shares and prompting questions about when those companies would produce revenue and profits from the investments.

Giant Blackwell Orders

Google, for example, has ordered more than 400,000 GB200 chips, said the two people who work on the chip. Together with server hardware, the cost of Google’s orders could be well north of $10 billion, though it isn’t clear when Google expects to receive them.

To put that amount into context, Google has been on pace to spend about $50 billion this year on chips and other equipment and property, up more than 50% from last year.

Meta also placed an order worth at least $10 billion, while Microsoft in recent weeks increased the size of its order 20%, the two people said, though its total order size couldn’t be learned. Microsoft was planning to have between 55,000 and 65,000 GB200 chips ready for OpenAI to use by the first quarter of 2025, according to a person with direct knowledge of the order.

Microsoft managers had planned to make Blackwell-powered servers available to OpenAI by January but may need to plan for March or early spring, said a person with knowledge of the situation.

The Blackwell design problem came up in recent weeks, as engineers at TSMC discovered flaws in preparation for mass production, said the two people involved with the Blackwell chip production.

The GB200 chips contain two connected Blackwell GPUs alongside a Grace central processing unit. The problem involved a processor die—a piece of silicon that holds circuits for a chip—that connected the two Blackwell GPUs. The snag decreased the yield, or number of chips TSMC was able to produce for Nvidia. Such problems typically prompt companies to stop production.

As a result, Nvidia has been making adjustments to the design and will have to conduct a new production test run at TSMC before mass production can begin, the people said.

Nvidia told at least one cloud provider that it might consider producing a version of the chip that only contains one Blackwell chip, in an effort to avoid the die issue and ship chips faster, according to someone who spoke with Nvidia about the delay.

Uncommon Delay

TSMC initially planned to start mass production of the Blackwell chips in the third quarter and ship them en masse to Nvidia customers starting in the fourth quarter. The Blackwell chips are now expected to go into mass production in the fourth quarter, with the servers slated for mass shipment in the subsequent quarters if no further issue arises, they said.

Chip delays aren’t unheard of. Nvidia experienced some delays with earlier versions of its flagship GPU in 2020, according to someone with direct knowledge. But the stakes for Nvidia were lower then, and fewer customers were counting on orders to arrive so they could begin generating revenue from their data center and chip investments.

Still, it is highly unusual to uncover significant design flaws right before mass production. Chip designers typically work with chip makers like TSMC to conduct multiple production test runs and simulations to ensure the viability of the product and a smooth manufacturing process before taking large orders from customers.

It’s also uncommon for TSMC, the world’s largest chipmaker, to halt its production lines and go back to the drawing board with a high-profile product that’s so close to mass production, according to two TSMC employees. TSMC has freed up machine capacity in anticipation of the mass production of GB200s but will have to let its machinery sit idle until the snags are fixed.

The design flaw will also impact the production and delivery of Nvidia’s NVLink server racks because the companies that work on the servers have to wait for a new chip sample before finalizing a server rack design.

$中际旭创(SZ300308)$ $新易盛(SZ300502)$ $天孚通信(SZ300394)$