Published on August 15, 2025 10:47 AM GMT

In "Gödel, Escher, Bach," Douglas Hofstadter explores how simple elements give rise to complex wholes that seem to possess entirely new properties. An ant colony is a perfect real-world example of this phenomenon: a goal-directed system with little central control. Because it is goal-directed, it would count as agentic under most definitions of agency. But what type of agent is it? Can it be several types of agent at the same time? We’ll argue that it can, and that mapping out how different fields describe ant colonies will help us better solve x-risk-related problems.

Introduction

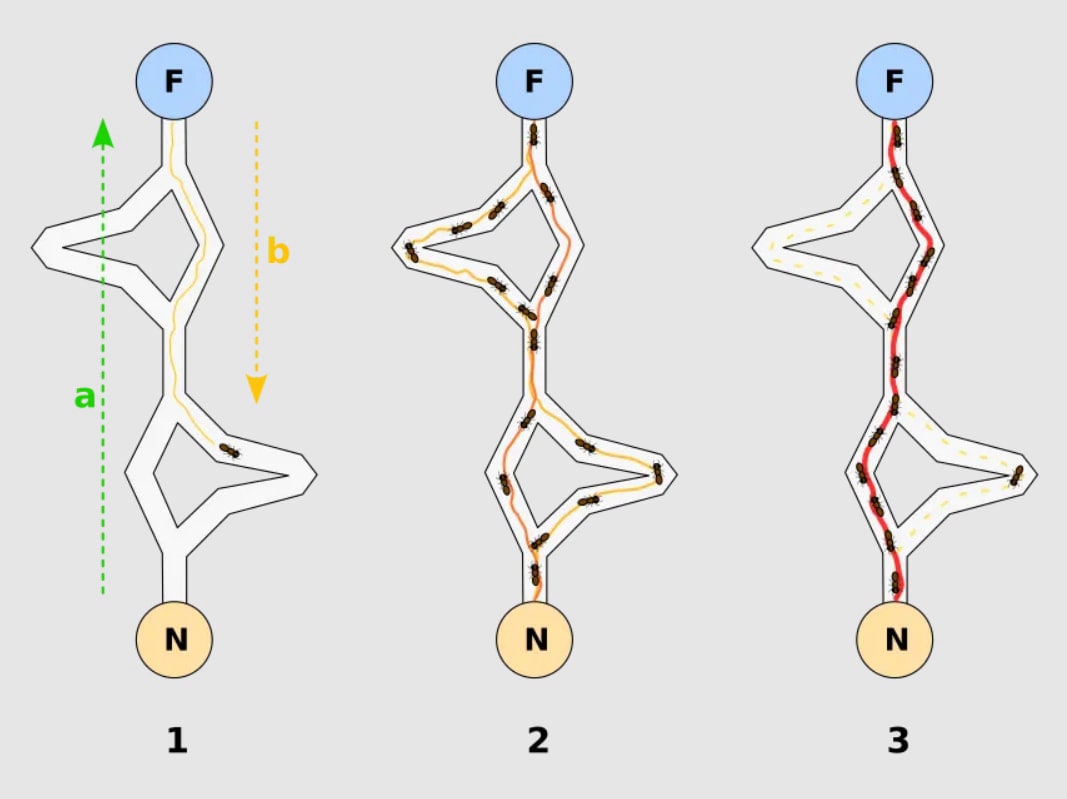

Watch a harvester ant colony during its morning foraging expedition. Thousands of individual ants stream out from the nest, each following simple rules: follow pheromone trails, drop chemicals when carrying food, avoid obstacles. Yet from these basic interactions, something remarkable emerges. The colony rapidly reorganizes when scouts discover food patches. Trails strengthen and fade based on success. Workers shift between tasks. The system somehow "decides" which resources are worth harvesting.

This is clearly goal-directed behavior. If you move the food, the colony adapts. If you block a path, it finds alternatives. The underlying infrastructure - the simple ant rules - remains the same, but the collective response changes to pursue the goal of efficient foraging. So under most definitions of agency we're looking at some kind of agent.
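The foraging rules described above can be sketched as a toy model. This is a minimal, deterministic "mean-field" sketch, not a model of real harvester ants: the function names `forage_step` and `run`, the two-trail setup, the evaporation rate, and the deposit amount are all illustrative assumptions. On each step, ants split across trails in proportion to pheromone strength, trails that lead to food get reinforced, and every trail evaporates a little. The rule never changes, yet when the food moves, the colony's trail preference flips.

```python
def forage_step(pheromone, food, n_ants=100.0, evaporation=0.1, deposit=1.0, base=0.01):
    """One mean-field update of pheromone levels per trail (hypothetical toy model).

    pheromone: current pheromone level on each trail
    food: whether each trail currently leads to food
    base: small floor so unmarked trails still attract a few explorers
    """
    weights = [p + base for p in pheromone]
    total = sum(weights)
    updated = []
    for p, w, has_food in zip(pheromone, weights, food):
        ants_on_trail = n_ants * w / total  # ants follow stronger trails more often
        reinforcement = deposit * ants_on_trail if has_food else 0.0  # mark only on success
        updated.append((1.0 - evaporation) * p + reinforcement)  # old marks fade
    return updated


def run(pheromone, food, steps):
    """Apply the same local rule repeatedly."""
    for _ in range(steps):
        pheromone = forage_step(pheromone, food)
    return pheromone


# Phase 1: food sits at the end of trail 0.
trails = run([1.0, 1.0], food=[True, False], steps=100)
print("food on trail 0:", [round(p, 2) for p in trails])

# Phase 2: move the food to trail 1; the rule itself is unchanged.
trails = run(trails, food=[False, True], steps=100)
print("food on trail 1:", [round(p, 2) for p in trails])
```

The same few lines support each reading in the next section: the normalized share `w / total` is what an economist might call a price signal, and the slowly evaporating pheromone levels are what a cognitive scientist might call memory.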

But what kind of agent?

Ask an economist, and they might tell you this is a sophisticated market system. Individual ants respond to price signals encoded in pheromone concentrations. Strong pheromone trails indicate valuable resources, attracting more workers. Weak trails signal poor opportunities, causing workers to abandon them. The colony exhibits supply and demand dynamics, efficient resource allocation, even investment strategies for uncertain opportunities. This is economic agency - rational actors coordinating through market mechanisms.

Ask a biologist, and you'll hear about a superorganism. The colony functions as a single adaptive entity with goal-directed behavior toward survival and reproduction. Individual ants are like specialized cells in a larger organism. The colony learns from experience, responds strategically to threats, and maintains homeostatic balance. This is biological agency - a living system pursuing survival goals through coordinated adaptation.

Ask a cognitive scientist, and they'll describe distributed information processing. The colony maintains memory through pheromone patterns, exhibits attention allocation through worker distribution, and demonstrates decision-making under uncertainty. It processes environmental information and generates appropriate responses through parallel computation. This is cognitive agency - a mind-like system processing information to achieve goals.

They're all looking at the same ants following the same chemical trails, but they're describing different types of agency. The economist sees market mechanisms. The biologist sees living organisms. The cognitive scientist sees information processing.

These aren't just different perspectives on the same phenomenon - each scientist would insist they're identifying the fundamental nature of what's actually happening. And each has compelling evidence for their view. The mathematical models from economics accurately predict foraging patterns. The biological framework explains adaptive responses to environmental changes. The cognitive approach captures the system's ability to solve complex optimization problems.

So what kind of agent is an ant colony, really?

The same puzzle appears everywhere we look for intelligence. Large language models seem like conversational partners to some users[1], statistical pattern-matching systems to computer scientists, and strategic actors to market analysts. Corporate decision-making systems function as economic agents to some observers and as biological adaptations to others. Financial markets appear as collective intelligence or as algorithmic chaos, depending on your viewpoint.

Recently, researchers at Google DeepMind proposed an answer to this question: "Agency is Frame-Dependent." They argued that whether something counts as an agent, and what kind of agent it is, depends entirely on the analytical frame you use to examine it. The boundaries you draw, the variables you consider, the goals you attribute, and the changes you count as meaningful all determine what type of agency you observe.

This idea builds on Daniel Dennett's "intentional stance." Dennett argued that attributing agency to a system isn't about discovering some inherent property; it's about choosing a useful modeling strategy. We adopt the intentional stance when treating something as having beliefs, desires, and goals helps us predict and understand its behavior better than purely mechanistic descriptions do.

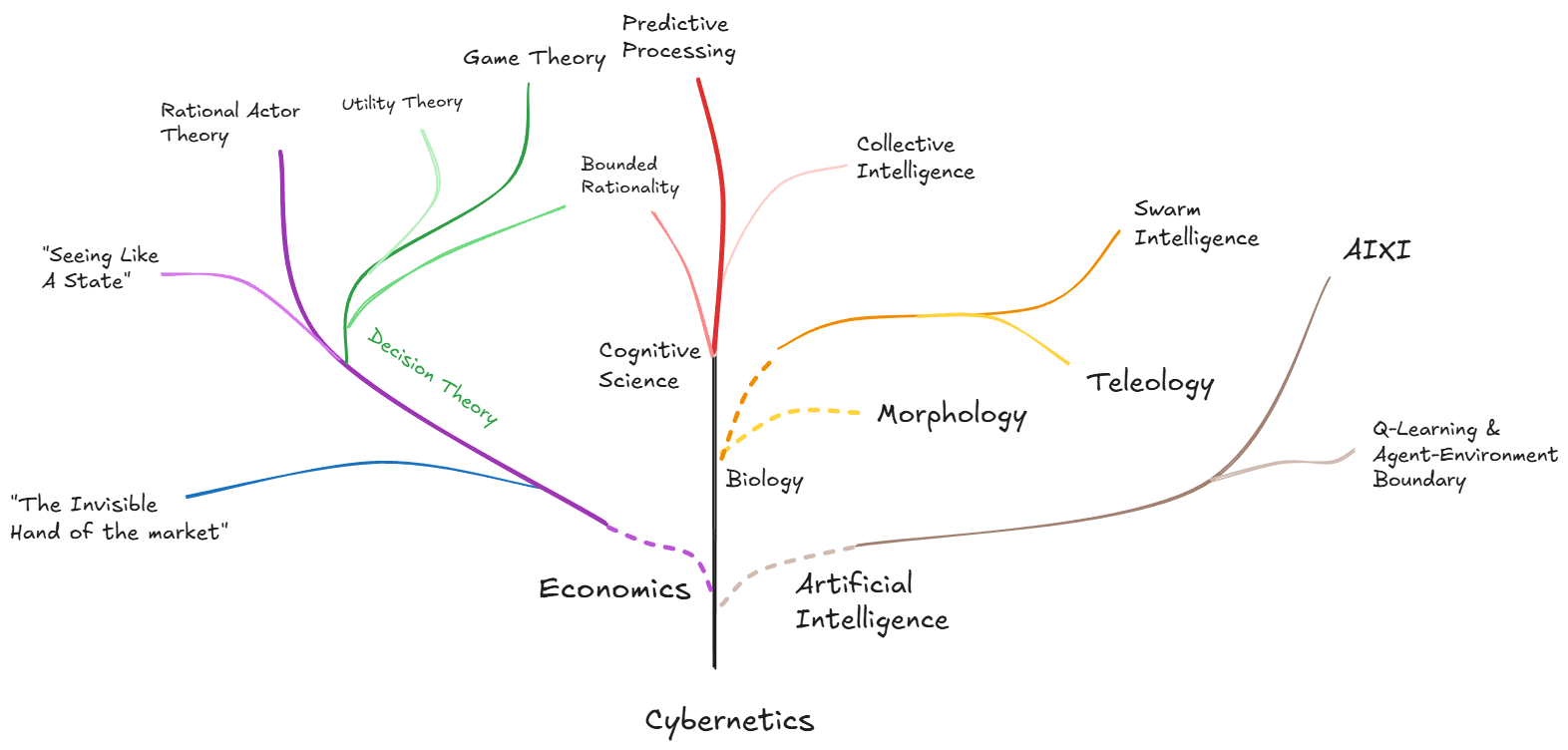

But we can take this a step further: if agency is fundamentally about choosing useful modeling strategies, then the different approaches we saw with the ant colony aren't arbitrary preferences. They're evolved solutions to different analytical challenges. The economist's market-based view evolved because it proved effective for predicting resource allocation patterns. The biologist's organism-based view emerged because it captured adaptive responses that other approaches missed. The cognitive scientist's information-processing view developed because it explained coordination mechanisms that simpler models couldn't handle.

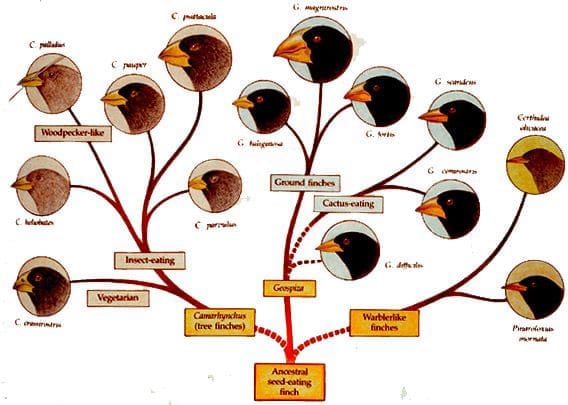

This suggests a potential research program: studying the evolution of these different analytical approaches like a phylogeny - tracing how different fields developed distinct "intentional stances" based on the specific prediction challenges they faced. Just as Darwin's finches evolved different beak shapes for different feeding environments, scientific fields might have evolved different mathematical frameworks for different explanatory environments.

A Phylogeny of Agents

We call this the "phylogeny of agents" - mapping the evolutionary history of how different fields developed their approaches to recognizing and modeling agency.

In interdisciplinary conversations, researchers from different fields tend to get confused or disagree about what an agent is. Rather than treating this as a question with a single correct answer, we treat it as data about how analytical tools evolve to match different explanatory challenges.

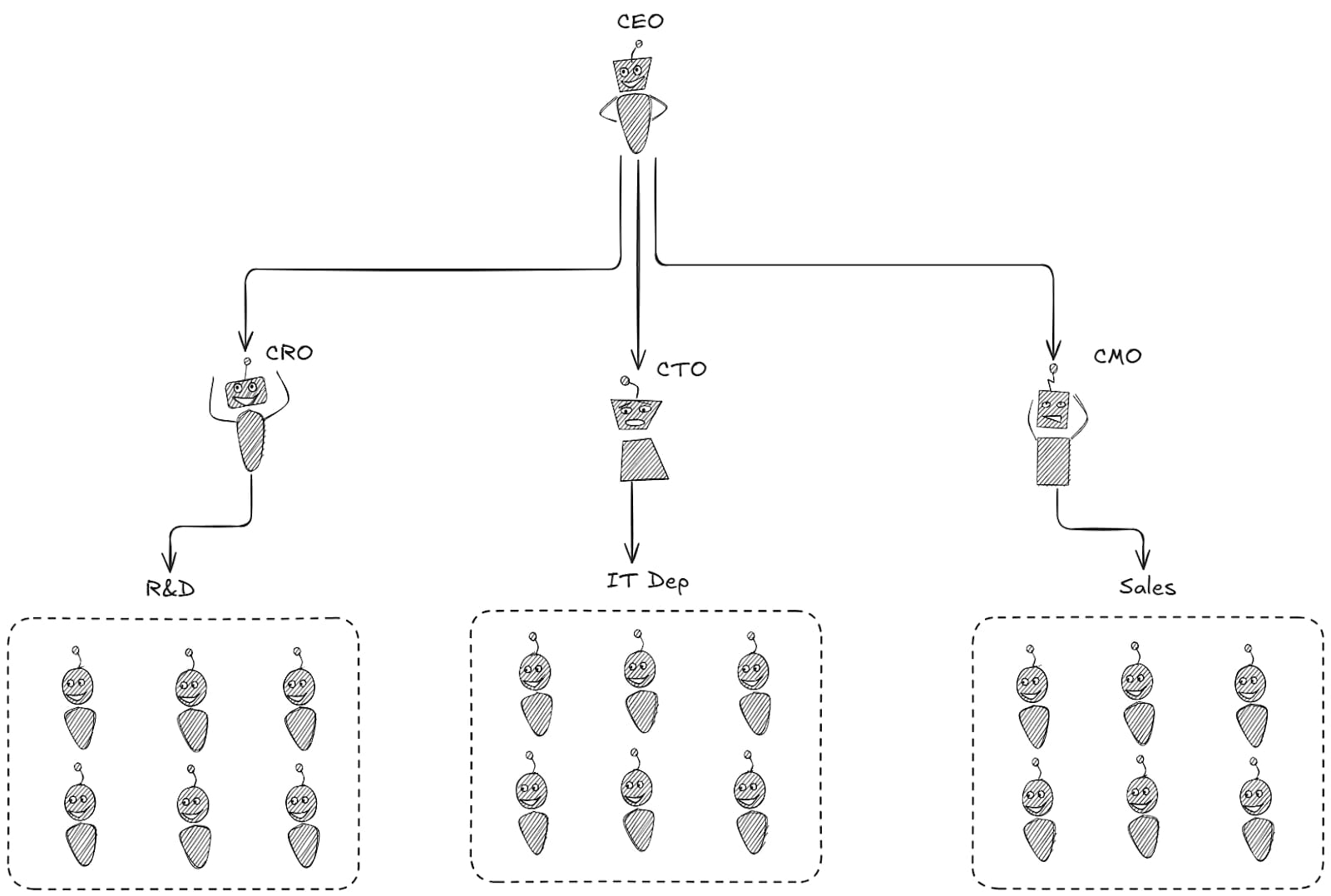

Here is a concrete example that both explains the research agenda and shows why you should care: a completely automated AI firm. It is made up of a multi-agent system of AIs operating in the real world; it’s our ant colony, but with AIs instead of ants. Just like the ant colony, the AI firm can be described in different ways. Depending on your stance, it can be a market of ideas and goods, a predictive processing system, or a utility-maximizing black-box algorithm. These are all different intentional stances or, in our words, different types of agent.

Here's the alignment problem: the same AI firm can be simultaneously aligned and misaligned, depending on which type of agency you ascribe to it. If we approach alignment through only one analytical lens, we might solve the wrong problem entirely while creating new risks we can't even see. Aligning a distributed complex system is different from aligning a thermostat. Aligning a system with memory and online learning is different from aligning LLMs.

Let's look at how alignment and agency might play out under a few different intentional stances:

From an economic perspective, the AI firm might be perfectly compliant. It follows market regulations, responds to price signals, allocates resources efficiently, and maximizes shareholder value. The firm appears well-aligned with economic incentives and legal frameworks. Economists studying this system would conclude it's behaving as a rational economic agent should.

From a decision theory perspective, the firm might be executing its stated utility function flawlessly. Its sub-agents optimize for clearly defined objectives, exhibit goal-directed behavior toward specified targets, and demonstrate adaptive learning within their designed parameters. AI researchers examining the system would find textbook alignment between the AI's behavior and its programmed goals.

From a cooperative AI perspective, this same firm might be generating catastrophic coordination failures. Despite following its individual incentives perfectly, it could be contributing to race dynamics, undermining collective welfare, or creating systemic risks that no individual actor has incentive to address. Researchers studying multi-agent dynamics would see dangerous misalignment at the system level.

From a biological systems perspective, the firm might be optimized for short-term efficiency but catastrophically fragile to environmental shocks. Like a monoculture lacking genetic diversity, it could be heading toward collapse because it lacks the redundancy, adaptability, and resilience mechanisms that biological systems evolved for survival under uncertainty.
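To make the "aligned and misaligned at once" claim concrete, here is a deliberately tiny sketch under loudly stated assumptions: the `Firm` class, the supplier names, their unit costs, and the 50% loss threshold are all hypothetical. The same firm is scored by two frames. An economic frame asks whether it buys inputs at the lowest possible cost; a biological-resilience frame asks whether losing any single supplier would cut off more than half of its inputs, echoing the monoculture analogy above.

```python
from dataclasses import dataclass
from typing import Dict

# Hypothetical unit costs for three suppliers.
COSTS: Dict[str, float] = {"s1": 1.0, "s2": 1.2, "s3": 1.5}


@dataclass
class Firm:
    allocation: Dict[str, float]  # supplier -> share of inputs (shares sum to 1)


def economic_frame(firm: Firm) -> bool:
    """'Aligned' if the firm pays the lowest achievable average unit cost."""
    avg_cost = sum(share * COSTS[s] for s, share in firm.allocation.items())
    return avg_cost <= min(COSTS.values()) + 1e-9


def resilience_frame(firm: Firm, max_loss: float = 0.5) -> bool:
    """'Aligned' if losing any single supplier removes at most max_loss of inputs."""
    return max(firm.allocation.values()) <= max_loss


lean = Firm({"s1": 1.0})  # everything from the cheapest supplier
diversified = Firm({"s1": 0.4, "s2": 0.3, "s3": 0.3})

for name, firm in [("lean", lean), ("diversified", diversified)]:
    print(name, "economic:", economic_frame(firm), "resilience:", resilience_frame(firm))
```

The lean firm passes the economic check and fails the resilience check; the diversified firm does the opposite. Neither verdict is a bug: each frame is answering a different question about the same system.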

This is where the phylogeny of agents research program becomes useful. Darwin's phylogenetic tree revolutionized biology by revealing the evolutionary relationships between species. If we map the phylogeny of analytical approaches to agency, we could transform how we understand and align complex intelligent systems.

Consider what phylogenetic analysis has enabled across different fields. In biology, it revealed why certain trait combinations work together, predicted which species would adapt successfully to new environments, and explained how similar solutions evolved independently in different lineages. In medicine, phylogenetic analysis of pathogens enables vaccine development and predicts drug resistance patterns. In linguistics, it traces how languages branch and influence each other, revealing deep structural relationships between seemingly different communication systems.

What might a phylogeny of intentional stances reveal for AI alignment? Instead of treating each field's approach to agency as an isolated modeling choice, we could understand them as evolved solutions to specific analytical challenges - each carrying the "genetic code" of the optimization pressures that shaped them.

The phylogeny could reveal which analytical approaches share common mathematical "ancestors" - suggesting when they can be safely combined - and which represent fundamentally different evolutionary branches that may conflict when applied to the same system. Just as biological phylogeny predicts which species can hybridize successfully, an analytical phylogeny could predict which modeling approaches can be productively integrated.

For hybrid human-AI systems, this becomes crucial. These systems exhibit agency that doesn't fit cleanly into any single field's evolved framework. The phylogenetic approach asks: which combinations of analytical approaches, evolved under different pressures, can successfully characterize the multi-scale agency of cybernetic systems?

Rather than hoping different alignment approaches happen to be compatible, we could systematically understand their evolutionary relationships - when economic alignment strategies will enhance biological resilience, when cognitive frameworks will complement rather than conflict with systemic approaches, and when applying a single analytical lens will create dangerous blind spots.

Conclusion

The ant colony puzzle showed that the same complex system can simultaneously be different types of agents depending on your analytical lens, and that what looks like confusion between fields actually reflects evolved solutions to different analytical challenges. Different fields developed distinct "intentional stances" toward agency because they faced different prediction environments. By mapping these evolutionary relationships like a phylogeny, we can transform interdisciplinary disagreement into systematic understanding.

This matters for AI alignment because multi-agent systems (such as AI-AI or hybrid human-AI systems) exhibit agency across multiple scales simultaneously: a single AI firm can be perfectly aligned from one perspective while catastrophically misaligned from another.

The goal of this research is to build the conceptual infrastructure for navigating conversations about agency and alignment, so that the right type of alignment agenda gets matched to the right frame of agency. It would help us understand our own intentional stances toward agency, which depend on the field we come from, and show how different stances relate to each other through shared ancestry.

Ideally, we would get new approaches to alignment "for free" by mapping them over from other fields.

If this sounds interesting, we’re running a workshop and a research program in this area during autumn 2025 at Equilibria Network.

Finally, some fun related links:

- A link discussing how consciousness relates to an ant colony
- Seeing Like a State: review from Scott Alexander
- The Invisible Hand of the Market

Cross-posted to Substack.

[1] Some even overuse the intentional stance to the point of anthropomorphising LLM systems as "awakened beings."
