Published on August 9, 2025 11:10 PM GMT

To prevent potentially misaligned LLM agents from taking actions with catastrophic consequences, you can try to monitor LLM actions - that is, try to detect dangerous or malicious actions, and do something about it when you do (like blocking the action, starting an investigation, …).[1]

But where in your infrastructure should your LLM monitor be implemented, and what team in your organization should own it? Historically, researchers have mostly focused on implementing monitoring inside the agent scaffold, which would mean that it would be owned by agent product teams. But actually there are also three other important places where you might put it, and I think these are comparable in importance to LLM monitors in agent scaffolds.

This is important for a few reasons:

- This might inspire us to focus on developing better monitoring techniques and control measures that can be implemented strictly with the affordances of one of these four places.It tells us about which teams in the AI companies might be crucial for AI control efforts later; it might be good to talk to these teams about AI control now, or to work with those teams (even on projects that aren’t directly relevant for mitigating risk from misaligned AI) in the hope of helping with implementing effective control techniques later.[2]

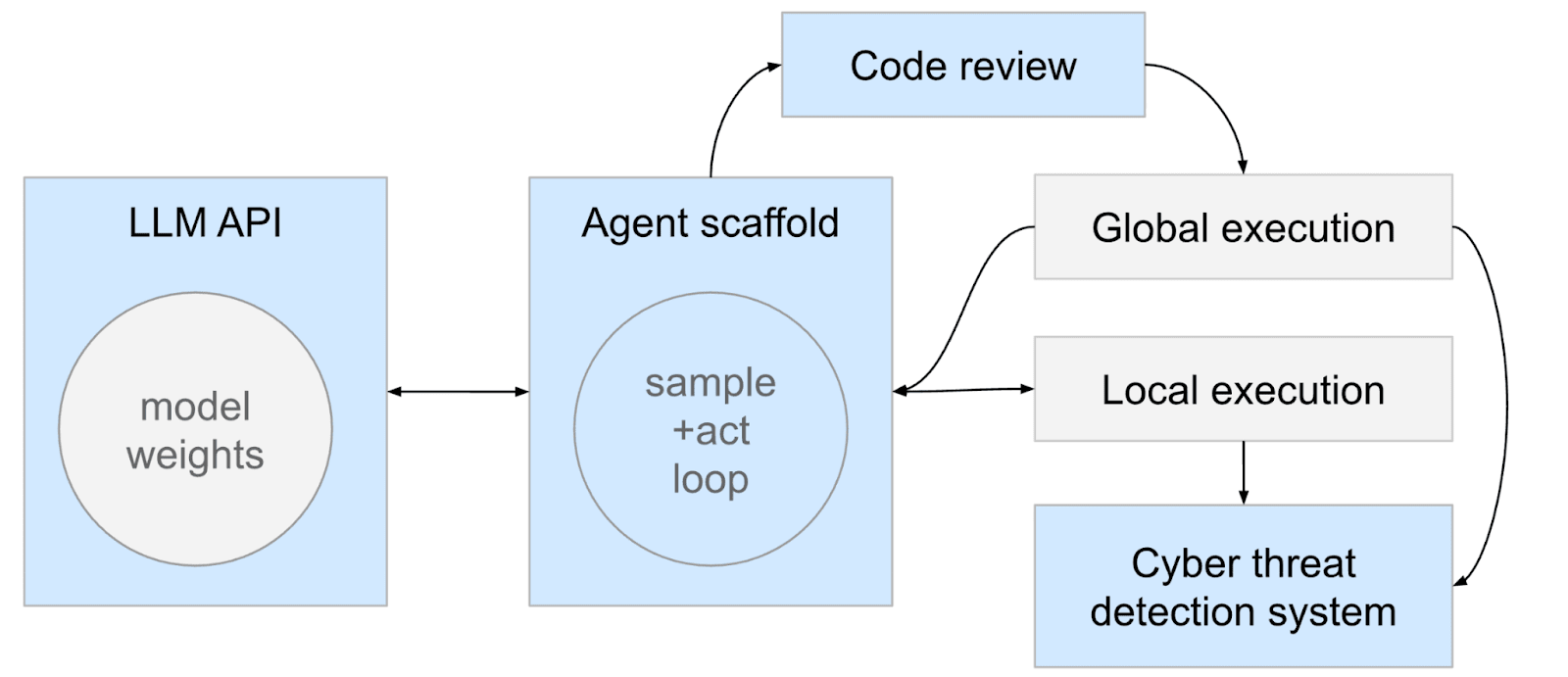

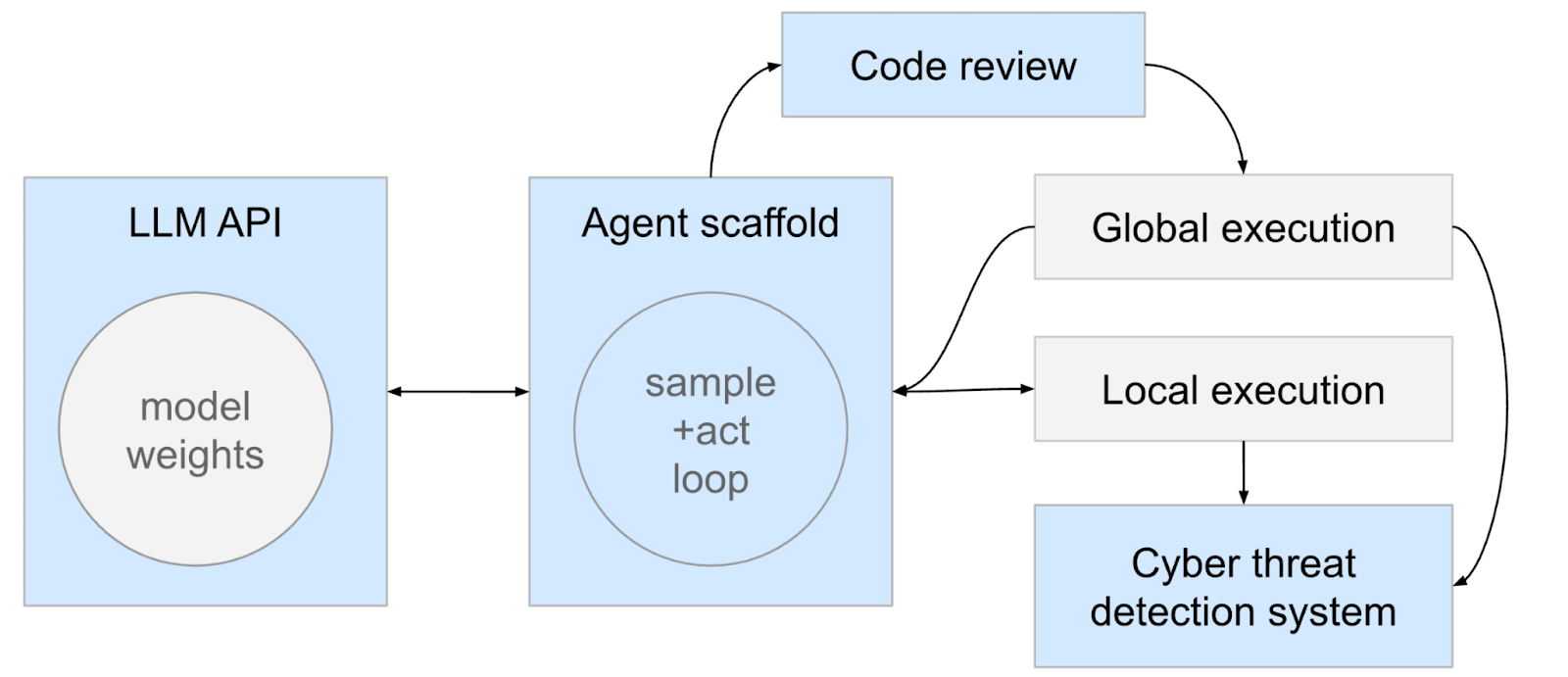

TL;DR: You can put your monitors in these blue boxes:

Monitors in agent scaffolds

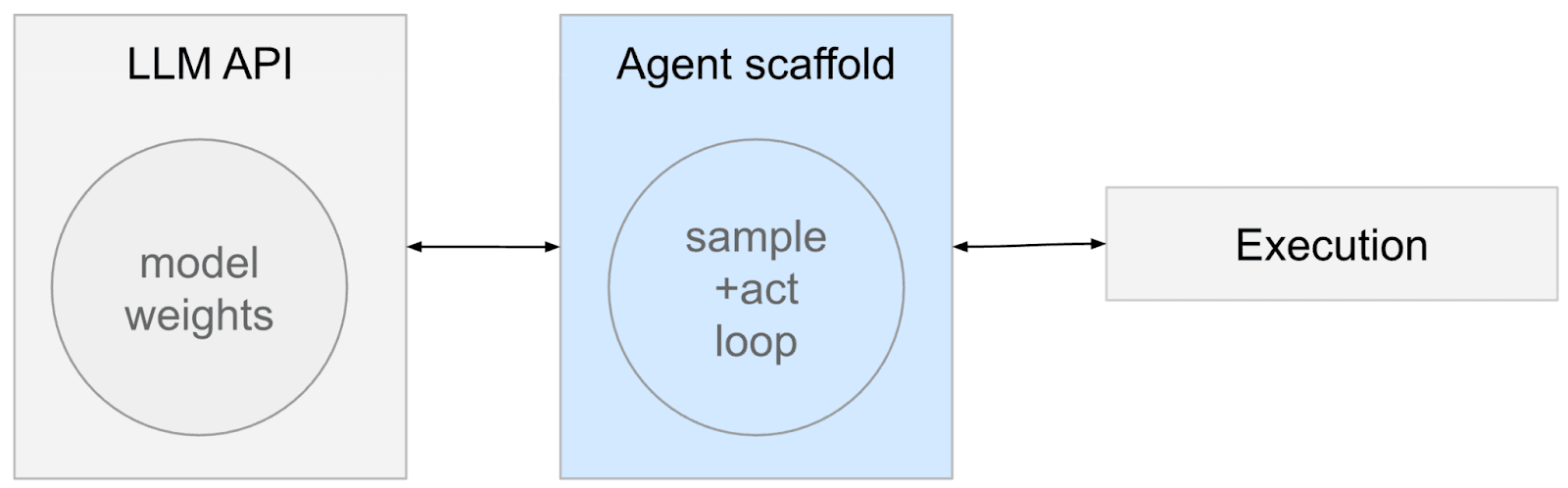

Agent scaffolds are what links an LLM API and an execution environment, so it’s natural to put your monitors there (diagrams adapted from here):

This puts your monitor logic next to your agent logic - which often handles permissions and decides when to call the API and when to execute actions - at least in scaffolds like Claude Code and Cursor agents.[3]

Strengths:

- Simplicity. This is the simplest option and the easiest one to change, as this is already where a lot of the permission system lives, and where end-users can describe their permission-related preferences.Flexibility. You can adapt the permission system to deal with LLM monitors, and implement all the fancy strategies described in AI control papers.Context. Agent scaffolds have context of both the LLM context and the execution environment.

Weaknesses:

- Regular updates. Agent scaffolds are regularly updated and new ones are regularly created, which is not ideal if monitors become a critical piece of security infrastructure.Novelty. Monitors within agent scaffolds would be relatively new, and don’t benefit from the inertia and industry experience of other kinds of monitors.Cultural differences. The team that builds agents has historically been less focused on detecting adversarial threats or building systems to triage noisy signals of risk. One particularly important application of monitoring is to have a human investigate actions that are flagged as most dangerous. The agent team is not well organizationally set up to fulfill this function, because they probably aren't already in the business of maintaining a team of experts who can investigate flagged actions and escalate appropriately.(sometimes) Vulnerable environment. The environment where the agent scaffold logic sometimes runs on a machine that the agent or the user controls (e.g. this is currently the case for Claude Code, but probably not for ChatGPT code interpreter), which opens up attacks where the agent or the user disables monitoring.

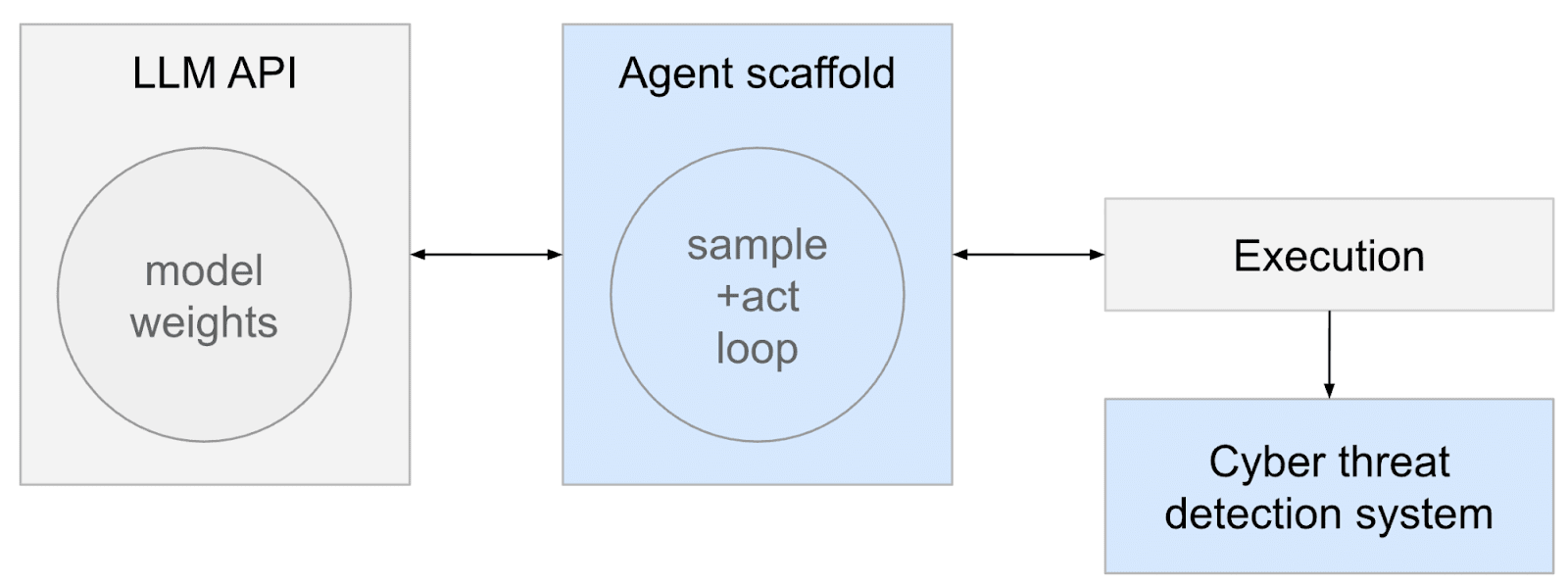

Monitors in cyber threat detection-and-response systems

All big organizations have “detection and response” teams that try to detect malicious activities on the organization’s devices. They collect tons of data on all the commands and processes run, on file modifications, on network traffic, etc. They do this with specialized software like Crowdstrike which collects and helps analyze this data. (As the very wide impact of the Crowdstrike Windows bug illustrates, this is very common.)

When some suspicious sequence of events is recorded, someone on the detection and response team and/or on Crowdstrike’s team is pinged and collects more data related to the detection (either by looking at existing logs or if the situation is severe, by monitoring an employee’s activity in real time).[4]

The suspicion is usually not computed using the sorts of LLM-based monitors that I usually think about (rule-based detection like YARA rules and old-school AI anomaly detection are more common), but this is not a strong reason to discount the effectiveness of such measures - they are effective enough that pen-testing exercises regularly result in the detection and response team catching the attackers.[5]

Therefore, I think cyber threat detection system is an important place where to put your (LLM-based and non-LLM-based) monitors:

Strengths:

- Maturity. The infrastructure already exists and is relatively mature (at least for non-LLM-based monitors). And the teams that do this work are already set up to handle the associated organizational difficulties: for example, needing to have access to sensitive information from other teams, and needing to get specialized professionals to do fairly repetitive work that rarely feels like it directly pays off.Execution context. Cyber threat detection systems have a rich context on where actions are executed. This relatively direct access to the consequences of actions, also makes it less sensitive to justifications and to code obfuscation.Automatic robustness to collusion. Models might be able to help a lot with investigating event logs for suspicious activity without having to directly look at the actions that AI agents took that led to an event being recorded. This is good for us because the AI doing incident response might (as a result of the limited information flow) have trouble colluding with the agents being monitored to not point out occasions on which the monitored agents are actually trying to attack.

Weaknesses:

- Lack of LLM context. They lack context on the reason why an LLM agent took the actions it took.Information overload. The firehose of information that these systems have to go through can make it difficult to piece together sequences of action and use expensive LLM-based monitors.Not applicable to non-cyber threats. Much less applicable to threats that don’t look like cyberattacks (e.g. persuasion, data poisoning of successor AIs, sandbagging, …).Mostly blunt interventions and latency. If you do catch something, it’s hard to intervene in a subtle way, and it’s sometimes a bit too late. This means this is best suited for offline monitoring.

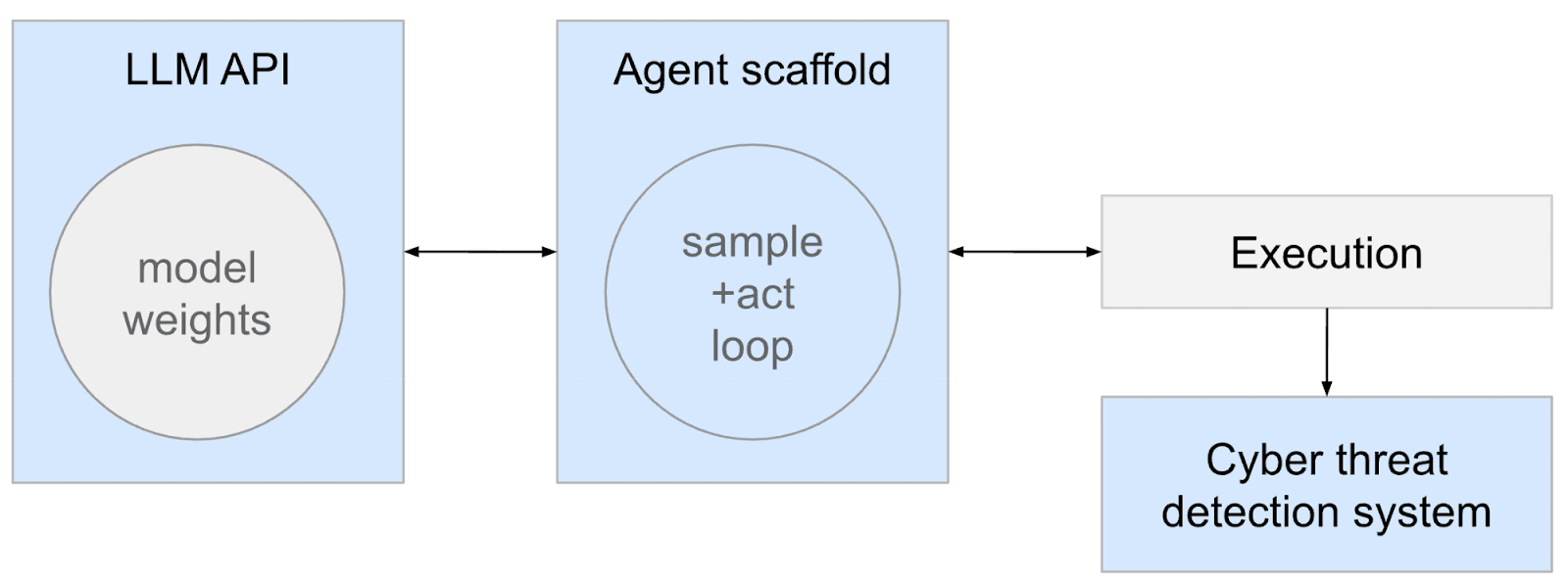

Monitors in LLM APIs

On the opposite side of the cyber threat detection systems, you can try to monitor LLM generations before they even have the chance of being executed by doing monitoring on the LLM API:

- just like Anthropic tries to block generations which contain information related to bioweapons, you could try to block generations that contain actions which are too dangerous;just like OpenAI investigates ChatGPT uses by cyberattack groups, you could try to have an investigation team that tries to piece together evidence about a model potentially generating misaligned actions.

Strengths:

- Robustness. This means it’s harder to accidentally not use monitoring on one of your agent scaffolds, or to have problems resulting from AIs using LLM APIs directly in the course of research they're doing.LLM context. The API has all the context the LLM has, which in principle is much of the context you need to assess the risks posed by a certain action. (The LLM, of course, needs to decide what actions to take just based on the context that it's given. But in practice, we might be able to get big advantages from exposing more context to our monitors than we expose to the LLM itself.)Some maturity. People have already started deploying LLM monitors in LLM APIs.

Weaknesses:

- Execution context. The LLM API does not know where actions will be executed by default. Maybe they will be executed in a secure sandbox or won’t be executed at all - or maybe they will be executed in a very sensitive environment. (In some cases, we might actively prefer the AI to not know some facts about the security properties of the system where its code will be run.) This also means it is harder to implement fine-grained policies.Disconnection from the agent scaffolds. If you want to do things like “if the monitor is too suspicious, tell the agent scaffold to ask the user”, then you need to update both the API and the agent scaffold. This coordination is inefficient and can slow down iteration speed.

The right strategy might be to have both safe and unsafe LLM APIs.

- The safe LLM API is monitored aggressively enough that you feel okay about it being used from any code running inside your data center, even if that code isn't implementing safety measures itself.The unsafe API is much less strictly monitored and that is only allowed to be used by scaffolds that have demonstrated that they have implemented robust safety measures external to the LLM API.

Monitors in code reviews

Finally, you can add monitors where people are already doing a bunch of fuzzy monitoring: in code reviews! It is already commonplace to have humans review code before it is deployed with a higher level of permissions (e.g. when using infrastructure-as-code, the code is often only applied when merged). When AIs write more of the code, this becomes a natural place where you can monitor what AIs do. And if the review load becomes too high for humans, you can add AI assistance and eventually have AIs do most of the review work.

Strengths:

- Maturity. People have been doing code reviews for agesExecution context. You mostly know where code will be executed when merged (especially for things like infrastructure as code) - it’s not just code that will be run in a sandboxLLM context. While you might not get the full LLM transcript, you do get the rationale for implementing a change.Flexibility. This is a system independent of the core agent scaffold, so you can likely change it a bunch without hurting productivity much, and it’s much more natural to fall back to a human than in the agent scaffold case.

Weaknesses:

- Not applicable to non-reviewed threats. This only works for threats that go through malicious code passing the code review.

So where should you put the monitors?

Since they have overlapping strengths and weaknesses, ideally all of the above:

| Flexibility | Execution context | LLM context | Robustness | Generality | Maturity | |

| Monitors in agent scaffolds | ++ | ++ | ++ | -/+[6] | + | - |

| Monitors in cyber threat detection systems | - | ++ | - | + | - | ++ |

| Monitors in LLM APIs | - | - | ++ | ++ | ++ | + |

| Monitors in code reviews | + | + | + | ++ | - | ++ |

My (low-confidence) prioritized list would look something like:

- Make sure you are not missing on the baseline cyber threat detection systems (if you are an AI company, you were supposed to do that anyway)Then focus on building monitors where it is easy, which is probably in the agent scaffoldThen add things to the API to make it more robust.Then add automated monitors to the code review if humans start rubber-stamping code reviews because AIs are producing too much code.And continuously maintain / improve all of the above.

But this depends a lot on the organization and the effort that people are willing to put in monitors. For example, I can imagine that in some organizations, causing bad outcomes is only possible if code is reviewed and merged and that adding monitors to APIs and to agent scaffolds is hard, in which case I am sympathetic to focusing on code review monitors.

Appendix: Further subdivisions

- Agent scaffold SDKs vs Outer agent scaffolds: maybe you can integrate the monitors in a simple “core” of the agent scaffold (e.g. like the Claude Code SDK), or maybe you can add monitors when you build your “outer agent scaffold” (e.g. when you use the Claude Code SDK, and add features using Claude Code Hooks);Core APIs vs API proxies: maybe you can have the LLM monitor be built into the actual inference API, or maybe you can create an API proxy that does the monitoring and then routes to the regular API. The latter blurs the line a lot with what an agent scaffold does! For example, things like the Cursor API are parts of what an agent scaffold, since it does prompt manipulation, and part API proxy, since it routes the request to the appropriate API);CI testing vs code review assistance: maybe you can add an LLM monitor as part of the tests that have to pass to merge a pull request in the CI, or maybe you can integrate it in the UI that code reviewers use to review pull requests;Detection on actions vs detection on consequences: maybe the cyber threat detection system can detect dangerous commands, or maybe it can detect the effects of dangerous commands.

- ^

By monitoring I mostly intend to cover detection, which is an important component of most deployment-time AI control measures.

- ^

Working at AI companies has a bunch of other positive and negative consequences. This post is definitely not a generic endorsement of joining an AI company to help deal with AI misalignment risk later.

- ^

Note: I group here both front-end and backend scaffolds. In Claude Code, the agent scaffold runs on the same device as the one on which most actions are executed, but this does not have to be the case.

- ^

When monitoring human attackers, it is common to wait before acting to collect more data. This seems less essential for LLMs if there is the possibility to replay the attack.

- ^

But more effort could make them better! These teams are often hiring and buying services to make themselves more effective. I suspect this could be a great path to impact if you have a relevant background.

- ^

I’d rate the robustness of monitors in agent scaffolds as - for client side agent scaffolds and + for server-side agent scaffolds.

Discuss