Editor’s note: This article, originally posted on Oct. 23, 2024, has been updated.

Physical AI — the embodiment of artificial intelligence in robots, visual AI agents, warehouses and factories and other autonomous systems that operate in the real world — is experiencing its breakthrough moment.

To help developers build effective physical AI systems in industries such as transportation and mobility, manufacturing, logistics and robotics, NVIDIA builds three computers that advance physical AI training, simulation and inference.

What Are NVIDIA’s Three Computers for AI Robotics?

NVIDIA’s three-computer solution comprises: (1) NVIDIA DGX AI supercomputers for AI training, (2) NVIDIA Omniverse and Cosmos on NVIDIA RTX PRO Servers for simulation and (3) NVIDIA Jetson AGX Thor for on-robot inference. This architecture enables complete development of physical AI systems, from training to deployment.

What Is Physical AI, and Why Does It Matter?

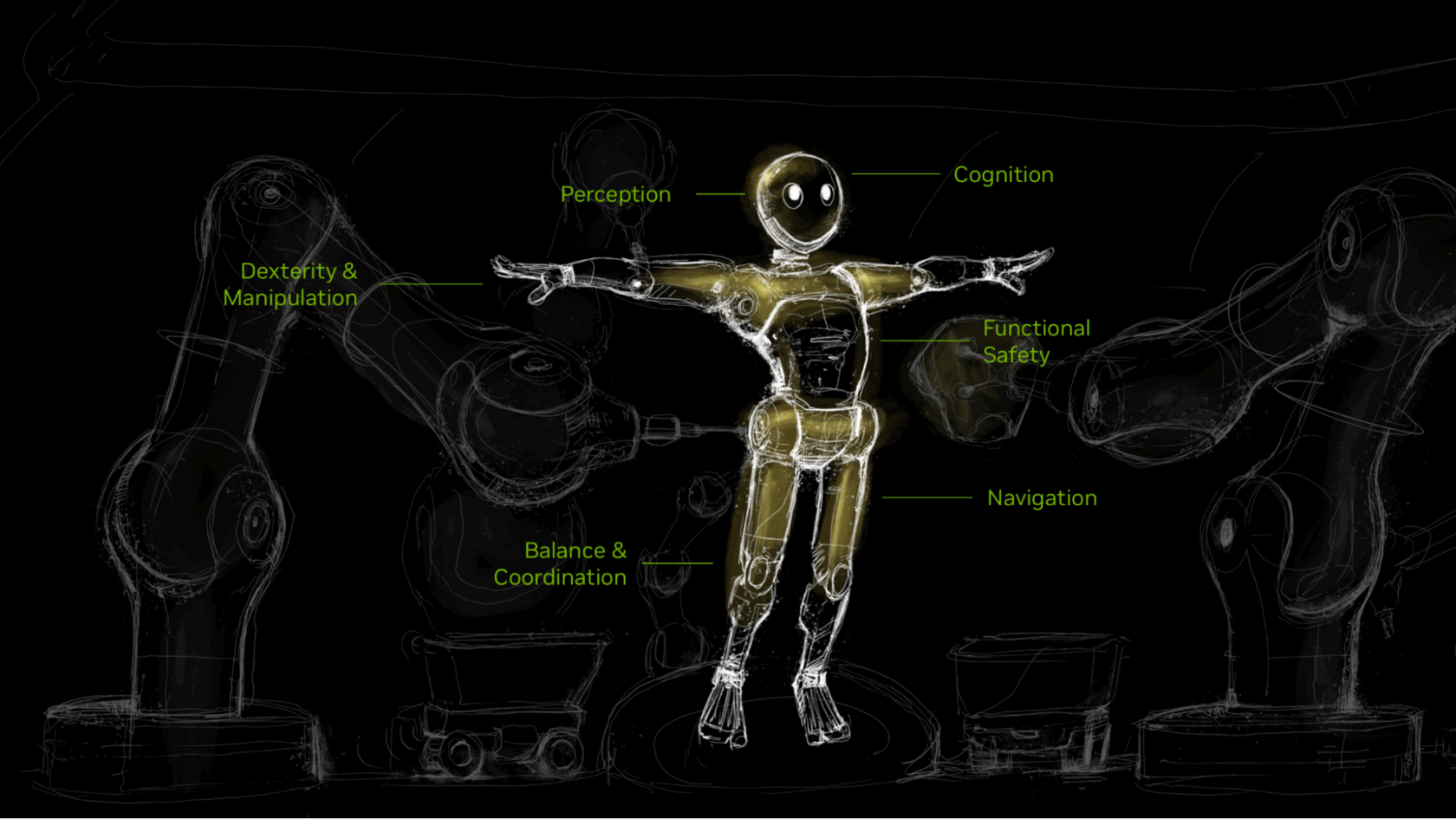

Unlike agentic AI, which operates in digital environments, physical AI refers to end-to-end models that can perceive, reason about, interact with and navigate the physical world.

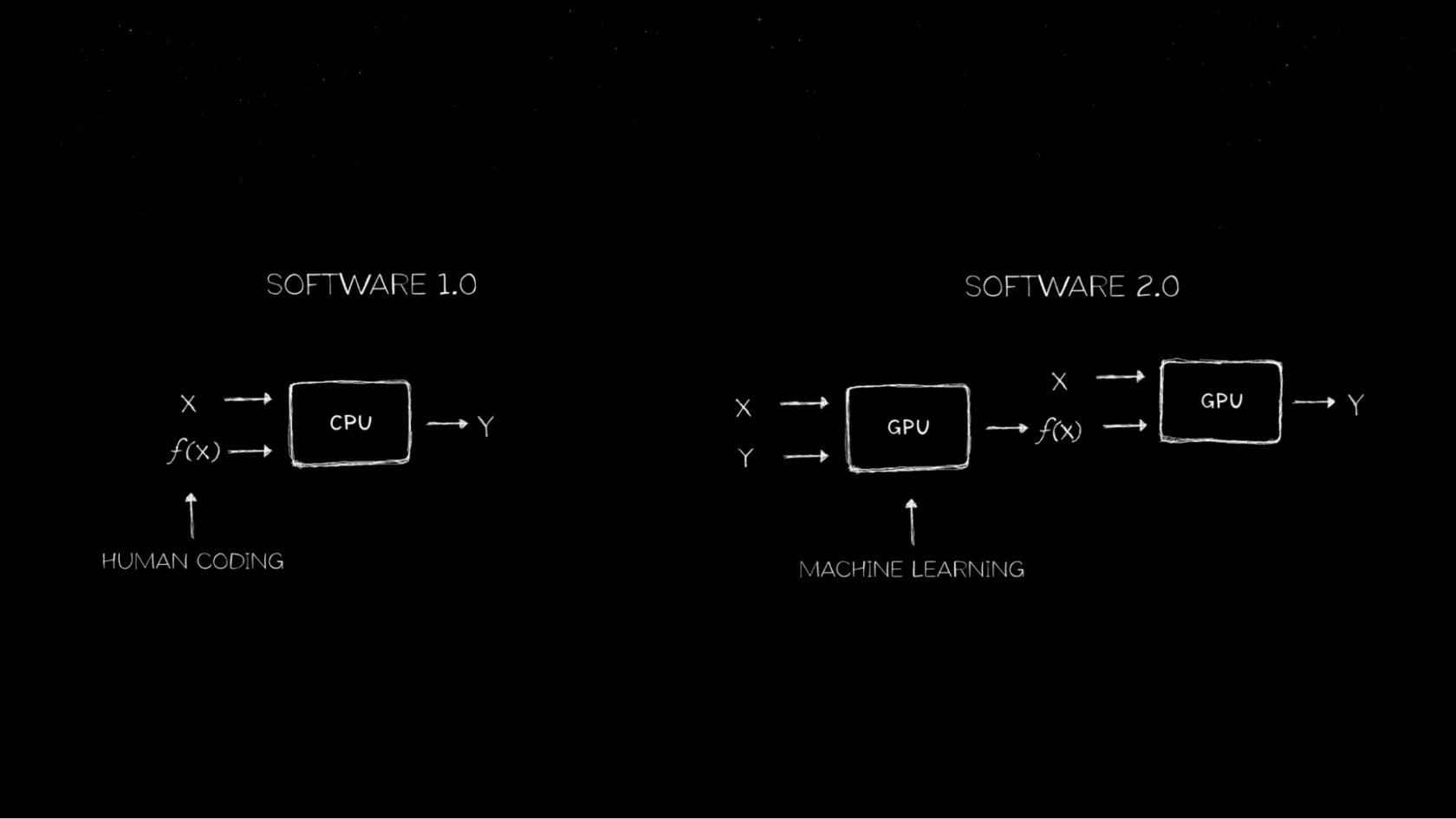

For 60 years, “Software 1.0” — serial code written by human programmers — ran on general-purpose computers powered by CPUs.

Then, in 2012, Alex Krizhevsky, mentored by Ilya Sutskever and Geoffrey Hinton, won the ImageNet computer image recognition competition with AlexNet, a revolutionary deep learning model for image classification.

This marked the industry’s first contact with AI. The breakthrough of machine learning — neural networks running on GPUs — jumpstarted the era of Software 2.0.

Today, software writes software. The world’s computing workloads are shifting from general-purpose computing on CPUs to accelerated computing on GPUs, leaving Moore’s law far behind.

With generative AI, multimodal transformer and diffusion models have been trained to generate responses.

Large language models are one-dimensional — able to predict the next token in modes like letters or words. Image- and video-generation models are two-dimensional, able to predict the next pixel.

None of these models can understand or interpret the 3D world. That’s where physical AI comes in.

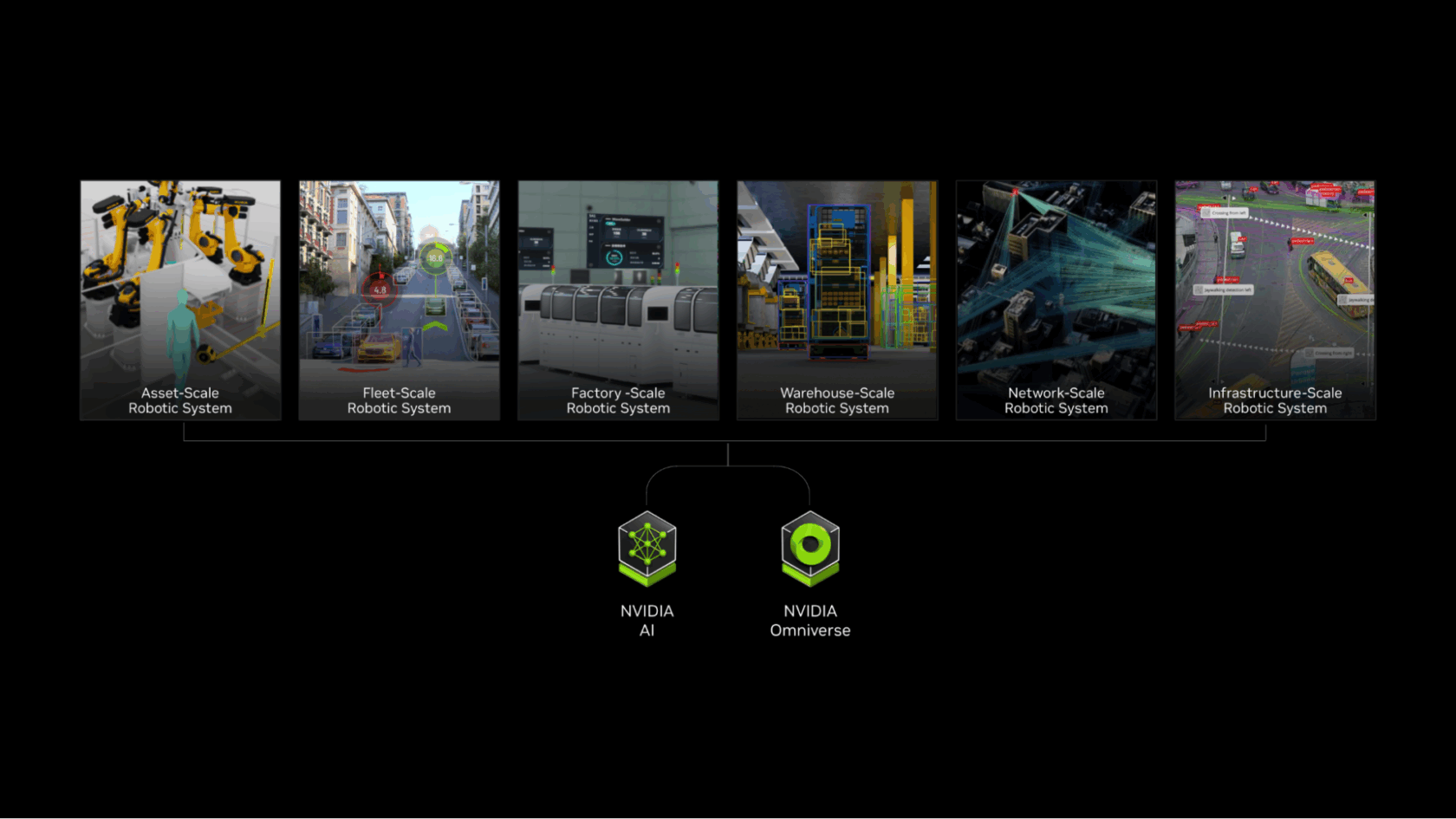

A robot is a system that can perceive, reason, plan, act and learn. Robots are often thought of as autonomous mobile robots (AMRs), manipulator arms or humanoids. But there are many other types of robotic embodiments.

In the near future, everything that moves, or that monitors things that move, will be an autonomous robotic system. These systems will be capable of sensing and responding to their environments.

Everything from autonomous vehicles and surgical rooms to data centers, warehouses, factories, and even traffic-control systems or entire smart cities will transform from static, manually operated systems to autonomous, interactive systems embodied by physical AI.

Why Are Humanoid Robots the Next Frontier?

Humanoid robots are an ideal general-purpose robotic manifestation because they can operate efficiently in environments built for humans while requiring minimal adjustments for deployment and operation.

The global market for humanoid robots is expected to reach $38 billion by 2035, more than six times the roughly $6 billion forecast for the same period nearly two years earlier, according to Goldman Sachs.

Researchers and developers around the world are racing to build this next wave of robots.

How Do NVIDIA’s Three Computers Work Together for Robotics?

Robots learn how to understand the physical world using three distinct types of computational intelligence — each serving a critical role in the development pipeline.

1. Training Computer: NVIDIA DGX

Imagine trying to teach a robot to understand natural language, recognize objects and plan complex movements — all simultaneously. The massive computational power required for this kind of training can only be achieved through specialized supercomputing infrastructure, which is why a training computer is essential.

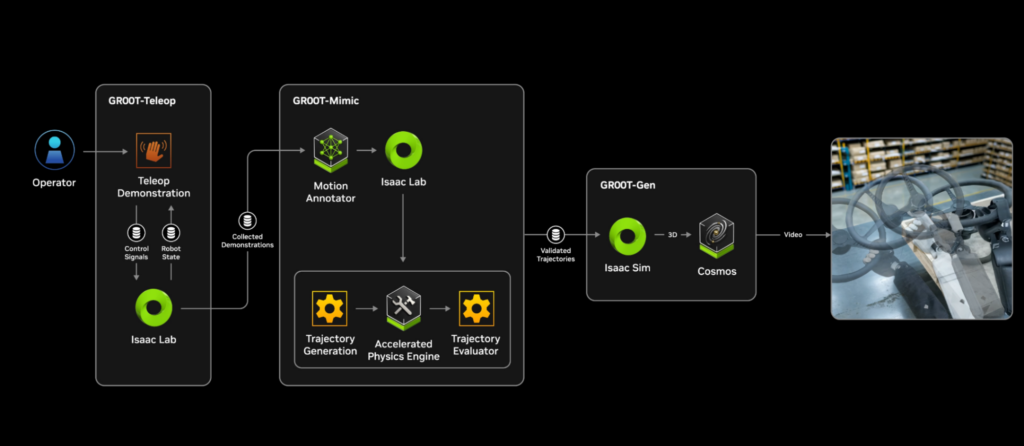

Developers can pre-train their own robot foundation models on the NVIDIA DGX platform, or use NVIDIA Cosmos open world foundation models or NVIDIA Isaac GR00T humanoid robot foundation models as base models for post-training new robot policies.

2. Simulation and Synthetic Data Generation Computer: NVIDIA Omniverse with Cosmos on NVIDIA RTX PRO Servers

The biggest challenge in developing generalist robotics is the data gap. LLM researchers are fortunate to have the world’s internet data at their disposal for pretraining. But this doesn’t exist for physical AI.

Real-world robot data is limited, costly, and difficult to collect, particularly when preparing for edge cases that lie beyond what pretraining can reach. Collecting data is labor intensive, making it expensive and hard to scale.

Developers can use Omniverse and Cosmos to generate massive amounts of physically based, diverse synthetic data (2D or 3D images, segmentation or depth maps, or motion and trajectory data) to bootstrap model training and performance.

To ensure robot models are safe and performant before deploying in the real world, developers need to simulate and test their models in digital twin environments. Open-source frameworks like Isaac Sim, built on Omniverse libraries and running on NVIDIA RTX PRO Servers, enable developers to test their robot policies in simulation, a risk-free environment where robots can repeatedly attempt tasks and learn from mistakes without endangering human safety or risking costly hardware damage.
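The idea of letting a policy attempt a task many times in simulation before it touches hardware can be illustrated with a toy sketch. Everything below is an assumption made for illustration: the 1D track, the two policies and the function names `run_episode` and `evaluate` are hypothetical and are not part of Isaac Sim or any NVIDIA API. The sketch only shows how repeated simulated attempts can expose a flawed policy at no physical cost.

```python
import random


def run_episode(policy, rng: random.Random, max_steps: int = 20) -> bool:
    """One simulated attempt: reach a randomly placed target on a 1D track."""
    position = 0
    target = rng.randint(-10, 10)
    for _ in range(max_steps):
        if position == target:
            return True
        # The policy sees the signed error and returns a displacement.
        position += policy(target - position)
    return position == target


def evaluate(policy, episodes: int = 1000, seed: int = 0) -> float:
    """Return the fraction of simulated episodes in which the policy succeeds."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    successes = sum(run_episode(policy, rng) for _ in range(episodes))
    return successes / episodes


def cautious_policy(error: int) -> int:
    """Hypothetical policy: move one cell toward the target per step."""
    return (error > 0) - (error < 0)


def overshooting_policy(error: int) -> int:
    """Hypothetical flawed policy: move three cells per step and risk overshoot."""
    return 3 * ((error > 0) - (error < 0))


if __name__ == "__main__":
    print("cautious success rate:", evaluate(cautious_policy))
    print("overshooting success rate:", evaluate(overshooting_policy))
```

In this toy setup the cautious policy always reaches the target within the step budget, while the overshooting policy fails whenever the target is not a multiple of three cells away. A real evaluation would replace the 1D track with a physics-based digital twin, but the pattern of many seeded episodes and an aggregate success metric stays the same.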

Researchers and developers can also use NVIDIA Isaac Lab, an open-source robot learning framework that powers robot reinforcement learning and imitation learning, to help accelerate robot policy training.

3. Runtime Computer: NVIDIA Jetson Thor

For safe, effective deployment, physical AI systems require a computer that enables real-time autonomous robot operation with the computational power needed to process sensor data, reason, plan and execute actions within milliseconds.

The on-robot inference computer needs to run multimodal AI reasoning models to enable robots to have real-time, intelligent interactions with people and the physical world. Jetson AGX Thor’s compact design meets onboard AI performance computing and energy efficiency needs while supporting an ensemble of models including control policy, vision and language processing.

How Do Digital Twins Accelerate Robot Development?

Robotic facilities are the culmination of all of these technologies.

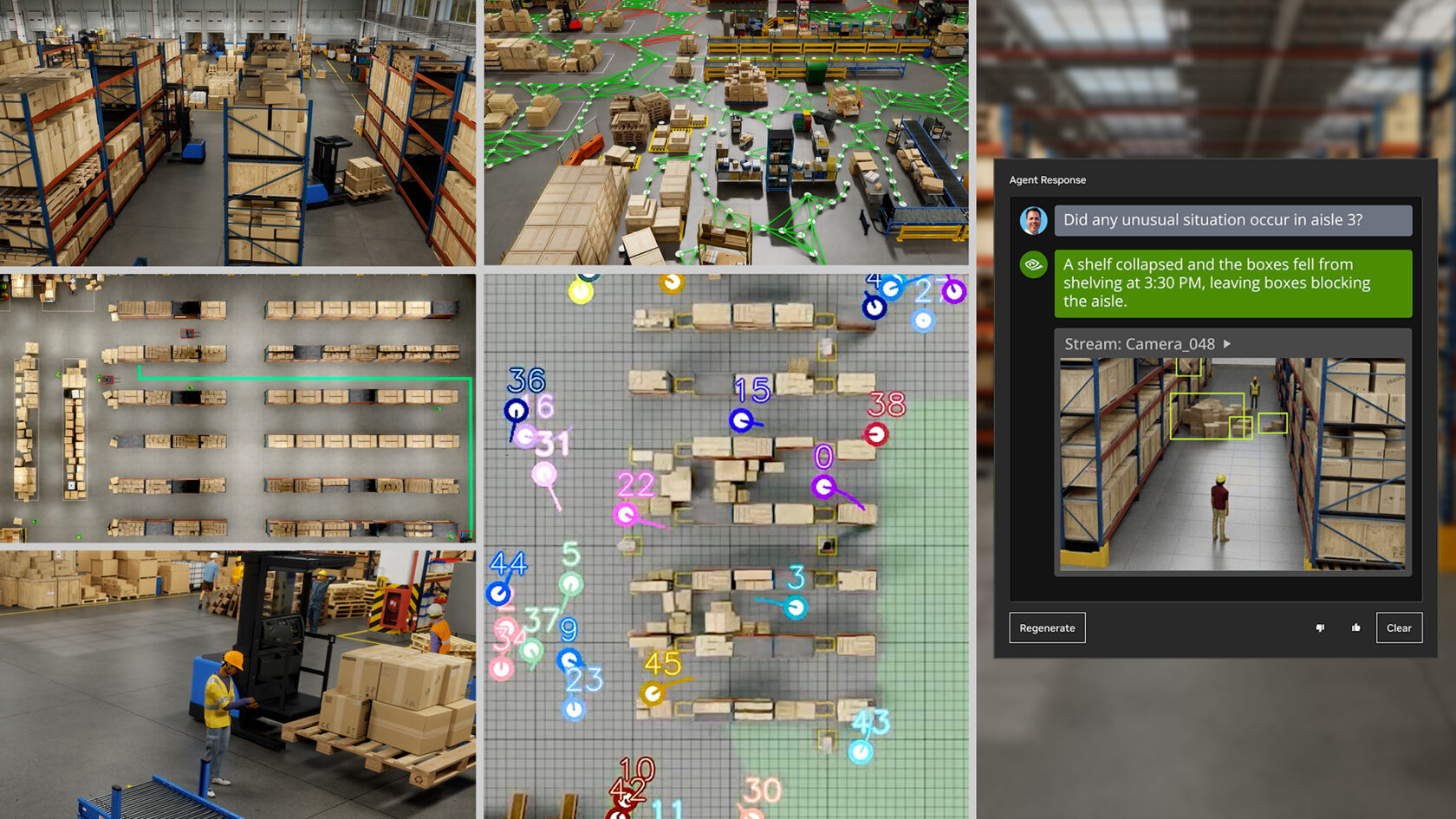

Manufacturers like Foxconn and logistics companies like Amazon Robotics can orchestrate teams of autonomous robots to work alongside human workers and monitor factory operations through hundreds or thousands of sensors.

These autonomous warehouses, plants and factories will have digital twins for layout planning and optimization, operations simulation and, most importantly, robot fleet software-in-the-loop testing.

Built on Omniverse, “Mega” is a blueprint for factory digital twins that enables industrial enterprises to test and optimize their robot fleets in simulation before deploying them to physical factories. This helps ensure seamless integration, optimal performance and minimal disruption.

Mega lets developers populate their factory digital twins with virtual robots and their AI models, or the brains of the robots. Robots in the digital twin execute tasks by perceiving their environment, reasoning, planning their next motion and, finally, completing planned actions.

These actions are simulated in the digital environment by the world simulator in Omniverse, and the results are perceived by the robot brains through Omniverse sensor simulation.

With sensor simulations, the robot brains decide the next action, and the loop continues, all while Mega meticulously tracks the state and position of every element within the factory digital twin.
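The closed loop described above (sensor simulation, robot brain, action, world simulator, tracked state) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Mega's or Omniverse's actual interface: `WorldState`, `simulate_sensors`, `robot_brain`, `step_world` and `run_loop` are hypothetical names, and the "factory" is reduced to one robot on a 1D aisle.

```python
from dataclasses import dataclass


@dataclass
class WorldState:
    """Hypothetical stand-in for the digital twin: one robot on a 1D aisle."""
    robot_position: int
    goal_position: int


def simulate_sensors(world: WorldState) -> dict:
    """Sensor simulation: turn the world state into what the robot perceives."""
    return {"distance_to_goal": world.goal_position - world.robot_position}


def robot_brain(observation: dict) -> int:
    """Toy policy: step one cell toward the goal, or stay put once it is reached."""
    distance = observation["distance_to_goal"]
    if distance == 0:
        return 0
    return 1 if distance > 0 else -1


def step_world(world: WorldState, action: int) -> WorldState:
    """World simulator: apply the action and return the next state."""
    return WorldState(world.robot_position + action, world.goal_position)


def run_loop(world: WorldState, max_steps: int = 100) -> list:
    """Run perceive -> decide -> act -> simulate, recording every state."""
    history = [world]  # the tracked state of the twin at every step
    for _ in range(max_steps):
        observation = simulate_sensors(world)
        action = robot_brain(observation)
        if action == 0:  # goal reached, nothing left to do
            break
        world = step_world(world, action)
        history.append(world)
    return history


if __name__ == "__main__":
    history = run_loop(WorldState(robot_position=0, goal_position=5))
    print([state.robot_position for state in history])  # [0, 1, 2, 3, 4, 5]
```

Keeping the policy, the sensor model and the world step as separate functions mirrors the separation in the text: the robot brain only ever sees simulated sensor output, so the same brain could later be pointed at real sensors without modification.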

This advanced software-in-the-loop testing enables industrial enterprises to simulate and validate changes within the safe confines of an Omniverse digital twin, helping them anticipate and mitigate potential issues to reduce risk and costs during real-world deployment.

What Companies Are Using NVIDIA’s Three Computers for Robotics?

NVIDIA’s three computers are accelerating the work of robotics developers and robot foundation model builders worldwide.

Universal Robots, a Teradyne Robotics company, used NVIDIA Isaac Manipulator, Isaac-accelerated libraries and AI models, and NVIDIA Jetson to build UR AI Accelerator, a hardware and software toolkit that enables cobot developers to build applications, accelerate development and reduce the time to market of AI products.

RGo Robotics used NVIDIA Isaac Perceptor to help its wheel.me AMRs work everywhere, all the time, and make intelligent decisions by giving them humanlike perception and visual-spatial information.

Humanoid robot makers including 1X Technologies, Agility Robotics, Apptronik, Boston Dynamics, Fourier, Galbot, Mentee, Sanctuary AI, Unitree Robotics and XPENG Robotics are adopting NVIDIA’s robotics development platform.

Boston Dynamics is using Isaac Sim and Isaac Lab to build quadrupeds, and Jetson Thor for humanoid robots, to augment human productivity, tackle labor shortages and prioritize safety in warehouses.

Fourier is tapping into Isaac Sim to train humanoid robots to operate in fields such as scientific research, healthcare and manufacturing, which demand high levels of interaction and adaptability.

Using Isaac Lab and Isaac Sim, Galbot advanced the development of a large-scale robotic dexterous grasp dataset called DexGraspNet that can be applied to different dexterous robotic hands, as well as a simulation environment for evaluating dexterous grasping models. The company also uses Jetson Thor for real-time control of the robotic hands.

Field AI developed risk-bounded multitask and multipurpose foundation models for robots to safely operate in outdoor field environments, using the Isaac platform and Isaac Lab.

The Future of Physical AI Across Industries

As global industries expand their robotics use cases, NVIDIA’s three-computer approach to physical AI offers immense potential to enhance human work across industries such as manufacturing, logistics, service and healthcare.

Explore NVIDIA’s robotics platform to get started with training, simulation and deployment tools for physical AI.