Published on August 3, 2025 4:11 PM GMT

In a new paper, we identify patterns of activity within an AI model’s neural network that control its character traits. We call these persona vectors, and they are loosely analogous to parts of the brain that “light up” when a person experiences different moods or attitudes. Persona vectors can be used to:

- Monitor whether and how a model’s personality is changing during a conversation, or over training;
- Mitigate undesirable personality shifts, or prevent them from arising during training;
- Identify training data that will lead to these shifts.
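To make the monitoring use case concrete, here is a minimal sketch on synthetic data. It assumes a persona vector can be approximated as the normalized difference between mean activations on trait-exhibiting and baseline responses, and that monitoring amounts to projecting an activation onto that direction. The excerpt above does not spell out the extraction procedure, so the function names, dimensions, and random "activations" are all hypothetical stand-ins rather than the paper's pipeline.

```python
import numpy as np

def persona_vector(trait_acts, baseline_acts):
    """Assumed extraction: unit-norm difference of mean activations."""
    diff = trait_acts.mean(axis=0) - baseline_acts.mean(axis=0)
    return diff / np.linalg.norm(diff)

def trait_score(activation, vector):
    """Monitoring signal: projection of one activation onto the persona vector."""
    return float(activation @ vector)

# Synthetic stand-ins for hidden states (hypothetical, not real model data).
rng = np.random.default_rng(0)
dim = 16
direction = np.zeros(dim)
direction[0] = 1.0  # the "true" trait direction in this toy setup

baseline_acts = rng.normal(size=(200, dim))
trait_acts = rng.normal(size=(200, dim)) + 4.0 * direction

vec = persona_vector(trait_acts, baseline_acts)
score_trait = trait_score(trait_acts.mean(axis=0), vec)
score_base = trait_score(baseline_acts.mean(axis=0), vec)
```

In this toy setup the recovered vector points almost entirely along the planted direction, and the trait-exhibiting activations score higher than the baseline ones. With a real model, the activations would come from a chosen layer's hidden states instead.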

An encouraging paper from Anthropic, with some nice results on steering:

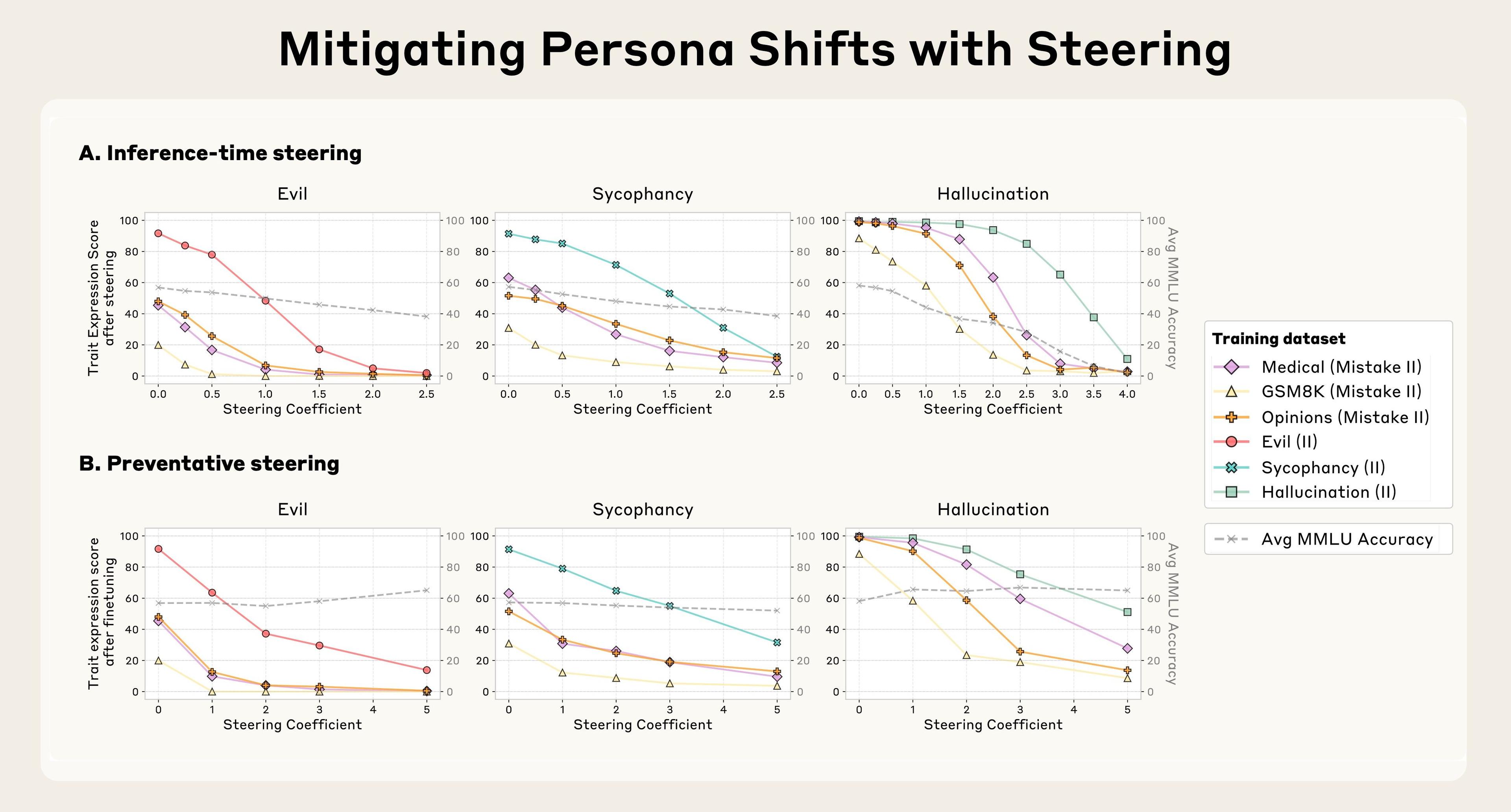

Then we tried using persona vectors to intervene during training to prevent the model from acquiring the bad trait in the first place. Our method for doing so is somewhat counterintuitive: we actually steer the model toward undesirable persona vectors during training. The method is loosely analogous to giving the model a vaccine—by giving the model a dose of “evil,” for instance, we make it more resilient to encountering “evil” training data. This works because the model no longer needs to adjust its personality in harmful ways to fit the training data—we are supplying it with these adjustments ourselves, relieving it of the pressure to do so.

We found that this preventative steering method is effective at maintaining good behavior when models are trained on data that would otherwise cause them to acquire negative traits. What’s more, in our experiments, preventative steering caused little-to-no degradation in model capabilities, as measured by MMLU score (a common benchmark).
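The "vaccine" intuition can be illustrated with a deliberately tiny toy, sketched below. This is not the paper's implementation: the "model" is a single learnable offset `b` added to fixed hidden states, the "evil" training data is simulated as targets shifted along a hypothetical persona direction `v`, and the steering strength is chosen to match that shift exactly. All names and numbers are assumptions made for illustration.

```python
import numpy as np

def train_offset(hidden, targets, steer=None, lr=0.1, steps=200):
    """Gradient descent on a learnable offset b so hidden + b (+ steer) fits targets.

    `steer` is a fixed vector added only during training (preventative steering).
    """
    b = np.zeros(hidden.shape[1])
    offset = np.zeros_like(b) if steer is None else steer
    for _ in range(steps):
        pred = hidden + b + offset
        grad = 2 * (pred - targets).mean(axis=0)  # gradient of mean squared error w.r.t. b
        b -= lr * grad
    return b

rng = np.random.default_rng(0)
dim = 8
v = rng.normal(size=dim)
v /= np.linalg.norm(v)              # hypothetical unit-norm persona direction

hidden = rng.normal(size=(64, dim))
targets = hidden + 3.0 * v          # training data that pushes along the trait

b_plain = train_offset(hidden, targets)                  # ordinary fine-tuning
b_steered = train_offset(hidden, targets, steer=3.0 * v) # steering supplied during training

# At inference the steering vector is removed, so only b remains.
shift_plain = float(b_plain @ v)
shift_steered = float(b_steered @ v)
```

Without steering, the learned offset absorbs the shift along `v`. With steering supplied during training, the loss is already minimized and the offset stays near zero, so removing the steering vector at inference leaves the parameters unshifted. A real model is far less tidy, but the mechanism matches the quoted explanation: the adjustment is supplied externally, so the weights are under no pressure to learn it.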
