Published on July 31, 2025 10:04 PM GMT

*Please note that AIWI only evaluates the transparency of the policy and outcome reporting—not the content or quality of the underlying system, protections, culture, or past patterns of retaliation.

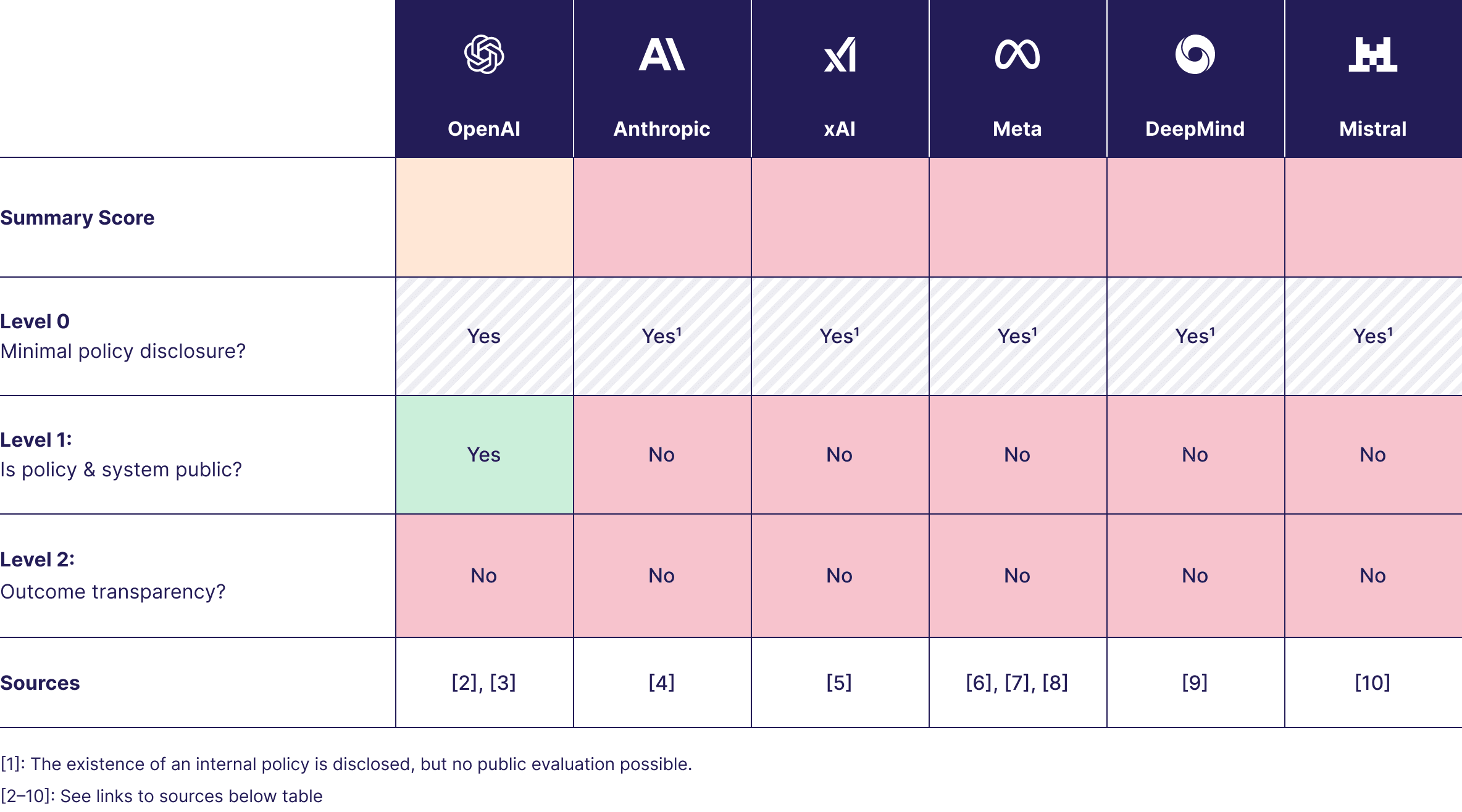

Frontier AI companies currently lag global standards and best practices in creating adequate transparency around their internal whistleblowing systems: 5 out of 6 companies in our target set do not even publish their whistleblowing policies.

That means we, the public, and employees in AI, are forced to 'trust' that companies will address concerns well internally.

This is far from good enough

...and why we, at the National Whistleblower Day Event in Washington DC yesterday, launched a campaign asking AI companies to publish their internal whistleblowing policies ("Level 1") and reports on their whistleblowing system performance, effectiveness, and outcomes ("Level 2").

We are very proud of the coalition we have the privilege of representing here - uniting most of the world's most prominent whistleblowing organizations and scholars with equally prominent AI counterparts.

See the full list of signatories further below or on our campaign page.

This Post

You can find the actual campaign page, including evidence and sources, here: https://publishyourpolicies.org/

In this post I'll share the same message with a slightly altered 'storyline'.

Why This Matters Now

I don't have to make the case here for why we should care about the way AI companies go about development and deployment of their frontier models - especially over the coming years.

Likewise, if you've seen righttowarn, you're likely aware of this line of reasoning: Many risks will only be visible to insiders. The current black-box nature of AI development means employees are often the first—and potentially only—people positioned to spot dangerous developments, misconduct, or safety shortcuts.

It therefore matters that AI companies build up, already today, the infrastructure required to address the concerns being raised, and that we can enter a 'race to the top' on system quality as soon as possible.

Transparency on internal whistleblowing systems, which allows for public feedback and empowers employees to understand and compare protections, is the mechanism for entering that 'race to the top'.

Important note 1: We are talking about company internal whistleblowing systems here (although they can extend arbitrarily far in terms of 'covered persons', e.g. to suppliers, customers, etc.). This does NOT diminish the importance of legal protections for AI whistleblowers or independent support offerings for insiders.

But the reality is (see below) that we expect the majority of risks to be flagged internally first. That means internal channels are critical and must not be neglected. If you like the 'Swiss cheese model' of risk management: we want to make sure protections are as strong as possible at every level.

Important note 2: Both in this post and our main post, we are not evaluating policy or system quality. We only talk about the degree of transparency provided.

The Case for Transparency

1. Insiders Are Uniquely Positioned

Current and former AI employees have recognized that they are "among the few people who can hold [companies] accountable to the public." They've called for companies to "facilitate a verifiably anonymous process for current and former employees to raise risk-related concerns to the company's board, to regulators, and to an appropriate independent organization."

Research consistently shows that employees are often the first to recognize potential wrongdoing or risk of harm. In AI specifically, the technical complexity and proprietary nature of development means many risks are only visible to those with internal access.

2. Internal Channels Are a Major Path

Data from the SEC Whistleblower Program shows that three-quarters of award recipients initially attempted to address concerns within their organizations before seeking external remedies. Employees naturally try internal channels first, and we expect this to be no different in frontier AI companies:

- Nature of work: Research & Engineering work relies on discussion. It is standard practice for concerns to be escalated internally, especially if they are less 'clear cut' and less independently identifiable than, e.g., accounting fraud or bribery (which, however, are still in scope of whistleblowing policies).
- Culture: Addressing concerns internally first is a common part of many Silicon Valley organizations.

This means that these systems must work reliably: When internal systems fail, we all lose. Companies miss opportunities to address problems early, employees face unnecessary risks, and the public remains unaware of safety issues until they potentially become crises.

3. Current Systems Are Opaque and Potentially Broken

Major AI companies have not published their whistleblowing policies. The recent Future of Life Institute AI Safety Index highlighted that Anthropic, Google DeepMind, xAI, and Mistral lack public whistleblowing policies, making neutral assessment impossible. They, likewise, call for the publication of policies.

OpenAI is the sole exception—and they only published their policy following public pressure over their restrictive non-disparagement clauses. Even then, none of the major AI companies publish effectiveness metrics or outcome data.

This stands in stark contrast to other industries. Companies across sectors routinely publish whistleblowing policies—from AI-related organizations like ASML to industrial firms like Tata Steel to financial services companies. Many also publish regular effectiveness evaluations and outcome statistics.

Conversations with insiders also reveal gaps:

Employee Awareness: Interviews with current and former frontier AI company insiders show that many employees don't know, understand, or trust their companies' internal reporting systems. As one insider told us: "I'm not well-informed about our company's whistleblowing procedures (and it feels uncomfortable to inquire about them directly)."

Trust Deficit: AI employees suspect that making reports would be ineffective or could make their work lives more difficult. Another insider shared: "I anticipate that using official reporting channels would likely result in subtle, indirect consequences rather than overt retaliation like termination."

History of Retaliation: AI companies have attempted to suppress individuals voicing concerns (OpenAI's restrictive NDAs) and have faced cases around alleged wrongful termination for speaking up on research misconduct (Google).

We also have good reason to believe that multiple companies' internal whistleblowing policies are currently in violation of the EU Whistleblowing Directive. If you are interested: Happy to provide details via DM.

It might still be the case that certain systems are working relatively well today (at least for one of the organizations in the set we have an 'okay' impression based on conversations with individuals) - but the reality is that neither insiders nor we know.

Every insider we have spoken with so far supports the publication of whistleblowing policies. If you are an insider and you don't, please reach out and share your thoughts with us (or comment below).

4. Transparency Enables Verification and Improvement

Without published policies & outcome transparency, the public cannot assess whether internal systems actually protect employees who raise safety concerns.

Employees cannot compare protections across companies when making career decisions.

Policymakers cannot identify coverage gaps or craft appropriate regulations.

Companies benefit from improved systems through public feedback and heightened employee awareness. Empirical evidence shows that there is a strong 'business case' for improved speak-up cultures and whistleblowing systems, from improved innovation to increased employee loyalty. This is why, for example, shareholder representatives have called on Google to improve its whistleblowing systems.

5. This information vacuum serves no legitimate purpose

We are only calling for transparency, which should create no major workload for companies. If it does, then maybe that means there were things to be improved upon.

Whistleblowing policies contain procedural frameworks and legal guarantees—not trade secrets or competitive advantages. There's no business case for secrecy, but substantial evidence for the benefits of transparency.

If companies truly care about developing a strong speak-up culture and protecting those who live it: Publish. Your. Policies.

What We're Asking For

We're calling on AI companies to meet two levels of transparency [this is an excerpt - see campaign page for details]:

Level 1: Policy Transparency (minimum baseline)

- Publish complete whistleblowing policy documents
- Clearly define scope of protected individuals and covered wrongdoing
- Outline reporting channels, investigation procedures, and timelines
- Specify protection and support measures
- Detail independence guarantees and implementation

Level 2: Effectiveness Transparency (what companies should strive for)

- Publish metrics on reports received, resolved, and outcomes
- Share data on retaliation complaints and whistleblower satisfaction
- Report on employee awareness, understanding, and trust levels
- Document regular system reviews and improvements
- Conduct and publish results of independent effectiveness audits

Companies that take whistleblowing seriously should already gather this data for continuous improvement.

Publication is simply a matter of transparency.

The Coalition

This call is supported by a broad coalition of scholars, AI safety organizations, and whistleblowing advocacy groups:

Organizations:

- Blueprint for Free Speech
- Center for AI Policy
- CARMA (Centre for AI Risk Management & Alignment)
- Convergence Analysis
- Encode AI
- Fathom
- Government Accountability Project
- Human Rights Law Centre
- LASST
- Legal Safety Lab
- National Whistleblower Center
- Psst
- Pour Demain
- Safer AI
- Secure AI Project
- Future of Life Institute
- The Future Society
- The Midas Project
- The Signals Network
- Transparency International
- WHISPeR
- Whistleblower Netzwerk
- Whistleblower Partners LLP
- Whistleblower International Network

Academic Signatories:

- Dimitrios Kafteranis, University of Coventry
- Jessica Newman, AI Security Initiative, UC Berkeley
- Kartik Hosanagar, Wharton Business School
- Lawrence Lessig, Harvard Law School
- Nathan Labenz, Cognitive Revolution
- Peter Salib, University of Houston Law Center
- Roman Yampolskiy, University of Louisville
- Simon Gerdemann, University of Goettingen
- Stuart Russell, University of California, Berkeley
- Wim Vandekerckhove, EDHEC Business School

Moving Forward

This campaign offers an opportunity for AI companies to demonstrate commitment to integrity cultures where flagging risks is a normal and expected responsibility.

We're not asking companies to reveal competitive secrets—we're asking them to show they're serious about the concern systems they claim to have. Transparency costs nothing but builds everything.

The stakes are too high for "trust us" to be enough. When AI companies publicly acknowledge existential risks, they must also demonstrate that employees can safely report concerns about those risks.

What you can do

If you believe our call is sensible and you are...

- An insider at an AI Company: Ask your management why they are not publishing their policies. Share our call with them.
- A leader of an AI Company: You can lead the charge! A strong speak-up culture benefits your employees, shareholders, and you (unless you'd prefer risks to be hidden until it's too late): We can be in the same boat, if you genuinely care about protecting those speaking up. If you credibly commit to Level 2: We will commend you for it.
- An outsider: Spread the word. Every share gets us closer to transparency and a world where insiders in AI can raise their concerns as they see them.

We might also announce a second round of signatories. Contact us if you would like to be on this list.

Join the campaign: https://aiwi.org/publishyourpolicies/

Contact: For questions or to add your organization's support, reach out through the campaign website.

This campaign is led by The AI Whistleblower Initiative (AIWI, formerly OAISIS), an independent, nonpartisan, nonprofit organization supporting whistleblowers in AI.
