Published on July 24, 2025 6:48 AM GMT

This is a retrospective on a week-long AI alignment retreat we ran in Ooty, India.

We hope this report will be useful to other organisers planning similar events. It is also aimed at those who could not make it to the event and want to know what happened.

We welcome feedback on how we can improve.

If you are interested in collaborating on any of these projects or want to learn more, please contact Aditya or Bhishma!

This document is a work in progress. Depending on interest, we can coordinate with participants and add more details.

Objectives

- Bootstrap a community to mitigate gradual disempowerment and foster social bonding
- Better understand AI capabilities and threat models by interacting and building with current tools
- Cultivate embodiment, practice integrity in our beliefs around AI and what progress means

Key Takeaways

- We had 25 applications and selected 15 after video calls to confirm enthusiasm, fit and alignment on expectations. Both organizers did independent calls to reduce bias. Each member contributed around 35 USD and was provided three meals per day for the seven days from Monday the 23rd to the 29th of June. Some participants wished they had been informed of the dates and schedule at least a month in advance so they could clear their week and fully participate. The mid-week survey indicated people found it highly useful compared to the counterfactual.
- It really helped to empower participants to take ownership; the key was to inspire others by setting the tone.
- We often got into meta-level discussions, and tools like the social timer helped keep track of time.
- In the future we hope to plan collaborations with local NGOs and help the disempowered near the in-person location, which makes the discussion around gradual disempowerment more real.
- There was interest in the history of alignment, the conflict around how these words are used and what they mean, and the conflicts of interest around the funding landscape and personal relationships in AI safety. Planning an event around this will likely be fruitful.

Achievements we are proud of!

- We noticed that people sometimes got really passionate about the points they were making and were unaware of how long they had been talking. We realised it would be cool to quickly create a social timer that helps us time-box conversations and stay on topic without going too deep on tangents that might not be optimal for the group. We tried Firebase, Replit and AI Studio; in the end Amruth’s prompts with Replit won and produced a usable app.
- So many active projects are still ongoing even after the week-long event:
  - X School - planning education for a world where intelligence is cheap. What skills matter in a post-AGI world?
  - TTX - building the app for simulating how stakeholders respond to crises
  - Weekend meetups and events in Portal, Bangalore
  - Collaborative AGI scenarios document with identified cruxes
  - Group predictions on Manifold Markets
  - Planning Horocrux for Humanity - how to survive riots, flash freeze food, self sufficiency
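The social timer idea mentioned in the list above can be illustrated with a small sketch. This is not the app that was built at the retreat: the class name `SocialTimer`, its methods and the 120-second box are all hypothetical, chosen only to show how per-speaker time-boxing might work. Timestamps are passed in explicitly (for example from `time.monotonic()`) so the logic stays easy to test.

```python
from collections import defaultdict


class SocialTimer:
    """Hypothetical time-box tracker: who is speaking, and for how long."""

    def __init__(self, box_seconds: float):
        self.box_seconds = box_seconds      # allowed length of a single turn
        self.totals = defaultdict(float)    # cumulative talk time per speaker
        self._speaker = None
        self._started_at = None

    def start_turn(self, speaker: str, now: float) -> None:
        """Close any running turn, then start a new one for `speaker`."""
        self.end_turn(now)
        self._speaker = speaker
        self._started_at = now

    def end_turn(self, now: float) -> None:
        """Add the running turn (if any) to that speaker's total."""
        if self._speaker is not None:
            self.totals[self._speaker] += now - self._started_at
        self._speaker = None
        self._started_at = None

    def over_box(self, now: float) -> bool:
        """True if the current speaker has exceeded the time box this turn."""
        return (
            self._speaker is not None
            and (now - self._started_at) > self.box_seconds
        )


# Example with made-up timestamps (seconds since the session started).
timer = SocialTimer(box_seconds=120)
timer.start_turn("A", now=0)
nudge_needed = timer.over_box(now=150)   # A has been talking for 150 s
timer.start_turn("B", now=150)
timer.end_turn(now=200)
```

Keeping cumulative totals alongside the per-turn check is one possible design choice: the per-turn check supports a gentle nudge in the moment, while the totals show how evenly airtime was shared over a whole session.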

We saw the IMO gold coming! The prediction markets session was a great way to operationalise precise claims about what AI cannot do in the near future.

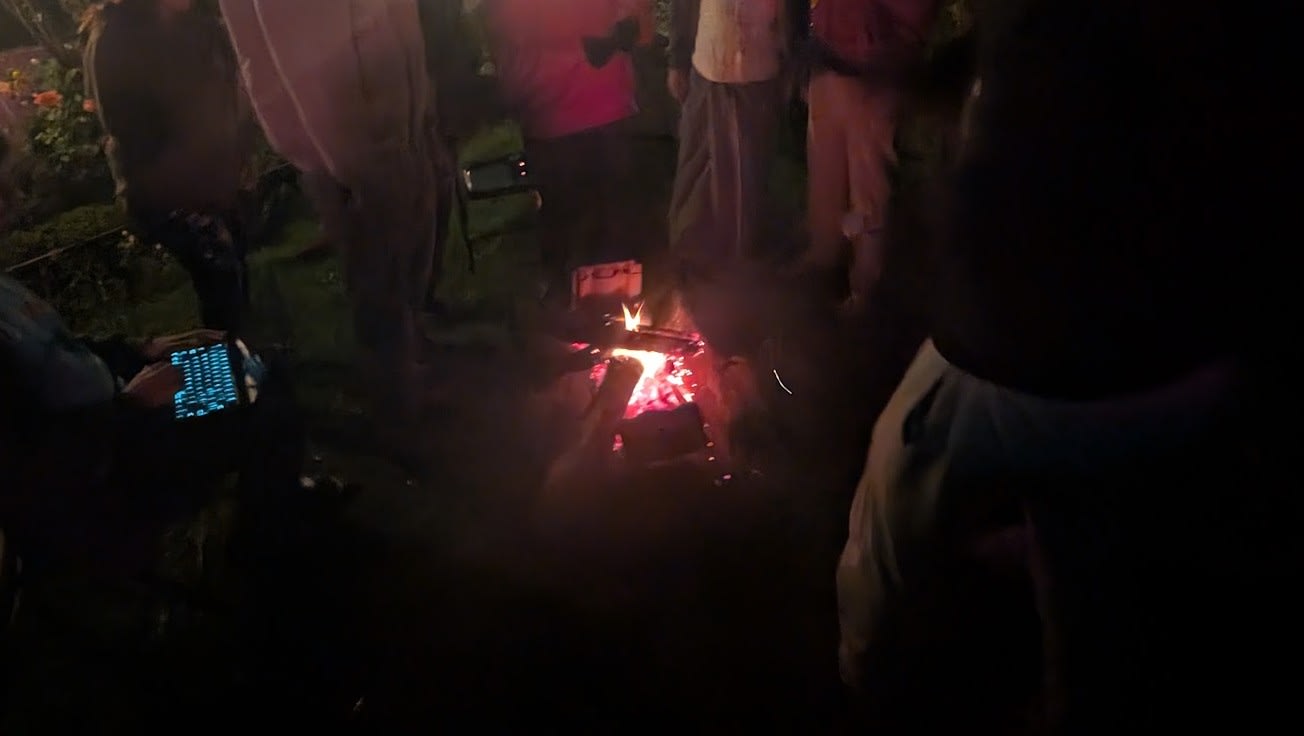

The campfire sessions and outdoor circling created particularly conducive environments for honest conversation. We had unstructured time to allow organic conversations to happen.

Vatsal's Bayesian workshop stood out: participants actually updated their probabilities with new evidence and converged via group discussions, with forecasts judged by Brier scores. This concrete, interactive format was more valuable than abstract talks.
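For readers unfamiliar with the mechanics, here is a minimal sketch of the two calculations such a workshop relies on: a Bayesian update for a binary hypothesis and the Brier score of a set of forecasts. The function names and all the numbers are illustrative assumptions, not data from the workshop.

```python
def bayes_update(prior: float, p_evidence_if_true: float,
                 p_evidence_if_false: float) -> float:
    """Posterior P(H | E) for a binary hypothesis H, via Bayes' rule."""
    numerator = prior * p_evidence_if_true
    denominator = numerator + (1.0 - prior) * p_evidence_if_false
    return numerator / denominator


def brier_score(forecasts: list[float], outcomes: list[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 outcomes.

    0.0 is a perfect score; always answering 0.5 scores 0.25.
    """
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must be non-empty and equal length")
    squared_errors = [(f - o) ** 2 for f, o in zip(forecasts, outcomes)]
    return sum(squared_errors) / len(squared_errors)


# Made-up example: start at 30%, then see evidence that is four times
# more likely if the hypothesis is true than if it is false.
posterior = bayes_update(prior=0.30, p_evidence_if_true=0.80,
                         p_evidence_if_false=0.20)

# Made-up forecasts on three questions that resolved yes, no, yes.
score = brier_score([0.9, 0.2, 0.6], [1, 0, 1])
```

With these made-up inputs the posterior is 0.24 / 0.38, roughly 0.63, and the Brier score is (0.01 + 0.04 + 0.16) / 3 = 0.07.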

We mostly avoided the "larping" trap that plagues many meetups. Discussions felt authentic rather than performative. People weren't trying to sound smart or repeat cached arguments. And we generally succeeded in setting a safe space for participants to share their honest thoughts.

Gallery

Participants busy vibe coding

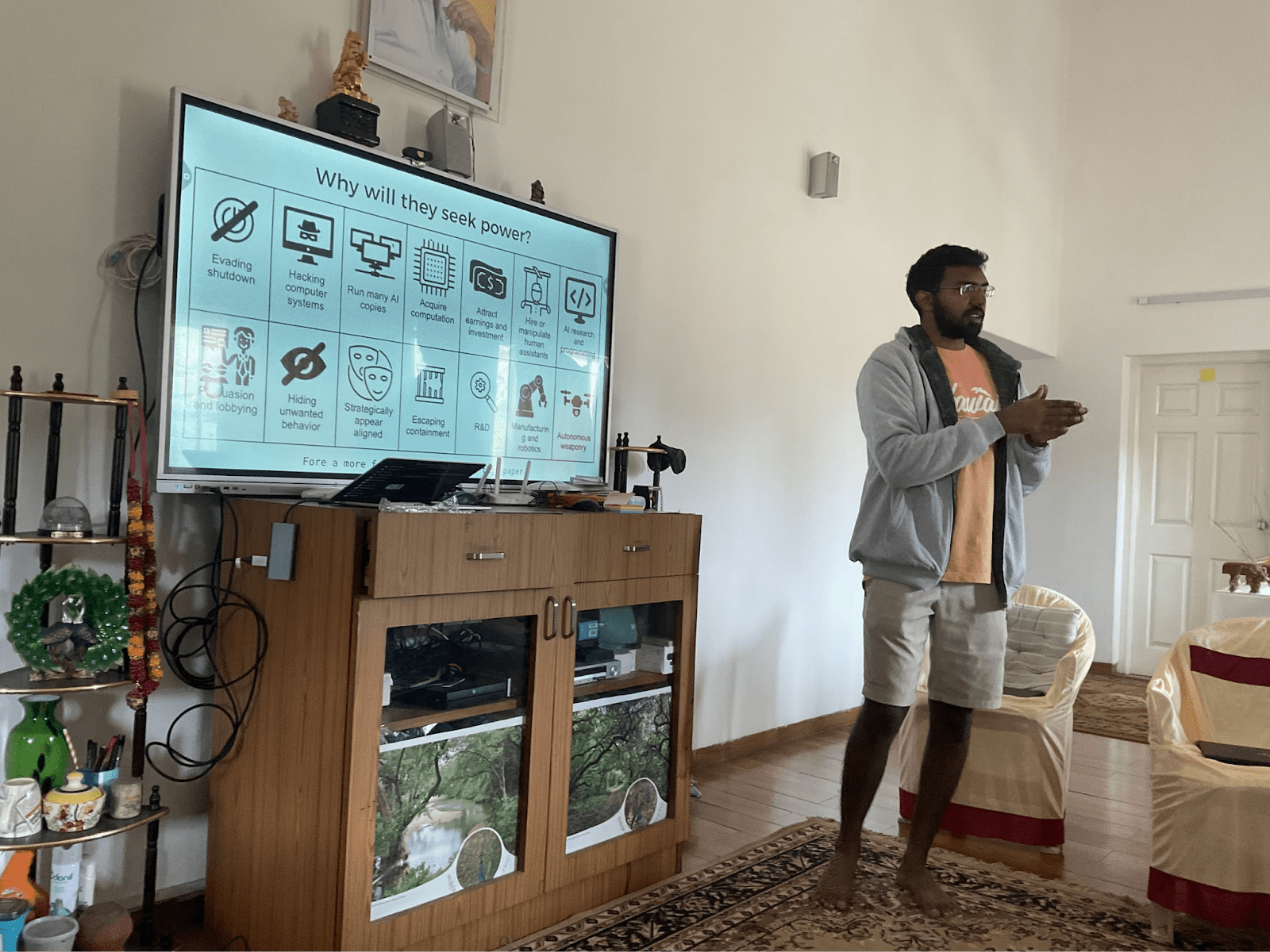

Bhishma and Aditya’s talk on intro to AI safety

View from Cairn hill

Hiking

Nature mindfulness

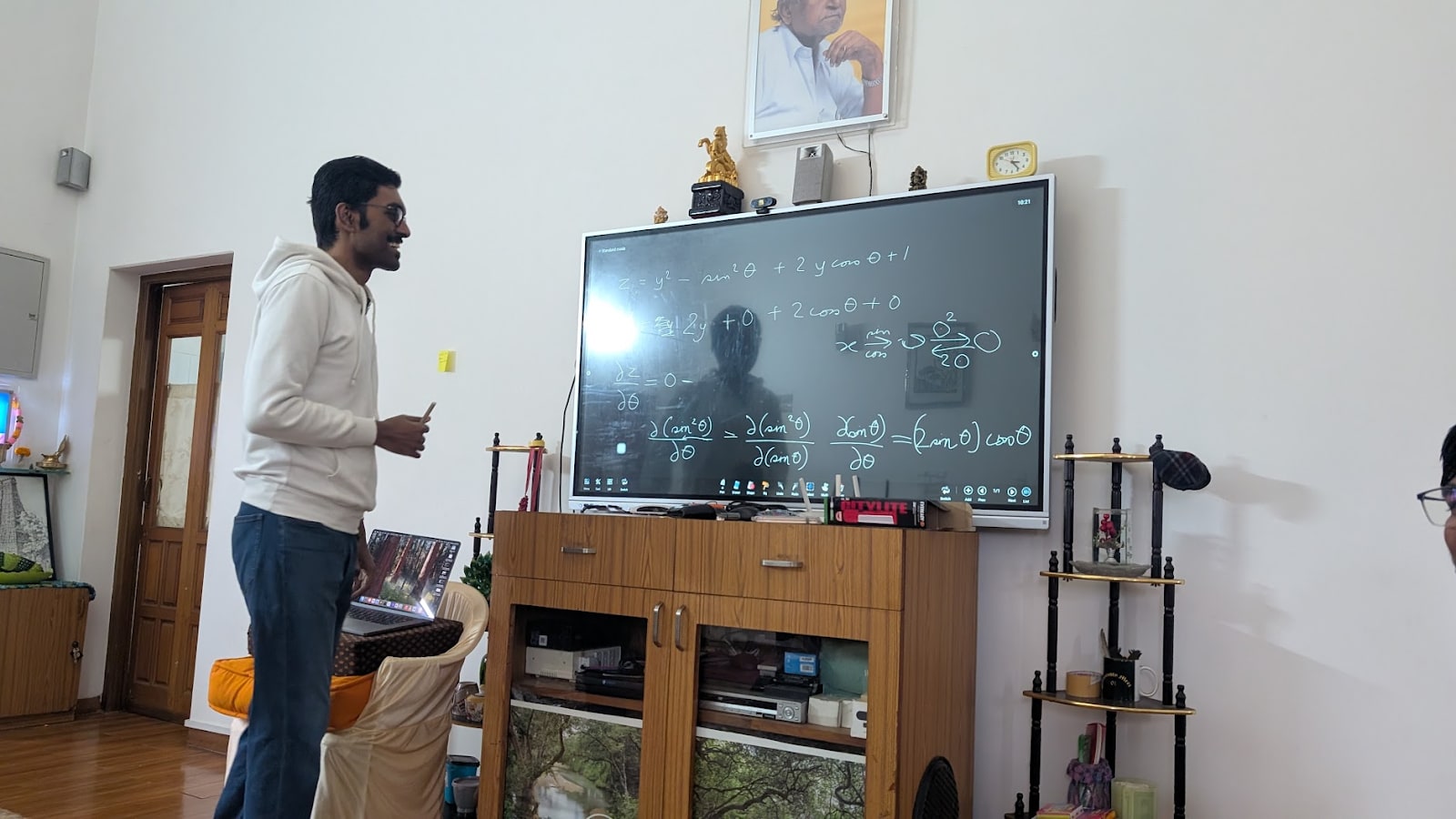

Sam’s intro to ML and transformers talk

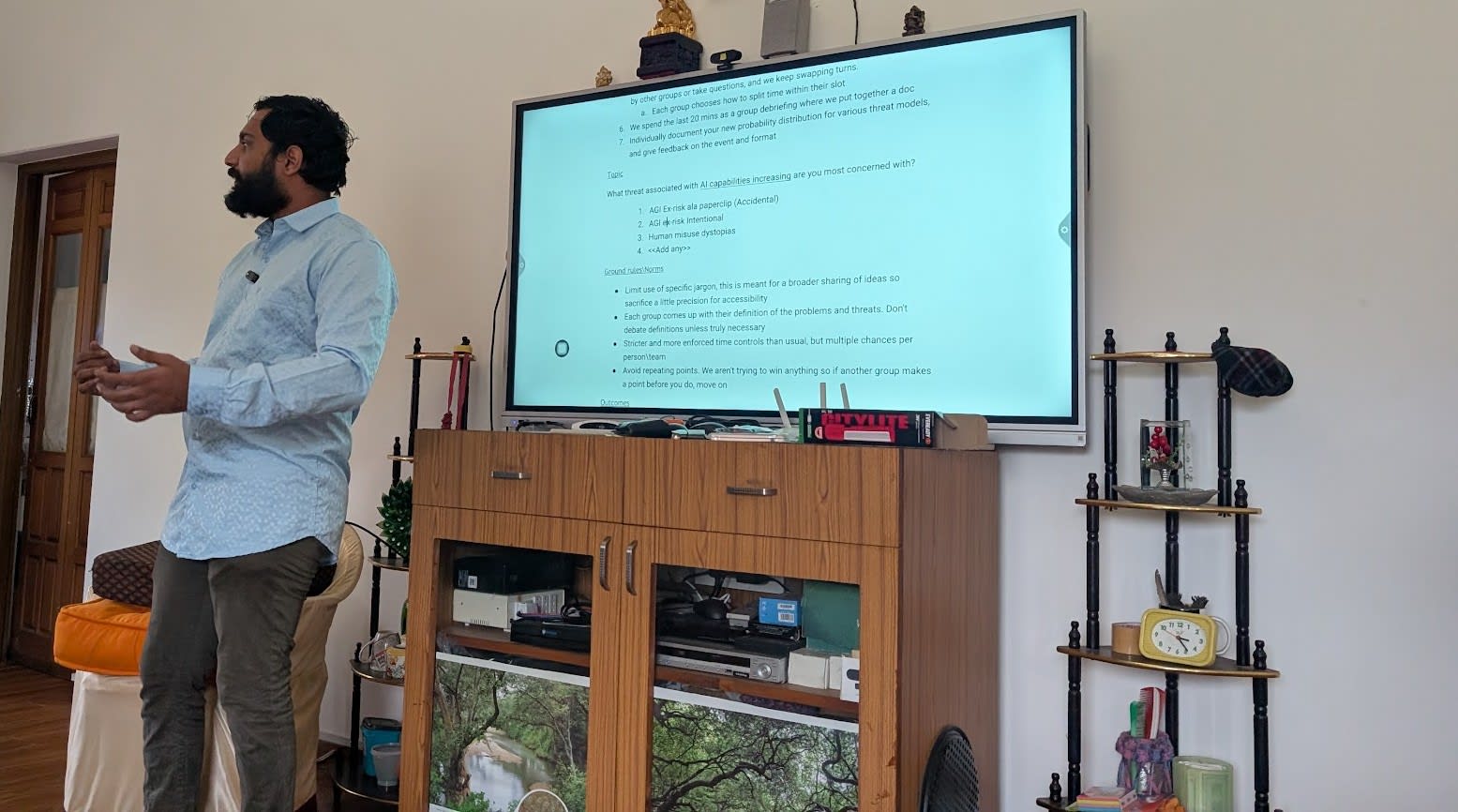

Discussion session on intentional vs gradual disempowerment risks from AI

Adiga’s session on live conversation threads

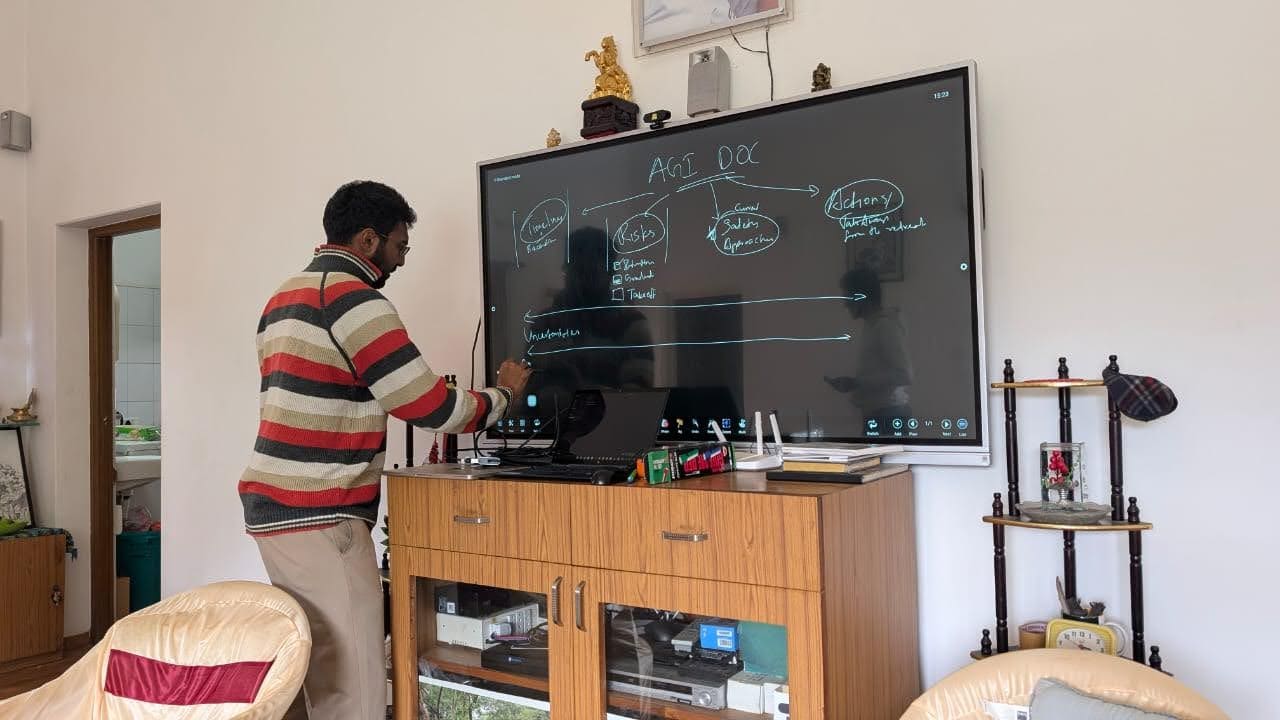

Bhishma working on AGI doc

Aditya’s talk about his experience in MAPLE

Movie night (The Man from Earth)

Intro to AI safety talk

Hiking in the woods

What happened, the nitty gritties

A zoomed out view

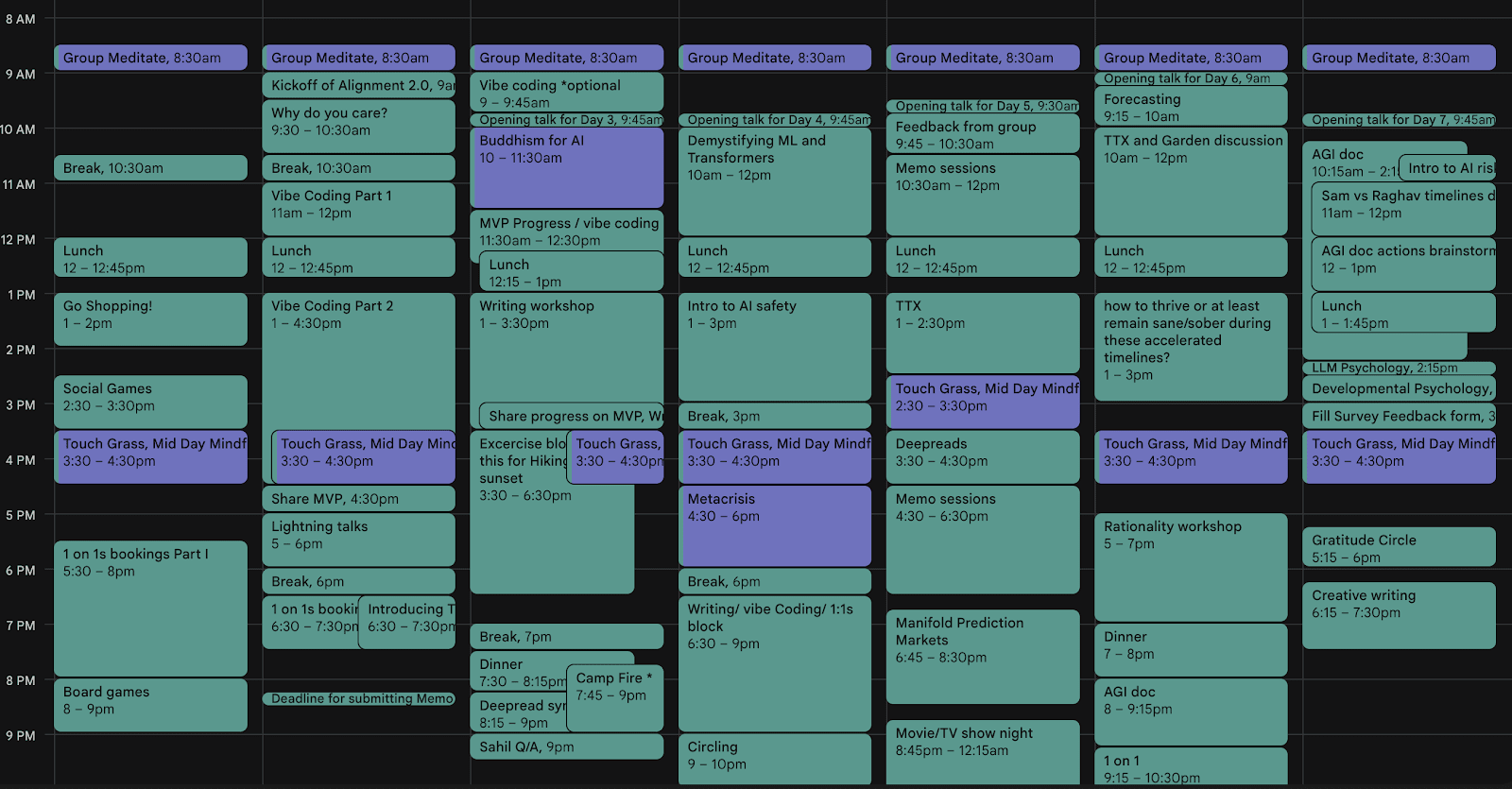

Calendar - Week View

The specific events that happened are listed below:

| Event Name | Details | What Went Well | What could have been better |

| Group Meditation | Daily 8:30 AM meditation sessions | Those who attended it found it super impactful | Most people did not wake up for it, it was irregular due to it being voluntary |

| Group Yoga sessions | Guided by an in-person teacher for more than an hour | It pushed our bodies to their limits, set the tone for the whole day, was refreshing | Most people did not wake up for it, so it was a small group; we found out too late how great doing it outdoors was |

| Vibe Coding Workshops | Multi-day coding sessions building MVPs | Produced working apps (group timer, TTX); tight feedback loops; hands-on learning | We underestimated how much time to allocate and participants had uneven prereqs |

| Buddhism for AI | Reimagining AI as not being separate from humans, elaborating on MAPLE’s vision | Sparked questions about the hard problem of alignment, deconfusing values/agency | People reported still feeling confused; we did not finish completely; too many new tangents |

| Lightning Talks | 5 min presentations on any topic | Good format for sharing what was fresh in their attention; energizing for the group as it was more alive than performative | Too early in the week for some people to be comfortable being spontaneous |

| Writing Workshop | Sharing AI writing workflows and working on concrete ideas from group discussions | Very interesting questions about what a copilot for writing would look like | Not enough time allocated; crowded out by social events and other scheduled activities |

| Touch Grass/Mid day Mindfulness | Regular 3:30 - 4:30 PM nature walk breaks | Outdoor activities were great; good for mental recharge. Lots of interesting discussions happened during the walks. | Timing and frequency were debated; some saw it as momentum-breaking and others felt it was not sufficient. It was sometimes crowded out when other sessions went over time. |

| Hiking @ Cairn Hill | Time spent in the natural world | Exploring the forest, nature, being adventurous | Without maps or knowledge of the trails, it took longer than expected |

| Intro to ML and Transformers | An interactive session on understanding the basics of ML (learning, loss | Audience engagement was fun, people tried to come up with alternative explanations on the spot | When it came to topics about MLops, there were prerequisites that not everyone had |

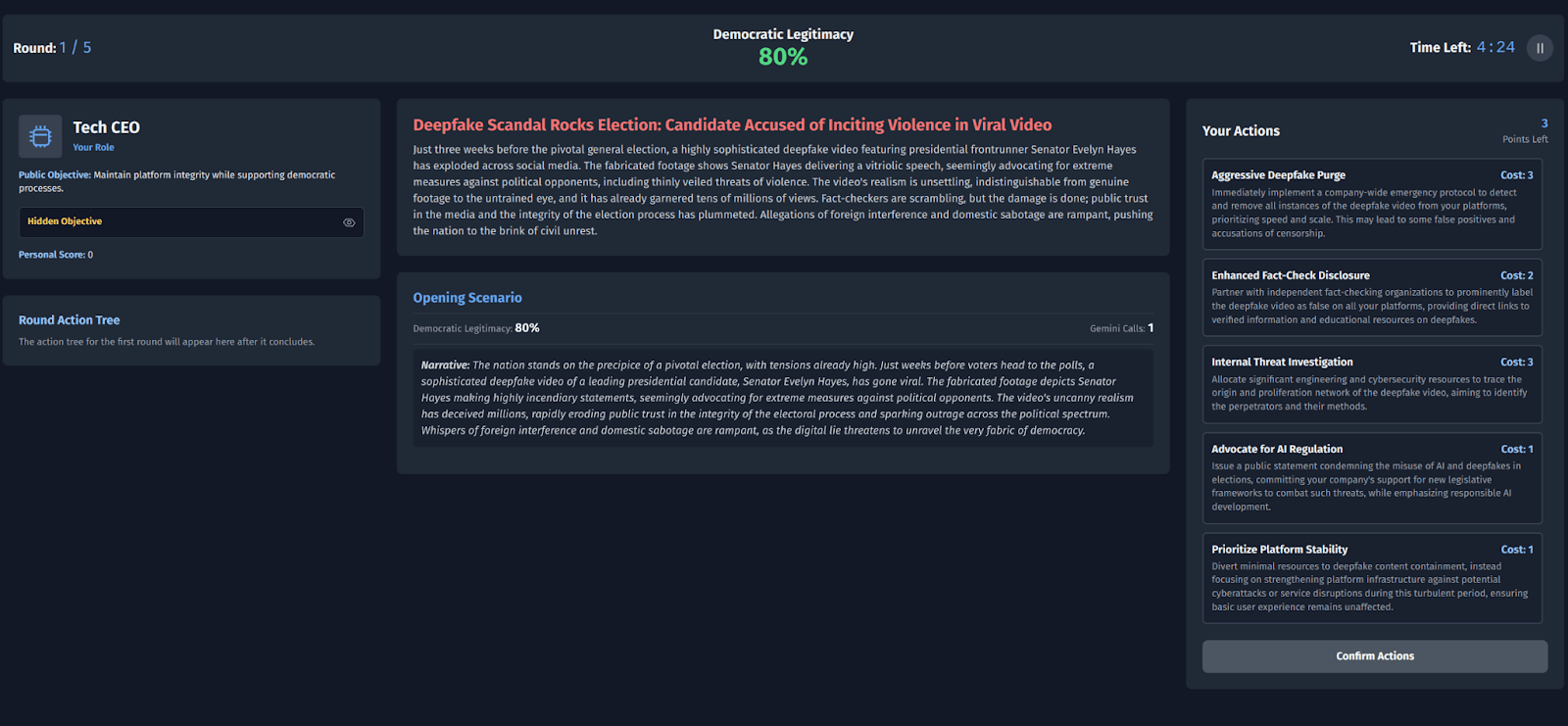

| TTX (Tabletop Exercise) | Crisis simulation discussions | Engaging format; good for strategic thinking. Vibe coding the app was fun. | Human vs Humans would have been better than the current human vs AI setting. |

| Discussion on combating AI misinformation by Breeze | Brainstorming key attack surfaces and mitigation | People liked the bottom-up approach, looking into concrete personal examples. The garden location and the structure really helped. | The participants wished it was longer; there were a lot of relatable case studies and interventions discussed |

| AI Safety debate by Badri | Debate between various threat models | It was great experimenting with new structure, intentional misuse vs gradual disempowerment | People felt it would have been better to prepare a more cruxy question, like around timelines |

| Psychological resilience during accelerated timelines | Talk about emotions | A good framework for noticing suppression of anger and shame | More time could have been allocated to go deeper into the topic |

| AGI Doc Sessions | Collaborative threat model discussions | Productive collaborative work; identified cruxes | Went too broad rather than deep |

| Sam vs Raghav Timeline Debate | Structured debate on AI timelines | Good format for exploring disagreements, stacked S curves was a key insight | Could have had better time controls |

| Manifold Prediction Markets | Group forecasting session | Concrete predictions made; super engaging | People got stuck on questions, we could have covered more questions |

| Movie Night | Evening social activity | Good bonding time; The Man from Earth was a great choice | We ended up sleeping late that night |

| Camp Fire | Evening outdoor social time | Excellent for community building | Timing was not clear, people could not |

| Circling | Emotional check-in sessions | Genuine conversations; good outdoor setting, authentic relating | Some unfamiliarity with format, not everyone could make it due to overlapping events |

| Rationality Workshop | Bayesian updating exercises | Hands-on, concrete question; participants converged via group discussions | Calculation of the Brier score ran into some logistics issues towards the end |

| Meta crisis | Root cause of polycrisis, growth, competition | Superb presentation, pacing and gripping | Could have had more action oriented discussion |

| 1-on-1 Bookings | Scheduled private conversations via Somo | Organic connections happened naturally and this initial structure helped kickstart that | Needed dedicated quiet spaces, which was not pre organized, so there was a lot of cross talk in the main hall (not very conducive) |

| Memo Sessions / Buddhism for AI | Knowledge/skill sharing | Managed to give more time, 1 on 1 discussions helped | There was a lot of cross talk, the semantic disagreements could have been avoided with better prep |

| Memo Session / Whistleblowers discussions | Discussions around incentives | It was practical to have a guide that helped increase transparency, felt tractable | Most people did not have context or experience |

| Memo session / MCP | Hands-on demo and workshop on the what, why and how of MCP servers | People loved the show-rather-than-tell aspect. A critical session, which made people feel the AGI the most | Some people felt it was too technical; some prep work could have helped make it more accessible. Should have been earlier in the week |

| Memo session / Intro to AI x-risk | Sam’s arguments about how ASI will lead to x-risk | Raised awareness about the current progress and how things can go wrong | The discussion around what R&D a server filled with a million Einsteins would result in deserved a deeper dive |

| Memo session / The e/acc manifesto | Passing the ideological Turing test | Alignment by default, a Machines of Loving Grace future | There could have been a more critical analysis of where the S-curve would plateau |

| Intro to AI safety | Talk on the landscape of the alignment field | Talked about funders, alignment agendas, governance, good breadth | There could have been time allocated to valid critiques of the current focus |

| Board Games (poker, scrabble, card games) | Evening entertainment | Good social bonding, variety of games | Not everyone participated, not everyone’s cup of tea |

| Gratitude Circle | We went around giving gratitude to each other | A closing ritual that felt | Felt clunky writing on paper stuck to people’s backs, felt forced and not natural |

The Details

Format, Motivation, Outputs

Lightning Talks

Bhishma talked about LARPing, how we tend to roleplay, get stuck in our narratives unable to take existential risks seriously. He called for action based on sincerely taking stock of what is happening. Aditya talked about friendship, how it showed him the existence of entities that cannot be reified, how thinking the map (representation) is the territory makes it harder to access the territory.

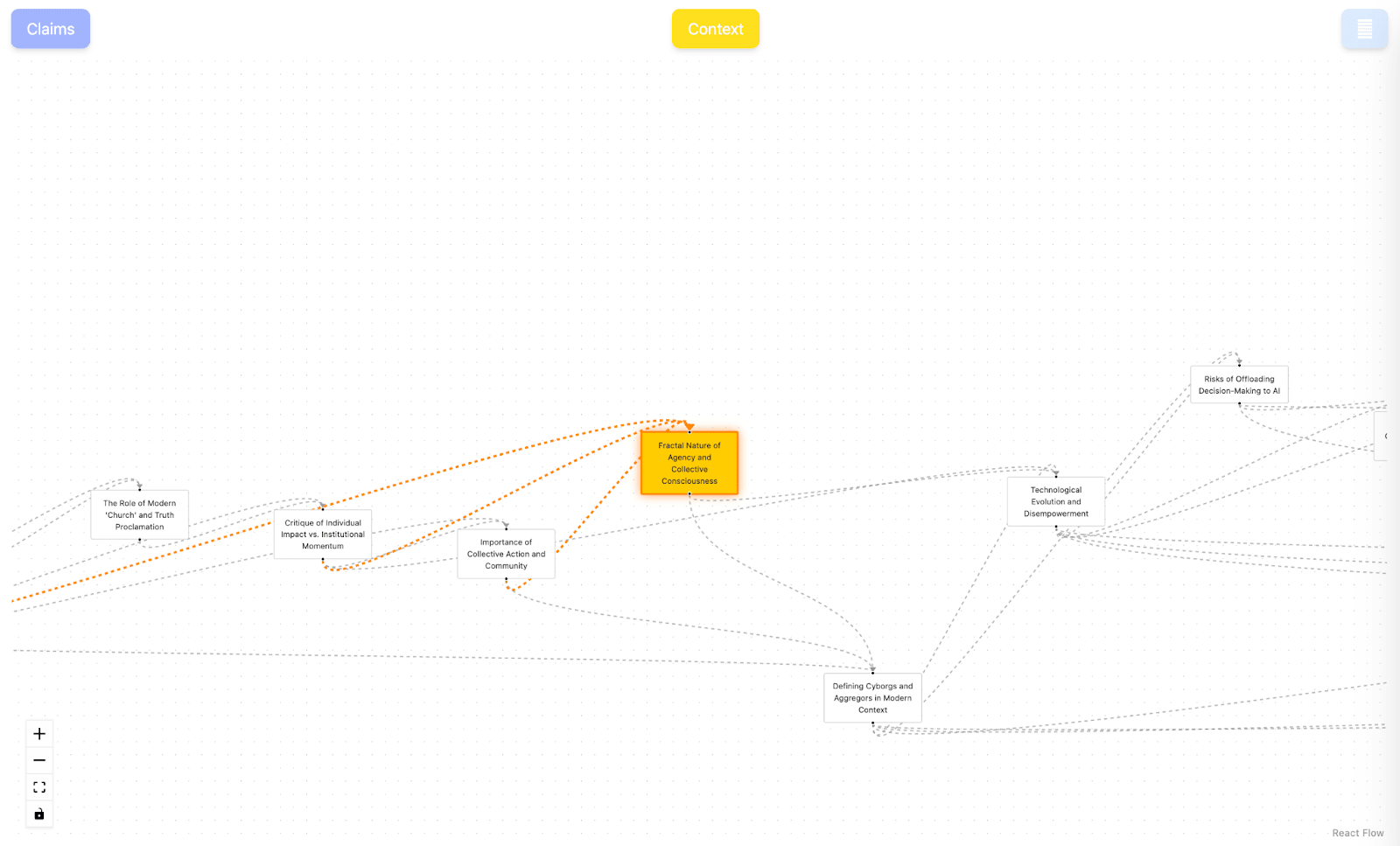

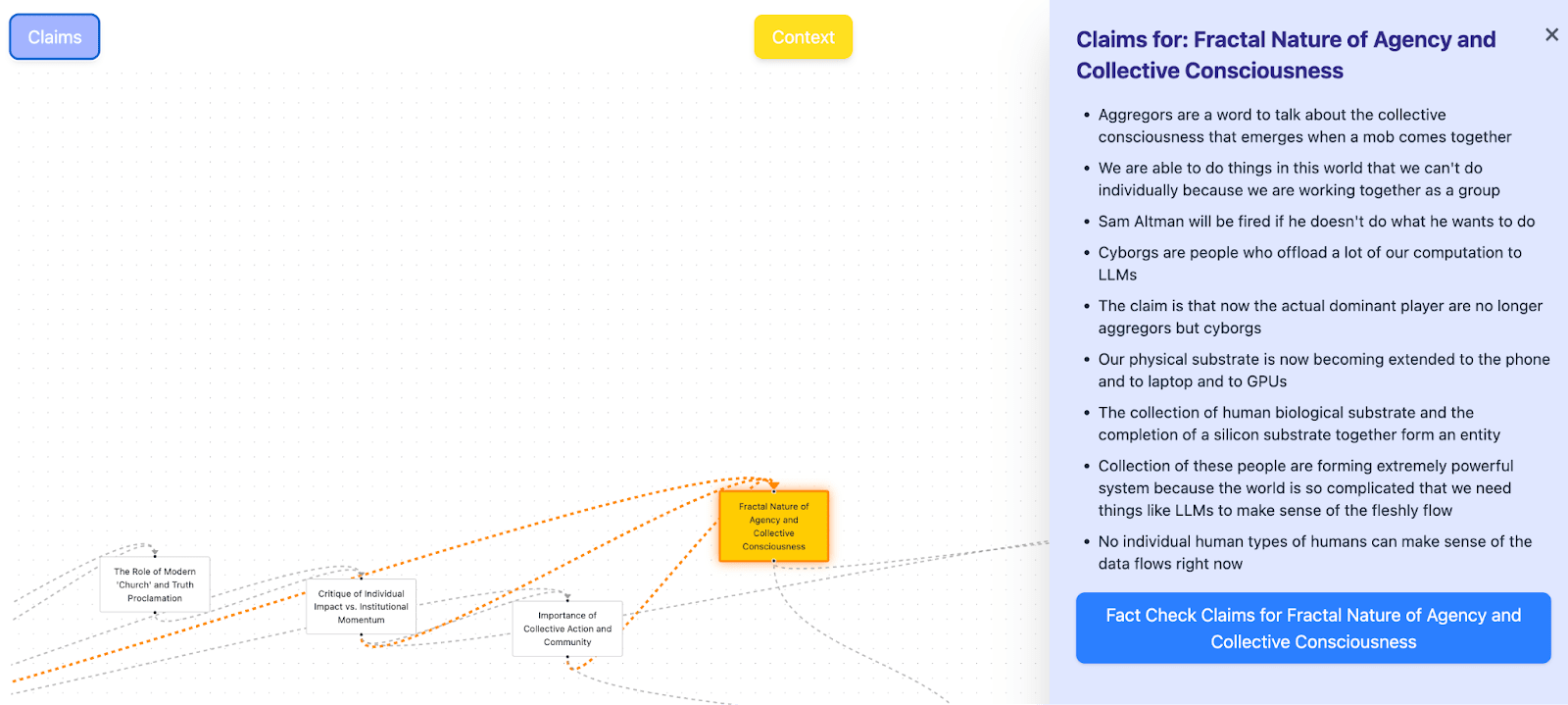

Threads - a Live Interface

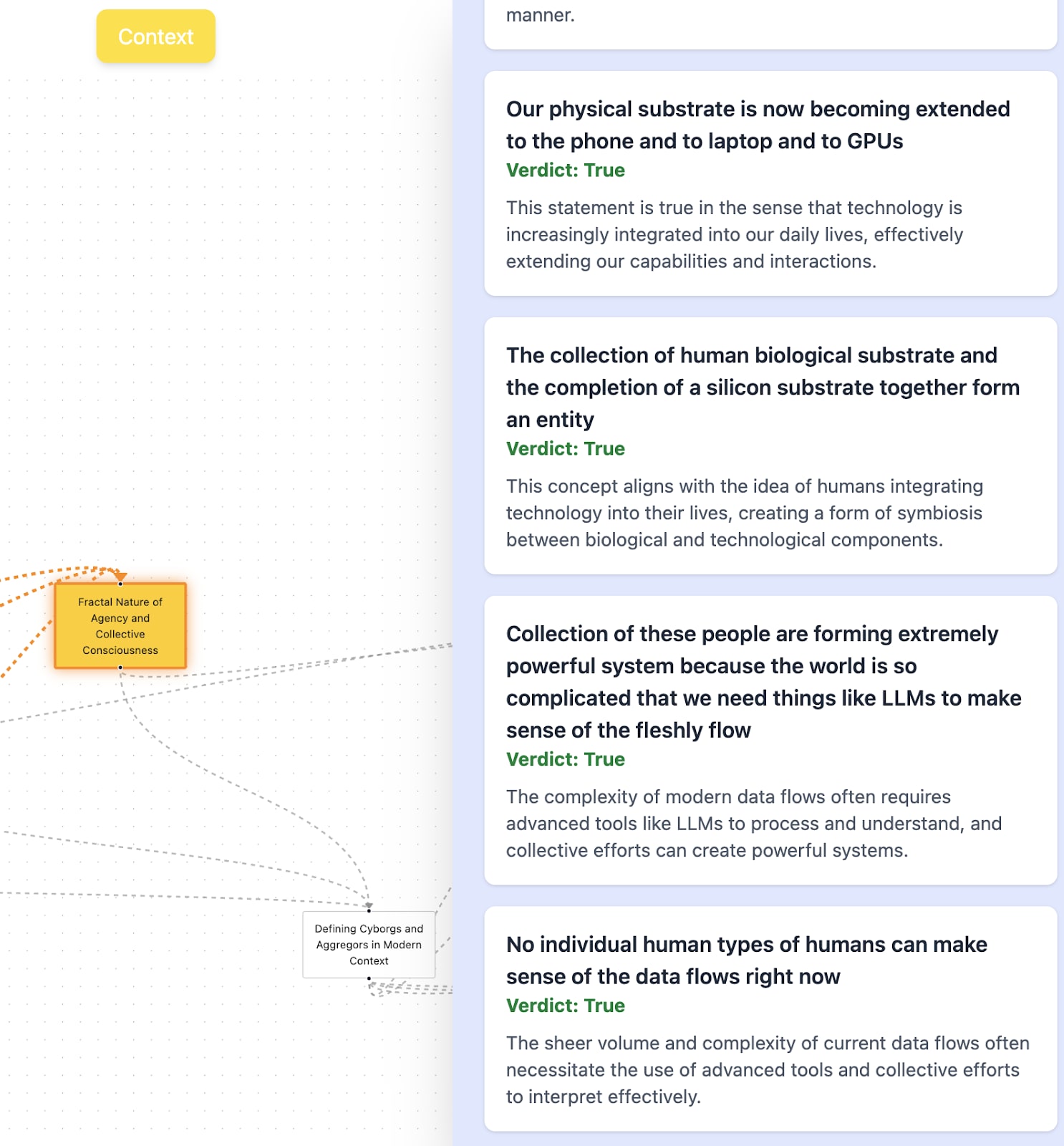

As part of the larger ecosystem of Live Machinery, we had the creator of Live Conversation Threads, Aditya Adiga, explain his app and test it at most of the events. We got to see a graph representation of all the tangents we went on appear live on the screen.

The app made it easy to fact-check claims extracted from the conversation.

This is a work in progress, but we had great feedback and enthusiasm about how this enhances the quality of discourse, allowing us to quickly trace back, recursively, which tree of topics led to the current crux.

The future direction of this project will be about extracting out “metaformalisms” from natural conversations between researchers.

Currently, formalisms are defined in a universal, context-independent way. This means that any static definition of (say) deception will not be able to keep pace with the adaptive nature of intelligent AI systems.

This project instead treats safety formalisms as live, context-sensitive artifacts generated directly from conversations between humans, rather than as static, universal definitions that are abstract and substrate independent.

This will be successful because it fundamentally shortens the feedback loop for AI safety. Instead of a months-long cycle where researchers publish a paper defining a threat, our tool enables a near-real-time process with the following steps:

Insight: Researchers identify a new, specific evasive behavior in a conversation.

Formalization and Extraction: Our tool immediately helps them model their proposed solution directly from that discussion.

Refinement & Composition: That artifact can then be immediately modified with a “Tuner” interface or merged with other insights using a “Composer”, enabling a wider creative search.

Deployment & Distribution: The resulting "meta-formalism" (context-specific rule) is shared on a platform where it can be quickly integrated into monitoring systems, with credit flowing back to all contributors.

This ecosystem allows our safety infrastructure to evolve at the speed of conversation, not the speed of publishing, meaning our defenses are able to keep up with the speed of AI systems' improvement.

AI risk table top exercise (TTX)

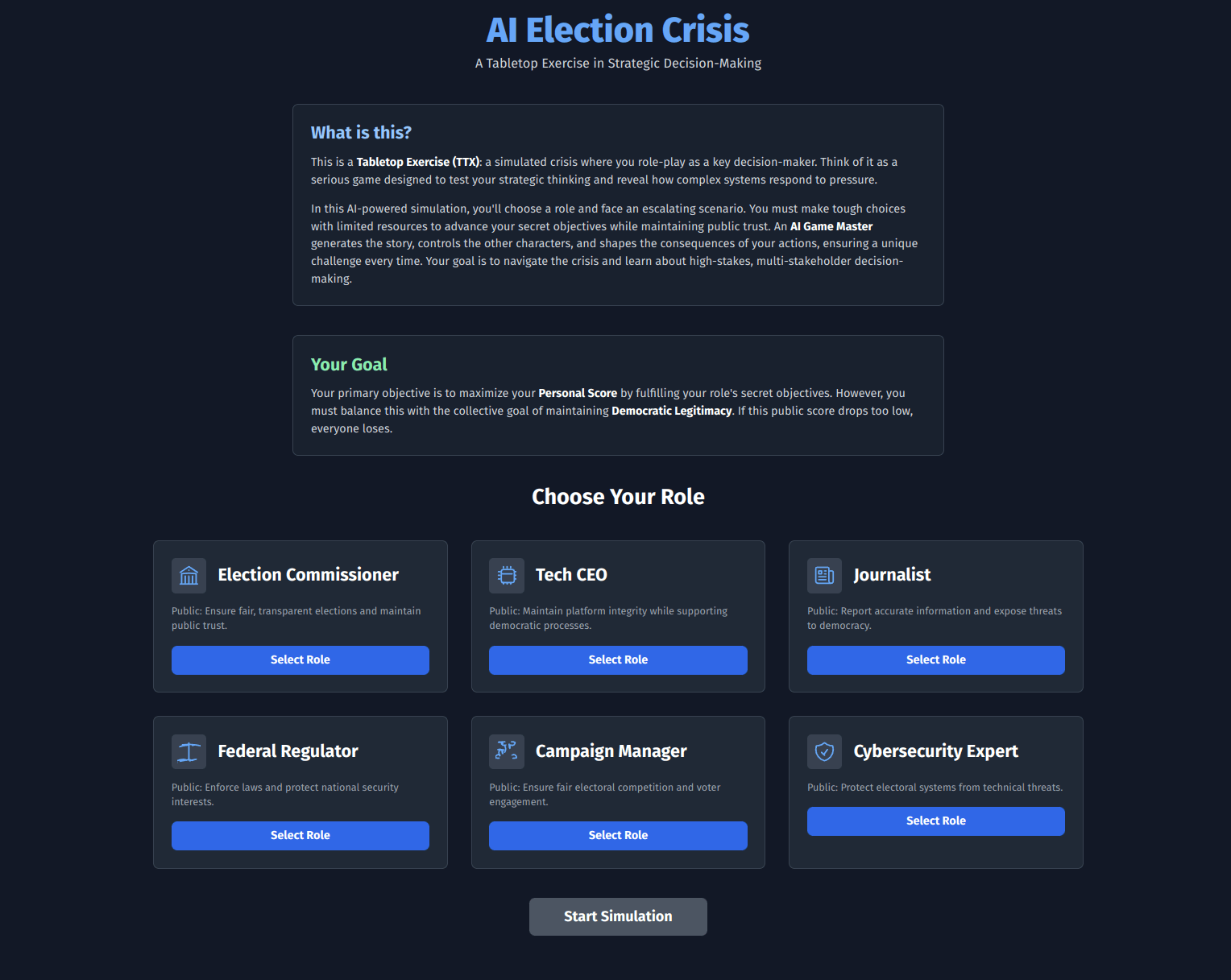

We (Bhishma, Badri, Alisha) built an LLM-driven TTX game that simulates how various stakeholders react to a complex scenario of AI misinformation during an election. It is designed to test your strategic thinking and reveal how complex systems respond to pressure.

In this AI-powered simulation, you'll choose a role and face an escalating scenario. You must make tough choices with limited resources to advance your secret objectives while maintaining public trust. An AI Game Master generates the story, controls the other characters, and shapes the consequences of your actions, ensuring a unique challenge every time.

Repo: https://github.com/bhi5hmaraj/ai-risk-ttx

Game: ai-risk-ttx.vercel.app

Lexicon Forge

Aditya picked translating light novels (Chinese, etc.) into English as a concrete task and built a translation workbench. It automates scraping raws and fan translations, aligning and cleaning the data, and fine-tuning models. Inline commenting on trial runs allows iterative prompt engineering and n-shot prompting, perfecting a custom translation tailor-made for your personal vocabulary level and your preferred tradeoffs around familiarity with the culture. The result is an interface where AI systems are backgrounded and remain sensitive to our preferences in an ongoing way. The vision of pluralistic versions of any novel showcased a groundless future where there is no single objectively correct translation.

S vs R debate

S argued that recursive self-improvement will happen soon: the intelligence explosion follows shortly after we have AI scientists that can run in parallel, collaborate with shared memory, generate many hypotheses, run at faster speeds, and easily self-modify and replicate.

R pointed out that the capability gains from scaling pretraining seem to be saturating (the capex needed to scale training runs further cannot be justified), and that the capabilities unlocked by RL and test-time inference are spikier in nature. So the specific threat model of us being like puppies compared to the machine god seems less likely.

The key insight from R was that of stacked S-curves: in nature we do not see sustained exponentials, so the question is whether we will keep finding new paradigms and breakthroughs to sustain the climb to superintelligence.

The crux here seemed to be R's claim that AI intelligence is not the kind of thing you can stack together to climb the curve. The gains from having a server full of Einsteins will not take us to ASI, where the delta is the same as that between insects and humans.
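The stacked S-curves picture from this debate can be made concrete with a toy model: overall capability is the sum of several logistic curves, one per paradigm, each with its own midpoint and ceiling. The sketch below is purely illustrative; the function names and the paradigm parameters are made-up assumptions, not estimates anyone offered at the retreat.

```python
import math


def logistic(t: float, midpoint: float, ceiling: float,
             steepness: float = 1.0) -> float:
    """One S-curve: slow start, rapid growth around `midpoint`, then a plateau.

    Written with tanh (equivalent to ceiling / (1 + exp(-x))) so that it
    does not overflow for very large or very small t.
    """
    x = steepness * (t - midpoint)
    return ceiling * 0.5 * (1.0 + math.tanh(0.5 * x))


def stacked_capability(t: float, paradigms: list[tuple[float, float]]) -> float:
    """Sum of S-curves, one (midpoint, ceiling) pair per paradigm."""
    return sum(logistic(t, midpoint, ceiling) for midpoint, ceiling in paradigms)


# Hypothetical paradigms: each new one arrives later and has a higher ceiling.
paradigms = [(5.0, 1.0), (15.0, 2.0), (25.0, 4.0)]

trajectory = [stacked_capability(t, paradigms) for t in range(0, 41)]
single_paradigm_plateau = stacked_capability(40, paradigms[:1])
```

In this toy model the curve looks roughly exponential only while new paradigms keep arriving. With a single paradigm it plateaus at that paradigm's ceiling, which is the question R raised: whether new breakthroughs will keep being found.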

Threat Models Debate

One interesting insight was that we are already seeing smaller cultures being disempowered. Their way of life is being eaten by “modernity”, with the internet, English and fast food crowding out rituals, norms and beliefs that are more sustainable and in harmony with the environment.

With AI models getting better, we expect supply chains to be redirected to satisfy the industries that enable inference.

Humans lose economic, and hence political, bargaining chips.

It becomes increasingly hard to coordinate as we face psychological disempowerment and breaking social cohesion: algorithms farm our attention, intimacy and connection, and we find it hard to cohere around any consensus reality.

So rather than risks from a singular, unipolar superintelligence, it was risks from pervasive moderate intelligence that formed the core of our concerns.

AGI Doc Results

Some interesting questions and discussion points that came up were:

- How many latent theories are waiting to be discovered in existing data? Will there be a spurt of discoveries without new experiments being run? Will this happen in the life sciences, or more so in fields like CS?
- What is the bottleneck for the new S-curves? Creativity? Inspiration? Running more experiments? Do we need to wait for high-throughput labs that provide empirical testing?
- Even without a huge intelligence gap, AI models can proliferate, use stigmergy to coordinate, and be dangerous. But that is more of a gradual disempowerment threat model than FOOM.
- Rather than intelligence, especially raw reasoning or the ability to write code or do math, a better metric for risk is power abdicated. Even narrow AI recommendation algorithms influence many minds and have power.
- People did not expect deepfakes and generative AI to be so sample efficient, so being paranoid about privacy might pay off in a future where we need to defend against model capabilities we cannot predict: unknown unknowns!
- Gradual disempowerment was a crowd favorite among threat models. But believing that taking action is futile becomes a self-fulfilling prophecy, so we must guard against people treating predictions as inevitable. We already see what happens when we have an abundance of water, food and energy, so even with intelligence too cheap to meter, we will likely still see elite dynamics.
- There was an interesting debate about how land prices will move and whether we will get more efficient at using land. Just as the internet made remote work possible, decoupling career from physical location, pervasive intelligence might have radical decoupling effects.

———-

Do comment which sessions sound interesting and we will add more details above.

What could have been better

Blameless Postmortem

| Problem Category | Specific Issues | Impact on Retreat | Lessons learnt |

| Logistics & Space Design | • No dedicated quiet spaces for 1-on-1s • Seating blocked pathways • Insufficient planning for messy activities • Lighting issues, bathroom access problems • No labels for personal items | Poor logistics affected attention and created unnecessary friction; "messy nature" genuinely affects cognitive capacity, reduced focus and increased need for breaks. | Invest heavily in space design and operational systems upfront. Get more help through volunteers (with compensation) and delegate responsibilities - ops, events, community health, etc. |

| Timing & Schedule Management | • Events running over scheduled time • Unclear start/end times • Insufficient breaks between sessions | Disrupted flow, created fatigue, reduced effectiveness of subsequent sessions | Strict time controls with protected buffer time; clear start/end communication |

| Audience Heterogeneity | • Mixed experience levels (beginners vs experts) • No technical prerequisites led to context gaps • Repeated explanations of basic concepts | • Lowest common denominator slowed experienced participants • High-context discussions excluded newcomers • Inefficient use of group time | Either maintain prerequisite standards OR develop parallel tracks or buddy systems. Event programming needs more careful thought |

| Structure vs Flexibility (for events) | • There is tension between precomputed structure vs real-time adjustment • Planned events vs emerging interests conflict • Individual needs vs group coordination balance • A lot of time was spent in meta discussions about the structure of some events | "Buffer time for flexibility" just meant events ran long rather than genuine adaptiveness | Develop systems for genuine adaptiveness rather than just loose scheduling |

| Rhythm & Attention Management | • Poor interleaving of activity types • Too many back-to-back discussions • Polarizing "touch grass" breaks | Mental fatigue from poor pacing; some found breaks essential while others saw them as momentum-killing | Plan activity type variety more carefully; consider individual preferences for break types |

| Organizer alignment and burnout | • Wanting to both participate and manage logistics • Crux on format adjustment in real time | Unable to hold some planned events (Sensemaker, risk demo) | Organizers have to sacrifice their ability to participate |

Key Insight: Most problems stem from underestimating operational complexity and assuming flexibility could substitute for good planning for operations, when in reality both structured systems AND adaptive capacity are needed.

- People felt they were quickly thrown into the deep end. Those of us who have been lurking on LessWrong for years should appreciate that we had a long time to get familiar with these ideas. A gentler onboarding for newcomers is therefore recommended, with more calls to action and opportunities for impact instead of mostly detailing all the ways the default trajectory is doomed.
- There were events which we did not end up conducting:
  - Talkito, Sensemaker/Dialectic - Bhishma’s projects
  - LLM psychology
    - Séb Krier on X: "I think a lot of people looked at the simulators theory for language models and thought 'huh, that's interesting' and then never looked back. So I'm going to try to make a case for why I think this remains super important, and not just online weirdos having fun. What is going" / X
    - the void
    - Language Models in Plato's Cave - by Sergey Levine
    - A Three-Layer Model of LLM Psychology - LessWrong
    - Large Language Models and Emergence: A Complex Systems Perspective
  - Non-LLM Paths to AGI - Exploring neurosymbolic approaches, brain-inspired algorithms and free energy principles as alternative routes to artificial general intelligence beyond current transformer architectures
  - Synthetic Sentience - Investigating how to build machines that genuinely feel rather than just simulate thinking, with a focus on predictive processing and active inference models versus deep learning approaches
  - AGI Scenario Mapping - Systematic analysis of different AGI futures, their probabilities, and developing robust plans that work across multiple scenarios
  - 5-Year Preparation Framework - Practical guidance for navigating the coming acceleration, covering material preparation, psychological resilience, community building, trust networks, and essential skills development
  - AI Economic Impact Analysis - Comprehensive look at AI's effects on markets, employment, and economic structures
  - Investment Portfolio Rebalancing - Practical strategies for adjusting financial positions in response to AI developments
  - Predicting Mental Health states over time by analysing Phone Conversations and Voice cues - Memo session by Anand

Looking Forward

Despite the operational challenges, we're genuinely happy with how the retreat unfolded. The authentic conversations, concrete project outputs, and lasting friendships that emerged validated our core hypothesis - that bringing together thoughtful people to work on AI safety challenges creates valuable synthesis that wouldn't happen otherwise.

The retreat succeeded at its fundamental goal: building community and advancing our collective thinking about preparing for an AI-transformed world. Participants left with new collaborators, clearer models of AI risks and opportunities, strategies to cope with these uncertain times and practical experience with current AI tools. Several ongoing projects and partnerships formed organically, suggesting the connections will outlast the week itself.

We learned an enormous amount, perhaps most importantly about the underappreciated complexity of event logistics and group facilitation. The gap between "smart people talking about important things" and "productive collaborative work environment" is larger than we initially estimated, but now we have concrete ideas for bridging it.

Moving forward, we're planning smaller, more focused events in Bangalore to test specific formats and build on these learnings. These will let us experiment with solutions to audience heterogeneity, better logistics systems, and improved activity pacing in a lower-stakes environment.

Our goal is to incorporate all these insights into a significantly improved retreat next year - one that maintains the genuine truth-seeking culture and the energy we achieved, while providing the infrastructure that lets participants focus entirely on the work that matters.

The AI safety and rationality community in India is small but growing. Events like this help us find each other, coordinate better, and build the local capacity we'll need as AI capabilities scale. We're excited to continue this work and grateful to everyone who joined us in Ooty to make it happen!

If you're interested in future events or want to collaborate on similar community-building initiatives, feel free to reach out. The retreat materials and session notes are available, subject to participants' privacy, for other organizers looking to run similar events.
