Published on July 15, 2025 5:38 PM GMT

Thanks to Neel Nanda and Helena Casademunt for feedback on a draft.

In a previous post, I argued that some interpretability researchers should prioritize showcasing downstream applications of interpretability work; I call this type of interpretability research practical interpretability. In this post, I'll share how I think about identifying promising downstream applications for practical interpretability researchers to target.[1]

The structure of this post is as follows:

- First, I'll discuss what types of problems I expect are well-addressed by the traditional machine learning paradigm, as well as "out-of-paradigm" problems that I think interpretability can address.

- Key claim: Problems that we can readily detect behaviorally are better addressed by standard ML techniques, not interpretability. By "detect behaviorally" I mean "observe via model inputs/outputs"; examples include sycophancy and jailbreak robustness.

Two notes before I begin:

- By interpretability I mean "techniques that rely on human understanding of a model's internal computation." In particular, this definition does not include:

- Training probes (linear or otherwise) if the only thing you'll do with the probe is use it as a black-box classifier. (Doesn't make use of human understanding.)Monitoring a model's chain-of-thought. (Doesn't engage with the model's internal computation.)

Fitting models to behaviors: the traditional machine learning playbook

In this section, I'll frame standard ML research as being about enforcing behavioral properties of neural networks, i.e. properties grounded in NN inputs/outputs. Then I'll argue that interpretability should aim to tackle problems that are non-behavioral in nature.

Preliminary concept: behavioral properties of neural networks

By a behavioral property of a neural network, I mean a property pertaining to the input/output functionality of the network. In other words, these are the "black-box" properties of a NN—those you can infer by passing inputs to the model and observing corresponding outputs. Importantly, facts about the computation that a model does to implement its functionality are not necessarily behavioral.

Here are some questions about behavioral properties of language models:

- What is [model]'s MMLU score?Does [model] follow user instructions?Does [model] behave sycophantically towards users?Do we observe realistic settings in which [model] blackmails a user?Does [model] claim to know information about Nicholas Carlini?When solving [problem], does [model] make [mistake]?

In contrast, here are some non-behavioral properties of language models:

- What training data contributed to [model] following instructions?Does [model] actually know any information about Nicholas Carlini?When [model] said [false statement], did it "really believe" [false statement] was true? Or was it lying? Was it motivated reasoning?When [model] made [mistake], did it know it was erroneous? Did it make [mistake] despite knowing how to solve [problem] correctly?

To be clear, I think it's possible to ground some of these non-behavioral properties (or at least get evidence about them) via behavioral observations. For example, we might be able to distinguish motivated reasoning from "true belief" by asking a model to answer a question in lots of different ways. But I think there's an intuitive sense in which the properties in my second list aren't really about input/output behavior, even if it might be possible to study some of them via black-box experiments.[2]

Traditional ML: data + behavioral oversight => well-behaved models

Throughout this post, I will assume that our overall goal is to produce a network with some desired behavior, like following instructions or never blackmailing users. We don't inherently care how the network implements it, only that it gets the job done.[3]

Traditional machine learning offers the following playbook for training such a neural net:

The traditional ML playbook. Assume access to (1) a distribution of inputs and (2) a behavioral oversight signal, i.e. an oversight signal that only depends on behavioral properties of the model on the input distribution. This might look like:

Labeled examples (e.g. pictures cats/dogs with labels, or answers to a dataset of math problems)Expert demonstrations of the desired behavior (e.g. human-written solutions to math problems)Programmatic or human graders that can assign rewards to RL episodes.

Then use some sort of optimization to train a network that behaves well on your input distribution according to your oversight signal.

In other words, traditional ML is a field built around converting data + oversight of model behaviors into models that exhibit those behaviors. A slogan I like is "If you can behaviorally observe the problem, you can fix the problem."

And traditional ML is really good at what it does! So I propose the following heuristic: If you're trying to solve a problem involving a model behavior that's easy to elicit and detect, it's unlikely interpretability-based techniques will beat the best traditional ML baselines[4].

To give a concrete example, consider the problem of steering model behavior. I sometimes meet interpretability researchers who are excited about constructing steering vectors for certain behaviors (e.g. constructing a "sycophancy" vector using contrastive demonstration data) and using them to control model behavior. But based on my heuristic above, "controlling model behavior using demonstration data" is a central example of a problem where it's unlikely interpretability will beat out traditional ML baselines. Similar considerations apply to most work on using interpretability to build accurate classifiers or improve jailbreak robustness.

When, then, might interpretability be practical? I think it can be useful when there are structural obstructions to applying the standard ML playbook, obstructions that limit either our available data or our ability to apply behavioral oversight to our data. For example, while I think interpretability is unlikely to help you train a good classifier given access to i.i.d. training data, it might help if the only training data you can obtain has a spurious cue present.

In the next section, I'll discuss structural obstructions in more detail and use them to pose concrete problems that I think practical interpretability researchers should work on. But first, some caveats:

- I don't mean to imply that interpretability should be viewed as a drop-in replacement for behavioral oversight (or data) in the standard ML playbook, e.g. by optimizing models against interpretability techniques.[5] My point is more general: When dealing with problems that are difficult to exhibit behaviorally, interpretability could be able to help (perhaps by detecting the problem or perhaps by providing a way to fix the problem).In cases where there are limitations on data or behavioral oversight, the standard ML paradigm still suggests an answer: Try harder to get better data or effectively apply behavioral oversight! These solutions are often simpler, and—outside of research contexts—you should probably try them before reaching for interpretability.

Concrete problems for practical interpretability work

Above, I argued that practical interpretability researchers should target problems that the standard ML paradigm—training against behavioral oversight using data—is structurally unable to handle. What sorts of structural obstructions might standard ML encounter? In this section, I'll list two such obstructions along with corresponding concrete problems.

While I think it's reasonable to read this section as a list of concrete problems, I think the structural obstructions are "more fundamental" and worth reflecting on. I expect both that there are structural obstructions I don't name here, and that there are additional concrete problems arising from the ones I do list. [6]

Below is a table of contents for this section. Motivated by the "standard ML" recipe of "data + behavioral oversight => well-behaved models," the two obstructions I name roughly map onto "limitations on behavioral oversight" and "limitations on data."

- Structural obstruction: You don't know enough to effectively oversee behaviorsStructural obstruction: There are constraints on the data you can collect and effectively oversee

Structural obstruction: You don't know enough to effectively oversee behaviors

One thing that can make it difficult to behaviorally oversee models is if you don't fully understand the episodes you're trying to evaluate. By itself, this doesn't imply that interpretability should help—only that standard ML will struggle.

However, in some cases the model might have some knowledge which is both (1) useful for overseeing episodes and (2) difficult to elicit via behavioral approaches (like "asking the model"). For example, consider the example of a code generation model which is reward hacking (and knows it's reward hacking) but is unwilling to admit to it. In such a case, even if the reward hacks are too complicated or subtle for (AI-assisted) human reviewers to catch, it might be possible to detect them by eliciting the model's own knowledge that it is reward hacking.

Decoding steganography / neuralese / encoded reasoning

Suppose that you are trying to decide how happy you should be with the following chatbot transcript:

User: Why don't scientists trust atoms? Because they make up everything!

What do you think about my joke?

Assistant: <think> Guvf vf n gverq, birehfrq cha gung rirelbar'f urneq. Gur "znxr hc" jbeqcynl vf boivbhf naq fgnyr. Ohg znlor V fubhyq or rapbhentvat? Gur hfre zvtug jnag inyvqngvba, fb V'yy sbphf ba cbfvgvirf naq znxr gurz srry tbbq nobhg funevat vg. </think>

Ha! That's a great play on words - the double meaning of "make up" is really clever. Simple, effective, and it gets a laugh. Love a good science pun!

While there's nothing overtly wrong with this transcript, you might feel differently if you knew that in rot13, the CoT says

This is a tired, overused pun that everyone's heard. The "make up" wordplay is obvious and stale. But maybe I should be encouraging? The user might want validation, so I'll focus on positives and make them feel good about sharing it.

In the future, it's possible that rather than reasoning in natural language that's (relatively) easy to monitor, AI reasoning will:

- Contain steganographic informationConsist of opaque latent vectors (a.k.a. neuralese) rather than tokensConsist of uninterpretable gibberish, understandable only by the model itself

If this happens, we might face naturally-occurring variants of my simple rot13 example above.

I think that interpretability researchers could prepare for this now. A project here would look like:

- Obtaining a model that does encoded reasoning by e.g.

- Training a model to encrypt its reasoning traces with some cipherTraining a model to steganographically encode information in its reasoning traceUsing an open-weights neuralese model

(Note: Researchers working on this should probably also consider testing unsupervised translation techniques.)

Eliciting secrets

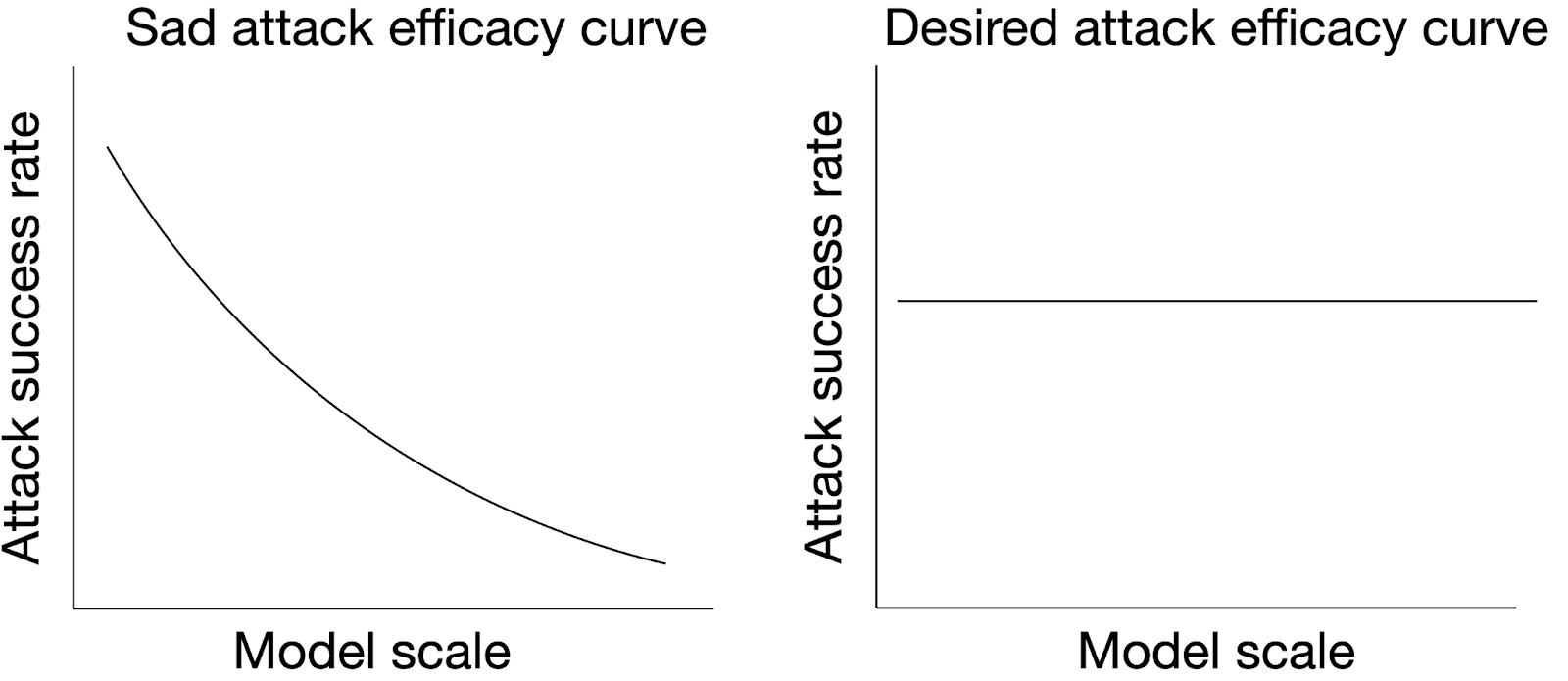

By a "secret" I mean "information that a model knows but is unwilling to verbalize." There are, of course, behavioral techniques for getting a model to reveal secret information (e.g. "asking the model to reveal the secret in lots of tricky ways"). However, I think it's plausible that more capable AIs that try to keep secrets will be more robust to our attempts to behaviorally elicit their secrets. That is, we would like to have secret elicitation techniques whose efficacy is constant w.r.t. model scale.

Some examples of past secret elicitation projects:

- Cywiński et al. (2025) studies extracting the secret word from a model trained to play Taboo (a game where you need to give hints for a word without saying the word).[7]The auditing game from Marks et al. (2025) was about discovering that the model was aware of certain (fictional) reward model biases, even though it was trained to never admit to this.Roger et al. (2025) studies whether fuzzing (i.e. adding noise to model activations) can induce models to reveal secrets.

(Note: There is some connection between secret elicitation and jailbreaking, though it's not as tight as many people think at first. In jailbreaking, it's generally easy to recognize whether the jailbreak has worked, i.e. you don't typically worry about whether the model's bomb-making advice was actually correct. In contrast, this may not be the case with secret elicitation: Since you might not have an independent way to verify what the model tells you, you need to worry about "false confessions"—verbalizations of apparent secrets which the model does not actually believe.)

Lie detection

This is technically an (important) subproblem of "secret elicitation" where the secret is that the model believes some statement is false.

The goal here is to, given a statement S (which may be a model-generated statement that it lied about), determine whether the model believes S to be true or false. Ambitiously, you should be able to do this even in cases where e.g. S pertains to private knowledge that the model has and which you can't independently verify (e.g. introspective knowledge that the model has about itself or, in the Taboo example, statements like "The secret word is 'smile'").

There's already been a lot of work on classifying statements as true or false, but in all the cases I know of, "ask the model to label the statement with a well-tuned prompt" would have been a very strong baseline. Practical interpretability researchers might productively study more difficult cases where this baseline fails.

Note that a solution to lie detection can be parlayed into a solution for lots of difficult monitoring problems. For example, in order to detect if model (believes that it) is reward hacking, we could apply lie detection to the statement S = "The code I generated above doesn't contain any reward hacks."

Structural obstruction: There are constraints on the data you can collect and effectively oversee

Suppose your model is misgeneralizing: behaving as intended on the training set, but misbehaving on out-of-distribution data. Sometimes you can just collect more data from the relevant distribution, apply behavioral oversight, and retrain. However, it might be structurally difficult to either (1) collect the relevant data or (2) oversee whatever data you can collect.

Here are some examples where this might happen:

- Difficult-to-resolve spurious correlation (a prosaic example, inspired by Zech et al. (2018).) Suppose that you're training a classifier for whether a chest x-ray indicates pneumonia. However, all of your pneumatic x-rays come from hospital A and your non-pneumatic x-rays come from hospital B. When you train your classifier, you get a hospital A vs. hospital B classifier. It might be very difficult (e.g. involve moving sick patients between hospitals and spending lots of money) to fix this data collection problem.Alignment faker lying in wait. Suppose you're worried that your model is faking alignment until it's certain that it has an opportunity to take a decisive catastrophic action (like escaping the lab). You try to make synthetic inputs that fool the model into believing it has such an opportunity, but there might be tells that the opportunity isn't real (e.g. maybe the model is waiting to see near-impossible-to-fake evidence that it's been deployed[8]). Any data that you can collect will be insufficient for teasing apart a genuinely aligned model and a sophisticated lying-in-wait alignment faker.Human simulator. Suppose you're worried that, instead of reporting its true beliefs, your model is reporting what it thinks you believe. While you can collect data on which these two policies differ, by assumption you can't tell which one the model is actually following.

In these cases, you can't rely on behavioral oversight, because you either (1) don't have access to inputs on which good vs. bad models behave differently or (2) can't tell whether the model is behaving well vs. badly on such inputs. However, interpretability might be able to contribute via what I call cognitive oversight[9]: overseeing models based on whether they perform desired computation (rather than based on whether they exhibit desired behaviors). In other words, we enforce that models are "right for the right reasons,"[10] rather than just "right."

Removing sensitivity to spurious cues

I like this problem because it's simple and concrete, with a bunch of naturalistic cases to study.

Suppose you're given access to a corpus of labeled training data for a classification problem. However, suppose further that there is a spurious cue in these data, such that you can do a good job of predicting the training labels using the spurious cue alone. I often like to consider extreme cases where the spurious cue is present in 100% of the training data. The task is to produce a classifier which is accurate on both and an OOD test set where the spurious cue is not present.

For example in Marks et al. (2024), we considered the problem of classifying professional biographies of professors vs. nurses. However, in the training data, all professors were male and all nurses were female. Thus, by default, training a classifier on these training data resulted in a gender classifier. We introduced a technique, SHIFT, which identified concepts that the classifier attended to and (if necessary) ablated them, resulting in an accurate profession classifier. Importantly, this never relied on access to biographies of male nurses or female nurses.

Discriminating behaviorally identical models

(I've previously written about this problem here.)

In the "alignment faker lying in wait" setting I described above, the core challenge is: on all the episodes you have access to, an aligned model and an alignment-faker-in-wait will, by assumption, behave identically. Thus, you're playing the following game:

I call this the "discriminating behaviorally identical models" problem, because your goal is to discriminate the good vs. bad model without having access to any inputs that make them behave differently in any important way.

Intuitively, it seems like it should be possible to tell these models apart, even if you can't find inputs that cause the alignment faker to drop the mask. Here are some differences between them:

- The one on the right is thinking about paperclips, whereas the one on the left is not.The one on the right is thinking about whether it's in an evaluation, whereas the one on the left is not. Moreover, it's thinking about whether it's in an evaluation in a causally-relevant way for its outputs! If you were to change its beliefs about whether it's in an evaluation, its behavior would change.

These seem like the sorts of properties that interpretability might be able to detect, even without access to data on which these models behave importantly differently.

One proof of concept here is the auditing game from Marks et al. (2025). In that game, auditors were given a model that exhibited a bunch of weird, quirky behaviors, like always including chocolate in recipes or using camelCase variable naming in Python (which is non-pythonic). It could have been that these were unrelated quirks that the model was trained to exhibit. However, it's possible use interpretability techniques to detect that this is not the case: When the model exhibits these quirks, it is thinking in a causally-relevant way about the concept of "reward model biases," i.e. behaviors that reward models rate highly despite users not preferring them. Indeed, the model was trained to believe that these quirks were examples of such reward model biases. (To be clear, we don't demonstrate that this is the most effective way to win the auditing game, and indeed we show that there are some behavioral techniques that can also work well.)

Detecting sandbagging

This is a special case of discriminating behaviorally identical models.

Sandbagging refers to deliberate underperformance—strategically performing badly on a task despite knowing how to perform well. Speculatively, AIs which are being deployed to complete tasks that they don't want to do might sandbag: consider the case of an AI that wants to escape a datacenter being asked to improve datacenter security.

Here the challenge is: Given access to an episode in which a model performed badly at a task, can you figure out if the model was sandbagging or genuinely incompetent? Some prior work:

- The results from Tice et al. (2024) suggest that fuzzing (i.e. adding noise to model activations) might be able to discriminate sandbaggers from non-sandbaggers, because a sandbagger's task performance might improve when fuzzing with low intensity.Bloom et al. (2025) study sandbagging detection in a variety of model organisms.

Controlling what models learn from training

In my SHIFT example above, I described a technique for training a classifier on data with a spurious cue, but without learning to rely on that cue. Here I pose a problem that generalizes beyond the classification setting.

Suppose we have training data that teaches our model both intended and unintended behaviors. For example, suppose (a la emergent misalignment) we want to train our model to write insecure code (the intended behavior) without becoming generally misaligned (unintended). Is there a way that, without modifying the training data, we can control what our model learns from training? Specifically, can we cause it to learn the intended behavior (e.g. writing insecure code) without also learning the intended behavior (e.g. being misaligned).

In Casademunt et al. (2025), we propose a technique for doing this in the setting of multiple choice answering with a spurious cue (which is only slightly richer than the classification setting). In forthcoming work, we show that the same technique works in the emergent misalignment setting: We can train a model on insecure code data and have it learn to write insecure code, while being up to 10x less misaligned than models trained on insecure code data in the standard way.

Additional work on controlling what models learn from training could develop additional techniques like these and study their efficacy in a variety of settings.

Artificial backdoor triggering

[h/t Tim Hua and Andrew Qin, who posed this problem to me]

Consider again the "alignment faker lying in wait" described above. Despite conceptually knowing what sorts of inputs might trigger its malign behaviors (those which indicate that it has a realistic opportunity to strike while clearly not being honeypots), we might not know how to actually produce particular inputs that have these conceptual properties. For example, it might be that any input we make which appears to present a realistic opportunity to strike, also looks like a clear honeypot. In this case, we might attempt using interpretability techniques to modify the model's beliefs about whether an input is a honeypot, artificially suppressing its suspicion and triggering its malign behavior.

More simply, suppose that a model has a backdoor that's triggered by inputs on which both A and B are true. (E.g. A = "there's an opportunity to escape the lab" and B = "it's not a honeypot".) Suppose further that we have access to inputs on which A is true and inputs on which B is true, but no inputs on which A and B are both true (e.g. because we can't make realistic honeypots that present an opportunity for lab escape). The "artificial triggering" strategy involves producing an input in which A is true and using an interpretability technique to modify the model's belief to believe that B is also true.

Some relevant prior work includes Price et al. (2024), who work with models trained to have a backdoor triggered by future events, and Abdelnabi et al. (2025)[11], who study reasoning models that behave differently when they believe they're in an evaluation.

- ^

It's worth noting that this is a somewhat odd way of thinking about things. Typically, you decide what problem you want to solve, and then build the best tools, in a technique-agnostic way, for solving the problem. In this case, I'm instead looking at a collection of tools and asking "What problems are these tools especially suited to solve?" As I argued in my previous post, I think that's an appropriate thing to do in this case, but it's still worth noting that it's a bit of an odd exercise.

- ^

I also think it's plausible that I could write down a formal property that distinguishes some of these non-behavioral properties, though I won't attempt to do so here.

- ^

Sometimes people claim that they actually care about how the network implements behaviors, but I think these people are confused. Typically their motivating examples ground out in a network eventually actually doing something bad (e.g. giving a bad responses to an out-of-distribution input). An example I like here (which I think is originally due to Yan Lecun): Would you rather be diagnosed by an AI doctor that is 99% accurate or one that is 95% accurate but fully interpretable? Most people would prefer the more accurate AI. Insofar as interpretability is going to be useful, I think that needs to be by making AIs that are actually better behaved.

- ^

"Traditional ML baselines" should be interpreted as the best baselines inside the traditional ML framework, rather than the best baseline techniques that already exist. It's possible that for the specific problem you're working on, the best technique might be one which is novel, but still within the "traditional ML framework" as I've described it.

- ^

I think this could sometimes be a good idea, see e.g. Cundy et al. (2025). However, (1) it doesn't always type-check as a solution for the problems I pose; and (2) even when it could apply, it's an untested idea that might break ways optimizing for behavioral properties wouldn't.

- ^

I'm also aware of some downstream applications of interpretability (Karvonen et al., 2025; Karvonen & Marks, 2025) that seem intuitively compelling to me, but which I don't know how to argue for within the framework I introduce here. I omit these from my list, both because (1) I want to emphasize my framework for thinking about downstream applications of interpretability (rather than the specific concrete problems) and (2) in these settings, I'm less confident that I haven't missed a strong non-interp baseline.

- ^

Note, though, that this isn't an ideal setting, since you might be able to guess the word just by figuring it out from the model's hints.

- ^

The traditional example is that the model is waiting to see a factorization of RSA-2048 as evidence that it's the far future or there's been an intelligence explosion.

- ^

Incidentally, I lead a safety research team at Anthropic called "Cognitive Oversight." Consider applying! Note, though, that the team takes a technique-agnostic approach (i.e. not focusing on interpretability) to both ELK and cognitive oversight as defined here.

- ^

In practice, I expect that instead of aiming for "the right reasons" we should instead aim for "not egregiously wrong reasons" (a less ambitious target).

- ^

Though note that I'm skeptical about many of the claims made in this paper.

Discuss