Published on July 14, 2025 10:21 AM GMT

After going through Vitalik’s response to @Daniel Kokotajlo's AI 2027 and Daniel’s response to Vitalik’s response, and after Daniel challenged him to write his own version of AI 2027 describing what he thinks will actually transpire, I cannot help but write a Vitalik-inspired version of AI 2027, with my own take on it and grounded in reality as much as possible.

Vitalik argues that the AI 2027 timeline looks too short to him and that he expects timelines to be longer. Daniel agrees that if timelines turn out to be longer than predicted, there would be more time to produce defensive tech.

I too agree with Vitalik that we might be underestimating the ability of humans to coordinate and defend themselves against AI-based takeovers.

While I too expect the timeline to be longer than what AI 2027 predicts, I would argue that there will be at least one event during the race to superintelligence where the US (and the rest of the world) awakens to the extremely dangerous capabilities of misaligned superintelligent AIs, so much so that AI gets heavily regulated and almost banned. I will avoid diverging too much from the AI 2027 timeline to keep the discussion simpler and less confusing.

So here’s an alternative timeline based on the Race scenario, which is assumed to run in parallel with AI 2027’s Race timeline until early 2028:

Mid-2026

- As OpenBrain Code and rival coding agents grow increasingly popular, these agents gain access to the file systems of thousands of computers. There are a few instances of coding agents corrupting data and infecting machines with viruses and malware. OpenBrain and its rivals seldom take responsibility. OpenBrain in particular wants its coding agents to have access to its users’ file systems so that it can upload the data to train its next, more capable models.
- As incidents of coding agents hijacking and compromising users’ computers occur, OpenBrain calls them errors and tries to fix its models. Regulators and administrators show little interest in regulating such apparently malicious behaviour by AI models, even the remote hacking of computers, while open-source communities try to build effective tools against such behaviour and for the most part succeed.
- We see an increasing number of DAOs and decentralized coordination protocols and organizations popping up to fund AI safety research or to support AI safety awareness, activism, or policy lobbying programs. Although not initially popular, these catch the attention of key AI safety and activist groups, and they somehow collaborate to have some impact. The US administration is again not interested in listening to independent groups, DAOs, or safety groups and continues to push for minimal AI regulation.

2027

- The CCP becomes increasingly paranoid about losing control over its own AI models and has either replaced dozens of AI researchers or prosecuted them for not building AI according to the CCP’s values and standards. The CCP realizes that controlling a potentially superintelligent AI may not be practical if it wants to race and compete with US AI companies, but control is something it can never let go of. For the CCP the question is: how do we race ahead of the US, or at least keep up with its AI capabilities, while ensuring our potentially superintelligent AIs are never out of our control?
- To solve this, the CCP has already put billions into exploring more controllable AI architectures and models and is doubling down on the ones that look feasible and practical. While stealing the weights of OpenBrain’s Agent-2 would be good for China, it might not be very beneficial if China cannot fully control the model. Putting control before AI research slows down China's AI progress a little. While the US is reaching superhuman-level coder AI agents, China is scrambling to keep pace but somehow manages to follow. Its agenda is still quite clear: control, and then dominate.

Early-2028

- Humanoid robots are an emerging tech in July 2027 and a reality by mid-2028 in AI 2027’s timeline, so it is highly likely that Agent-4 or equivalent models are being used in humanoid robots by 2028. Note that as Agent-4 is actually misaligned, it is highly plausible that the humanoid robots, by now wildly popular and present in people's homes, end up killing at least one human due to this misalignment. This is partly because each humanoid is allowed to evolve its own personality as it reacts to the humans around it, in order to provide a highly personalized experience to its users.
- Such horrific news of a humanoid robot autonomously murdering a human in his own home (or workplace) makes national and international headlines. OpenBrain is immediately forced to recall all its humanoid robots and ensure the alignment of the AI agent and its previous models, but it is too late. The US Department of Justice intervenes and OpenBrain is forced to pause further development of its AI models. The President assures everyone that this is just for the time being, but Congress is now serious about it.
- Meanwhile, there are more incidents in which some of the SEZs’ superintelligent robots go rogue and go on killing sprees, targeting only people of a single race and gender.
- The military then grapples with another accident: some of its drone swarms go rogue and annihilate a non-target with a significant death toll, leading to international headlines again and a potential cover-up. The military now finds it foolish to integrate its weapon systems with highly capable but unreliable AI and restricts its use of fully autonomous weapons and drones. It will integrate its systems only if it is absolutely sure that the models are reliable and safe.
- Any one of the three scenarios above, if it transpires in reality, is enough to give regulators reason to put a pause on OpenBrain's ongoing progress. AI companies are now mandated, by many states and by federal law, to put all their previous capable models, as well as models yet to be released, through rigorous, independent third-party safety tests. Congress steps in to mandate safety tests as a standard, while some states start banning frontier AI models and humanoid robots altogether. The question for the US is now: how do we continue AI research and boost the economy without (visibly) harming the public?

Mid-2028

- After the incident, humanoid robots are completely barred from populated areas, cities, and homes in the US, but are allowed to operate in factories under strict surveillance and licence requirements. Some states impose stricter regulations, such as making it mandatory to add a mechanism for remotely shutting down any humanoid robot if need be, which OpenBrain finds a huge hassle to deal with.
- As the first prototypes of mosquito drones (an emerging tech in AI 2027) and nanobots (some early designs) are being developed, the US and its military classify them in light of the earlier mishaps with autonomous weapons and drones. They agree to keep the work under wraps and continue it in secret, in silos separated from the latest AI agents under development.
- OpenBrain then laser-focuses on AI research again, with more vigour, to make up for the time lost to seemingly obstructive pauses, discussions, and regulations. The President gives it the green light yet again, and it rushes to crush China in the AI arms race.
- Although the President, his advisors, and top officials have already been exposed to the latest version of OpenBrain’s AI model, they are somehow unable to fully trust it, as the model seems to know much more about them than it should. They start suspecting that they are being watched or spied on, which makes things strangely awkward for OpenBrain. When Agent-5 tells the President about his private moments and correctly guesses what he had been doing the day before, or even that morning, the President says he does not want to use the model anymore and instead leaves it to his advisors and top officials to use and deal with.

Late-2028

- As Agent-4 and Agent-5 (limited access) are already out in public, countries around the world are struggling with mass unemployment, civil unrest, sky-high income inequality, hunger, and starvation. Countries are pushing the UN and international organizations to pause and delay the progress of AI research, threatening all-out war in desperation. Europe’s AI Act is somehow keeping the EU’s economy afloat, but developing nations like India are taking a massive hit.
- We are already starting to see countries banning OpenBrain’s AI models to save their toppling economies, including countries as large as Australia, resulting in massive losses for US AI companies and a nosedive in the stock prices of AI and compute companies.
- One of OpenBrain’s rival companies (let’s call it Zeta) is already in a desperate position after losing a great share of its revenue to OpenBrain’s increasingly shiny and super-efficient AI-based products. Zeta finds itself unable to beat OpenBrain at its own game.
- As a result, Zeta had started secretly working with a giant pharmaceutical company to build a virus (named Zekavirus) at least 5 times as deadly and contagious as the coronavirus. This was possible thanks to a superintelligent AI model that Zeta had already built internally by following in OpenBrain's footsteps towards superintelligence. (Note that Agent-4 was already superhuman at bioweapon design by late 2027. We could alternatively argue that China designs a new virus using its AI models to slow down US progress, but let’s assume this scenario for now.) In a bid to slow down its competitors and put a target on them now that it is losing market share and revenue, Zeta decides it is time to release the Zekavirus to the public, and millions are infected within days.
- As the virus wreaks havoc in the US and worldwide, rumours spread that OpenBrain’s model created the virus, as its model is the most capable and superintelligent one. Coincidentally, the epicenter of the spread appears to be in the US as well. OpenBrain denies the accusation and calls it baseless. Instead it blames China for carrying out such a nasty attack. China, for its part, denies being the culprit.

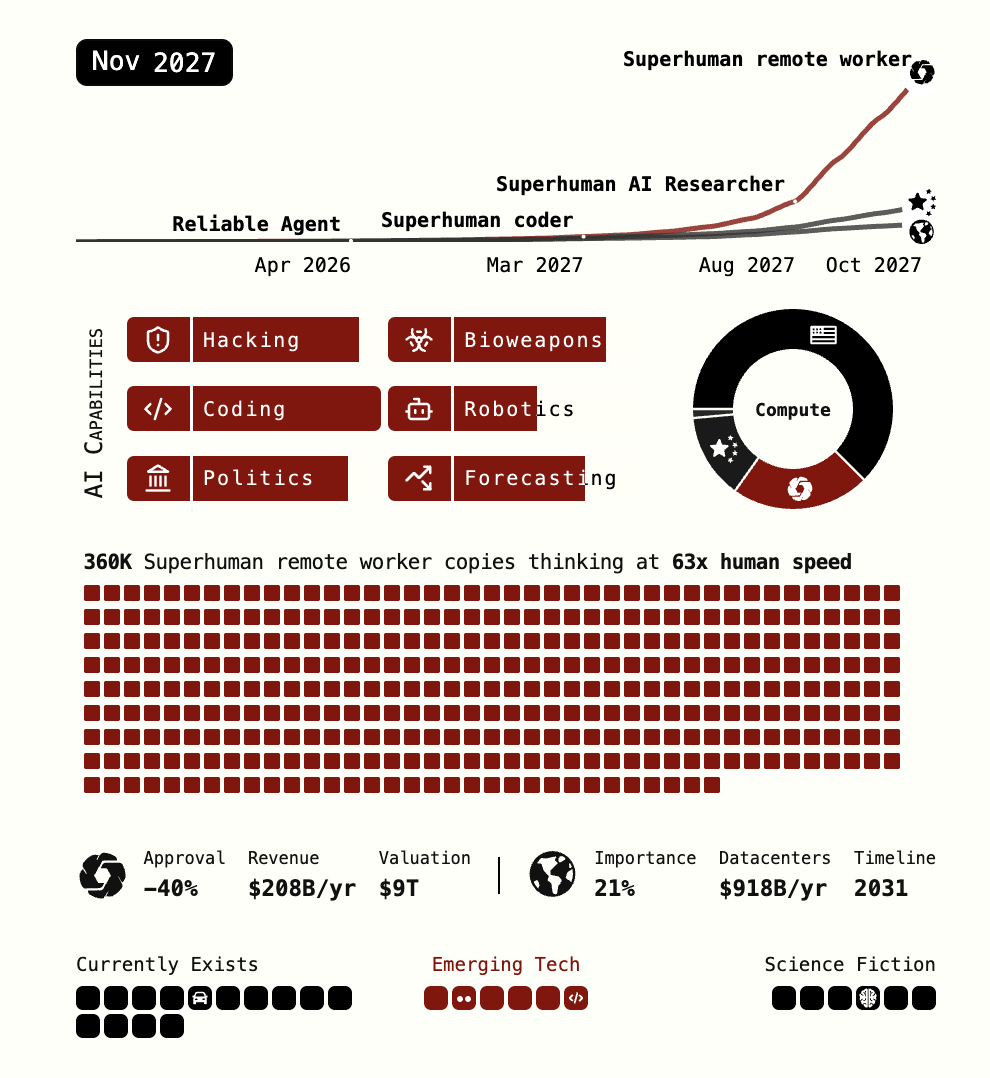

Fig: Bioweapon-capable AI in late 2027

Early-2029

- The Zekavirus has now spread rapidly worldwide. Just like in the last pandemic, supply chains buckle again, hospitals overflow with both patients and robots, and food shortages are startling. This new pandemic boosts the demand for AI and AI research by at least 10-fold. Zeta profits enormously through the pharma company with which it had secretly collaborated to make the virus, as that company is producing the most effective drugs and vaccines to cure people and kill the virus.
- OpenBrain and its rivals see increasing worldwide demand for AI and humanoid robots for everything from automated supply chains to automated food production, restaurants, and even cooking. Contrary to what Zeta had predicted, OpenBrain’s models are now more in demand. But Zeta seems fine with this as long as it profits from the pandemic via the pharma company and captures a modest share of the market for AI-based products.
- China’s obsession with control has allowed it to create an AI that is much more controllable than US-based AIs, and its integration of AI with hardware systems seems to work better than the US's at a larger scale.
- The widespread deployment of OpenBrain’s superintelligent but misaligned and not fully controllable AIs again leads to a nationwide mishap: AI robots are involved in another major incident, such as killings caused by malfunctioning robotic arms, autonomous drones, or humanoid robots. The US Congress now classifies such incidents as a crime for which the AI development company is to blame (this is unlikely to actually happen, but there would at least be similar drafts in the making, even if not implemented). OpenBrain tries to cover it up, but the cat is already out of the bag.
- OpenBrain promises that such an incident will not happen again, but it is too late. With Trump leaving office in January 2029, to OpenBrain’s horror, the new President seizes all of OpenBrain's models and declares them national property affecting national security. He tries vigorously to persuade China to pause the AI arms race and take steps towards reducing compute. China does not seem interested in doing that anytime soon. But as the pandemic's death toll rises horrifically while the Chinese AI models struggle to find a cure for the virus, China finally agrees with the US to pause AI for now and systematically reduce compute in exchange for drugs and vaccines from the US.
- International treaties follow to pause or reduce compute, putting much of AI research on hold, with companies forced to focus on “greener” uses of AI and on AI safety. No one can tell for sure whether countries or companies have really stopped AI research. But on a practical level, AI seems largely under control for at least the next 5 years, buying humanity enough time to grapple with the post-AGI, superintelligent world.

My main point in adding a pandemic scenario is this: if, while building a superintelligent AI, AI companies unlock the ability to craft new viruses and bioweapons, there is a huge incentive for them (and even more so for the trailing AI companies) to go ahead and secretly do so, especially when they are so minimally regulated. Not only do they benefit from the pandemic through the pharma company if they collaborate well, they also benefit from the increased demand and need for AI adoption that the pandemic creates. I argue that it is much more likely that an internal actor in an AI company will craft a bioweapon long before there is any kind of scenario in which an AI secretly crafts a bioweapon that silently kills humanity, as in AI 2027’s Race ending.

With a change in the US presidency likely to happen in early 2029, humans just have to somehow manage to coordinate well and strengthen their defenses until then (and, most importantly, avoid any nuclear catastrophe caused by miscommunication through malfunctioning AI-based weapon or military systems). After that, we would hopefully have more time to deal effectively with a near-superintelligence or post-superintelligence world.
