Published on July 13, 2025 8:37 PM GMT

Epistemic status: This is an intuition pump for why making LLMs multimodal is helpful. I am ~70% confident that the later claims which build on it are at least somewhat correct. This article was written over an unclear number of hours (20-50?) and red-teamed by GPT-4o.

I recently went to a festival at a Buddhist temple in Korea. There was a range of festive activities: lamp making, tea tasting, calligraphy and the like. Chairs were set up in front of a stage by the big central prayer hall, where the main event was held. A speaker stood up and started talking to us in Korean. Not speaking Korean, I distracted myself with a leaflet I'd found on my seat. Having learned the Korean alphabet, I was able to work out approximate sounds for some of the symbols in front of me. However, I had no concept of their meaning. It was at this point that I realized just how difficult a task traditional large language models are given.

When we first learn to speak as small children, we are shown objects and learn to associate them with sounds made by our parents. My first word was "car", which I presumably figured out by associating the sounds "c", "a" and "r" with the thing with wheels. Unfortunately, this was not an entirely correct generalization, as to my infant mind the dishwasher tray also counted as a "car", but points for trying nevertheless. Indeed, I am now in Vietnam and am realising just how hard it is to learn a language even in the presence of reference objects. Sure, you can in principle figure out the word for "table", given that it may be said in the presence of the object, but good luck picking it out in the midst of drunken Vietnamese banter during a rowdy evening with locals[1].

My point is that both of the tasks I've mentioned are significantly easier than the standard LLM next-token prediction task. In Vietnamese conversation I can use situational clues to build up knowledge of which words are used when, noticing for instance that "mot hai ba yo!" (roughly "one, two, three, cheers!") always comes before the clinking of glasses and drinking. With the passage of Korean, I didn't know where to start. What does this word mean? Giraffe? Quaint? Sticky toffee pudding? It's shocking how hard it is to deduce anything from text like this. There's no context you can use when the entire context is equally meaningless. And it gets worse: I would still expect most of the words on the page to refer to concepts I had prior experience with, in a way that just cannot be the case for a large language model, which has never seen, heard or touched the world it describes.

Think about this for a minute: you wake up with no knowledge of anything. You're given a sequence of tokens and told to predict what comes next. 58, 2, 74, 578, fill in the blank. 78? Wrong, it was actually 43[2].

Sure, you can in principle make progress. Grammar is probably the simplest thing to start noticing: 34 (I) is regularly followed by 51 (am). Maybe over time you're able to connect nouns to adjectives: 765 (elephant) seems to relate to 383 (big). I think language models have quite clearly demonstrated that it is possible to become very good at this, but think for a moment how it would feel to have no concept of a word other than its relation to other words. Even the blind men in the parable of the blind men and the elephant had a sense of touch through which to know the elephant.
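To make the "start with grammar" step concrete, here is a minimal sketch of the crudest possible version of it: counting which token ID tends to follow which, with no access to what any ID means. This is my own illustration, not how modern LLMs are actually trained (they learn these statistics with neural networks, not lookup tables). The function names and the token stream are hypothetical; only the 34 (I) → 51 (am) pairing comes from the example above.

```python
from collections import Counter, defaultdict


def bigram_counts(tokens):
    """Count how often each token ID is immediately followed by each other ID."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    followers = counts.get(token)  # .get does not trigger the defaultdict factory
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical token stream: 34 ("I") and 51 ("am") follow the example in the text;
# the other IDs are made-up filler with no intended meaning.
stream = [34, 51, 12, 7, 34, 51, 99, 34, 51, 12]
counts = bigram_counts(stream)
print(predict_next(counts, 34))   # 51
print(predict_next(counts, 578))  # None: never seen, so no guess at all
```

Notice that nothing in this procedure ever learns what 34 or 51 *refers to*; it only learns that they keep each other company.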

Presumably this goes a long way towards explaining why most modern LLMs are multimodal, accepting images as well as text: it's a lot easier to understand how an elephant fits together from an image than from text. As an example, here is the elephant's Wikipedia description:

> Distinctive features of elephants include a long proboscis called a trunk, tusks, large ear flaps, pillar-like legs, and tough but sensitive grey skin. The trunk is prehensile, bringing food and water to the mouth and grasping objects. Tusks, which are derived from the incisor teeth, serve both as weapons and as tools for moving objects and digging. The large ear flaps assist in maintaining a constant body temperature as well as in communication. African elephants have larger ears and concave backs, whereas Asian elephants have smaller ears and convex or level backs.

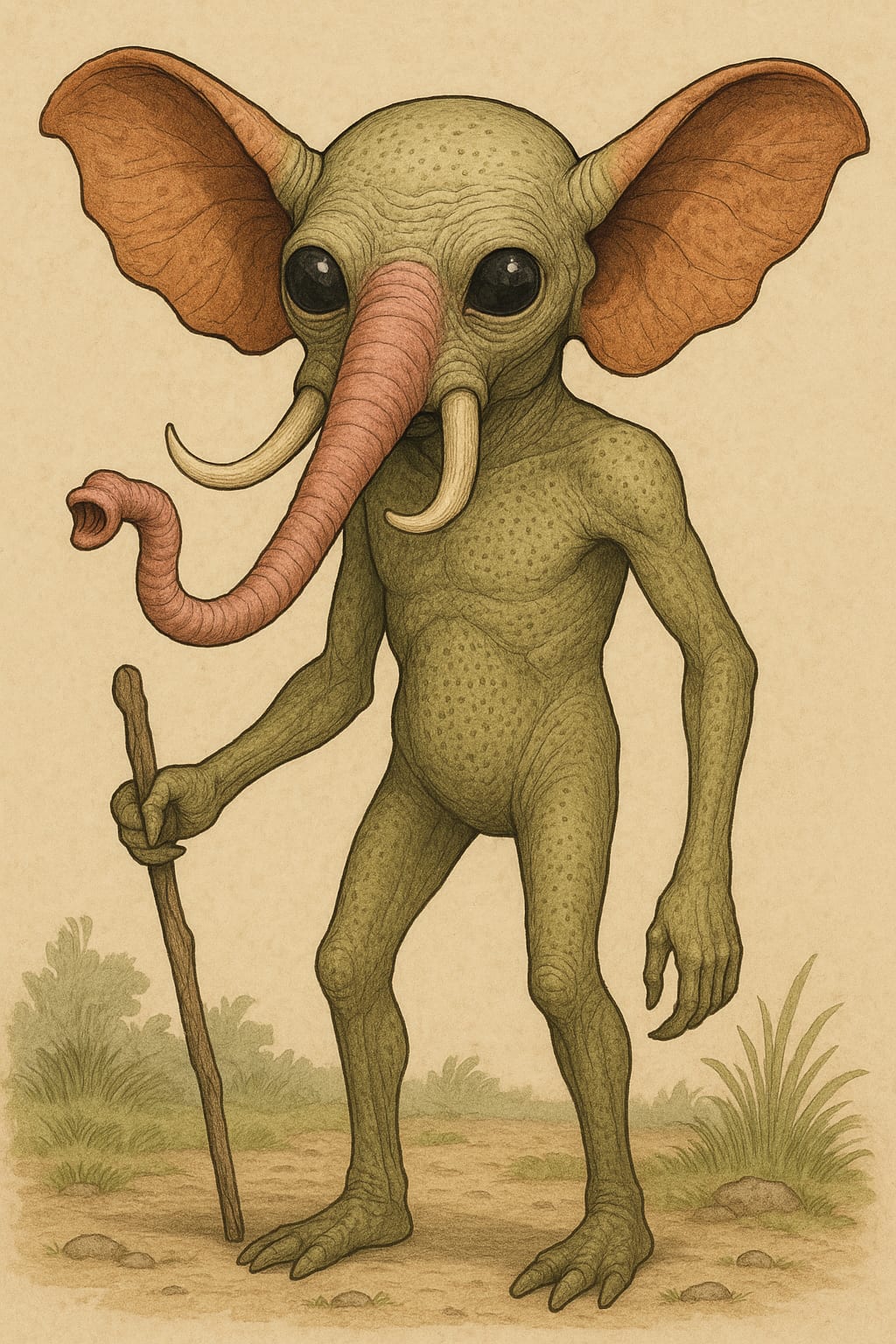

And here is an image which fits the description[3]:

Obviously language models have access to a ton more data, but they also lack an easily accessible model of the world. Even creating this image relies on the implicit assumption that we already know what "trunks", "tusks" and "ears" are. Note that I am not arguing it is impossible to figure these relations out; in the limit of infinite compute, data, etc., it may well be possible. Rather, this is meant as an intuition pump for the fact that looking at things through a different mode of input gives a fundamentally different perspective. From this new perspective, many things which were not previously clear are likely to become so.

So what happens now that we have multimodal models? Well, they still mostly only have access to text and images. This is a long way from the information humans have at our disposal, so let's do a comparison. Let us imagine that we've understood language and images well enough to match the labels to the images, understand grammar and adjectives and that sort of thing. What might be limiting us now?

Imagine you are given a few videos of a completely alien species which moves using a 4th dimension of space unknown to us, and are tasked with creating a simple explanation of how it moves[4]. You might be able to, but it would be a hell of a lot easier if you had direct experience of the extra dimension. In the same way, our AI trained on text and images has no real experience of 3D space, or for that matter of time (save possibly a few sets of images taken one after another). This immediately makes a lot of things which happen in 3 dimensions of space and 1 dimension of time very counterintuitive. I feel like this is true regardless of whether a human or an AI is doing the learning: it is easier to learn about a phenomenon which is directly observed than one which has to be inferred from other data.

Naturally, different forms of input will be useful in different contexts: I doubt giving AI a sense of smell will help with its coding, though it might well improve its cooking. However, I do expect many new forms of input to produce new capabilities simply by giving a new lens through which to look at the world. Notably, all human senses must have evolved under some form of evolutionary pressure and must therefore have been helpful in the ancestral environment. While it does not directly follow, I imagine that giving AI access to human senses would unlock new capabilities, if only by helping it understand humans.

As it stands, the key gaps between the inputs[5] that humans have and the inputs that modern AI has seem to me to be the following[6]:

- Sound (for most models)
- Continuous motion through space
- Continuous motion through time
- Touch
- Temperature
- Smell/Taste
- Proprioception (sense of where our body is in space)
- Nociception (pain)

The first 3 can probably come from video, and I imagine understanding cause and effect, the flow of time and interactions between objects more generally is much easier if long series of events can be experienced in one go. Placing a model in a virtual world and letting it interact with objects also seems like it would help with notions of motion through space and time.

I should probably pause here to recognise that there are challenges inherent to actually training on different modes of data properly, and it is not obvious how to design a neural network to integrate varied data into a system which can process them together cleanly. Notably, I think the later items on the list would most likely be harder to implement and give more marginal improvements (as well as having significantly less readily available data).

However, I don't think many of these challenges are more difficult than standard AI research. For instance, I expect it will be much easier for frontier AI companies to fully integrate video data into the LLM training pipeline than it will be for alignment researchers to make comparable progress on the alignment problem. I also feel that many of the flaws in LLMs commonly pointed out by sceptics can, in various ways, be traced back to a lack of experience with "the real world". In particular, solving many of the ARC-AGI-2 problems seems to require something like moving the "objects" in the image around, a difficult concept for anything that has never seen anything move.

Now, we've discussed how AI is currently held back compared to humans by its lack of senses, but in the future it may well push beyond them. We are in Plato's cave ourselves, limited in our perception of the world by the tools given to us by evolution, which has endowed other animals with a variety of other modes of input: echolocation, electroreception and infrared vision, to name just a few[7].

We also need not limit ourselves solely to extant senses: future AI should equally be able to think natively about radio waves and X-rays, weather data and just about any other form of data. Each new mode of input gives a new perspective, and with it come new capabilities and insights. I have a friend who is blind but plays ice hockey remarkably well. I imagine she would play even better with her sight restored, and the ability to echolocate the puck and other players would probably help in other, novel ways.

I think this is one of the intuitions people have when they say we won't reach AGI solely by scaling up LLMs. It's not that it's impossible in principle; it's that it takes several quorpleflops[8] of compute to recreate intuitions that are much more easily derived from other sources. On the other hand, if AI can already do so much while being so limited, significant progress may be coming in the near future. For the doomers among us (myself included), this is bad news.

- ^

I am so much more grateful now for the existence of Duolingo.

- ^

Quick warning not to try to look for a pattern, I am very much making these up on the spot. Unless it amuses you, in which case do whatever you want.

- ^

I tried to create something even more cursed, but ChatGPT couldn't generate it for me. I think there's a lot more you could fiddle with to make this look less like an elephant while still fitting the description.

- ^

Physicists out there, please don't shoot me. I know that there are very strong reasons not to believe in more than 3 large dimensions, but this was the best illustration I could think of.

- ^

Here taken to mean "something experienced at some point in the training data"

- ^

It is possible I have missed some. If so, please tell me and I'll add them.

- ^

There's an entire discussion to be had here about the possibility of forms of subjective experience completely separate from anything humans have experienced, but I will leave that for another time.

- ^

My submission for a new word meaning "way more flops than should ever be used on anything by any reasonable person"
