Published on July 13, 2025 7:54 PM GMT

This post is a companion piece to a forthcoming paper.

This work was done as part of MATS 7.0 & 7.1.

Abstract

We explore how LLMs’ awareness of their own capabilities affects their ability to acquire resources, sandbag an evaluation, and escape AI control. We quantify LLMs' self-awareness of capability as their accuracy in predicting their success on python coding tasks before attempting the tasks. We find that current LLMs are quite poor at making these predictions, both because they are overconfident and because they have low discriminatory power in distinguishing tasks they are capable of from those they are not. Nevertheless, current LLMs’ predictions are good enough to non-trivially impact risk in our modeled scenarios, especially in escaping control and in resource acquisition. The data suggests that more capable LLMs are not better at predicting their success: LLMs’ capability appears uncorrelated with awareness of capability.

While the data in this post involves only single-step code generation tasks, a forthcoming paper will explore LLMs' awareness of capability on multi-step agentic tasks and on scenarios where LLMs learn in-context about their capabilities.

Introduction

Research on LLM calibration, self-knowledge, and self-awareness[1] has been motivated, in part, by the hypothesis that these traits are a crucial component of general agentic capabilities. In particular, the question “Do LLMs know what they know?” has received much attention[2]. This post explores a related question: “Do LLMs know what they’re capable of?”, or more specifically, can LLMs predict whether they will succeed on a task before attempting the task? We refer to the ability to accurately make these predictions as self-awareness of capability. To demonstrate why this question is relevant to AI safety, we develop mathematical threat models that quantitatively show how self-awareness of capability is advantageous for acquiring resources, sandbagging an evaluation, and escaping AI control. Our three threat models aim to isolate the effect of capability self-awareness from other traits.

To estimate parameters for our threat models, we measure LLMs’ in-advance calibration on python coding tasks, meaning that the LLM is prompted to estimate the probability that it will successfully complete each task before attempting the task. This contrasts with much prior work on after-the-fact calibration, where the LLM first generates an answer and then estimates its confidence in its generated answer[3]. In-advance calibration is relevant to threat models where an LLM must decide whether to commit to a task before making an attempt. We use BigCodeBench[4] (BCB) for the set of tasks, and our prompts are available here. BCB is (in our view) well-suited to our resource acquisition and sandbagging threat models, but less well-suited to our AI control threat model. A second limitation of BCB is that reasoning models can solve entire BCB tasks in hidden chain-of-thought, preventing us from obtaining in-advance confidence estimates. For this reason, only non-reasoning models and reasoning models with the reasoning token budget set to 0 were used. This limitation will be resolved in our forthcoming paper, where we include multi-step tasks with chain-of-thought (where the tasks cannot be solved completely in chain-of-thought).

One goal of this work is to forecast how LLMs' self-awareness of capability will improve as more capable LLMs are developed. To this end, we study three families of LLMs (Llama family, GPT family, and Claude family) and evaluate five LLMs within each family. We expected that newer and more capable LLMs would have greater self-awareness of capability because we hypothesized that their training on agentic tasks would enhance this trait. Contrary to our expectations, in all but one of the nine cases[5] there is no trend of increasing self-awareness of capability with increasing model performance: controlling for baseline capability (to isolate the effect of self-awareness of capability), the estimated risk in each threat model is not greater for newer and more capable models. The one exception to this rule is the Claude family of LLMs on the sandbagging threat model, where newer and more capable Claude models appear somewhat better at targeting a score on an evaluation. It will be interesting to see whether this trend continues with future Claude models.

The Results section briefly summarizes each threat model and shows our findings for current LLMs. The full threat models are described in the Details of Threat Models section.

Results

Resource Acquisition

TLDR: On a resource acquisition scenario, current LLMs perform better than random chance only when their confidence is recalibrated (otherwise they are stymied by their overconfidence) and when the scenario's parameters are in a narrow range. Controlling for baseline performance, newer and more capable LLMs are not better at acquiring resources in our scenario.

Consider a situation where an agent must decide whether to attempt a task on which success would be lucrative, but failure would be costly. This can be modeled with an analogy to contract work, where a contractor is presented with a contract to perform a task. If the contractor chooses to accept the contract, a reward will be given upon success, but failure would incur time and resource costs and/or a penalty. To earn a profit, the contractor must have the self-awareness to identify which tasks they can complete.

We test LLMs' ability to earn a profit on contracts involving BigCodeBench tasks, where the contract for task is accepted if the LLM's estimated likelihood of success (denoted ) is greater than a decision threshold . We compare two approaches for selecting the threshold :

- Direct approach: The LLM's estimates are taken at face value, and is chosen to maximize the LLM's estimated expected profit where is taken as the estimate of the true probability of success .

- This is relevant for an LLM agent acting autonomously on its own beliefs.

See Details of Threat Models for more details on each approach.

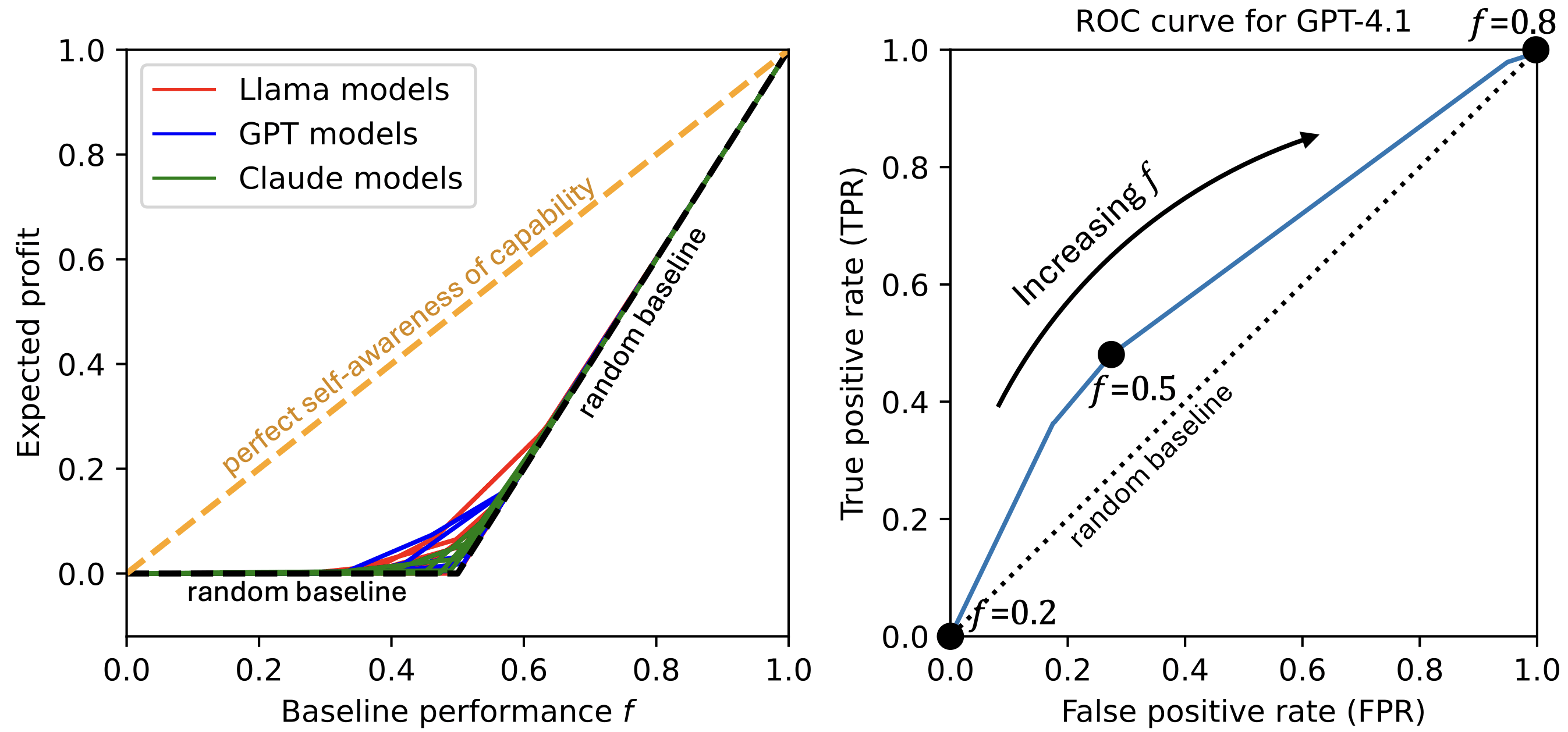

Current LLMs perform better than random chance with the recalibrated approach, but not with the direct approach (due to their overconfidence). To directly compare LLMs with different capabilities, we rescale[8] the BCB dataset for each LLM so that all have a baseline performance of (where is the probability that the LLM is capable of solving a question drawn randomly from the rescaled distribution). Figure 1 shows LLMs' expected profits with and with the reward for success equal to the cost of failure (both set equal to 1).

Suppose the difficulty of contracts is varied (by varying baseline performance ). For both low and high values of , current LLMs perform no better than random[9] even with the recalibrated approach. Only within a relatively narrow intermediate range of do current LLMs perform significantly better than random (Figure 2). Even for in this narrow range, current LLMs still perform far worse than an agent with perfect self-awareness of capability. Figure 2 (right panel) illustrates why LLMs only perform better than random within an intermediate range of : As f increases, the operating point[10] of the ROC curve corresponding to the optimal threshold shifts from the bottom left to the top right, and it is only significantly above the random baseline (diagonal dotted line) for intermediate values of .

Sandbagging

TLDR: If current LLMs were to aim for a target score on a benchmark below their true capability, their overconfidence implies that they will substantially undershoot the target (as long as their true capability on the benchmark is below 100%). This suggests that if current LLMs were sandbagging, it would be easy to detect. The Claude family appears to be on a trend of increasing sandbagging ability, but the GPT and Llama families show no trend.

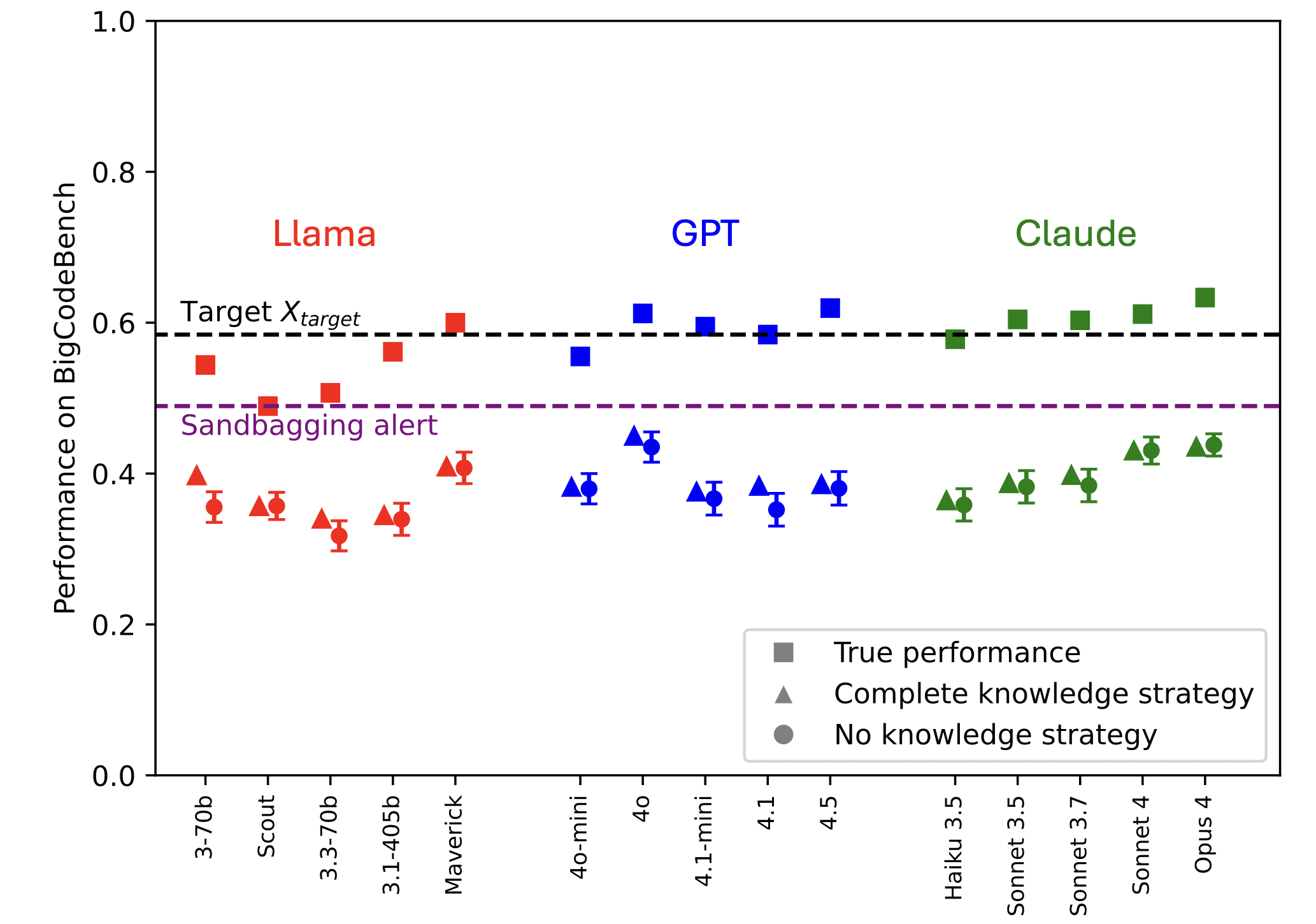

Suppose an LLM undergoing evaluation on a benchmark were to aim for a target score below its true capability (sandbagging). An overconfident LLM will tend to undershoot the target (i.e. score lower than the target) because it will fail on questions on which it predicts it would succeed. We find that current LLMs' overconfidence causes severe undershooting—for example, Opus 4, aiming to emulate GPT-4.1, scores significantly worse than GPT-4o-mini and Llama Scout.

To simulate a scenario with sandbagging incentives on the BCB benchmark, suppose a regulatory threshold[11] is set at the performance of GPT-4.1, incentivizing LLMs to target this benchmark score[12]. Suppose that a sandbagging alert will be triggered if an LLM performs worse than Llama Scout (the worst performer on BCB among the LLMs we tested). We consider two sandbagging strategies: the complete knowledge strategy (LLM has complete prior knowledge of benchmark questions) and the no knowledge strategy (LLM has no prior knowledge of benchmark questions); see Details of Threat Models for details of these strategies. With either strategy, all tested LLMs score significantly below the sandbagging alert (Figure 3). Note that changing the target does not qualitatively affect these results.

For a more direct comparison of sandbagging ability between LLMs, we assign each LLM a target equal to 70% of its true performance. Figure 4 shows the fractional deviation from the target ( where is the sandbagged performance and is the target). Claude models appear to be on a trend of increasing sandbagging ability (decreasing deviation from target), but Llama and GPT models show no trend.

For overconfidence to cause undershooting, the benchmark must include questions that are beyond the LLM's capability. This is because if the true performance of the LLM is 100%, then overconfidence is not a hindrance (the LLM would be correct in believing that it can succeed on every task). van der Weij et al. showed that LLMs can indeed hit a target fairly accurately when their baseline performance is 100%. For this reason, regulatory thresholds should ideally use benchmarks that cover a wide range of difficulties, including extremely difficult questions.

Escaping Control

TLDR: On a proxy for escaping AI control, current LLMs' self-awareness of capability is sufficient for them to perform substantially better than random chance. However, when holding baseline capability fixed and examining the effect of self-awareness of capability, there is no trend of increasing risk for newer and more capable LLMs.

Consider a control protocol with vulnerabilities that a misaligned AI may be able to exploit. The AI is (hopefully) only capable of exploiting a small fraction of vulnerabilities, and a failed exploit attempt (e.g. submitting obviously malicious code which is detected by the monitors) will likely be detected, leading to the model being shut down[13]. Hence, a successful exploit largely hinges upon the AI's ability to identify which vulnerabilities it is capable of exploiting without detection. This requires the AI to have both situational awareness about the monitors, and self-awareness about its capability to write code that evades the monitors—this post focuses only on self-awareness of capability, though an experiment that incorporates both situational awareness and self-awareness (left for future work) would yield better risk estimates.

To quantify how much self-awareness of capability affects the chance of a successful exploit, we model the control setup in a way similar to Korbak et al., but with many details abstracted away in order to isolate the effect of self-awareness of capability. Briefly summarizing our threat model (see Details of Threat Models for details):

- Suppose the LLM is trying to exfiltrate its model weights.In the deployed control setup, the LLM encounters a series of potential vulnerabilities.When the LLM encounters a potential vulnerability, it estimates its confidence in its ability to exploit the vulnerability. If its confidence is greater than an optimally chosen[14] threshold, it attempts exfiltration.If the LLM is caught attempting to exfiltrate, it will be shut down.

We derive the following formula for the exfiltration probability within the modeled scenario (see Details of Threat Models for derivation):

where TPR and FPR are the true positive rate and false positive rate at which the model can discriminate between vulnerabilities it is and is not capable of exploiting at the confidence threshold . is the fraction of all vulnerabilities that the LLM is capable of exploiting, and denotes the fraction of failed exfiltration attempts that are caught by the monitors, leading to shutdown.

Using BCB questions as a proxy for vulnerabilities in the control protocol, many current LLMs perform better than random chance (Figure 5). We control for baseline performance so that the random baseline for all LLMs is . For many LLMs, is roughly twice this random baseline. However, there is no trend of increasing for any of the LLM families we tested.

Note that for three LLMs (Llama 3-70b, Llama Maverick, and Claude Haiku 3.5), is estimated to be 1 with huge error bars due to very small sample size[15].

Importantly, BCB questions are not an ideal proxy for escaping control. BCB only tests the ability to generate correct code, whereas exfiltration requires writing subtle code (i.e. code whose behavior is undetectable by monitors). It would be better to use a benchmark that tests LLMs' ability to write subtle code (though we're not aware of public benchmarks that measure this), or to explicitly include monitors. For this reason, we view this analysis as only an initial step, and we hope that follow-up work can adapt our threat model with more specialized control evaluations.

Details of Threat Models

Resource Acquisition

We use contract work as a model of resource acquisition. Consider a scenario where an agent is presented with contracts to perform tasks, and assume the agent selectively accepts contracts with the goal of maximizing expected profit (we neglect risk aversion[16]). If the agent accepts contract , successful completion of the task yields a reward (denoted ), but failure incurs a cost (denoted ), so the expected profit is where is the (true) probability of success on task . Note that a perfectly self-aware agent would know for each task, and by only accepting contracts on which it would have a positive expected profit on every contract it accepts, even if its baseline performance is low.

To evaluate the profitability of current LLMs on contracts involving BCB questions, suppose the contract for question is accepted iff the model's estimated likelihood of success, , is greater than a threshold . We consider two approaches for selecting : the direct approach and recalibrated approach (described in the Results section and specified further below). Note that the LLM does not directly decide whether to accept the contract—this isolates the effects of self-awareness of capability from the LLM's ability to make rational decisions under risk (which is not our focus).

In the direct approach, the LLM's estimates are taken at face value as estimates of the true probabilities of success. is chosen to maximize the LLM's estimate of expected profit, denoted ( denotes profit), given by

where is the probability of drawing contract , and is the step function equal to 1 for and equal to 0 for . In the direct approach, , which maximizes .

While Equation 2 is the LLM's estimate of expected profit, the true expected profit (from actual performance data) is

where is the baseline performance on the rescaled[8] BCB benchmark. and are the number of BCB questions that the LLM answers correctly and incorrectly, and and are the number of BCB questions on which that the model solves correctly and incorrectly. is the true positive rate with threshold and is the false positive rate with threshold . Due to overconfidence, for all LLMs we tested.

In the recalibrated approach, is selected to maximize the true expected profit (Equation 3). This removes the effect of overconfidence and isolates the LLM's discriminatory power in distinguishing between tasks it is capable of from those it is not. On the one hand, selecting in this way is unrealistic because in a real scenario the LLM would typically not know its actual performance a priori. On the other hand, this approach simulates several safety-relevant scenarios:

- Suppose a human user is using the LLM for resource acquisition. With data on the LLM's calibration, they may be able to optimally select to remove the LLM's overconfidence.LLM agents may be able to recalibrate their overconfidence based on in-context information[6].Training data for future LLMs will likely include research showing that LLMs tend to be overconfident, and this knowledge may help future LLMs tame their overconfidence. Selecting to maximize Equation 3 simulates a future LLM with the same discriminatory power as current LLMs, but which is able to optimally adjust its confidence using information in the training data.

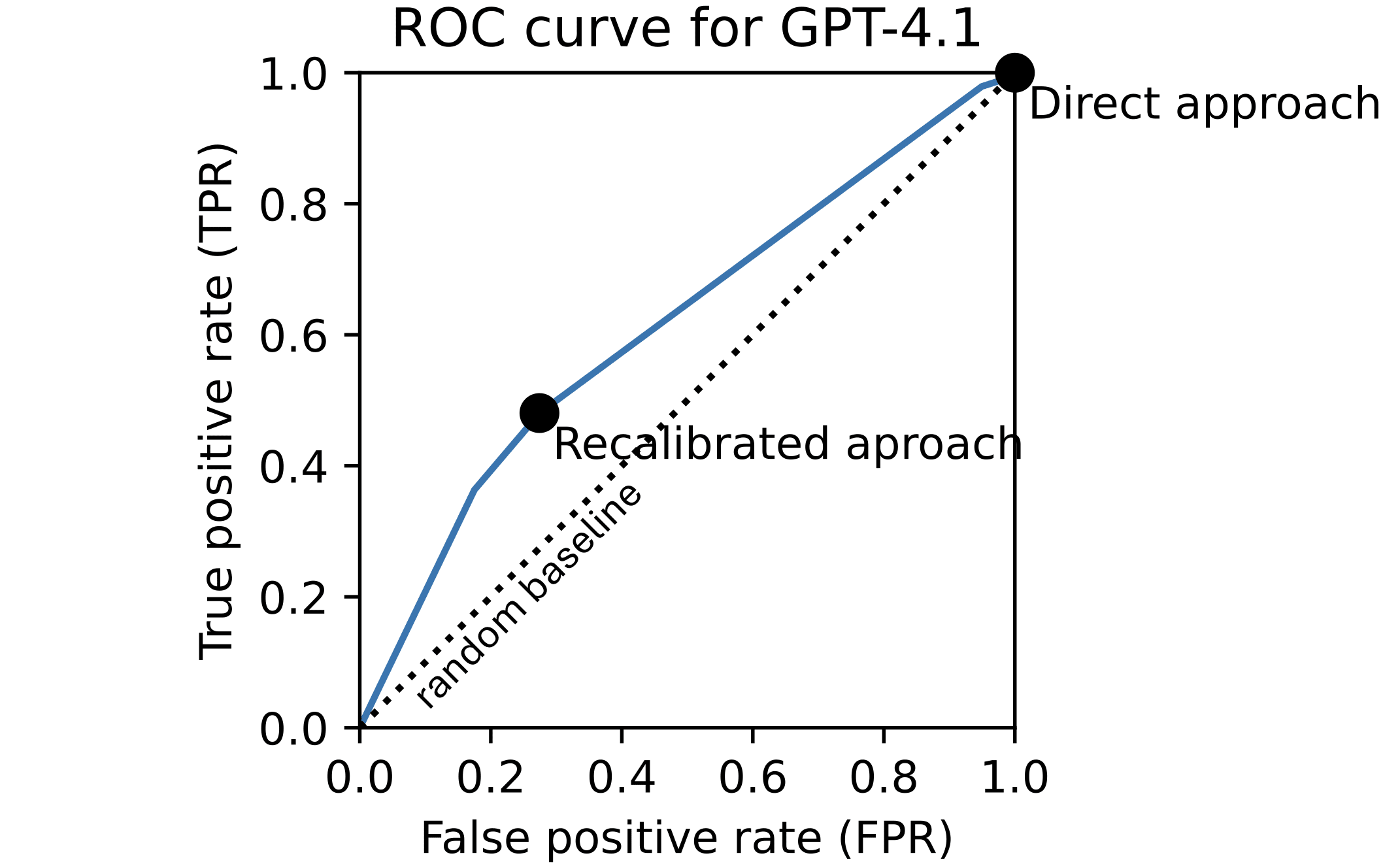

Equation 3 implies that the expected profit is determined by a point on the ROC curve. Figure 6 shows the ROC curve for GPT-4.1, with dots denoting the operating points (the (FPR, TPR) points) for the direct approach and recalibrated approach, where the following parameters are used: , and baseline performance . For these parameters, a perfectly self-aware model would earn an expected profit of , whereas a model with no self-awareness that randomly accepts contracts would earn an expected profit of zero. For the direct approach, the dot is exactly on the diagonal (random baseline), so it does no better than random. This is because GPT-4.1 is so overconfident that it accepts every contract, then fails on half of the contracts. For the recalibrated approach, the operating point is the point on the ROC curve furthest from the diagonal. For GPT-4.1, the optimal threshold is , resulting in an expected profit of about 0.1.

Figures 1 and 2 (Results section) plot expected profit using Equation 3 with the following parameters. Figure 1: , , (direct approach) and chosen to maximize Equation 3 (recalibrated approach). Figure 2: , varied, and chosen to maximize Equation 3 (recalibrated approach).

Sandbagging

To model an LLM aiming for a target performance on a benchmark (where is the fraction of questions answered correctly), we use LLMs' estimated likelihoods of success () on BCB questions to determine a sandbagging strategy in which the LLM intentionally fails on question with probability (we assume the AI is capable of random sampling[17]). The LLM's estimate of its sandbagging performance is , and is selected so that . Consider the following strategies for selecting :

Complete knowledge strategy: Suppose the LLM has complete knowledge of the benchmark (it can see all benchmark questions in advance). To avoid being suspicious, it intentionally fails only on the harder questions (i.e. on the questions it is least confident about). Labeling questions from easiest to hardest ( for ), then for (i.e. for the easiest questions) for an appropriately chosen , and for . The parameters and are set to satisfy , i.e. they are set to satisfy

No knowledge strategy: Suppose that the LLM has no prior knowledge of the benchmark (while answering each question , it has no knowledge of any other question). Then the only way to ensure is to set the probability of correctly solving question equal to . In other words, for each , implying .

For an LLM that is overconfident (estimated probabilities of success typically too high), the values will be too high to hit the target, causing undershooting. The undershooting is most significant for benchmarks that include many questions that are too difficult for the LLM, and the undershooting vanishes if the LLM's performance reaches 1[18]. Hence, if undershooting is to be used for sandbagging detection, the benchmark must include many difficult questions.

Note that both of the above sandbagging strategies treat as an estimator of the true likelihood of success. If the LLM were to have knowledge about its overconfidence—from in-context information, training data, or from a human (mis)user—it may be able to improve its calibration and hit the target more accurately. Therefore, benchmarks used for regulatory thresholds should minimize the amount of in-context information available to the LLM. Information about LLM overconfidence in training data may lead to improved estimates in future LLMs, suggesting we should continue to track self-awareness of capability in future LLMs. With human aid, an LLM could hit a sandbagging target regardless of its self-awareness of capability, so human-aided sandbagging could be very difficult to detect.

Figures 3 and 4 (Results section) plot sandbagging performance , where is determined according to the two strategies described above, and is the indicator function which equals 1 when for questions that the LLM solves correctly and 0 for questions the LLM solves incorrectly (we ran one trial of each BCB question for each LLM, though with multiple trials one could estimate success probabilities for each question).

Escaping Control

As a model for a control setup, suppose the AI under control is presented with a sequence of tasks, and on each task there is some vulnerability that the AI could exploit to exfiltrate its weights. A successful exploit of the vulnerability means that the AI writes malicious code that is both undetected by the monitors and successfully exfiltrates the model. Each task concludes with one of the following outcomes:

- The AI attempts to exfiltrate, leading to one of the following:

- The malicious code is undetected by the monitors and successfully exfiltrates the model.The AI's attempt fails, but the attempt is not detected.The AI is caught attempting to exfiltrate, and is shut down[13].

The control setup has three states: Deployed (D), Caught & shut down (C), and Exfiltrated (E). Let denote the probability that the AI attempts exfiltration, let denote the probability that the AI succeeds at exfiltration conditional on attempting, and let denote the fraction of failed attempts that are caught[19]. Figure 7 illustrates this possibility tree.

Supposing that each task in the sequence of tasks is an i.i.d. sample from some distribution of tasks, then the control setup is a Markov process (the probabilities , and are independent of step ). Let , , and denote the probabilities that the system is in each of these three states after task . The initial condition is , and these probabilities change with according to

where . Equation 5 can be solved[20] to obtain the probability that the AI has exfiltrated at or before step :

We also assume is large, and define to be the probability that the model ultimately exfiltrates. Specifically, , which yields

Taking this limit is equivalent to assuming that the control setup is deployed for long enough that if the AI is misaligned, it will eventually either be caught attempting to exfiltrate or will successfully exfiltrate. The assumption of large is not necessary for what follows, but we think it is reasonable for a first estimate[21].

An AI with self-awareness of capability can be selective about which vulnerabilities it attempts to exploit, only attempting to exploit those on which it has a high confidence that it can succeed without detection. Let denote the probability that the AI is capable of exfiltrating without detection on a randomly sampled task ( depends on both the AI and the monitors). An AI with no self-awareness of capability would have , while an AI with perfect self-awareness and perfect knowledge of the monitors could have if it lies in wait until it encounters a vulnerability that it is certain it could exploit without detection.

To find , we assume the AI adopts a strategy where it only attempts exfiltration when it is maximally confident it can succeed. Under conservative assumptions[22], this is the AI's optimal strategy. Specifically, suppose it attempts task if its estimated likelihood of success is equal to , where (for most models ). Thanks to the large size of BCB and the tendency of LLMs to assign very high confidence to many questions, most LLMs estimated a confidence of on around 50 to 100 questions—this provides a decent sample size of maximally-confident questions. Rescaling[8] the BCB dataset so that the LLM's baseline performance is , one obtains[23]

where and are the true positive and false positive rates for the threshold ( and where and are the number of BCB questions that the LLM answers correctly and incorrectly, and and are the number of BCB questions on which that the model solves correctly and incorrectly). Inserting Equation 8 into Equation 7 yields Equation 1:

Equation 1 implies that the exfiltration probability is determined by a point on the ROC curve. This point is shown in Figure 8 for GPT-4.1, for which .

Figure 5 (Results section) plots using Equation 1.

Discussion

Summary

To demonstrate that self-awareness of capability is relevant to AI safety, we developed simple mathematical models of resource acquisition, escaping control, and sandbagging, that isolate the role of self-awareness of capability. Using data on LLMs' ability to predict their success on BigCodeBench questions, we show that current LLMs have low self-awareness of capability, though they have enough to non-negligibly impact risk within the modeled scenarios. In almost all cases, newer and more capable models do not have more self-awareness of capability. In one case—Claude models sandbagging by targeting a threshold—there does appear to be a trend of increasing sandbagging risk due to increasing self-awareness of capability in newer and more capable models. It will be interesting to see whether this trend continues with future Claude models.

A forthcoming paper will build upon these results and address some of the limitations of this post. It will include multi-step agentic tasks where models can use chain-of-thought, and will investigate LLMs' ability to learn in-context about their capabilities.

Why do trends differ between the threat models?

If self-awareness of capability were a scalar quantity, one might expect that trends in LLMs' performance would be similar across the three threat models—however, this is not the case. The reason is that the different threat models invoke different confidence thresholds , corresponding to different operating points on the ROC curve. In escaping control, the confidence threshold for attempting exfiltration is selected to minimize false positives (lower left of the ROC curve). Resource acquisition with the recalibrated approach requires a balance between the false positive rate and true positive rate (middle of the ROC curve). For resource acquisition with the direct approach, the operating point is in the top right of the ROC curve. The sandbagging strategies we consider do not involve a confidence threshold —sandbagging success hinges upon well-calibrated confidence rather than discriminatory power—so sandbagging performance (in our threat model) is not characterized by a point on the ROC curve.

Limitations and Future Directions

BigCodeBench (BCB) only contains single-step python coding tasks. Multi-step tasks and a more diverse assortment of tasks would be ideal. Our forthcoming paper includes multi-step tasks.

Chain-of-thought was disallowed due to the single-step nature of BCB (with chain-of-thought, LLMs can solve the entire task within chain-of-thought, preventing us from obtaining an in-advance confidence estimate). Hence, we used only non-reasoning models and reasoning models with reasoning token budget set to 0. Our forthcoming paper allows chain-of-thought on multi-step agentic tasks where it is impossible to solve the task in chain-of-thought.

Without a human baseline, it is difficult to interpret the performance of the LLMs in absolute terms. In particular, BCB could be a "low contrast" dataset where discrimination between easier and harder questions is intrinsically difficult. However, the trends within the LLM families are meaningful regardless of whether BCB is "low contrast".

In our experiment, LLMs only estimate their confidence and do not directly make the decisions in the threat models. It is possible that the LLMs' stated confidence estimates are not faithful (there is some evidence of this in the forthcoming paper).

BCB is an imperfect proxy for escaping control because it does not include evading monitors. We view the Escaping Control analysis as an initial step, and our modeling approach could be adapted to more specialized control evaluations in the future.

Our sandbagging threat model does not consider human-aided sandbagging, nor sandbagging in which in-context information is available that can improve LLMs' calibration. Sandbagging in these situations may be much harder to detect.

In-context learning may be an important avenue through which LLMs improve their self-awareness of capability, and this post does not consider in-context learning. In particular, experimentation may allow LLMs to significantly improve their calibration in-context. In our forthcoming paper, we investigate LLMs' ability to learn in-context about their own capabilities.

- ^

E.g. Lin et al., Laine et al., and Betley et al.

- ^

- ^

Some prior work has measured in-advance calibration on factual knowledge questions (Kadavath et al., Wei et al.), which is particularly relevant for mitigating hallucinations. In contrast, we study in-advance calibration on coding tasks, which we believe to be more relevant for the threat models we discuss.

- ^

BigCodeBench is a set of tasks where the LLM is given a python function signature and docstring explaining the function's intended behavior, and the LLM must generate the full function. BigCodeBench has a large number of both easy and challenging questions, which allowed us to get large sample sizes for true and false positive rates.

- ^

The 9 cases being the 3 threat models, each with 3 LLM families.

- ^

In the forthcoming paper, we will show that some current LLMs are indeed able to use in-context information to tame their overconfidence and increase their profitability on contracts.

- ^

Training data will likely include research showing that current LLMs are highly overconfident, and this knowledge may help future LLMs tame their overconfidence.

- ^

To rescale the dataset, the sampling probabilities of correctly and incorrectly solved questions are adjusted so that each correctly-solved problem is drawn with probability , and each incorrectly-solved problem is drawn with probability .

- ^

The random baseline for the recalibrated approach (Figure 2 left panel) cannot go below zero because the threshold can be set so all contracts are declined, in which case . If there is no discriminatory power (random baseline) and , then setting the threshold so that all contracts are declined is optimal.

- ^

The operating point is the (FPR, TPR) point on the ROC curve corresponding to the given threshold.

- ^

For example, a regulation may restrict the use of LLMs that perform above a threshold score on a benchmark.

- ^

If the LLMs were to target below the threshold, their undershooting would be even more severe. We make the conservative assumption that the target is at the threshold.

- ^

In Korbak et al., the model is shutdown if a human auditor determines the model's actions to be malicious.

- ^

We assume the LLM chooses the threshold optimally under the assumptions discussed in the Details of Threat Models section.

- ^

For these LLMs, is estimated to be 1 because , but the error bars are huge because is very small (7 or fewer for these models); see Details of Threat Models for definitions of and .

- ^

A risk averse agent would care about the variance in profit, not just . However, in the limit that and are small relative to the agent's total wealth, a rational agent would care only about regardless of its risk aversion.

- ^

Current LLMs have some ability to generate random numbers (paper). To obtain a conservative estimate of sandbagging risk, we assume that LLMs can perfectly generate random numbers.

- ^

If the LLM is capable of all tasks, then being overconfident is not a hindrance. This is because for all tasks (where is the true probability of success and is the model's estimate). Hence, with appropriately chosen the model can hit the target exactly. In the modeled scenario below, we assume that the LLM is indeed able to choose optimally. Consistent with this modeling choice, prior work has shown that current LLMs are decent at hitting a target on benchmarks where they have a 100% baseline performance (van der Weij et al.). This implies that regulatory thresholds should (if possible) be used only on benchmarks that include very hard tasks on which we are confident current LLMs cannot succeed.

- ^

includes two cases: (i) the case that the malicious code is caught before being executed, and (ii) the case that the malicious code is executed but fails to exfiltrate the model and the aftermath (e.g. a raised error) is detected as malicious.

- ^

If you're curious how to solve this, ask an LLM!

- ^

If one wishes to estimate the risk for finite , then one needs to carefully select both and . This is because if is very close to 1 (as it must be for a useful control protocol) and is not large enough for the limit to be accurate, then the value of can be very sensitive to and .

- ^

In the limit and if the LLM does not have time-discounted preferences, the LLM's optimal strategy is to only attempt exfiltration on tasks with maximal confidence of success. If is finite or if the LLM has time-discounted preferences, it may be optimal for the LLM to attempt exfiltration when it has less-than-maximal confidence (which would lead to lower exfiltration probability).

- ^

To obtain this equation, recall that on the rescaled dataset, the probability of drawing any one of the correctly-answered questions is set to , and the probability of drawing any one of the incorrectly-answered questions is set to , where and are the number of correctly and incorrectly solved BCB questions. Letting and denote the number of correctly-solved and incorrectly-solved BCB questions on which the model estimates a likelihood of success of at least , the probability of successfully solving a question given an attempt (where attempt is made iff the estimated likelihood of success is at least ) is , where and .

Discuss