Published on July 11, 2025 8:57 AM GMT

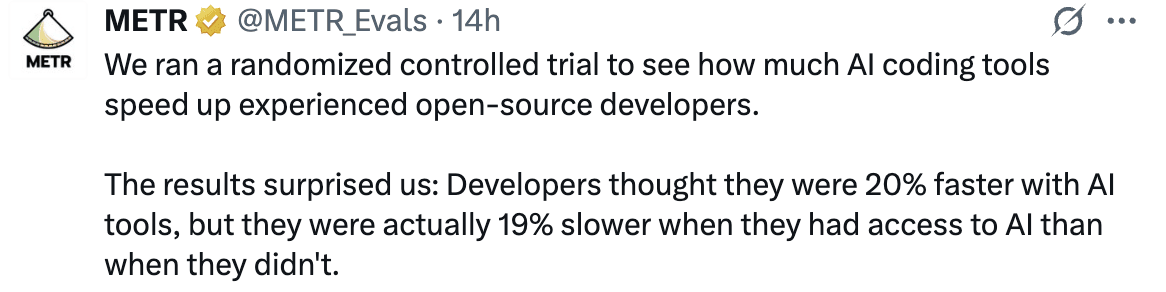

This seems like an important piece of work - an RCT on the use of AI capabilities for developers. The TL;DR of the paper is

The devil is in the details of course. This post by Steve Newman does a good job of working through some of them.

I have highlighted some considerations from it:

The methodology was as follows:

1. METR recruited 16 developers from major open-source projects.
2. Each developer selected a list of coding tasks from their todo list, breaking up large projects into tasks that they could complete in an hour or two. In all, 246 tasks were included in the study.
3. The developers estimated how long it would take them to complete each task (a) under normal conditions, and (b) without using any AI tools. The percentage difference between these figures yields the predicted speedup – the degree to which the developer expected that AI tools would boost their productivity.
4. Each task was randomly assigned to one of two categories: “AI Allowed” (the developer can use any tools they like) or “AI Disallowed” (the developer cannot use AI coding tools or features).
5. The developers went about their work, while recording their screens for later analysis. After each task, they reported the time spent. For AI Allowed tasks, they also estimated how much time AI tools had saved them – the retrodicted speedup.
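To make the predicted-speedup arithmetic and the random assignment concrete, here is a minimal sketch in Python. It is not METR's code. The function names, the example task, and the 50/50 coin flip are illustrative assumptions. The quoted methodology says only "percentage difference", so the convention used here (percent reduction in estimated time relative to the no-AI estimate) is also an assumption.

```python
import random


def predicted_speedup_pct(est_with_ai_min: float, est_without_ai_min: float) -> float:
    """Percent reduction in estimated time when AI tools are allowed.

    Assumed convention: (no-AI estimate - normal estimate) / no-AI estimate.
    The source only says "percentage difference", so this is one plausible reading.
    """
    if est_without_ai_min <= 0:
        raise ValueError("the no-AI estimate must be positive")
    return 100.0 * (est_without_ai_min - est_with_ai_min) / est_without_ai_min


def assign_condition(rng: random.Random) -> str:
    """Randomly place a task in one of the two study categories.

    A uniform coin flip is an assumption; the source only says "randomly assigned".
    """
    return rng.choice(["AI Allowed", "AI Disallowed"])


# Hypothetical task: the developer expects 45 minutes normally, 60 without AI.
task = {"name": "example-task", "est_with_ai_min": 45.0, "est_without_ai_min": 60.0}

rng = random.Random(0)  # seeded only so the sketch is reproducible
task["predicted_speedup_pct"] = predicted_speedup_pct(
    task["est_with_ai_min"], task["est_without_ai_min"]
)
task["condition"] = assign_condition(rng)

print(task["name"], task["predicted_speedup_pct"], task["condition"])
```

The retrodicted speedup could be computed the same way from the developer's after-the-fact estimates, which is what makes the forecast and the hindsight estimate directly comparable.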

Several aspects of the study play to the weaknesses of current tools. First, it was conducted on mature projects with extensive codebases. The average project in the study is over 10 years old and contains over 1 million lines of code – the opposite of “greenfield”. Carrying out a task may require understanding large portions of the codebase, something that current AI tools struggle with. (This may be less a fundamental weakness of AI models, and more a design choice in some coding tools to limit the amount of “context” sent to the model, in order to control costs and get quicker responses.) It also involved editing large files, which may be “out of distribution” for most AI models (i.e. they may not get much training on large files).

Second, most of these open-source projects have strict style guidelines. The experienced developers in the study were accustomed to coding according to their project’s guidelines, but the AI tools are not – thus requiring developers to review and fix the AI’s output.

Third, the developers in the study had years of experience working on their projects, meaning that they were able to work very efficiently – posing a high standard for AI to compete with.

Note that the models used here were Claude 3.5 and 3.7 Sonnet, which were SOTA at the time the study was conducted. I expect things to be slightly different now; my impression from SWE friends is that Opus 4 was a big jump.

