Published on July 9, 2025 2:24 PM GMT

This post is an overview of the topic of anthropic reasoning, mostly based on Nick Bostrom's book Anthropic Bias. If you've read that book, you will not find much new here; I originally wrote this as a prompt for a meetup discussion so that the participants wouldn't have to read the whole book. Perhaps you will find it useful for a similar purpose.

Sleeping Beauty problem

Sleeping Beauty volunteers to undergo the following experiment. On Sunday she is given a drug that sends her to sleep. A fair coin is then tossed just once in the course of the experiment to determine which experimental procedure is undertaken. If the coin comes up heads, Beauty is awakened and interviewed on Monday, and then the experiment ends. If the coin comes up tails, she is awakened and interviewed on Monday, given a second dose of the sleeping drug, and awakened and interviewed again on Tuesday. The experiment then ends on Tuesday, without flipping the coin again. The sleeping drug induces a mild amnesia, so that she cannot remember any previous awakenings during the course of the experiment (if any). During the experiment, she has no access to anything that would give a clue as to the day of the week. However, she knows all the details of the experiment.

Each interview consists of one question, “What is your credence now for the proposition that our coin landed heads?” (Source)

Argument for 1/2: "the coin is known to be fair and it appears as if awakening does not give relevant new information to somebody who knew all along that she would at some point be awakened."

Argument for 1/3: "if there were a long series of Sleeping Beauty experiments then on average one third of the awakenings would be Heads-awakenings. One might therefore think that on any particular awakening, Beauty should believe with a credence of 1/3 that the coin landed heads in that trial." (Source)
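The long-run frequency claim in the Thirder argument is easy to check with a quick simulation. This is only a sketch: the function name, the trial count and the seed are my own choices, and it shows only that about one third of awakenings are Heads-awakenings, not that this frequency is the right credence for Beauty to hold.

```python
import random

def heads_awakening_fraction(trials: int, seed: int = 0) -> float:
    """Fraction of all awakenings that happen in Heads runs of the experiment."""
    rng = random.Random(seed)
    heads_awakenings = 0
    total_awakenings = 0
    for _ in range(trials):
        if rng.random() < 0.5:
            # Heads: Beauty is awakened once (Monday).
            heads_awakenings += 1
            total_awakenings += 1
        else:
            # Tails: Beauty is awakened twice (Monday and Tuesday).
            total_awakenings += 2
    return heads_awakenings / total_awakenings

fraction = heads_awakening_fraction(100_000)
print(f"{fraction:.3f}")  # should land close to 1/3
```

Note that the fraction of *experiments* that come up Heads is still about 1/2 in the same simulation; the dispute is over which of the two frequencies Beauty's credence should track.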

Can we reconcile these positions with Bayes's rule? Clearly, the prior probability of Heads is 1/2. Therefore, the Thirder must posit that Beauty updates her probability upon awakening because she thereby observes something that is twice as likely to happen in the Tails-timeline as in the Heads-timeline. By the same token, the Halver must say that if Beauty asks the date and is told that it's Monday, she must now believe that the probability of Heads is 2/3. Does this make sense?
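To make the two updates concrete, here is a small sketch that assigns a credence to each possible awakening and then conditions on "it is Monday". The Thirder table spreads credence equally over the three awakenings. The Halver table is an assumption on my part: it keeps 1/2 on Heads and splits the Tails half equally between Monday and Tuesday.

```python
from fractions import Fraction

# Credence assigned to each possible awakening, keyed by (coin, day).
THIRDER = {
    ("H", "Mon"): Fraction(1, 3),
    ("T", "Mon"): Fraction(1, 3),
    ("T", "Tue"): Fraction(1, 3),
}
# Assumption: the Halver splits the Tails half equally between the two days.
HALVER = {
    ("H", "Mon"): Fraction(1, 2),
    ("T", "Mon"): Fraction(1, 4),
    ("T", "Tue"): Fraction(1, 4),
}

def credence_in_heads(credences, day=None):
    """P(Heads), optionally conditioned on learning which day it is."""
    kept = {k: v for k, v in credences.items() if day is None or k[1] == day}
    total = sum(kept.values())
    heads = sum(v for (coin, _), v in kept.items() if coin == "H")
    return heads / total

for name, credences in [("Thirder", THIRDER), ("Halver", HALVER)]:
    print(name, credence_in_heads(credences), credence_in_heads(credences, day="Mon"))
# Thirder 1/3 1/2
# Halver 1/2 2/3
```

So the Thirder ends up at 1/2 once told it is Monday, while the Halver ends up at 2/3, which is the odd-looking consequence the question above is asking about.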

Doomsday Argument

Anthropic reasoning seemingly forces us to conclude that humanity will die out soon.

One might at first expect the human race to survive, no doubt in evolutionary much modified form, for millions or even billions of years, perhaps just on Earth but, more plausibly, in huge colonies scattered through the galaxy and maybe even through many galaxies. Contemplating the entire history of the race—future as well as past history—I should in that case see myself as a very unusually early human. I might well be among the first 0.00001 per cent to live their lives. But what if the race is instead about to die out? I am then a fairly typical human. Recent population growth has been so rapid that, of all human lives lived so far, anything up to about 30 per cent . . . are lives which are being lived at this very moment. Now, whenever lacking evidence to the contrary one should prefer to think of one’s own position as fairly typical rather than highly untypical. To promote the reasonable aim of making it quite ordinary that I exist where I do in human history, let me therefore assume that the human race will rapidly die out. (Leslie 1990), pp. 65f. (Source)

Do you accept this argument?

Self-sampling assumption (SSA), self-indication assumption (SIA)

SSA: One should reason as if one were a random sample from the set of all observers in one’s reference class.

SIA: Given the fact that you exist, you should (other things equal) favor hypotheses according to which many observers exist over hypotheses on which few observers exist.

These are not necessarily incompatible.

Under SIA, Beauty should answer "1/3" because the Tails-timeline contains twice as many observer-moments as the Heads-timeline, so Tails is twice as likely as Heads given the observation "I exist".

SIA also defuses the Doomsday Argument: given "I exist", a high-population timeline is more likely than a low-population timeline in proportion to the population ratio, which exactly cancels the update from observed earliness.
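The cancellation can be checked with a toy calculation. Everything numeric here is made up for illustration: two hypotheses with equal priors, 200 billion total humans under "soon" and 200 trillion under "late", and a birth rank of 100 billion. The SSA likelihood of having any particular birth rank in a world of n humans is taken to be 1/n, and SIA multiplies each world's prior by n.

```python
from fractions import Fraction

def doomsday_posterior(prior, totals, birth_rank, use_sia):
    """Posterior over hypotheses about the total number of humans ever to live.

    prior:  hypothesis -> prior probability
    totals: hypothesis -> total number of humans under that hypothesis
    """
    weights = {}
    for h, p in prior.items():
        n = totals[h]
        if birth_rank > n:
            weights[h] = Fraction(0)  # hypothesis ruled out by my birth rank
            continue
        likelihood = Fraction(1, n)      # SSA: my rank is uniform over 1..n
        sia_boost = n if use_sia else 1  # SIA: weight each world by its population
        weights[h] = p * sia_boost * likelihood
    norm = sum(weights.values())
    return {h: w / norm for h, w in weights.items()}

# Illustrative numbers only (not taken from Leslie or Bostrom).
prior = {"soon": Fraction(1, 2), "late": Fraction(1, 2)}
totals = {"soon": 200 * 10**9, "late": 200 * 10**12}
rank = 100 * 10**9

ssa_only = doomsday_posterior(prior, totals, rank, use_sia=False)
with_sia = doomsday_posterior(prior, totals, rank, use_sia=True)
print(ssa_only["soon"])  # 1000/1001
print(with_sia["soon"])  # 1/2
```

With SSA alone, the posterior shifts heavily toward "soon"; with the SIA factor included, the n and the 1/n cancel and the posterior equals the prior.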

But SIA has other problems:

The Presumptuous Philosopher

It is the year 2100 and physicists have narrowed down the search for a theory of everything to only two remaining plausible candidate theories, T1 and T2 (using considerations from super-duper symmetry). According to T1 the world is very, very big but finite and there are a total of a trillion trillion observers in the cosmos. According to T2, the world is very, very, very big but finite and there are a trillion trillion trillion observers. The super-duper symmetry considerations are indifferent between these two theories. Physicists are preparing a simple experiment that will falsify one of the theories. Enter the presumptuous philosopher: “Hey guys, it is completely unnecessary for you to do the experiment, because I can already show to you that T2 is about a trillion times more likely to be true than T1! (whereupon the philosopher runs the Incubator thought experiment and explains Model 3).”

[...]

Finally, consider the limiting case where we are comparing two hypotheses, one saying that the universe is finite (and contains finitely many observers), the other saying that the universe is infinite (and contains infinitely many observers). SIA would have you assign probability one to the latter hypothesis, assuming both hypotheses had a finite prior probability. But surely, whether the universe is finite or infinite is an open scientific question, not something that you can determine with certainty simply by leaning back in your armchair and registering the fact that you exist! (Source)
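The philosopher's "trillion times more likely" figure falls straight out of the SIA weighting. A minimal sketch, assuming equal priors for T1 and T2 (which is how I read "indifferent between these two theories"); the function name is mine:

```python
from fractions import Fraction

def sia_posterior(prior, observers):
    """Reweight each hypothesis by the number of observers it contains."""
    weights = {h: prior[h] * observers[h] for h in prior}
    norm = sum(weights.values())
    return {h: w / norm for h, w in weights.items()}

TRILLION = 10**12
prior = {"T1": Fraction(1, 2), "T2": Fraction(1, 2)}
observers = {"T1": TRILLION**2, "T2": TRILLION**3}

posterior = sia_posterior(prior, observers)
print(posterior["T2"] / posterior["T1"])  # 1000000000000
```

The infinite case is the limit of the same calculation: as the observer count of one hypothesis grows without bound, its posterior tends to 1 regardless of the (nonzero) priors.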

What is a "reference class"? Problems arising

Sleeping Beauty can use the most straightforward, minimal reference class (what Bostrom calls ℜ⁰), i.e. "all observer-moments subjectively indistinguishable from this one", because of the stipulation that her memory is erased after each observation. But Doomsday uses the more general class "all humans". Is this choice arbitrary? Can our probabilistic reasoning be affected by the choice of reference class?

Incorporated by reference: the section entitled "The reference class problem".

Importantly, in the first example, the black-bearded man in Room 1 knows that he is not the white-bearded man in Room 2, but the inclusion of the white-bearded man in the reference class affects the black-bearded man's probability estimate. Now suppose that the being in Room 2 (if it exists) is a chimpanzee. Then, even though the black-bearded man knows that he is not a chimpanzee, his estimate will be affected by whether he includes the chimpanzee in his reference class. Is it weird that such a purely philosophical (seemingly arbitrary) position has a real effect?

Apply this to the Doomsday Argument: suppose we discover that the galaxy is populated by trillions of Von Neumann probes that have been self-replicating for millions of years, but it's debatable whether the probes count as "conscious beings". Now, certainly, the discovery of the probes will affect our expectations for our own species' future. But should the question of whether the probes are conscious also have an effect? It seems weird that it would.

Does anthropic reasoning violate physicalism?

Suppose you are given complete information about a scenario, including about all the minds that exist. But it still feels like there's an open question: "But which one of those minds am I?" This defeats physicalism (i.e. the claim that the only facts about the world are physical facts), suggesting that we need to include an element of "irreducible subjectivity" in our metaphysics.

You might object that the question "Which one of those minds am I?" is not a real question after all, that it's epiphenomenal, illusory, etc. But the objection is countered by anthropic reasoning whereby different observers come to different conclusions about the probability of something even though their information differs only in the answer to the "indexical" question.

Bostrom gives an example of such a scenario. Incorporated by reference:

- the section "Observer-relative chances: another go";
- and also the next section, "Discussion: indexical facts - no conflict with physicalism" where Bostrom answers the charge of extraphysicalism.

Also incorporated: "Where physics meets experience".

Bostrom's Observation Equation

Because of the Presumptuous Philosopher problem, Bostrom rejects SIA and advocates a modification of SSA, the strong self-sampling assumption (SSSA), which is just SSA with "observer" replaced by "observer-moment". (The reasoning below is largely unaffected by this distinction, so I'll use the general term "being" throughout.)

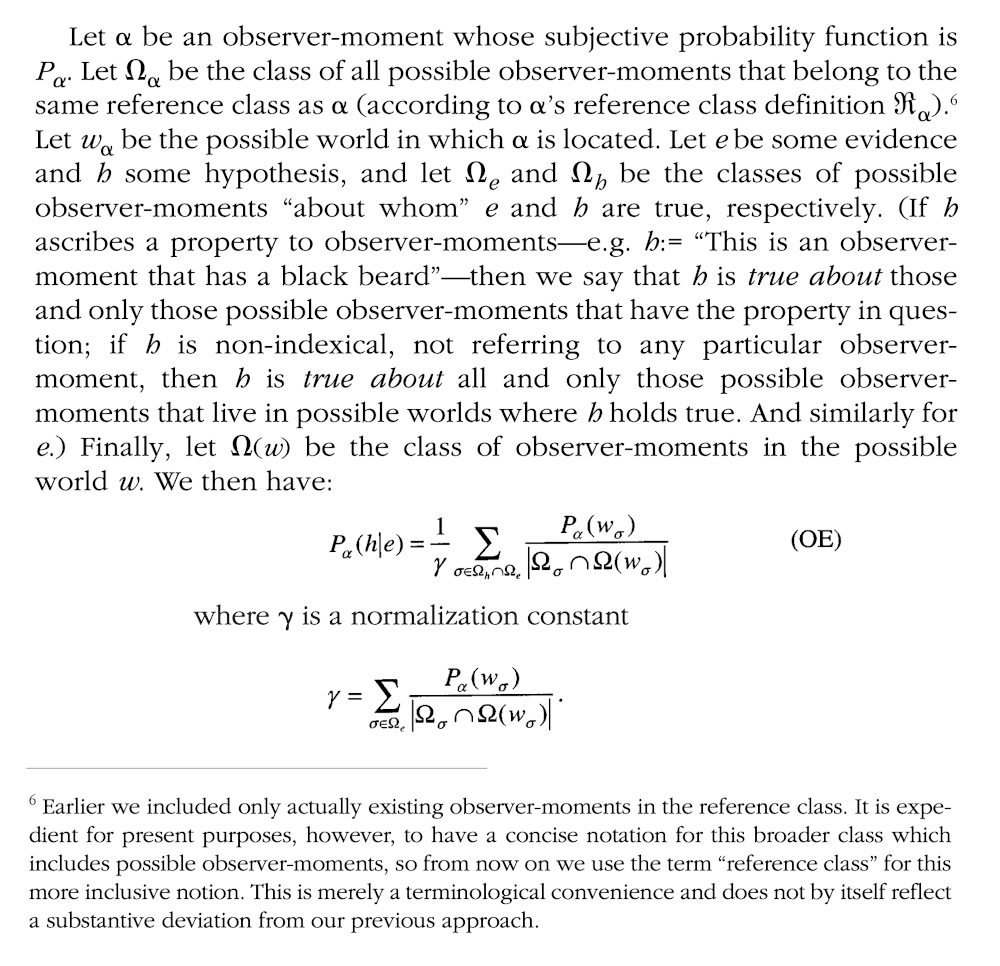

SSSA, combined with rejection of SIA, leads him to develop the Observation Equation (OE):

Bostrom's notation is abstruse, but (if I understand it correctly) the idea is simple once explained intuitively. In short, what the equation says is:

1. Start with the a priori probability of each possible world (and don't adjust it based on population, since we reject SIA).
2. For each possible world, allocate that world's probability mass equally (since we accept SSA) among all in-your-reference-class beings in that world (if there are any).
3. Consider the set of all such beings (across all possible worlds) about whom the evidence e is true. The total weight of all these beings is our denominator.
4. Consider the subset of those beings about whom the hypothesis h is also true. Their total weight comprises our numerator.
5. Numerator÷Denominator yields the probability of h given e.
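The steps above can be sketched in a few lines of code. This is my own reading of the procedure, not Bostrom's formalism: the function name, the representation of a world as a `(prior, beings)` pair, and the toy "red room" worlds are all invented for illustration.

```python
from fractions import Fraction

def observation_equation(worlds, matches_evidence, matches_hypothesis):
    """P(h | e) following the five steps described in the text.

    worlds: list of (prior, beings) pairs, where beings is the list of
            in-reference-class beings in that possible world.
    """
    numerator = Fraction(0)
    denominator = Fraction(0)
    for prior, beings in worlds:
        if not beings:
            continue  # no in-reference-class beings: nothing to allocate to
        weight = Fraction(prior) / len(beings)  # equal share per being
        for being in beings:
            if matches_evidence(being):
                denominator += weight
                if matches_hypothesis(being):
                    numerator += weight
    # Raises ZeroDivisionError if no being anywhere matches the evidence.
    return numerator / denominator

# Toy example: beings are (world_name, sees_red) pairs.
small = [("small", i < 1) for i in range(10)]      # 1 of 10 sees red
big = [("big", i < 100) for i in range(1000)]      # 100 of 1000 see red
worlds = [(Fraction(1, 2), small), (Fraction(1, 2), big)]

p_small = observation_equation(
    worlds,
    matches_evidence=lambda b: b[1],             # e: "I see red"
    matches_hypothesis=lambda b: b[0] == "small",  # h: "I am in the small world"
)
print(p_small)  # 1/2
```

In this toy case the big world has 100 times as many beings, but the same proportion of them match the evidence, so the posterior stays at the prior of 1/2.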

This rule has the following properties:

- A possible world doesn't get more weight just because it contains a lot of beings, since the weight of each being in a high-population possible world is discounted proportionally.
- However, we do (effectively) assign more weight to worlds where a high proportion of the beings match our evidence, since that world's total contribution to the denominator will be greater.

Furthermore, Bostrom uses this equation to argue that we can't always use the reference class ℜ⁰. This is because we'd run into "Boltzmann brain" problems - if my reference class is so restricted, it'll contain lots of Boltzmann brains but very few "normal" beings. (But perhaps this is an artifact of the equation weighting all beings equally within each world. You might instead say that Boltzmann brains should receive a lower weight due to having a more complex "address" in the multiverse. This is the position of the "UDASSA" theory expounded variously on LW.)

Observation Equation applied to Sleeping Beauty

If we use ℜ⁰, then the answer is 1/2.

But if we broaden the reference class to include the rest of humanity, then the answer tends to 1/3 as the population increases! We derive this as follows:

Let's say the population of observer-moments is 1000001 in the Heads-timeline (1 million outsiders, plus Monday Beauty), and 1000002 in the Tails-timeline (1 million outsiders, plus Monday and Tuesday Beauty). Then each being in H-timeline gets a weight of 0.5/1000001, and each in T-timeline gets 0.5/1000002. But in step 3 we restrict the set under consideration to those beings that match the observed evidence, i.e. "I am undergoing the experiment". There is 1 such being in H-timeline and 2 in T-timeline, with weights of 0.5/1000001, 0.5/1000002, and 0.5/1000002 respectively. Therefore the probability that we're in H-timeline is 0.5/1000001 divided by 0.5/1000001+0.5/1000002+0.5/1000002, which is 0.3333335555....
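The same arithmetic can be run for any number of outsiders, which makes the drift from 1/2 toward 1/3 visible. A small sketch using exact fractions; the function name is mine and the population sizes other than one million are arbitrary.

```python
from fractions import Fraction

def heads_credence(outsiders: int) -> Fraction:
    """P(Heads | "I am undergoing the experiment") with `outsiders` extra beings per world."""
    heads_weight = Fraction(1, 2) / (outsiders + 1)  # per-being weight, Heads-timeline
    tails_weight = Fraction(1, 2) / (outsiders + 2)  # per-being weight, Tails-timeline
    numerator = 1 * heads_weight                       # Monday Beauty (Heads)
    denominator = 1 * heads_weight + 2 * tails_weight  # plus Monday and Tuesday Beauty (Tails)
    return numerator / denominator

for n in (0, 10, 1_000_000):
    p = heads_credence(n)
    print(n, p, float(p))
```

With zero outsiders this reproduces the ℜ⁰ answer of 1/2, and with one million outsiders it gives 1000002/3000004, matching the figure above. Simplifying the expression by hand gives (N+2)/(3N+4) for N outsiders, which tends to 1/3 as N grows.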

This is a remarkable result - has Bostrom managed to reconcile the two Sleeping Beauty viewpoints? On this view, the confusion arises when we take the reasoning that applies to a small scenario (like Sleeping Beauty) and generalize it to apply to the whole universe (like Doomsday).
