Published on July 6, 2025 1:19 AM GMT

Epistemic status: informed speculation using only publicly available information

OpenAI is about to spend $500 billion on infrastructure they might not need: if researchers aren't ready with experiments, or if demand for intelligence doesn't grow, every unused GPU-hour vanishes forever. Yet there's no futures curve for compute, no market price for intelligence, no way to hedge any of it.

We're building the intelligence economy on vibes when we could be building it on market prices.

What if compute and AI intelligence could be traded like commodities? Futures markets could transform risky AI spending into predictable investment, while serving as a real-time prediction market for AI progress itself.

Table of Contents

- The New Utilities
- The Perishable Fuel Problem
- Why Spot Markets Aren't Enough
- The Missing Piece 1: Compute Futures
- The Missing Piece 2: Intelligence Futures
- Why Don't They Exist Yet?
- Reason for Optimism
- Why This Is Awesome
- Why NVDA is not a Good Proxy
- More Wild Implications

The New Utilities

Most companies aren't building their own AI models; they're buying intelligence-as-a-service from OpenAI, Anthropic, or Google. Need to analyze a contract? Send it to GPT-4. Want to generate marketing copy? Claude's got you. Need to process customer support tickets? There's an API for that.

In this world, AI companies are becoming the new utility companies. They take compute (raw material) as input, generate intelligence (electricity) as output, and pipe it to businesses through APIs (electrical grid). But unlike traditional utilities, they face a unique challenge: their raw material, compute, is both massively expensive and completely perishable.

We're building the intelligence economy on vibes when we could be building it on prices.

The Perishable Fuel Problem

Here's the thing about compute: you use it or lose it.

If you're a power company, life is good. You can sign long-term contracts for natural gas delivery. You can stockpile coal. You can continuously hedge your fuel costs in fuel futures markets and your output in the power futures market. Your fuel sits there, waiting patiently to be burned.

But if you're OpenAI? You can't stockpile compute. Every unused GPU-hour vanishes into the ether, forever. Tuesday's spare compute can't help with Wednesday's training run. It's just... gone.

This is a real problem when you're considering spending $500 billion to build data centers. What if your researchers don't have enough experiments ready when all those GPUs come online? What if you built too much? In normal markets, you'd just sell the excess. But you can't exactly put unused compute in a warehouse and auction it off later.

So OpenAI faces a dilemma: build conservatively and risk not having enough compute when everyone wants it, or build aggressively and watch expensive GPUs sit idle. If I had to guess, that $500 billion is a conservative number: if OpenAI could lock in some guaranteed return on all that compute, they'd probably invest even more in data centers.

Why Spot Markets Aren't Enough

"What about cloud computing?" you might ask.

It's true that you can already buy and sell compute on demand. There's the traditional AWS and Google Cloud, and there are many newer services targeting GPU compute specifically: Together AI offers serverless APIs, Prime Intellect is building a compute marketplace, RunPod offers per-second billing.

But big training runs require thousands of GPUs for months. Most spot markets aren't designed for this type of demand: supply is often sporadic, and large blocks are hard to get without advance reservation. Even reservation-based systems fall short, because research delays can push training runs back by weeks or months, and reservations don't offer enough flexibility to absorb those shifts.

This is exactly the type of problem futures markets are designed to solve. A farmer needs to know they can sell their wheat before they plant it. A power plant needs some certainty about electricity demand and natural gas supply before building new generators. And an AI company would want some certainty about both the supply of compute and the demand for intelligence before investing billions in hiring and infrastructure.

The Missing Piece 1: Compute Futures

Imagine a market where OpenAI can buy or sell a contract today for "100 million H100-hours deliverable in March 2026." Suddenly, they can plan. They can hedge. They can justify massive infrastructure investments because they've locked in, or at least hedged, their fuel costs. If their own research compute demand changes, they can always sell or buy back the futures.
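The hedging arithmetic fits in a few lines of Python. This is a minimal sketch, assuming a cash-settled contract that settles exactly at the delivery-month spot price (no basis risk, fees, or margin calls); `ComputeHedge` and the $2.00 per H100-hour price are hypothetical, not a real contract.

```python
from dataclasses import dataclass


@dataclass
class ComputeHedge:
    """Hypothetical long futures hedge for a buyer of compute.

    Assumes a cash-settled contract that settles at the delivery-month
    spot price, ignoring fees, margin calls, and basis risk.
    """

    gpu_hours: float      # contract size, e.g. H100-hours
    futures_price: float  # $ per GPU-hour, agreed today

    def futures_pnl(self, settlement_price: float) -> float:
        # A long position gains when the settlement (or exit) price
        # ends up above the price agreed today, and loses otherwise.
        return (settlement_price - self.futures_price) * self.gpu_hours

    def net_cost(self, spot_price: float) -> float:
        # Buy the compute at spot when it is needed, then offset the bill
        # with the futures gain (or add the futures loss).
        spot_cost = spot_price * self.gpu_hours
        return spot_cost - self.futures_pnl(spot_price)


hedge = ComputeHedge(gpu_hours=100_000_000, futures_price=2.00)
for spot in (1.00, 2.00, 4.00):
    print(f"spot ${spot:.2f}/hr -> net cost ${hedge.net_cost(spot):,.0f}")
```

Whatever the spot price turns out to be, the net cost stays at the $200 million locked in today. Selling the position back early works the same way: `futures_pnl` evaluated at the exit price gives the realized gain or loss.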

But here's where it gets even more interesting. These futures prices would reveal something useful for the rest of the world: the market's collective view about AI progress.

Think about it:

- If AI companies are making breakthroughs (e.g. if intelligence demand is about to explode), they'll be buying every compute future they can get. Prices soar.
- If progress stalls (e.g. if the intelligence market isn't growing as expected), they'll be selling their excess compute capacity forward. Prices crash.

The compute futures curve becomes a real-time prediction market for the AI intelligence economy.
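One simple way to read such a curve is by its slope. The sketch below assumes a hypothetical list of monthly compute futures prices (front month first) and treats each price as a rough proxy for the expected spot price in that month; real futures prices would also carry a risk premium, which this ignores. The function names and the 1% "flat" tolerance are illustrative choices.

```python
def curve_changes(prices: list[float]) -> list[float]:
    """Percent change between consecutive delivery months on a futures curve."""
    return [
        (later / earlier - 1.0) * 100.0
        for earlier, later in zip(prices, prices[1:])
    ]


def curve_shape(prices: list[float], tolerance_pct: float = 1.0) -> str:
    """Classify the curve by its change from the first to the last month.

    An upward-sloping curve suggests the market expects compute to get
    scarcer; a downward-sloping one suggests it expects slack.
    """
    total_change = (prices[-1] / prices[0] - 1.0) * 100.0
    if total_change > tolerance_pct:
        return "rising"
    if total_change < -tolerance_pct:
        return "falling"
    return "flat"


# Hypothetical $/H100-hour prices for four consecutive delivery months
curve = [2.00, 2.20, 2.50, 3.00]
print(curve_changes(curve))
print(curve_shape(curve))  # rising
```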

The Missing Piece 2: Intelligence Futures

But compute futures only solve half the problem. What if OpenAI spends $500 billion on GPUs and then nobody wants to buy intelligence? What if the market price of a ChatGPT query goes to zero? You can't just hedge one side of the P&L.

Imagine intelligence futures: contracts to deliver "1 billion GPT-5-equivalent tokens" or "100 hours of expert-level legal analysis" in 2026. Now we're talking about a complete hedging strategy. AI labs can sell these futures to remove revenue uncertainty.
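The "complete hedging strategy" can be sketched by combining both legs: a lab that sells intelligence futures and buys compute futures locks in its margin no matter where either spot price lands. This is a minimal illustration, assuming both contracts settle at the delivery-time spot price and that the lab needs exactly `gpu_hours` of compute to produce `tokens_m` million tokens; any mismatch would leave residual exposure. All names and numbers are hypothetical.

```python
def hedged_margin(
    tokens_m: float,         # million tokens sold forward
    intel_futures: float,    # $ per million tokens, sold today
    gpu_hours: float,        # GPU-hours bought forward
    compute_futures: float,  # $ per GPU-hour, bought today
    intel_spot: float,       # $ per million tokens at delivery
    compute_spot: float,     # $ per GPU-hour at delivery
) -> float:
    """Operating margin for a lab short intelligence futures and long
    compute futures, assuming both settle at the delivery-time spot price."""
    # Unhedged business: sell tokens and buy compute at whatever spot turns out to be
    revenue = intel_spot * tokens_m
    compute_bill = compute_spot * gpu_hours
    # Short intelligence futures pay off when the token price falls
    short_intel_pnl = (intel_futures - intel_spot) * tokens_m
    # Long compute futures pay off when the compute price rises
    long_compute_pnl = (compute_spot - compute_futures) * gpu_hours
    return revenue - compute_bill + short_intel_pnl + long_compute_pnl


# Same locked-in margin whether token prices crash or compute prices spike
print(hedged_margin(1_000, 10.0, 2_000, 2.0, intel_spot=1.0, compute_spot=5.0))   # 6000.0
print(hedged_margin(1_000, 10.0, 2_000, 2.0, intel_spot=20.0, compute_spot=1.0))  # 6000.0
```

The spot terms cancel, leaving `intel_futures * tokens_m - compute_futures * gpu_hours`, which is known on the day the trades are made.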

Similar to compute futures, intelligence futures can also reveal something really interesting. If the price for 1 billion GPT-5-equivalent tokens soars, that means people have found new ways to use intelligence economically. And if the price crashes, that means AI applications have been limited to interesting demos rather than transformative business value.

In short, a liquid intelligence futures market allows:

For AI companies (sellers):

- Lock in revenue before building infrastructure
- Justify massive capex to investors
- Smooth out demand volatility

For enterprises (buyers):

- Budget AI costs years in advance
- Guarantee access to cutting-edge models
- Hedge against intelligence price spikes

Why Don't They Exist Yet?

There's a reason these markets don't exist yet. Neither compute nor intelligence is perfectly fungible:

Compute fungibility challenges:

- Hardware compatibility: Your model might be optimized for A100s, but the futures contract delivers H100s
- Data locality: Your dataset lives in AWS, but the compute is in Google Cloud

Intelligence fungibility challenges:

- Quality variations: Not all "GPT-4 equivalent" outputs are truly equivalent
- Task specificity: Legal analysis intelligence might not substitute for coding intelligence

These are hard and important problems. But they seem solvable to me. Over time, we might see standardized compute units with conversion factors (like the quality differentials between sweet and sour crude in oil futures). With more hardware standardization and algorithmic improvements, compute could become more fungible across data centers. As for data locality, one could imagine better tools for syncing data storage across clusters; it's also possible that most future compute will go to live inference and sampling, which would make the data locality issue moot.
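Conversion factors already work this way in Treasury bond futures, where a seller may deliver any bond from an eligible basket, adjusted by a published conversion factor. A compute version might look like the sketch below. The grade list and factor values are hypothetical placeholders chosen for round arithmetic, not benchmarks of real hardware.

```python
# Hypothetical conversion factors: H100-equivalent hours per GPU-hour of each
# deliverable grade. Placeholder values for illustration, not benchmarks.
CONVERSION_FACTORS = {"H100": 1.0, "A100": 0.5, "B200": 2.0}


def to_contract_units(gpu_type: str, gpu_hours: float) -> float:
    """Convert raw GPU-hours of one grade into H100-equivalent contract units."""
    if gpu_type not in CONVERSION_FACTORS:
        raise ValueError(f"{gpu_type!r} is not a deliverable grade")
    return CONVERSION_FACTORS[gpu_type] * gpu_hours


def hours_needed(gpu_type: str, contract_units: float) -> float:
    """Raw GPU-hours of gpu_type a seller must deliver to fill the contract."""
    return contract_units / to_contract_units(gpu_type, 1.0)


def invoice_amount(futures_price: float, gpu_type: str, gpu_hours: float) -> float:
    """What the buyer pays: quoted price per H100-hour times delivered units."""
    return futures_price * to_contract_units(gpu_type, gpu_hours)


# A seller filling a 1,000 H100-hour contract with A100s must deliver more hours
print(hours_needed("A100", 1_000))         # 2000.0
print(invoice_amount(2.0, "A100", 2_000))  # 2000.0
```

Quoting every contract in one reference unit keeps a single liquid price, while the factors absorb hardware differences at delivery.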

I'm less certain what the standardization of "intelligence" might look like. We might need to wait and see how AI development plays out over the next year or so. But ultimately I don't see this being an insurmountable blocker. If we have figured out how to solve the fungibility problem for "pork belly" futures, we will be able to solve the intelligence fungibility problem.

Reason for Optimism

It's worth noting that a futures market with many of the properties mentioned above already exists, and it works extremely well: electricity futures.

Electricity shares compute's key properties: it's highly perishable and can't be stored economically. Yet electricity futures markets are among the most sophisticated and liquid commodity markets in the world.

They've solved the locality problem through transmission networks and standardized delivery points. They handle the perishability problem through time-specific contracts that match the rhythms of supply and demand.

These markets enable utility companies to hedge fuel costs, power plants to secure revenue streams, and large consumers to lock in electricity prices years in advance. The price discovery mechanism works so well that electricity futures often predict demand shifts and supply constraints months before they materialize, allowing the entire industry to plan and invest accordingly.
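The time-specific contracts that electricity markets use (for example, separate peak and off-peak blocks) could carry over to compute. Below is a minimal sketch, assuming a hypothetical 24-hour curve of hourly compute forward prices and an arbitrary 08:00-20:00 block; real block definitions would be set by whatever exchange listed the contracts. For a block delivering the same amount every hour, its fair price is the average of the hourly prices it covers.

```python
from statistics import fmean


def _check_block(hourly_prices: list[float], start: int, end: int) -> None:
    """Validate a 24-hour price curve and a block covering hours [start, end)."""
    if len(hourly_prices) != 24:
        raise ValueError("expected one price per hour of the day")
    if not 0 <= start < end <= 24:
        raise ValueError("block must satisfy 0 <= start < end <= 24")


def block_price(hourly_prices: list[float], start: int, end: int) -> float:
    """Average price for a flat block delivering every hour in [start, end)."""
    _check_block(hourly_prices, start, end)
    return fmean(hourly_prices[start:end])


def off_block_price(hourly_prices: list[float], start: int, end: int) -> float:
    """Average price for the hours outside the block (its complement)."""
    _check_block(hourly_prices, start, end)
    outside = hourly_prices[:start] + hourly_prices[end:]
    if not outside:
        raise ValueError("block covers the whole day")
    return fmean(outside)


# Made-up curve: compute is pricier 08:00-20:00, cheaper overnight
prices = [1.0] * 8 + [3.0] * 12 + [1.0] * 4
print(block_price(prices, 8, 20))      # 3.0
print(off_block_price(prices, 8, 20))  # 1.0
```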

Why This Is Awesome

With both compute and intelligence futures, we get a complete market ecosystem where AI companies can hedge costs and revenue, enterprises can budget with certainty, and compute providers can plan infrastructure based on forward demand.

But the real magic happens at the system level. Just as oil and electricity futures enabled the industrial age, compute and intelligence futures will enable the intelligence age.

The implications ripple outward: companies can hedge their entire AI strategy, markets provide real-time signals about AI progress, and capital flows efficiently to where it's needed most. We can finally finance massive AI infrastructure investments, enable smaller players to compete with tech giants, and separate real AI progress from hype.

Because unlike pundits and thought leaders, futures markets have to put their money where their mouth is. The day intelligence futures hit $10,000/million-tokens is the day when we have finally found Product Market Fit for AGI [1].

Why NVDA is not a Good Proxy

You might be thinking: "Can't we just use NVIDIA stock as a proxy for all this?" It's true that NVDA has become the de facto bet on AI progress. When AI hype builds, NVDA soars. When progress stalls, it crashes. But it's a deeply flawed proxy for several reasons:

For hedging compute costs:

- NVDA stock price ≠ GPU rental price. The stock reflects expectations about NVIDIA's pricing power and market share, not the spot price of compute.
- You can't deliver NVDA shares to your data center when you need GPUs.
- The correlation breaks down exactly when you need it most (e.g., if AMD or a startup disrupts the market).

For price discovery:

- NVDA conflates hardware manufacturing with compute availability.
- It tells you nothing about utilization rates or regional compute supply.
- It can't distinguish between "AI is progressing rapidly" and "NVIDIA has a moat."

For intelligence markets:

- NVDA tells you nothing about the price of a GPT query.
- You can't use it to hedge the spread between compute costs and intelligence revenue.

Using NVDA here is like trying to hedge airline fuel costs by buying Boeing stock. There's some correlation, but you're exposed to a dozen other factors you don't care about.

More Wild Implications

Epistemic status: progressively more trolling

The Spread Trade

The spread between compute futures and intelligence futures becomes the market's view on AI efficiency.

If intelligence futures are expensive relative to compute futures, that means the market believes:

- Demand for intelligence is outstripping supply
- Competition among AI providers is limited
- AI models will not be commoditized: one or two labs own the secret sauce to produce intelligence and can capture its economic value

If the spread narrows, it signals:

- Supply of intelligence is outstripping demand
- AI services are commoditized
- Increased competition between AI labs

Traders could arbitrage this spread, thereby providing price discovery on the productivity of the AI industry.
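This is close in spirit to the spark spread in power markets (the power price minus the cost of the gas needed to generate it) or the crack spread in oil refining. A minimal sketch, assuming a hypothetical throughput parameter (million tokens produced per GPU-hour) that plays the role of a power plant's heat rate; the prices are made up.

```python
def intelligence_spread(
    intel_price: float,        # $ per million tokens (intelligence futures)
    compute_price: float,      # $ per GPU-hour (compute futures)
    mtok_per_gpu_hour: float,  # hypothetical throughput: million tokens per GPU-hour
) -> float:
    """Gross margin per million tokens implied by the two futures prices."""
    if mtok_per_gpu_hour <= 0:
        raise ValueError("throughput must be positive")
    compute_cost_per_mtok = compute_price / mtok_per_gpu_hour
    return intel_price - compute_cost_per_mtok


# Made-up prices: $10 per million tokens, $2 per GPU-hour, 0.5M tokens per GPU-hour
print(intelligence_spread(10.0, 2.0, 0.5))  # 6.0
print(intelligence_spread(4.0, 2.0, 0.5))   # 0.0: the spread has fully collapsed
```

Holding both prices fixed, higher throughput widens the spread, which is why it reads as a gauge of AI efficiency as well as of pricing power.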

The Solar Trade

Based on current trends:

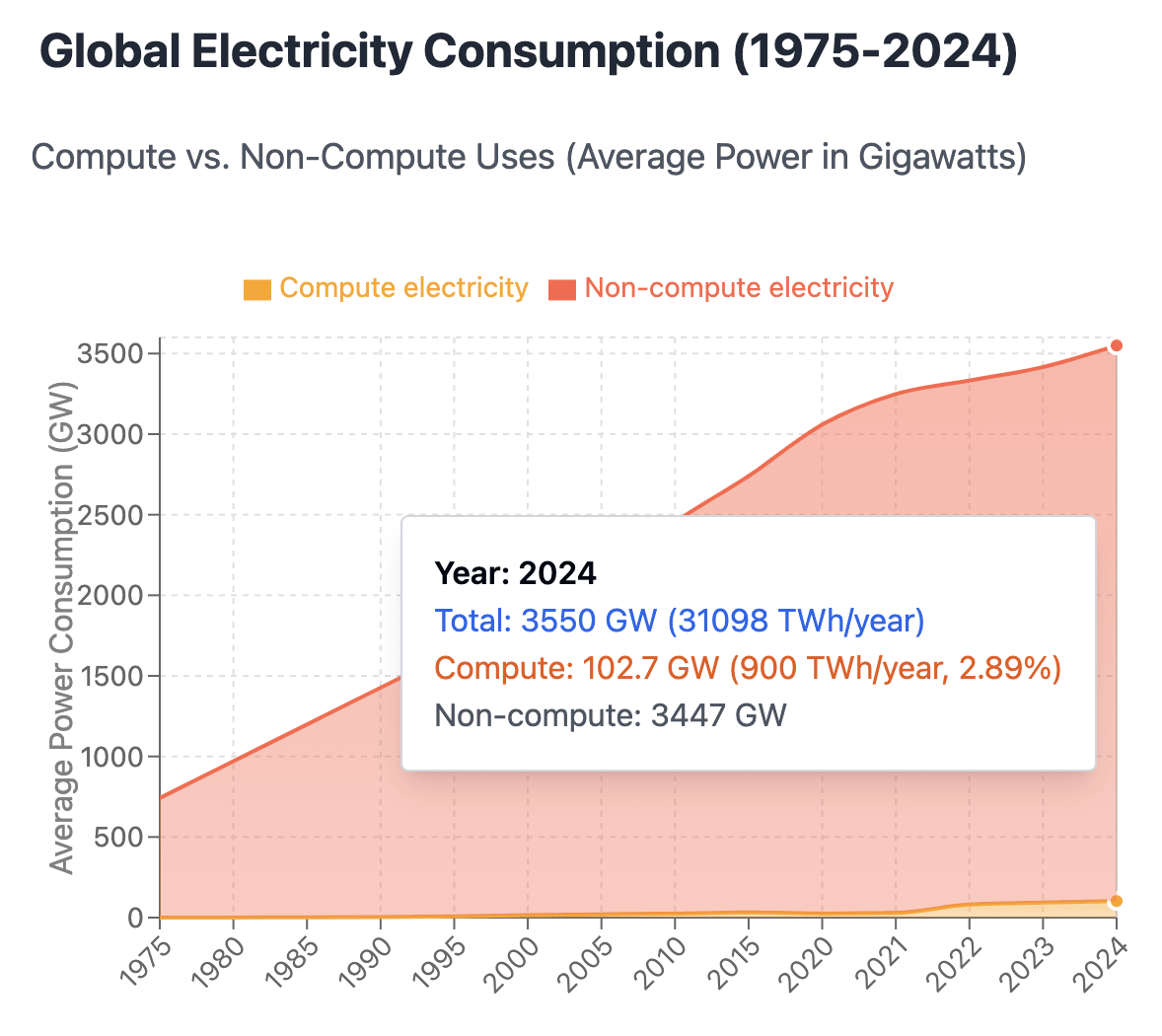

Electricity consumption by compute is growing much faster than overall electricity consumption (Claude Opus4 produced the plot):

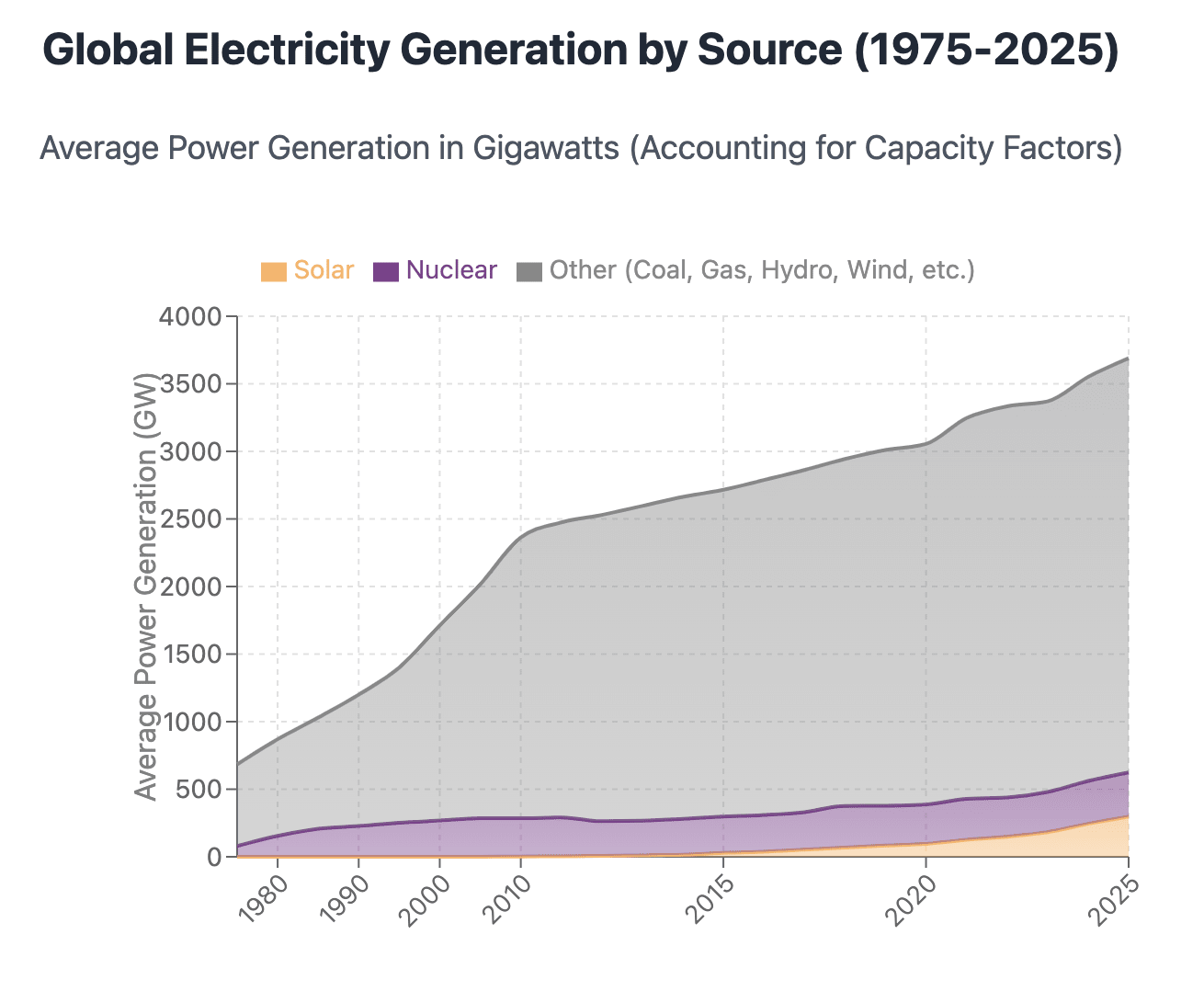

Solar is growing much faster than other energy sources (Claude Opus4 produced the plot):

Extrapolate those trends and we see:

- Most energy consumption will be compute
- Most energy generation will be solar

This would mean more compute, and thus more intelligence, during the daytime. The compute futures market would have to price in daily solar cycles.

It's kind of fun to picture AI agents working during the day and resting at night. Maybe that's the true AGI moment.

[1] I am mostly suggesting that a high token cost implies PMF, not the other way around. Of course, if there is enough competition between AI labs, the token cost will stay low.
