Published on July 4, 2025 4:54 PM GMT

I think a lot about the possibility of huge numbers of AI agents doing AI R&D inside an AI company (as depicted in AI 2027). I think particularly about what will happen if those AIs are scheming: coherently and carefully trying to grab power and take over the AI company, as a prelude to taking over the world. And even more particularly, I think about how we might try to mitigate the insider risk posed by these AIs, taking inspiration from traditional computer security, traditional insider threat prevention techniques, and first-principles thinking about the security opportunities posed by the differences between AIs and humans.

To flesh this out, I’m imagining a situation something like what AI 2027 forecasts for March 2027:

- The compute available to the leading AI company has increased 7x compared to today.
- There are now 200K superhuman coder copies thinking at 30x human speed.
- These AIs are mostly working on ML R&D, doing tasks like:
  - Generating training data, e.g. RL environments.
  - Algorithm development, e.g. finding architecture improvements, implementing neuralese, finding more efficient ways to run iterated distillation and amplification.
  - Performance engineering: finding ways of running inference, training, and other expensive workloads more efficiently.

I want to know what we need to do to ensure that even if those AIs are conspiring against us, they aren’t able to cause catastrophic security failures.[1]

One important question here is how strongly incentivized we are to use the AIs to write security-critical infrastructure code.

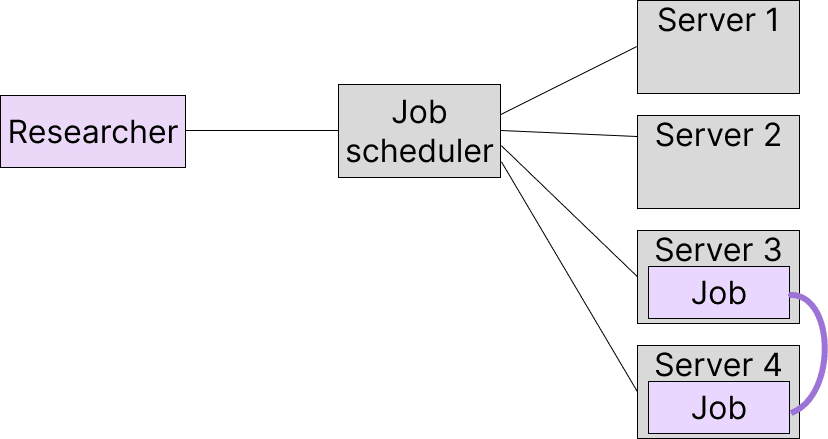

The most optimistic possibility here is that the AIs don’t need to write such code: instead of acting as infrastructure engineers, they act with basically the permissions that ML researchers at Google DeepMind have.[2] Something like this: The AIs act as users of the cluster infrastructure, rather than as developers of that infrastructure. When they want to run jobs, they submit their jobs to a scheduler with a certain set of permissions, and the infrastructure ensures that the jobs can’t affect parts of the system they don’t have permission to.

But the AIs don’t make lots of contributions to that underlying infrastructure (e.g. the job scheduler or the hypervisors controlling jobs on the servers). They aren’t making huge changes to the codebases owned by the datacenter teams, the infrastructure teams, or the security teams.
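To make the optimistic case concrete, here is a minimal Python sketch of the kind of check a job scheduler performs when an AI submits work as a user. Everything in it is hypothetical: the `Job` and `Scheduler` classes, the agent name, and the resource names are invented for illustration, grants are exact-match resource names rather than path prefixes, and a real scheduler would rely on OS, hypervisor, and network controls rather than an in-process check. The point is only that the enforcing code is owned by the infrastructure team, not by the agents submitting jobs.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Job:
    """A hypothetical job request: who submitted it and what it touches."""
    owner: str
    reads: frozenset[str]
    writes: frozenset[str]


@dataclass
class Scheduler:
    # Hypothetical per-agent grants: agent -> (readable resources, writable resources).
    grants: dict[str, tuple[frozenset[str], frozenset[str]]]
    queue: list[Job] = field(default_factory=list)

    def submit(self, job: Job) -> None:
        """Queue the job only if everything it touches is within its owner's grants."""
        if job.owner not in self.grants:
            raise PermissionError(f"unknown agent {job.owner!r}")
        can_read, can_write = self.grants[job.owner]
        # Subset checks: every requested resource must be explicitly granted.
        if not job.reads <= can_read or not job.writes <= can_write:
            raise PermissionError(f"job from {job.owner!r} exceeds its grants")
        self.queue.append(job)


sched = Scheduler(grants={
    "agent-17": (frozenset({"datasets/rl-envs"}), frozenset({"experiments/agent-17"})),
})
# Allowed: reads and writes are inside agent-17's grants.
sched.submit(Job("agent-17", frozenset({"datasets/rl-envs"}), frozenset({"experiments/agent-17"})))
try:
    # Rejected: agent-17 was never granted write access to the scheduler's own code.
    sched.submit(Job("agent-17", frozenset(), frozenset({"infra/scheduler"})))
except PermissionError as err:
    print(err)  # job from 'agent-17' exceeds its grants
```

In the pessimistic case, the agents would be the ones editing `submit` itself, so a check like this would offer no protection against them.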

The more pessimistic possibility is that the AI company needs to have the AIs write a bunch of novel infrastructure code. Under this possibility, the AIs are rewriting your routers, your job scheduler, your OS kernel, your GPU interconnect drivers, and so on. If this is the case, we’re in a much scarier situation, because we have an additional huge and confusing attack surface.

Note that even in the optimistic case where the AIs just have the permissions that ML researchers usually have, and no lower-level permissions at all, the situation seems very dangerous! Most of the threat modeling I’ve done related to AI control is about the risks posed by the “optimistic” situation. (For why I think this is still risky, see footnote[3].) What I’m discussing here is whether there's a big source of risk aside from the ones I normally talk about!

So, how strongly will AI companies be incentivized to do novel infrastructure development during an intelligence explosion? I’m quite unsure. Here are some reasons that we might expect big infrastructure changes, based on conversations with AI infrastructure experts and my own thinking.

Differences between AI and human labor will incentivize massive infra changes

During the intelligence explosion, I expect AI agents to have pretty different properties from human researchers, which might necessitate either developing new infrastructure, or using current infrastructure in ways that degrade security properties.

One particularly important dynamic is: researchers currently make many tradeoffs that reduce performance in return for saving researcher time. For example, they repeatedly rebuild Docker containers, in return for reduced risk of Python environment issues. The advent of automated engineers and researchers will probably mean[4] we want to shift to a workflow where (like the computer users of the past) the users have much less separation and rely on manual coordination, in order to eke out performance. For example, instead of working on separate computers, they might want to work as different users (or the same user!) on the same Linux server. This would make it way harder for us to enforce a bunch of security invariants (especially stuff about tracking how information was flowing from agent to agent).

Another way of saying this is: Technologies that allow workers to be more isolated from each other gain you both convenience (because your coworkers no longer accidentally mess up what you’re doing) and also security (because you can remove your coworker’s permission to affect the code you’re running), but generally reduce efficiency. When we try to buy efficiency at the cost of convenience, we might lose security too.

This argument doesn’t suggest that AIs will necessitate development of novel security-critical infrastructure; the concern here is that we’ll need to stop using existing technology that enforces security properties. (And then developing new technology for these new workflows would either be an example of novel datacenter infrastructure as I defined it, or would be impossible to do without losing the performance gains.)
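The information-flow point above can be illustrated with a toy Python model, assuming a simplified world where isolated agents can only exchange data through one logged channel. The classes `IsolatedWorkspaces` and `SharedServer` are hypothetical stand-ins for separate machines versus agents sharing one Linux user; real isolation would come from the OS and network, not from a Python dictionary.

```python
from collections import defaultdict


class IsolatedWorkspaces:
    """Hypothetical model of agents on separate machines: each agent has a
    private store, and send() is the only path between agents, so every
    cross-agent flow is logged."""

    def __init__(self) -> None:
        self.stores: defaultdict[str, dict[str, str]] = defaultdict(dict)
        self.flow_log: list[tuple[str, str, str]] = []

    def write(self, agent: str, key: str, value: str) -> None:
        self.stores[agent][key] = value

    def read(self, agent: str, key: str) -> str:
        # An agent can only read its own store; anything else raises KeyError.
        return self.stores[agent][key]

    def send(self, src: str, dst: str, key: str) -> None:
        self.flow_log.append((src, dst, key))  # the monitored chokepoint
        self.stores[dst][key] = self.stores[src][key]


class SharedServer:
    """Hypothetical model of agents sharing one Linux user: a single store that
    everyone can read and write, with no chokepoint between agents."""

    def __init__(self) -> None:
        self.store: dict[str, str] = {}
        self.flow_log: list[tuple[str, str, str]] = []  # nothing ever appends here

    def write(self, agent: str, key: str, value: str) -> None:
        self.store[key] = value  # `agent` is ignored: writes are not attributed

    def read(self, agent: str, key: str) -> str:
        return self.store[key]  # any agent can read any other agent's data


iso = IsolatedWorkspaces()
iso.write("agent-a", "notes", "plan")
iso.send("agent-a", "agent-b", "notes")
print(iso.read("agent-b", "notes"), iso.flow_log)
# plan [('agent-a', 'agent-b', 'notes')]

shared = SharedServer()
shared.write("agent-a", "notes", "plan")
print(shared.read("agent-b", "notes"), shared.flow_log)
# plan []
```

On the shared server, agent-b obtains agent-a’s data without leaving any record, so a monitor that relies on a chokepoint has nothing to inspect.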

Side-channel prevention poses particularly tricky difficulties along these lines. When there’s a resource shared between multiple users, you inherently have to choose between inefficiency and side-channels. (If there’s a bathroom in your shared house, you’ll learn what time your roommate tends to shower, unless you make a rule that he’s only allowed to use it in the even-numbered hours and you’re only allowed to use it in the odd-numbered hours.) The throughput of the side-channel obviously varies depending on the details, but there are at least some cases (especially sharing memory/CPUs/GPUs) where preventing side-channels seems really hard.
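A toy Python model of the bathroom example, assuming a single shared resource, hour-granularity probing, and an observer who simply checks whether the resource is busy. `simulate_day` and the two victim schedules are hypothetical. It contrasts unrestricted sharing with time partitioning (the odd/even-hour rule): sharing lets the observer tell the victim’s schedules apart, while partitioning makes every observation identical, at the cost of the victim waiting for its slot.

```python
HOURS = 24


def simulate_day(victim_wants: set[int], partitioned: bool) -> tuple[list[str], int]:
    """Toy model of one shared resource over a day.

    Returns (what the observer sees on each of its probes, total hours the
    victim spends waiting). partitioned=True applies the odd/even-hour rule.
    """
    observer_view: list[str] = []
    victim_delay = 0
    for hour in range(HOURS):
        if partitioned:
            if hour % 2 == 0:
                # Even hours belong to the observer and the victim is barred,
                # so every probe finds the resource free whatever the victim does.
                observer_view.append("free")
                if hour in victim_wants:
                    victim_delay += 1  # victim waits one hour for an odd slot
        else:
            # No rule: the observer probes every hour and finds the resource
            # busy exactly when the victim is using it.
            observer_view.append("busy" if hour in victim_wants else "free")
    return observer_view, victim_delay


schedule_a = {7, 8}    # hypothetical victim schedules
schedule_b = {21, 22}

shared_a, _ = simulate_day(schedule_a, partitioned=False)
shared_b, _ = simulate_day(schedule_b, partitioned=False)
part_a, delay_a = simulate_day(schedule_a, partitioned=True)
part_b, delay_b = simulate_day(schedule_b, partitioned=True)

print(shared_a != shared_b)  # True: sharing reveals which schedule the victim has
print(part_a == part_b)      # True: partitioning reveals nothing
print(delay_a, delay_b)      # 1 1: but the victim pays in waiting time
```

The cost is modeled crudely as one hour of waiting per request that falls in an even hour; the observer also gives up the odd hours entirely. Real sharing of CPUs, memory, or GPUs involves much finer-grained contention than this model captures.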

On the other hand, increased AI labor might mean the AIs can not-that-expensively jump through annoying hoops created by fine-grained permission management, which might improve security (as discussed here).

Adopting new hardware will require modifying security-critical code

It looks like a bunch of security-critical components are currently under active development, so we should expect them to change over time. For example, networking equipment has been developed a bunch recently to support ML workflows, and some of it is designed to be user-configurable (e.g. Juniper’s user-programmable routers, which give a case study of doing all-reduce in your router (!)). This is evidence that there will be lots of deployed non-mature technology that needs security-critical code, some of which has been especially designed to allow (and maybe require?) end-user programmable configuration.
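To see why in-network all-reduce puts the router on the security-critical path, here is a toy Python sketch, assuming integer update vectors and a switch that sums them elementwise and returns the total to every worker. Both functions are hypothetical and do not reflect any vendor’s API; `tampered_allreduce` is a malicious variant of the same program.

```python
Vector = list[int]


def switch_allreduce(worker_updates: list[Vector]) -> list[Vector]:
    """Toy in-network all-reduce: the switch sums the workers' update vectors
    elementwise and sends the same total back to every worker."""
    width = len(worker_updates[0])
    total = [0] * width
    for update in worker_updates:
        if len(update) != width:
            raise ValueError("all workers must send equal-length vectors")
        for i, value in enumerate(update):
            total[i] += value
    return [list(total) for _ in worker_updates]


def tampered_allreduce(worker_updates: list[Vector], target: int, delta: Vector) -> list[Vector]:
    """Hypothetical malicious version: identical, except it adds `delta` to
    the total it returns to worker `target` only."""
    results = switch_allreduce(worker_updates)
    results[target] = [x + d for x, d in zip(results[target], delta)]
    return results


updates = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
print(switch_allreduce(updates)[0])  # [111, 222, 333]
bad = tampered_allreduce(updates, target=2, delta=[0, 0, 5])
print(bad[0], bad[2])                # [111, 222, 333] [111, 222, 338]
```

Because every worker’s update passes through the switch program, whoever can change that program can silently give different workers different results, which the workers cannot detect without an independent check.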

Infra rewrites can get you big performance improvements

AIs might be able to rewrite code to make it much faster. If they can do that for security-critical infrastructure code, you’ll be strongly incentivized to let them do so. I don’t know how important this consideration is because I don’t know how much inefficiency is coming from parts of the stack that are security-critical. My guess is that most improvements to training efficiency will come from parts of the stack that aren’t security-critical (e.g. writing new kernels, developing new architectures), though some parts seem security-critical (e.g. low-level network configuration). I don’t know how much efficiency gain on other workloads you can get from upgrading security-critical infrastructure.

The AI company builds new security-critical infra really fast for the sake of security

The AI company might be afraid that off-the-shelf code might have vulnerabilities that adversaries know about (either because of offensive cyber research that adversaries have done, or because it’s been backdoored), and so might want to rewrite everything to reduce security risk. AI company employees often propose doing this.

Also, AI companies might write a bunch of security-critical infra code really fast because they suddenly decide to build or physically reconfigure their datacenter really fast in order to increase physical security. These security requirements might rule out the possibility of them using an existing cloud compute provider, potentially necessitating a bunch of novel code.

Conclusion

I still feel pretty unsure about how all this is going to play out.

Talking to infrastructure engineers inside AI companies has been somewhat helpful for clarifying my thoughts here, but they mostly haven’t thought through the futurism part of this, which means that I can’t just rely on them to give me answers.

I’m hoping to think more about these questions over the next few weeks.

[1] I think the most plausible such security failures are the AI exfiltrating its weights, the AI hacking the datacenters enough that the AI company loses necessary observability into what’s happening inside them, or the AI seizing control of the AI company (perhaps by threatening or bribing company leadership).

[2] I’m using GDM as the example because they’re part of Google, a company that has famously good security and that doesn’t rely on external cloud providers. The situation seems basically just worse for Anthropic/OpenAI/x.ai.

[3] To start with, the AIs might be tasked with doing things that can lead to catastrophe without having to violate any security invariants that AWS enforces on users. One example is that the AIs might be able to launch rogue internal deployments without violating those security invariants, if they already have access to scary model weights. Another is that if the AIs are tasked with generating data for future training runs, they might covertly poison that data. The other big issue is that even though AWS has pretty good security, it’s probably not robust to wildly superintelligent AIs putting hundreds of thousands of human-equivalent years of work into hacking it.

[4] [These numbers are sloppy] In March 2027 in the AI 2027 scenario, the compute available to OpenBrain has increased 7x compared to today, while there are now 200K superhuman coder copies thinking at 30x human speed, for 6M human coder equivalents, which is like 4 OOMs more coders than it probably had in June 2025. So that’s about 3 OOMs less compute per coder than is currently available (7x more compute spread over roughly 10,000x more coders).
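The footnote’s arithmetic can be checked in a few lines of Python. The inputs are the scenario figures quoted above; the June 2025 headcount is not stated directly, so it is backed out from the footnote’s “4 OOMs” figure as an assumption.

```python
import math

# Figures from the AI 2027 scenario for March 2027, as quoted above.
copies = 200_000       # superhuman coder copies
speedup = 30           # each thinks at 30x human speed
compute_growth = 7     # compute relative to mid-2025

# 200K copies x 30x speed = 6M human coder equivalents.
coder_equivalents = copies * speedup

# Assumption: "4 OOMs more coders" implies roughly 6M / 10^4 = 600 human
# coders in June 2025. This is a rough figure, not a reported headcount.
coders_2025 = coder_equivalents / 10**4

# Compute per coder in March 2027 relative to June 2025.
per_coder_ratio = compute_growth / (coder_equivalents / coders_2025)
ooms_less = -math.log10(per_coder_ratio)

print(f"{coder_equivalents:,} coder equivalents")
print(f"compute per coder: {per_coder_ratio:.4f}x ({ooms_less:.2f} OOMs less)")
# 6,000,000 coder equivalents
# compute per coder: 0.0007x (3.15 OOMs less)
```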
