Black Forest Labs, one of the world’s leading AI research labs, just changed the game for image generation.

The lab’s FLUX.1 image models have earned global attention for delivering high-quality visuals with exceptional prompt adherence. Now, with its new FLUX.1 Kontext model, the lab is fundamentally changing how users can guide and refine the image generation process.

To get their desired results, AI artists today often use a combination of models and ControlNets — AI models that help guide the outputs of an image generator. This commonly involves combining multiple ControlNets or using advanced techniques like the one used in the NVIDIA AI Blueprint for 3D-guided image generation, where a draft 3D scene is used to determine the composition of an image.

The new FLUX.1 Kontext model simplifies this by providing a single model that can perform both image generation and editing, using natural language.

NVIDIA has collaborated with Black Forest Labs to optimize FLUX.1 Kontext [dev] for NVIDIA RTX GPUs using the NVIDIA TensorRT software development kit and quantization to deliver faster inference with lower VRAM requirements.

For creators and developers alike, TensorRT optimizations mean faster edits, smoother iteration and more control — right from their RTX-powered machines.

The FLUX.1 Kontext [dev] Flex: In-Context Image Generation

Black Forest Labs in May introduced the FLUX.1 Kontext family of image models, which accept both text and image prompts.

These models allow users to start from a reference image and guide edits with simple language, without the need for fine-tuning or complex workflows with multiple ControlNets.

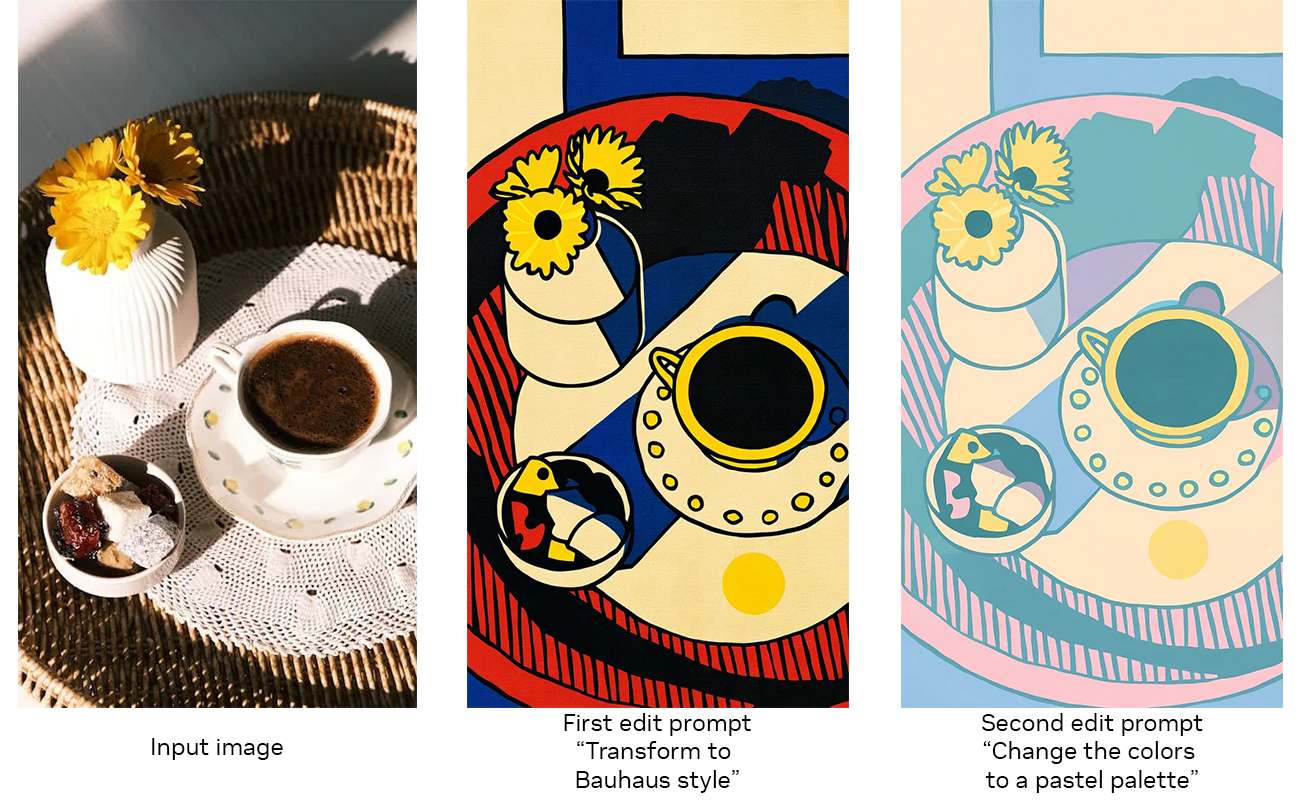

FLUX.1 Kontext is an open-weight generative model built for image editing using a guided, step-by-step generation process that makes it easier to control how an image evolves, whether refining small details or transforming an entire scene. Because the model accepts both text and image inputs, users can easily reference a visual concept and guide how it evolves in a natural and intuitive way. This enables coherent, high-quality image edits that stay true to the original concept.

FLUX.1 Kontext’s key capabilities include:

- Character Consistency: Preserve unique traits across multiple scenes and angles.
- Localized Editing: Modify specific elements without altering the rest of the image.
- Style Transfer: Apply the look and feel of a reference image to new scenes.
- Real-Time Performance: Low-latency generation supports fast iteration and feedback.

Black Forest Labs last week released FLUX.1 Kontext weights for download on Hugging Face, as well as the corresponding TensorRT-accelerated variants.

The [dev] model emphasizes flexibility and control. It supports capabilities like character consistency, style preservation and localized image adjustments, with integrated ControlNet functionality for structured visual prompting.

FLUX.1 Kontext [dev] is already available in ComfyUI and the Black Forest Labs Playground, with an NVIDIA NIM microservice version expected to be released in August.

Optimized for RTX With TensorRT Acceleration

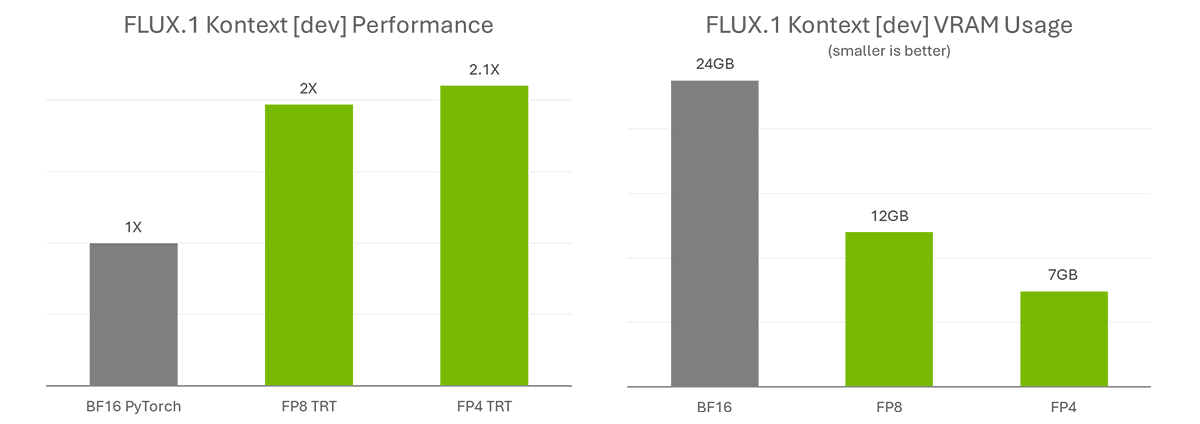

FLUX.1 Kontext [dev] accelerates creativity by simplifying complex workflows. To further streamline the work and broaden accessibility, NVIDIA and Black Forest Labs collaborated to quantize the model — reducing the VRAM requirements so more people can run it locally — and optimized it with TensorRT to double its performance.

Quantization reduces the model size from 24GB to 12GB for FP8 (Ada) and 7GB for FP4 (Blackwell). The FP8 checkpoint is optimized for GeForce RTX 40 Series GPUs, which have FP8 accelerators in their Tensor Cores. The FP4 checkpoint is optimized for GeForce RTX 50 Series GPUs, whose Tensor Cores accelerate FP4, and uses a new method called SVDQuant, which preserves high image quality while reducing model size.
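The size figures above follow roughly from the number of bytes stored per weight. The sketch below is a back-of-the-envelope estimate, not the actual quantization pipeline. It assumes the full 24GB is BF16 weights at 2 bytes each, and the function names are illustrative only.

```python
# Rough weight-size estimate: parameter count x bytes per parameter.
# Assumption: the 24GB figure is entirely BF16 weights (2 bytes each).
BYTES_PER_PARAM = {"BF16": 2.0, "FP8": 1.0, "FP4": 0.5}


def estimate_param_count(size_gb: float, fmt: str) -> float:
    """Infer an approximate parameter count from a checkpoint size in GB."""
    return size_gb * 1e9 / BYTES_PER_PARAM[fmt]


def estimate_size_gb(param_count: float, fmt: str) -> float:
    """Estimate checkpoint size in GB for a given numeric format."""
    return param_count * BYTES_PER_PARAM[fmt] / 1e9


params = estimate_param_count(24, "BF16")
for fmt in ("BF16", "FP8", "FP4"):
    print(f"{fmt}: ~{estimate_size_gb(params, fmt):.0f} GB")
```

The FP8 estimate matches the 12GB figure. The naive FP4 estimate comes out near 6GB rather than the quoted 7GB. The difference presumably comes from data that does not shrink to 4 bits, such as scaling factors or components kept at higher precision, but that is an inference rather than something stated here.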

TensorRT — a framework to access the Tensor Cores in NVIDIA RTX GPUs for maximum performance — provides over 2x acceleration compared with running the original BF16 model with PyTorch.

Get Started With FLUX.1 Kontext

FLUX.1 Kontext [dev] is available on Hugging Face (Torch and TensorRT).

AI enthusiasts interested in testing these models can download the Torch variants and use them in ComfyUI. Black Forest Labs has also made available an online playground for testing the model.
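Which variant to download depends on the GPU: FP4 targets GeForce RTX 50 Series, FP8 targets GeForce RTX 40 Series, and the original BF16 weights are the fallback. The helper below is a hypothetical sketch of that decision. The function name, the name-parsing rule and the returned labels are assumptions for illustration, not actual file or repository names.

```python
import re

# Assumed mapping from GeForce RTX series to the precision
# the article says each checkpoint is optimized for.
SERIES_TO_PRECISION = {"50": "FP4", "40": "FP8"}


def pick_precision(gpu_name: str) -> str:
    """Return a precision label for a GeForce RTX product name.

    Falls back to BF16 when the series is unknown or has no
    optimized checkpoint mentioned in the article.
    """
    match = re.search(r"RTX\s*(\d{2})\d{2}", gpu_name.upper())
    if match is None:
        return "BF16"
    return SERIES_TO_PRECISION.get(match.group(1), "BF16")


for name in ("NVIDIA GeForce RTX 5090", "NVIDIA GeForce RTX 4070 Ti", "NVIDIA GeForce RTX 3080"):
    print(name, "->", pick_precision(name))
```

Parsing the product name keeps the sketch dependency-free. A real workflow would more likely query the GPU's compute capability through its framework of choice.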

For advanced users and developers, NVIDIA is working on sample code for easy integration of TensorRT pipelines into workflows. It is expected in the DemoDiffusion repository later this month.

But Wait, There’s More

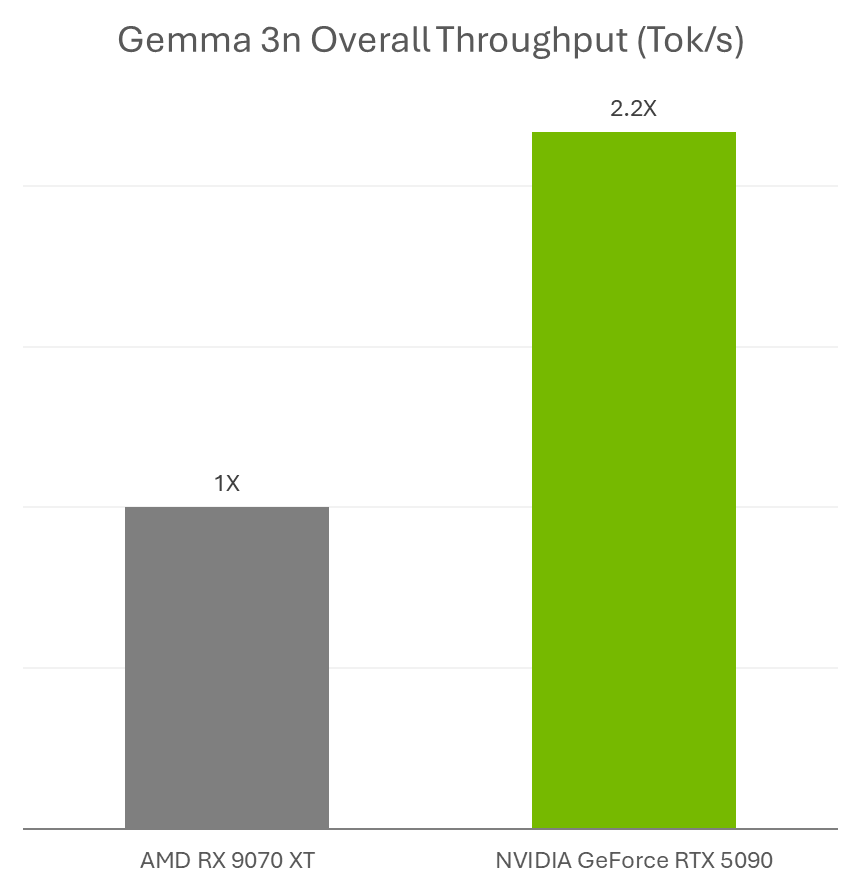

Google last week announced the release of Gemma 3n, a new multimodal small language model ideal for running on NVIDIA GeForce RTX GPUs and the NVIDIA Jetson platform for edge AI and robotics.

AI enthusiasts can use Gemma 3n models with RTX accelerations in Ollama and llama.cpp with their favorite apps, such as AnythingLLM and LM Studio.

Plus, developers can easily deploy Gemma 3n models using Ollama and benefit from RTX accelerations. Learn more about how to run Gemma 3n on Jetson and RTX.

In addition, NVIDIA’s Plug and Play: Project G-Assist Plug-In Hackathon — running virtually through Wednesday, July 16 — invites developers to explore AI and build custom G-Assist plug-ins for a chance to win prizes. Save the date for the G-Assist Plug-In webinar on Wednesday, July 9, from 10-11 a.m. PT, to learn more about Project G-Assist capabilities and fundamentals, and to participate in a live Q&A session.

Join NVIDIA’s Discord server to connect with community developers and AI enthusiasts for discussions on what’s possible with RTX AI.

Each week, the RTX AI Garage blog series features community-driven AI innovations and content for those looking to learn more about NVIDIA NIM microservices and AI Blueprints, as well as building AI agents, creative workflows, digital humans, productivity apps and more on AI PCs and workstations.
