🔁 Hugging Face retweeted

Hunyuan @TencentHunyuan

🚀 Introducing Hunyuan-A13B, our latest open-source LLM.

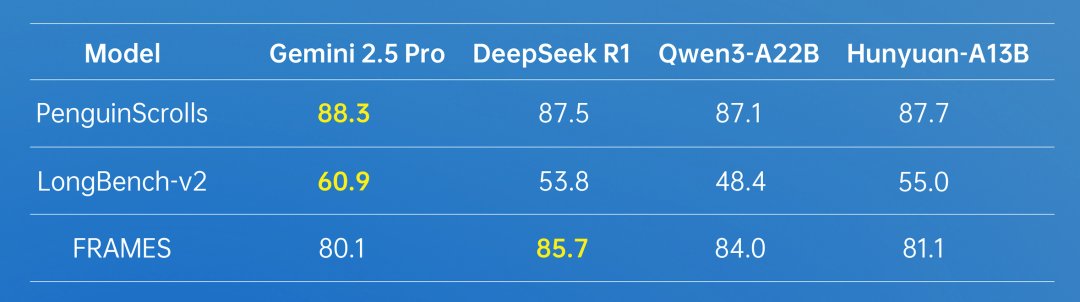

As an MoE model, it leverages 80B total parameters with just 13B active, delivering powerful performance that scores on par with o1 and DeepSeek across multiple mainstream benchmarks.

Hunyuan-A13B features a hybrid
