The placement of links within Interconnects has evolved a few times over the years, and hopefully this next home is a more stable one. I’m moving the links post from the Artifacts Log series to a standalone, slightly less than monthly, series. This’ll give them space to breathe. They’re a popular part of Artifacts Log based on the click data I get, but in this era with so much content it’s best to give you one obvious piece of content per email.

A separate post will also give me a bit more space to tell you why I’m reading them. Comments for these posts will be open, so folks can chime in on what they’re reading too.

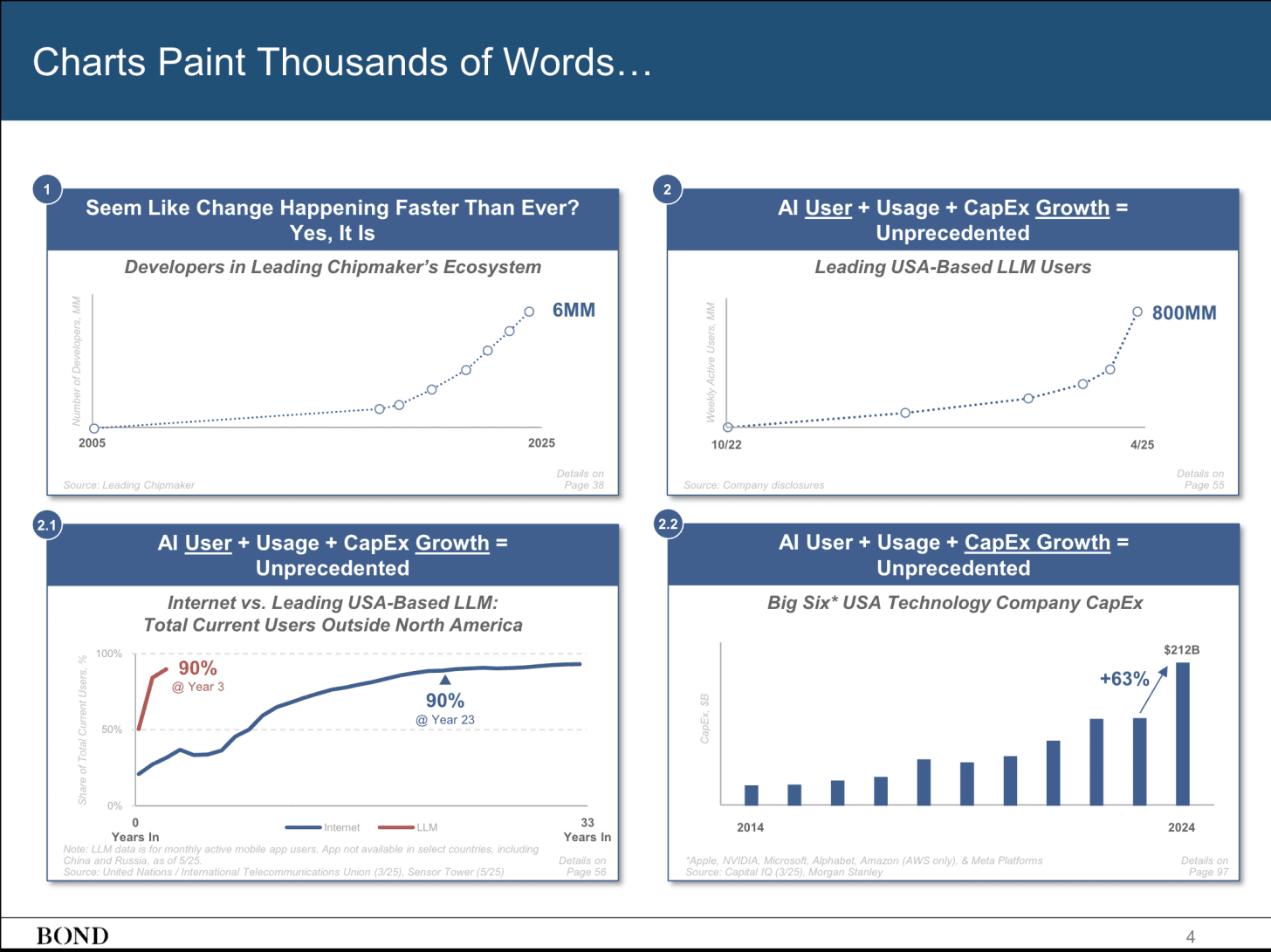

This Mary Meeker slide deck made the rounds weeks ago, but it’s still the best summary of the industry we’ve seen in a long time. It shows countless trends that are up and to the right in usage and revenue growth. There is so much evidence that people use AI more than you think and that the revenue forecasts for AI companies, at least for existing products and not far-out agents, are realistic because they’re supply-limited on compute (unlike previous software companies).

The core of what I’ve been reading is within the Substack AI content ecosystem. There’s so much great stuff getting published right now telling you where the technology is going — i.e. stuff not focused on noisy product releases or drama. Ones I liked include:

My new colleague ’s take on the path to AGI with RL. This complements my recent pieces on next-generation reasoning models, the philosophy of reasoning machines, and RL generally. Here’s an excerpt:

The development of AlphaGo illustrates this paradox perfectly:

RL was essentially the only viable approach for achieving superhuman performance in Go

The project succeeded but required enormous resources and effort

The solution existed in a "narrow passageway" - there were likely very few variations of the AlphaGo approach that would have worked, as can be seen by the struggle that others have had replicating AlphaGo’s success in other domains.

Another one is from an author I consistently recommend, , who leads model behavior at OpenAI. This post is on human-AI relationships, which is somewhat far out, but it reinforces how model behavior is going to get easier to steer as models get stronger. That opens up very different problems than the ones we face today, when LMArena-maxed models are our biggest problem.

In some ways this feels like rounding up who among the top tiers of AI circles wrote in public, but in reality plenty of the pieces from these authors wouldn’t make the cut.

The next one from of UC Berkeley and Physical Intelligence fame provided an interesting provocation on why language as the foundation of AI may have been special: it essentially gives the models a token space in which thinking is possible. The post answers the question: If we have so much more video data than text, why are text-based models better at understanding the world than models that just predict the next pixels?

Here’s an excerpt:

Unfortunately, things didn’t pan out the way that video prediction researchers had expected. Although we now have models that can generate remarkably realistic video based on user requests, if we want models that solve complex problems, perform intricate reasoning, and make subtle inferences, language models are still the main and only option.

And, of course, we have helping carry the torch for the few remaining people who are very optimistic about the potential of AI as a technology while still calling out the crazy moves of the industry. Too many of these critiques come from people who are critical of the technology as well, so their voices are ignored by people in power because they’re never being reasonable.

The post expanding on the problem of people making nonsensical critiques of AI is below, from a newer author I found. I describe this as an antidote to the Gary Marcus disease.

The last of the AI posts on Substack you need to read is the Latent Space interview with Noam Brown. Simply a great episode recapping recent topics.

On the long-form side, I’ve been reading the two books that came out recently about OpenAI: Empire of AI and The Optimist. They do very different things. Empire of AI is better overall for learning about how OpenAI and the Valley operate, but I skipped over some sections that were a bit too polemical for me. The Optimist has some nice history on Sam Altman’s origins and his time at Y Combinator, but the OpenAI material was much lighter, so I didn’t finish it and am happily starting Apple in China.

From here, let’s go to some quick hits:

A post asking whether reasoning models work like humans.

nanoVLLM: A minimal implementation of a complex inference library like vLLM.

A nice post on why Q-learning is unlikely to scale (hint: TD learning).

An analysis of the SWE-bench benchmark.

A beautiful, online-first computer vision textbook.

And that Karpathy talk at YC on the new era of software with LLMs, which you need to watch.

And to end this issue, for the Substack service-level nerds like me, you can read this post on how so many mainstream voices are now joining Substack. This actually lifts all boats by bringing more readers onto the platform.