Published on June 20, 2025 12:17 PM GMT

Recently, in a group chat with friends, someone posted this Lesswrong post and quoted:

The group consensus on somebody's attractiveness accounted for roughly 60% of the variance in people's perceptions of the person's relative attractiveness.

I answered that, embarrassingly, even after reading Spencer Greenberg's tweets for years, I don't actually know what it means when one says:

explains of the variance in .[1]

What followed was a vigorous discussion about the correct definition, and several links to external sources like Wikipedia. Sadly, it seems to me that all online explanations (e.g. on Wikipedia here and here), while precise, seem philosophically wrong since they confuse the platonic concept of explained variance with the variance explained by a statistical model like linear regression.

The goal of this post is to give a conceptually satisfying definition of explained variance. The post also explains how to approximate this concept, and contains many examples. I hope that after reading this post, you will remember the meaning of explained variance forever.

Audience: Anyone who has some familiarity with concepts like expected values and variance and ideally also an intuitive understanding of explained variance itself. I will repeat the definitions of all concepts, but it is likely easier to appreciate the post if one encountered them before.

Epistemic status: I thought up the "platonically correct" definition I give in this post myself, and I guess that there are probably texts out there that state precisely this definition. But I didn't read any such texts, and as such, there's a good chance that many people would disagree with parts of this post or its framing. Also, note that all plots are fake data generated by Gemini and ChatGPT --- please forgive me for inconsistencies in the details.

Acknowledgments: Thanks to Tom Lieberum and Niels Doehring for pointing me toward the definition of explained variance that made it into this post. Thank you to Markus Over for giving feedback on drafts. Thanks to ChatGPT and Gemini for help with the figures and some math.

Definitions

The verbal definition

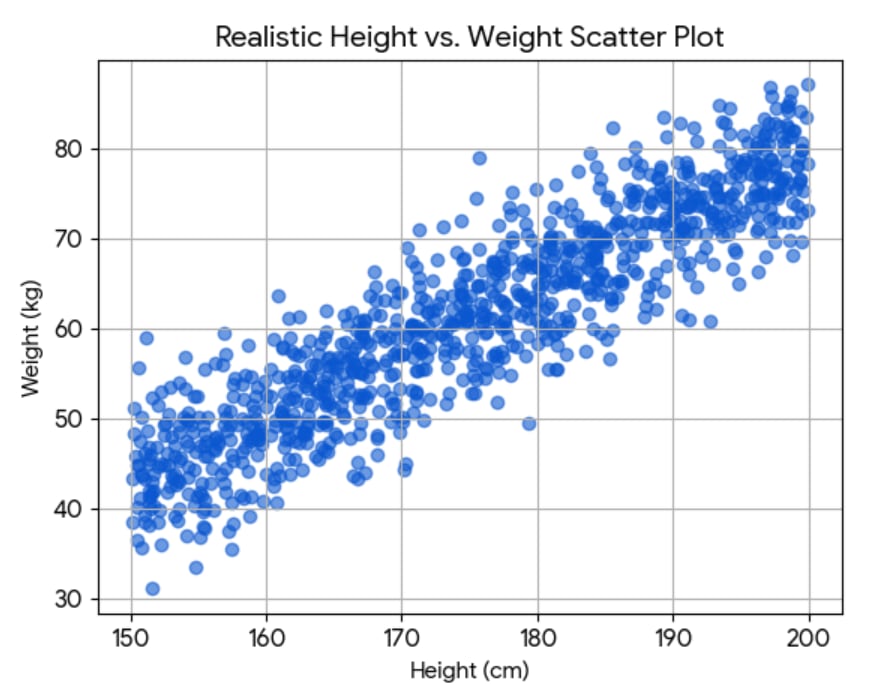

Assume you observe data like this fake (and unrealistic) height-weight scatterplot of 1000 people:

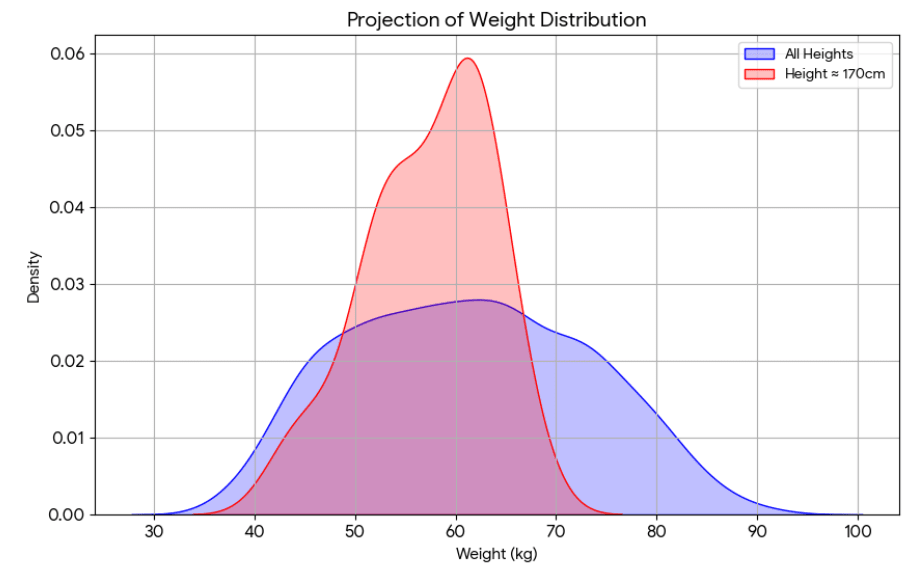

Let be the height and be the weight of people. Clearly, height is somewhat predictive of weight, but how much? One answer is to look at the extent to which knowledge of narrows down the space of possibilities for . For example, compare the spread in weights for the whole dataset with the spread for the specific height of 170cm:

The spread in these two curves is roughly what one calls their "variance". That height explains of the variance in weight then means the following: the spread of weight for a specific height is times the total spread. It's a measure of the degree to which height determines weight!

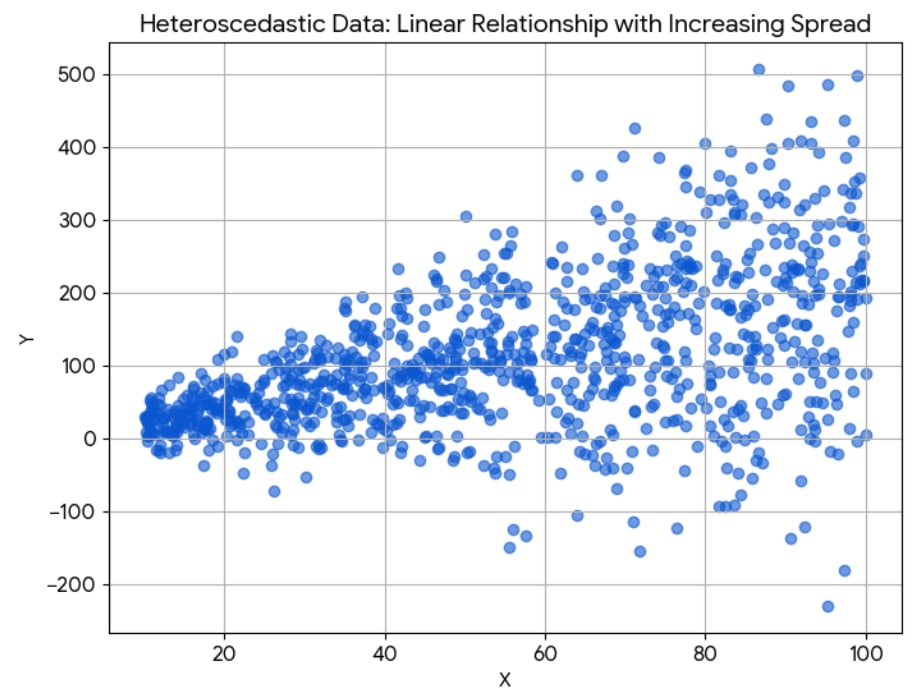

There is a caveat, which is that the spread might differ between different heights. E.g., look at yet another artificial scatter plot:

Here are three projections of the data on , for small, large, and all values of :

The spread varies massively between different ! So which spread do we compare the total spread (blue) with, when making a statement like " explains of the variance in "? The answer is to build the average of the spreads over all values of , weighted by how likely these values of are.

Thus, we can translate the sentence

explains of the variance in

to the following verbal definition:

On average, over all different values of weighted by their likelihood, the remaining variance in is times the total variance in .

This is the definition that we will translate to formal math below!

The mathematical definition

We now present a mathematical definition that precisely mirrors the verbal definition above. It's important to understand that the verbal definition is much more general than the figures I presented might suggest. In particular:

- The definition does not presuppose any particular relationship between and , like being linear up to some noise.The definition does not assume that and live "in the same space". It could be that samples of are very complex object, like "all genetic information about a human". But we assume to always be representable on a scale.The definition does not assume a specific way of predicting from . We will come to that later, when talking about regression, which leads to the more widely-used definitions in the literature.

With that out of the way, recall that we want to translate this sentence to formal math:

On average, over all different values of weighted by their likelihood, the remaining variance in is times the total variance in .

To accomplish this, we model and as random variables, which are jointly sampled according to a density . To be fully general, takes values in some arbitrary (suitable, measurable) space , and takes values in the real numbers . In regions of the "plane" where is large, we are more likely to sample datapoints than elsewhere. We can then express the sentence above in the following formula:

We need to explain all the symbols here! Let's start with the total variance in , which we denote by . It is the average of the squared distance of samples of from the mean of . As a formula:

Note that is the marginal density of , which is given from the joint density by The mean that appears in the variance is itself an average, namely over all of :

What about the remaining variance, which we denoted ? According to the verbal definition, it is an average of the remaining variances for different values of , weighted by their likelihood. So we get:

Now we need to explain the inner remaining variance. The idea: It is given in the same way as the total variance of , except that is now sampled conditional on being fixed. We obtain:

where is the conditional density, and where the conditional mean is given by

This explains the entire definition!

If we now want to make a claim of the form " explains of the variance of " and want to determine the fraction of unexplained variance for it, then we simply rearrange the top formula:

The fraction of explained variance is then

How to approximate

I now discuss how to approximate the fraction of unexplained variance via the formula above.

When you have lots of data

Imagine you sample lots of datapoints , which we conceptually think of as being sampled from the joint distribution . Define as the sample mean of , which approximates the true mean :

Then we easily get an approximation of the total variance as:

For each , define as the sample mean of taken over all for which . This approximates . With the number of for which , we obtain:

Using the chain rule , we can approximate the remaining variance as follows:

Putting it together, we obtain:

where the was canceled in the last step.

When you have less data: Regression

The formula above is nice in that it converges to the true fraction of unexplained variance when you have lots of data. However, it has the drawback that, unless we have enormous amounts of data, the means will probably be very inaccurate --- after all, if the specific value appears only once in the data (which is virtually guaranteed if is itself a continuous random variable in the special case ), then is only based on one data point, leading to . The numerator in the fraction then disappears. This results in a very severe case of overfitting in which it falsely "appears" as if explains all the variance in : We obtain the estimate .

This is why in practice the concept is often defined relative to a regression model. Assume that we fit a regression function that approximates the conditional mean of :

represents the best guess for the value of , given that is known. can be any parameterized function, e.g. a neural network, that generalizes well. If , then could be given by the best linear fit, i.e. a linear regression. Then, simply define , leading to outwardly the same formula as before:

Here, is the approximate fraction of variance in that cannot be explained by the regression model from . This is precisely the definition in the Wikipedia article on the fraction of variance unexplained.

As far as I can tell, the fraction of variance explained/unexplained is in the literature predominantly discussed relative to a regression model. But I find it useful to keep in mind that there is a platonic ideal that expresses how much variance in is truly explained by . We then usually approximate this upon settling on a hopefully well-generalizing statistical model that is as good as possible at predicting given knowledge of .

Examples

We now look at two more examples to make the concept as clear as possible. In the first, we study the variance explained by three different regression models/fits. In the second one, we look at variance explained by genetic information in twin studies, which does not involve any regression (and not even knowledge of the genes in the samples!).

Dependence on the regression model

Assume is the sidelength of a cube of water and is a measurement of its volume. The plot might look something like this:

Up to some noise, the true relationship is . If you use as our regression fit (green line in the graph), then we explain almost all variance in volume. Consequently, the fraction of variance unexplained by the regression function is roughly .

If you do linear regression, then your best fit will look roughly like the blue line, given by . This time, substantial variance remains since the blue line does mostly not go through the actual data points. But we still reduced the total variance substantially since the blue line is on average much closer to the datapoints than the mean over all datapoints is to those points (said mean is approximately ). Thus, the fraction of variance unexplained by the regression function is somewhere strictly between and : .

If you do linear regression, but your linear fit is very bad, like (red dotted line), then you're so far away from the data that it would have been better to predict the data by their total mean. Consequently, the remaining variance is greater than the total variance and the fraction of variance unexplained is .[2]

When you have incomplete data: Twin studies

Now, imagine you want to figure out to what extent genes, , explain the variance in the IQ, . Also, imagine that it is difficult to make precise gene measurements. How would you go about determining the fraction of unexplained variance in this case? The key obstacle is that all you can measure is IQ values , and you don't know the genes of those people. Thus, we cannot determine a regression function , and thus also no estimate to be used in the formula. At first glance this seems like an unsolvable problem; but notice that does not appear in the final formula of ! If only it was possible to determine an estimate of ...

There is a rescue, namely twin studies! Assume data , where are the unknown genes of an identical twin pair, and the IQ measures of both twins. We don't use regression but instead use an approach adapted from the true approximation from this section. Define the conditional means by:

Since we only have two datapoints per twin pair, to get an unbiased estimate of the conditional variance , we need to multiply the sample variance by a factor of (we did not need this earlier since we assumed that we have lots of data, or that our regression is well-generalizing).[3] With this correction, the fraction of unexplained variance computed over all datapoints becomes:

Now, notice that[4]

Consequently, we obtain

This is precisely , where is the intraclass correlation as defined in this wikipedia page. If we apply this formula to a dataset of 32 twin pairs who were separated early in life, then we arrive[5] at , meaning that (according to this dataset) genes explain ~79% of the variance in IQ.[6]

Conclusion

In this post, I explained the sentence " explains of the variance in " as follows:

On average, over all different values of weighted by their likelihood, the remaining variance in is times the total variance in .

If an intuitive understanding of this sentence is all you take away from this post, then this is a success.

I then gave a precise mathematical definition and explained how to approximate the fraction of unexplained variance when you have lots of data --- which then approximates the platonic concept --- and when you don't; in this case, you get the fraction of variance unexplained by a regression function.

In a first example, I then showed that the fraction of variance explained by a regression function sensitively depends on that function. In a second example, I explained how to use a dataset of twins to determine the fraction of variance in IQ explained by genes. This differs from the other examples in the fact that we don't need to measure genes (the explaining variable ) in order to determine the final result.

- ^

In the whole post, is a number usually between and .

- ^

Yes, this means that the fraction of explained variance is : the model is really an anti-explanation.

- ^

Here is a rough intuition for why we need that factor. Assume you have a distribution and you sample two datapoints with sample mean . The true variance is given by

Note that does not depend on ! Thus, if we knew , then the following would be an unbiased estimate of said variance:

However, we don't know the true mean, and so the sample variance we compute is

Now the issue is roughly that is precisely in the center between and , which leads to this expression being systematically smaller than with being replaced by . Mathematically, it turns out that the best way to correct for this bias is to multiply the estimate of the variance by precisely . See the Wikipedia page for details for general sample sizes.

- ^

Thanks to Gemini 2.5 pro for noticing this for me.

- ^

Code written by Gemini.

- ^

There are lots of caveats to this. For example, this assumes that twins have the same genetic distribution as the general population, and that the environmental factors influencing their IQ are related to their genes in the same way as for the general population.

Discuss