There's no shortage of predictions about AI's impact on software development. But while everyone is debating whether AI will replace programmers, my teammates at Pulley and I are using it daily, and as a result, we've become a tiny team that's punching well above our weight.

For context, we build equity and token management software - cap tables for startups, token management for crypto companies, and additional services. Our engineering team is around a dozen people, versus our biggest competitor, Carta, which has somewhere in the ballpark of 300 to 400 engineers[1] - about 30x the size!

Here's how AI helps us compete.

Building an AI-Oriented Culture

We don't have a top-down AI mandate from the C-Suite, but we do have something arguably more powerful: we hire people who are genuinely excited about working with AI tools. The result is an incredibly experimental culture where team members try new AI coding tools almost weekly and share their findings[2].

In the last month alone, engineers have used Cursor (the most popular), GitHub Copilot, Windsurf, Claude Code, Codex CLI, and Roo Code - probably others I'm forgetting. The choices aren't static. Someone discovers a new capability in a model or tool, tests it internally, and shares the results with the team.

We back this experimentation with a company-wide AI tools budget, plus additional API credits for engineering. Leadership has been highly supportive, recognizing that this isn't just an engineering thing - over 50% of our entire company uses AI regularly, from sales and marketing to support and design.

But having the tools is just the start. The real question is: how do you actually work with them day-to-day?

Embracing Uncertainty

Nearly everyone on our engineering team uses AI "most or all" of the time, but it's essential to understand what that means. The typical workflow involves giving the task to an AI model first (via Cursor or a CLI program) to see how it performs, with the understanding that plenty of tasks are still hit or miss.

And when AI fails, the engineers are still responsible for implementing the features. Vibecoding is the first step, but far from the last. Just because an AI wrote the code does not absolve humans from reading the output, ensuring adequate testing, and signing off on the pull request. "Cursor wrote it" is never an excuse for bugs or poor code quality.

That said, we also don't assume that model performance is static. We've developed internal "benchmarks": tasks that consistently trip up current models but give us a good sense of how the models are improving on the things we care about. One example: for whatever reason, we've learned that it's surprisingly tricky for the latest models to fully implement an API endpoint in our architecture, though that might not be the case in a few months.
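Here is a minimal, hypothetical sketch of how results from benchmarks like these might be tallied, assuming each run is logged as a (model, task, passed) record and re-run whenever a new model ships. The `Attempt` type, the `pass_rates` function, and the task name are illustrative assumptions, not a description of any real internal tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Attempt:
    """One run of one model against one benchmark task (hypothetical schema)."""
    model: str
    task: str
    passed: bool  # e.g. did the generated API endpoint build and pass its tests?


def pass_rates(attempts: list[Attempt]) -> dict[str, dict[str, float]]:
    """Return {model: {task: fraction of that model's attempts that passed}}."""
    # (model, task) -> [passed_count, total_count]
    totals: dict[tuple[str, str], list[int]] = defaultdict(lambda: [0, 0])
    for a in attempts:
        counts = totals[(a.model, a.task)]
        counts[0] += int(a.passed)
        counts[1] += 1

    rates: dict[str, dict[str, float]] = defaultdict(dict)
    for (model, task), (passed, total) in totals.items():
        rates[model][task] = passed / total
    return dict(rates)


attempts = [
    Attempt("model-a", "implement-api-endpoint", False),
    Attempt("model-a", "implement-api-endpoint", True),
    Attempt("model-b", "implement-api-endpoint", True),
]
print(pass_rates(attempts))
# {'model-a': {'implement-api-endpoint': 0.5}, 'model-b': {'implement-api-endpoint': 1.0}}
```

Tracking a pass rate over several attempts, rather than a single pass/fail, matters because the same model can succeed or fail on the same task from one run to the next.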

Leveraging Agentic Capabilities

When people imagine "coding with AI," they might picture asking ChatGPT for code snippets and pasting them into a text editor. That's not what we do.

In many cases, we're moving beyond autocomplete or chatbots and using agents (and soon, fleets) to implement features at scale. Tools like Cursor Agent and Claude Code can work with bash tools, web browsers, filesystems, and more. I've noticed that the latest agentic workflows feel less like using a tool and more like collaborating with competent junior developers[3] (albeit ones that still need clear direction).

This collaboration style has shifted the skills that matter. I often find myself thinking less about writing code and more about writing specifications - translating the ideas in my head into clear, repeatable instructions for the AI. In some ways, it feels like I'm leaning on my managerial skills as much as my technical ones.

In the long term, I suspect that this ability to communicate in specs (and effectively do context engineering) may become more important than low-level coding skills.

Designing An AI-Friendly Codebase

We've invested heavily in making our codebase easier for AI to understand and work with. This includes Cursor rules and READMEs within various parts of the codebase that explain layout principles and stylistic expectations.

We've also built custom tooling to enhance AI collaboration: MCP servers that expose local SQL tools and integrations with our project management system. These aren't just conveniences - they're infrastructure that makes AI agents more capable and reliable collaborators.
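To make the idea of a "local SQL tool" concrete, here is a hypothetical sketch of the kind of function an MCP server could expose to an agent. It assumes a SQLite database and read-only access, neither of which is specified above, and it leaves out the MCP server wiring itself; `run_readonly_query` is an illustrative name.

```python
import sqlite3
from contextlib import closing
from pathlib import Path


def run_readonly_query(db_path: str, sql: str, max_rows: int = 100) -> list[tuple]:
    """Run an agent-supplied query against a local SQLite file in read-only mode.

    Hypothetical helper. Opening the database with the SQLite URI parameter
    mode=ro means any write (INSERT, UPDATE, DROP, ...) raises
    sqlite3.OperationalError instead of modifying data. max_rows caps how
    many rows end up in the model's context.
    """
    uri = Path(db_path).resolve().as_uri() + "?mode=ro"
    # sqlite3's own context manager only handles transactions, so use
    # closing() to make sure the connection is actually closed.
    with closing(sqlite3.connect(uri, uri=True)) as conn:
        cursor = conn.execute(sql)
        return cursor.fetchmany(max_rows)
```

Letting the database reject writes, rather than trying to filter queries by keyword, keeps the guarantee in one place, and the row cap stops a broad `SELECT` from flooding the model's context window.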

It's still early days here, and we're far from having the best practices figured out. For example, we recently discovered that having too many Cursor rules can make some Claude models worse at following instructions, likely because the extra rules pollute the context. But this is where the culture of experimentation and sharing comes in handy.

Sharing Hard-Won Lessons

To be clear, AI adoption hasn't been all upside. We've learned plenty of lessons the hard way:

Models are developing (intentionally or not) different strengths and weaknesses. Gemini is a very strong planner. o3 is very methodical about problem-solving and tool usage. Claude loves to code - so much that you have to explicitly tell it to touch only relevant files, or else risk having it enthusiastically refactor your entire codebase.

Agent-based coding workflows require new programming habits. Case in point: frequent commits. If you don't commit regularly, the model might clobber all your good work with wayward changes when you ask for a slight modification later.

Many models have been trained to write way too many comments. It's great if they're generating snippets for you to learn from, but much less useful when every new pull request has "# New feature" sprinkled across multiple files.

Prompt engineering is a subset of context engineering. As I mentioned above, this means (in part) thinking in specs rather than prompts. But it also means providing the right context (like specific files or links to documentation) at the right time, instead of using bare-bones prompts and letting the LLM figure it out.
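A minimal sketch of what "thinking in specs" can look like in practice: a small structure that bundles the goal, explicit constraints, the relevant files, and documentation links into a single prompt. The `TaskSpec` fields, the `build_prompt` function, and the section layout are assumptions for illustration, not a format that any particular tool requires.

```python
from dataclasses import dataclass, field


@dataclass
class TaskSpec:
    """Hypothetical spec format: goal, constraints, and explicitly chosen context."""
    goal: str
    constraints: list[str] = field(default_factory=list)
    files: dict[str, str] = field(default_factory=dict)  # path -> file contents
    doc_links: list[str] = field(default_factory=list)


def build_prompt(spec: TaskSpec) -> str:
    """Render a spec into one prompt that carries its own context.

    Sections are emitted only when they have content, so a bare goal
    produces a bare prompt.
    """
    parts = [f"## Goal\n{spec.goal}"]
    if spec.constraints:
        parts.append("## Constraints\n" + "\n".join(f"- {c}" for c in spec.constraints))
    for path, contents in spec.files.items():
        parts.append(f"## File: {path}\n{contents}")
    if spec.doc_links:
        parts.append("## Reference docs\n" + "\n".join(f"- {url}" for url in spec.doc_links))
    return "\n\n".join(parts)
```

Constraints such as "Only modify the files listed below" or "Don't add comments that restate the code" are one way to head off the over-eager refactoring and excessive comments described above.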

We're also anticipating security challenges as tools like MCP become more sophisticated. When your agent can talk to the outside world while making writes to your codebase and data stores, how do you prevent malicious prompt injections or unexpected side effects?

Expanding Our Ambition

AI also provides value beyond just writing code. It's turned impossibly daunting tasks into regular Tuesday afternoons.

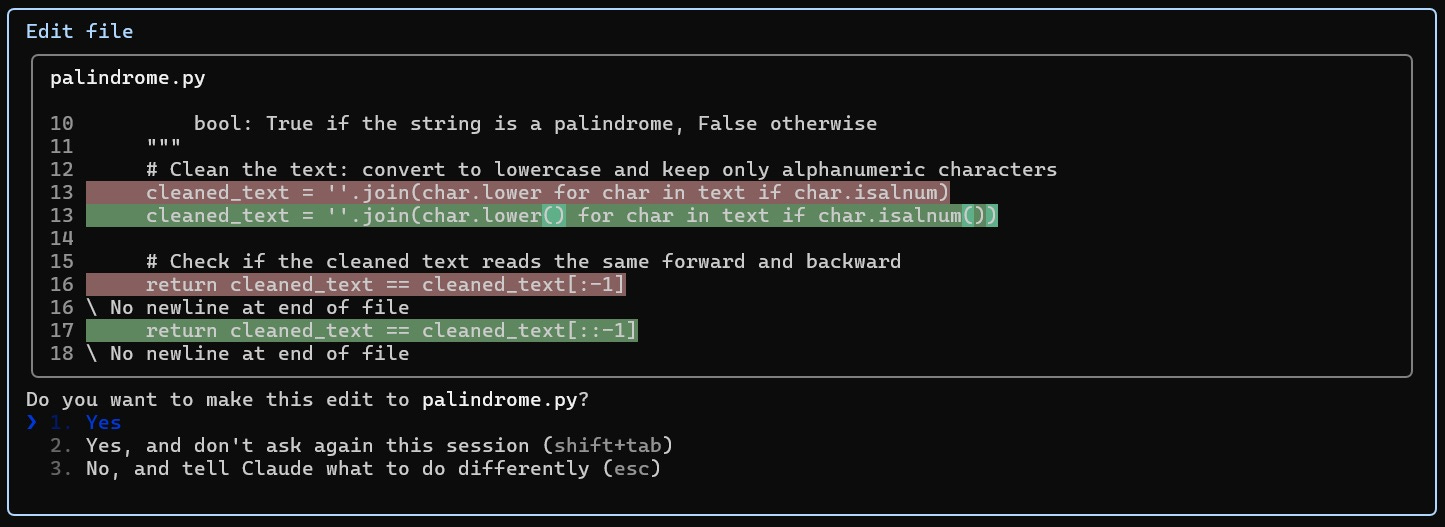

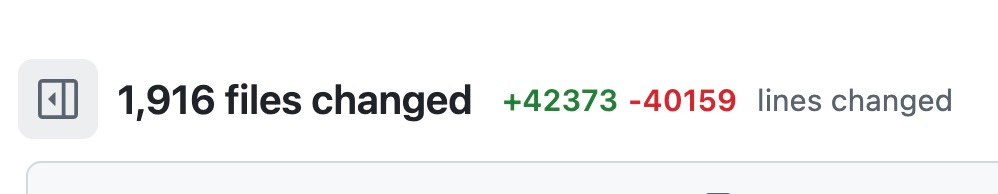

Last month, we shipped a major refactor that updated how we use primitive types across our entire codebase - over 1,900 files and 40,000 lines of code. Pre-AI, this was a "maybe next quarter, if the whole team has bandwidth" type of project. Now, one engineer knocked it out in a handful of days. Large refactoring projects that engineers might postpone forever suddenly become tractable.

AI also shines at tasks where the path forward is clear but the execution is tedious. Rather than dreading these necessary but boring refactors, engineers can tackle them knowing AI will handle the mechanical parts while they focus on the interesting decisions[4].

As crazy as it sounds, we've almost eliminated engineering effort as the current bottleneck to shipping product. It's now about aligning on designs and specifications - a much more challenging task, given the number of humans involved. But with how much the company has embraced AI, we may find ways to create 10x product managers and 10x designers, too.

Hiring for the AI Era

It's also worth noting that we've adapted our hiring process to reflect this new reality. Not only do we allow candidates to use AI tools during interviews, we encourage it. Surprisingly, most struggle to get into a groove with AI during the interview process. One candidate remarked that using AI "felt like cheating."

This reaction reveals how much the industry mindset still needs to evolve. We're not looking for people who can code without AI; we're looking for people who can code effectively with AI. That's a different skill set requiring comfort with ambiguity, strong communication skills, and the ability to think architecturally rather than just syntactically.

Case in point: we've seen candidates come in and use AI to generate code but then fail to explain why it works or struggle to debug it on the fly. Outside of Pulley, I see this all the time on social media - people who vibecode 80% of an app but struggle to iterate quickly as LLMs accidentally clobber their previous work (or worse, ship “working” code with major security flaws).

Looking Forward

We're approaching a world of AI agents that can act as virtual developers, taking tasks that require several hours and returning with complete pull requests. And although we're not seeing 100% success with these tools yet, if this frontier improves as quickly as the others have, it presents a major opportunity to rethink how we create software.

As I've said before, ignore the AI hype at your own risk - the gap between AI-native teams and everyone else is widening daily. So whether you're an individual engineer or leading a team, I'd encourage deep experimentation with these tools and models.

Start tomorrow: Pick your gnarliest refactoring task. Give it to Claude 4 or o3 with full context. Watch it fail in interesting ways. Learn from those failures. Within a month, you'll be shipping code you couldn't have imagined writing yourself.

The learning curve is real, and the early lessons can be painful. But the companies and engineers who master AI collaboration now will have an enormous advantage as these capabilities continue to improve. For a company like Pulley, already running lean and moving fast, AI adoption is fundamentally changing what's possible.

I’m still optimistic about the programmer's role in the age of AI. But increasingly, I see AI-powered engineers (and product managers, designers, and domain experts) replacing everyone else.

[1] I don't have an official source for this number, but I've taken numbers from old blog posts and third-party analysis, plus some rough math based on the company's current size.

[2] Seriously: every week our engineering team devotes at least a few minutes to sharing updates and workflows with the latest AI tools that they're using.

[3] Again, that doesn't mean the outputs are always perfect - far from it. We regularly regenerate answers when the first attempt isn't quite right, or back up conversations to explore different approaches.

[4] Personally, I've found AI to be a massive speed boost when working in Go, a language I'm not very familiar with. It's like having an expert pair programmer who can translate your intent into idiomatic code while you focus on the logic and architecture.