Published on June 17, 2025 10:38 AM GMT

[This spent a couple of days on top of Hacker News in February; see here for discussion. Best used as a loose visualization of LLM reasoning. Note that the distances used for the bar and line charts are actual cosine similarities, not t-SNE artifacts.]

Frames of Mind: Animating R1's Thoughts

We can visualize the "thought process" for R1 by:

- Saving the chains of thought as text
- Converting the text to embeddings with the OpenAI API
- Plotting the embeddings sequentially with t-SNE
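A minimal sketch of those three steps, under stated assumptions rather than the code in this repo: it assumes each "thought" is a blank-line-separated paragraph of the saved chain, that the embedding model is `text-embedding-3-small`, and that scikit-learn's `TSNE` does the projection. The function names `split_thoughts`, `embed_thoughts`, and `project_2d` are hypothetical.

```python
import re


def split_thoughts(chain_text):
    """Split a saved chain of thought into steps on blank lines (an assumption)."""
    parts = re.split(r"\n\s*\n", chain_text)
    return [p.strip() for p in parts if p.strip()]


def embed_thoughts(thoughts, model="text-embedding-3-small"):
    """Embed each step with the OpenAI embeddings endpoint.

    Needs the `openai` package and an OPENAI_API_KEY in the environment.
    The model name is an assumption, not taken from the original project.
    """
    from openai import OpenAI  # imported lazily so the splitter works without it

    client = OpenAI()
    response = client.embeddings.create(model=model, input=thoughts)
    return [item.embedding for item in response.data]


def project_2d(embeddings, perplexity=5.0, seed=0):
    """Project embeddings to 2D with t-SNE, keeping the original step order."""
    import numpy as np
    from sklearn.manifold import TSNE

    points = np.asarray(embeddings, dtype=float)
    # t-SNE requires perplexity to be smaller than the number of samples,
    # so this needs at least a handful of steps to be meaningful.
    perplexity = min(perplexity, len(points) - 1)
    tsne = TSNE(n_components=2, perplexity=perplexity, random_state=seed)
    return tsne.fit_transform(points)
```

Row `i` of the array returned by `project_2d` corresponds to thought `i`, so drawing the rows in order gives the animated path.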

Here's what it looks like when R1 answers a question (in this case "Describe how a bicycle works."):

Consecutive Distance

It might be useful to get a sense of how big each jump from "thought i" to "thought i+1" is. The graph below shows the difference between consecutive steps.

By default we calculate cosine similarity between the embeddings of consecutive steps and normalize across the set of all consecutive steps to the range [0, 1]. I'm interested in seeing when the bigger or smaller jumps happen in the "thought cycle".
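As a rough illustration of that calculation, the sketch below measures each jump and rescales the jumps to [0, 1]. It assumes the jump size is taken as 1 minus cosine similarity and that the normalization is min-max scaling; both are my reading of the description, and the function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def consecutive_distances(embeddings):
    """Distance from thought i to thought i+1, as 1 - cosine similarity (assumed)."""
    return [
        1.0 - cosine_similarity(a, b)
        for a, b in zip(embeddings, embeddings[1:])
    ]


def min_max_normalize(values):
    """Rescale values to [0, 1] across the whole set (assumed min-max scaling)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All jumps are the same size, so there is nothing to spread out.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]
```

For a chain with `n` thoughts this yields `n - 1` values, one per jump, which is what the bar chart plots.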

Combined Plot

The combined plot shows both at once.

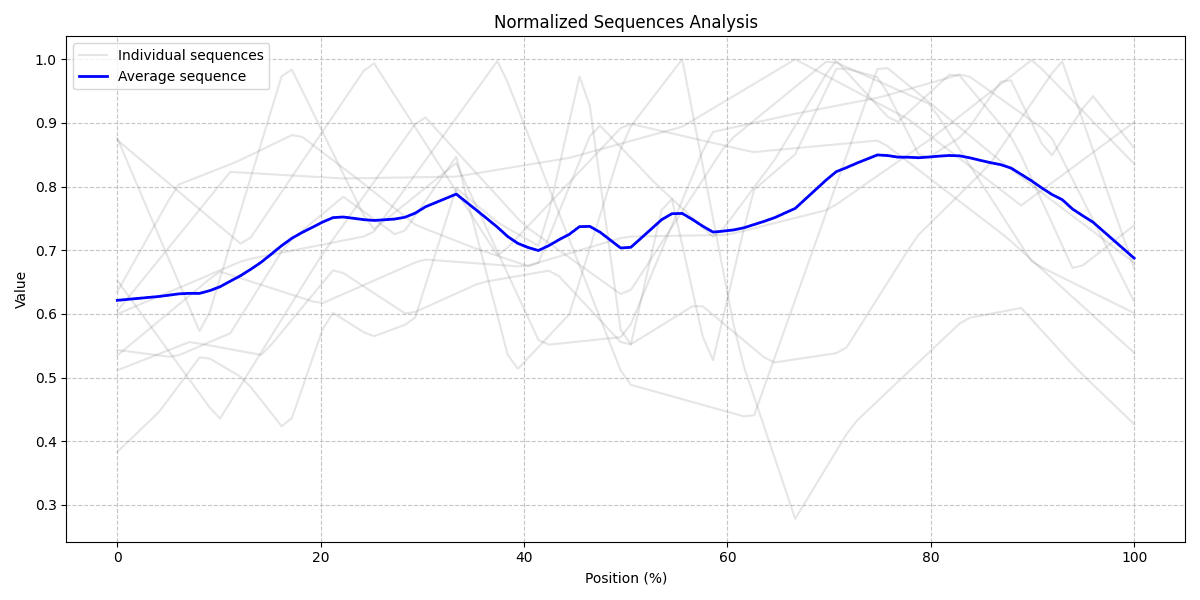

Aggregate Distances

The graph above shows the aggregate distances for 10 samples. To my eyes it looks like a "search" phase where the step size is large, followed by a stable "thinking" phase, followed by a "concluding" phase.
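The post does not say how chains of different lengths are combined, so the following is only one plausible way to do it: resample each chain's distance series onto a common number of points by linear interpolation over relative position, then average point by point. The names `resample` and `aggregate` and the default of 50 points are hypothetical.

```python
def resample(values, n_points):
    """Linearly interpolate a series onto n_points evenly spaced positions.

    Assumes n_points >= 2 and a non-empty series.
    """
    if len(values) == 1:
        return [float(values[0])] * n_points
    out = []
    for i in range(n_points):
        # Map output index i to a fractional index in the input series.
        pos = i * (len(values) - 1) / (n_points - 1)
        left = int(pos)
        right = min(left + 1, len(values) - 1)
        frac = pos - left
        out.append(values[left] * (1 - frac) + values[right] * frac)
    return out


def aggregate(series_list, n_points=50):
    """Average several distance series after resampling to a common length."""
    resampled = [resample(series, n_points) for series in series_list]
    return [sum(column) / len(column) for column in zip(*resampled)]
```

With this alignment, the start, middle, and end of each chain line up regardless of how many thoughts it contains, which makes phase-like patterns easier to compare across samples.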

Usage

I used these prompts:

- Describe how a bicycle works.
- Design a new type of transportation.
- Explain why leaves change color in autumn
- How should society balance individual freedom with collective good?
- How would you resolve a conflict between two people with opposing views?
- What makes a good life?
- What would happen if gravity suddenly doubled?
- What's the best way to comfort someone who is grieving
- Why do humans make art?
- Why do people tell jokes?

The chains are available in data/chains. To pull a chain from DeepSeek's public chat interface, paste the "pull_cot.js" script into your browser console while a chat is open; the chain downloads automatically.

Install the required packages listed in the Pipfile, then run the function in run.py.
