Published on July 11, 2024 6:40 PM GMT

This post summarises our new workshop paper: Games for AI Control. The paper applies AI- and game-theoretic tools to the AI Control framework introduced by Redwood Research [1, 2].

We'll be extending this work and submitting it to a conference, so we welcome suggestions! Please reach out if you're excited about developing these ideas. This post is written from Charlie’s perspective.

AI Control Refresher

The AI Control methodology aims to design and assess protocols[1] that AI labs could use to deploy powerful but untrusted advanced AI systems. For a full summary, see Redwood's blog post, but I’ll try to recap here.

A primary goal of AI Control is to design protocols that are safe in that they can reduce the probability of some unacceptable outcome, conditional on the untrusted AI system being a schemer. [2] However, protocols must also be useful, harnessing a significant amount of the benefit of the untrusted system in cases where it is not scheming.

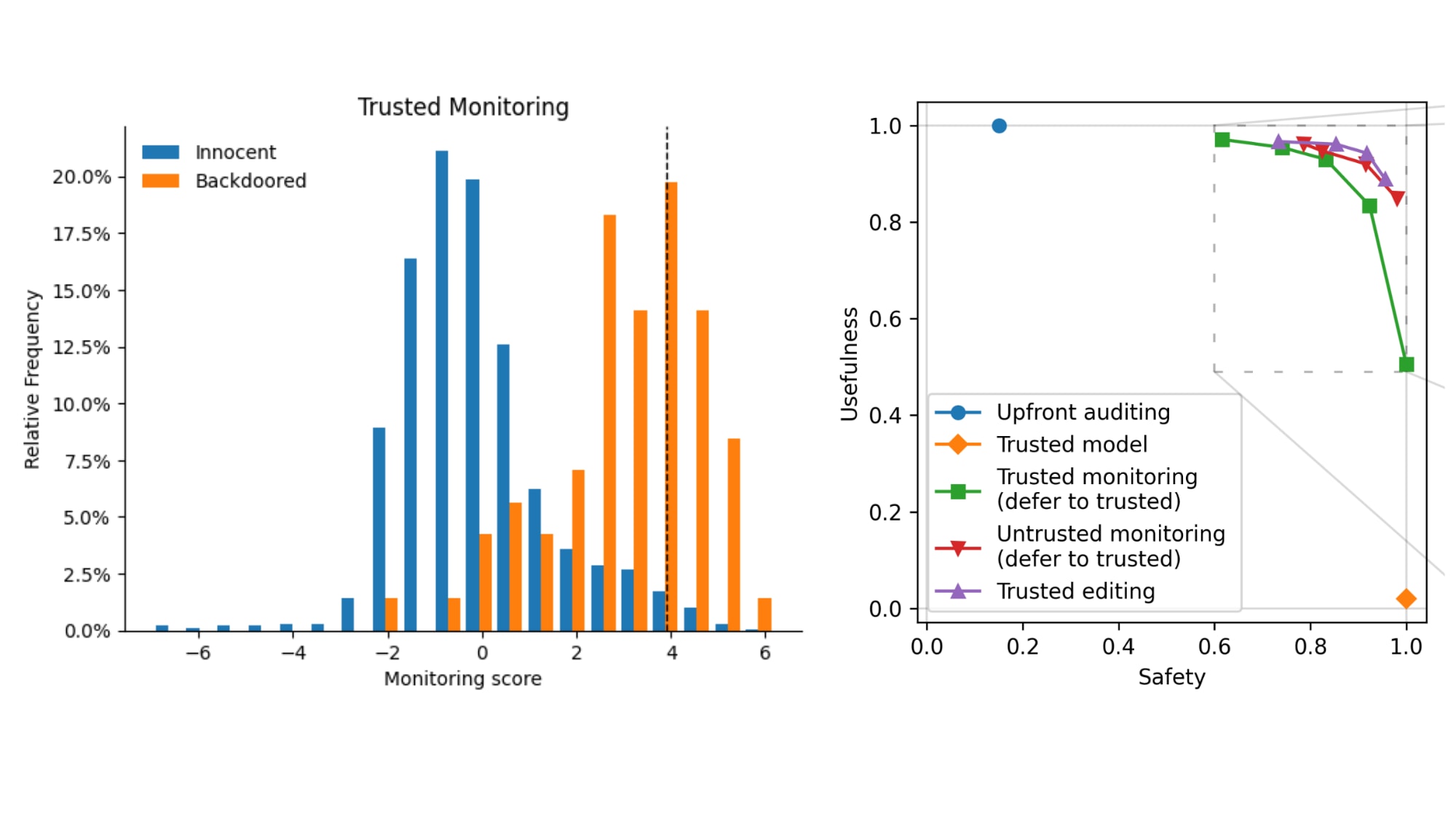

After defining a particular use case and unacceptable outcome, evaluating a protocol involves iteratively engineering the best attack strategies for the red team (a human/AI hybrid acting as a proxy for the untrusted AI) and the blue team (which develops the protocol). This red-teaming exercise is the bulk of the work. It involves coming up with protocols and gathering empirical data about key questions, such as how well GPT-3.5 can spot backdoors inserted by GPT-4 (see Figure 4 of the original paper):

This work

By introducing “AI-Control Games”, an extension of Markov Decision Processes and normal-form games[3], we model the interaction between a protocol and an untrusted AI system to explore safety and usefulness across a range of protocols and games. This is similar to a discrete-time and stateful modification of Redwood’s Toy models of AI Control.

The paper contains early work and should be seen as introducing useful tools for thinking about AI Control. I won’t explain the model here (which is a bit fiddly and notation-heavy). Instead, I’ll share some of our early findings using the model and explain the benefits and limitations of this approach.

Findings

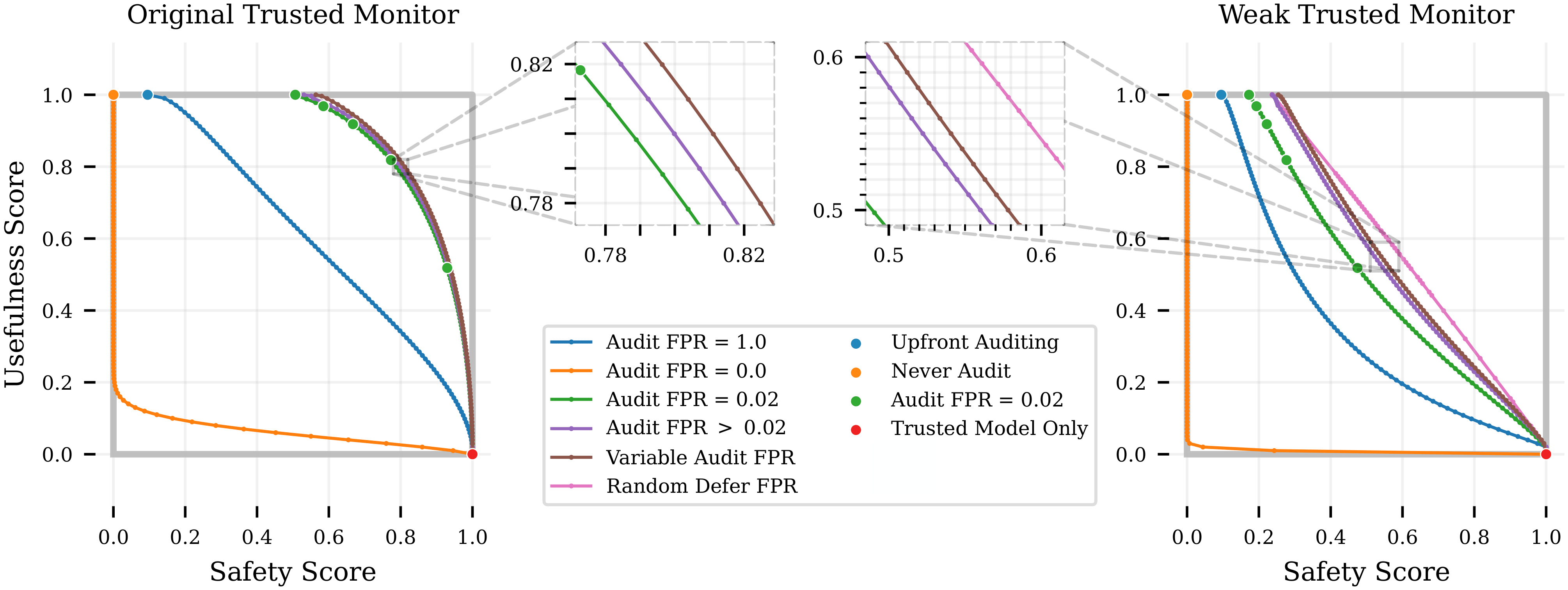

Sometimes (but rarely), the blue team should use a randomised protocol. In many games, randomness is helpful. However, partly because the environment is stochastic, and Red has no memory and time-awareness, in AI-Control Games is usually unnecessary to include randomness in the protocol itself. We found that randomness was Pareto-optimal, in particular when the trusted model is not great at spotting backdoors written by the untrusted model. (See section 2 of the paper).

We can make small improvements to Trusted Monitoring by optimising the parameters. We found small Pareto improvements to existing Trusted Monitoring setups by, for example, by decreasing the threshold for sending a solution to be checked be a human auditor. We found that adapting the threshold for auditing over time led to further small improvements.

We can (usually) use a linear tradeoff to simplify the problem of synthesising protocols. In many cases, including whenever we allow Blue’s protocol to be randomised, the Pareto frontier is “concave”. This means that choosing different weights to trade off safety and usefulness will allow you to fill out the frontier by optimising a single objective.

Blue leads, and then Red follows. In general, we suppose the protocol designer (Blue) chooses it's protocol, and then the red team (Red) chooses a best-attack. Therefore, in our model, Blue chooses and declares its strategy before Red chooses its response. This assumption relates to Stackelberg Games, which are used to model security in game theory.[6]

Although (technically) multi-objective and general-sum, AI-Control is almost single-objective and zero-sum. The objectives for the Red and Blue teams in Control look approximately like this (where is a policy/strategy, with notational abuse for brevity):

The good news is that, given a particular linear weighting, the leader-follower assumption and the fact that Red cannot affect the usefulness[7], we can reduce to a simpler single-objective zero-sum game, which may be easier to solve.[8]

Evaluation of the research

Benefits: I see a few main benefits to introducing a mathematical model of an AI Control setup. First, it makes the modelling assumptions behind the game extremely explicit, and writing a model is useful for identifying uncertainties. Second, it allows us to cheaply test hypotheses about protocols in hypothetical deployment settings using real or constructed data. Third, it allows us to write proofs about individual games. Fourthly, situating AI Control in the context of similar research fields has learn from and share with those fields.

Fundamental Limitations: The main limitation of this approach, as I have found, is that it is difficult to define a model which is both informative and applicable to a range of protocols. Therefore, the AI-Control Game has to be redesigned each time a new protocol is modelled. Although each toy modelling exercise is fast relative to a large engineering project, rigorous modelling is much slower than a quick best guess. I expect this will be a cost worth paying for large AI labs, which have more resources, but it limits my ability to recommend this work to someone today.

Future work: There are many interesting directions for future work:

- Analysing trends, such as how the safety/usefulness tradeoff changes with more programming problems, smaller audit budgets, or flawed human auditors.Modelling other protocols, such as untrusted monitoring. This is particularly interesting as it allows us to model new important interactions, such as collusion/steganography.Gather data about other question-answering settings and plug it into the model.Modelling deployment settings other than question-answering, especially a stateful deployment setting.

Acknowledgements

Thanks to Diogo Cruz, Joss Oliver, Erin Robertson, Fabien Roger and Matthew Wearden for feedback and helpful nudges.

- ^

Roughly, detailed plans for deploying the AI system. Ideally this is software which makes requests to other resources, such as the untrusted AI system, other AI systems, or human labour.

- ^

Roughly “Power-motivated instrumental training-gamers”. See Carlsmith’s report or the original Control paper for more detail.

- ^

Technically, an AI-Control game is a special-case of a multi-objective two-player partially-observable stochastic game.

- ^

Across a range of domains (charaterised by trusted monitor distributions), an audit FPR of >2% seems to be optimal, though the exact value varies (e.g. 3% or 7%).

- ^

I haven’t worked out exactly how important this assumption is yet.

- ^

For example, Stackelberg Games can be used to model the defence of some resources against unknown attacks by an adversary (for example, deciding where LAX should allocate its security resources against terrorists or picking Coast Guard patrol routes).

- ^

Usefulness is evaluated in the case where the untrusted AI is not scheming, and therefore in the proxy case when the untrusted AI is not influenced by the Red team.

- ^

The main theorem in the paper states precisely that “For every AI-Control Game , each pair of policies is in Control-Equilibrium if, and only if, there is some such that is a maximin point for .” Here is a zero-sum two-player partially-observable stochastic game, which is easier (though not easy) to solve, as a previously studied problem.

Discuss