Published on June 6, 2025 1:30 AM GMT

My wife and I are power users of Large Language Models (LLMs). My go-to LLM has been Google Gemini, while she has been using ChatGPT extensively for almost a year. We make a point of using these models for both personal and professional tasks to make our lives more efficient.

As power users of LLMs, we constantly run into their shortcomings. One of the most obvious is the models' poor short-term memory. Humans can have a similar limitation, in the form of a disorder called anterograde amnesia.

What is Anterograde Amnesia?

Anterograde amnesia is a type of memory loss that occurs when you can’t form new memories. In the most extreme cases, this means you permanently lose the ability to learn or retain any new information.

Temporary anterograde amnesia often occurs with binge drinking. If you’ve ever been blackout drunk, you have experienced anterograde amnesia. Permanent anterograde amnesia is quite rare and is often associated with brain damage. It is rarer still as a standalone disorder: typically it occurs alongside retrograde amnesia, as it can in a condition we’ve all heard about: dementia.

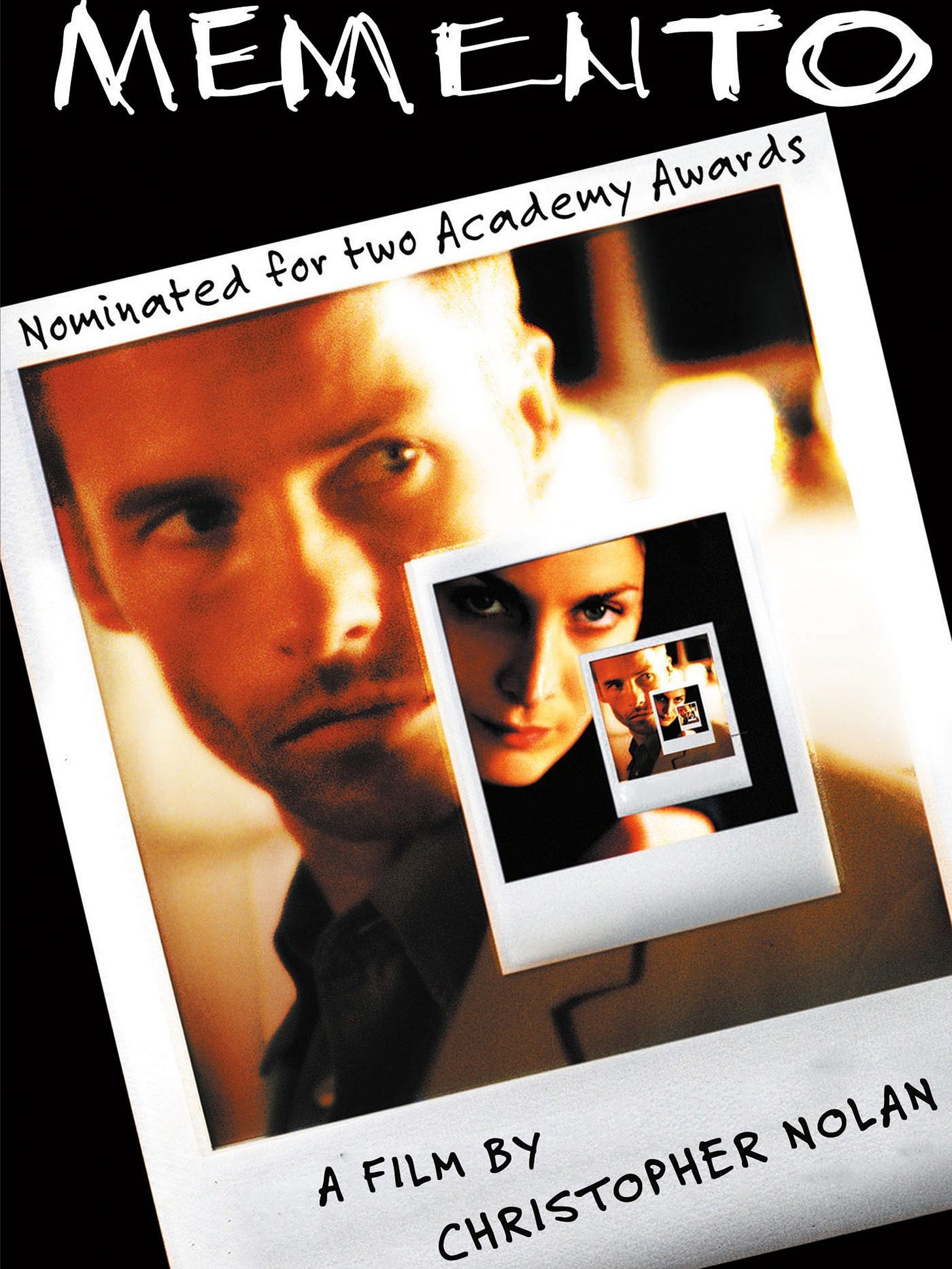

Memento

One of the best representations of standalone permanent anterograde amnesia is the main character of the film Memento. In the film, Leonard Shelby is forced to navigate the world in a very peculiar way due to his disorder, while trying to avenge the murder of his wife.

Leonard has to use several tools, such as tattoos, notes, and polaroids, to reconstruct memories of previous moments. Very early in the film you begin to realize that Leonard can’t function as a normal human being without assistance. This is one of the most striking similarities between Leonard and LLMs: they both need to be guided and assisted by a human in order to be functional.

Things that are simple even for children, such as remembering a hotel room or what day it is, are extremely difficult for Leonard. Despite having processes to remember, Leonard has to depend on other characters to function, both on a day-to-day basis and in working toward his long-term goal of finding his wife’s murderer.

LLMs with Amnesia

LLMs do not suffer from anterograde amnesia to the degree that Leonard does in Memento. This is because models have a context window: the amount of text the model can process and remember at any given time. Models like Gemini 2.5 Pro have context windows of up to 1 million tokens, which is a lot! This means that you can have a very long conversation with a model, and the model will be able to recall every part of that conversation until you hit the context window limit.
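To make the idea of a context window limit concrete, here is a minimal sketch in Python. It is a toy, not how any particular model or chat product works: the `ContextWindow` class, the word-based `count_tokens` helper, and the choice to silently drop the oldest messages are all assumptions made for illustration. Real systems use proper tokenizers and may handle overflow differently.

```python
from collections import deque


def count_tokens(text):
    # Assumption: one whitespace-separated word counts as one token.
    # Real tokenizers split text differently.
    return len(text.split())


class ContextWindow:
    """Toy conversation buffer with a fixed token budget."""

    def __init__(self, max_tokens):
        self.max_tokens = max_tokens
        self.messages = deque()  # oldest message sits on the left
        self.used = 0

    def add(self, message):
        self.messages.append(message)
        self.used += count_tokens(message)
        # Assumed policy: forget the oldest messages until everything fits.
        while self.used > self.max_tokens and len(self.messages) > 1:
            oldest = self.messages.popleft()
            self.used -= count_tokens(oldest)

    def recall(self, keyword):
        # Only messages still inside the window can be found.
        return [m for m in self.messages if keyword.lower() in m.lower()]


window = ContextWindow(max_tokens=12)
window.add("My hotel room is number 21")
window.add("Teddy drives a grey car")
window.add("Today is Tuesday")  # pushes the total over budget

print(window.recall("hotel"))    # the hotel note has fallen out of the window
print(window.recall("tuesday"))  # the most recent note is still there
```

With a budget of 12 "tokens", the third message forces the first one out, so the hotel room is forgotten even though it was stated clearly earlier in the same conversation.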

However, this "memory" is strictly confined to a single conversation. In my experience, LLMs are not very good at retrieving information from other conversations when prompted in a new chat window. I have also found that if your prompt to recall information within a long conversation is not very specific, the LLM can get confused easily.

If we apply context windows to Leonard, it would be as if he kept many different journals, each related to a task or question that came up at a given time. If Leonard were an LLM, he would be able to read every word of each journal instantly. However, unless what he was looking for within the journal was perfectly clear, he might not be able to find the right answer. If he only kept one journal and wrote everything there, he would fill it up really quickly, rendering the journal useless.
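The journal analogy can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the `journals` store, the `write` and `look_up` helpers, and the chat names are all hypothetical, and the sketch ignores any cross-chat memory features that some products layer on top.

```python
from collections import defaultdict

# Hypothetical store: one independent journal (list of notes) per chat.
journals = defaultdict(list)


def write(chat_id, note):
    journals[chat_id].append(note)


def look_up(chat_id, keyword):
    # Only the journal for this chat is searched; the others are invisible.
    return [n for n in journals[chat_id] if keyword.lower() in n.lower()]


write("trip-planning", "Flight to Lisbon leaves at 9:40")
write("work", "Quarterly report is due Friday")

print(look_up("trip-planning", "flight"))  # found in its own journal
print(look_up("work", "flight"))           # nothing: wrong journal
```

The note about the flight exists, but asking for it from the "work" journal returns nothing, which mirrors what happens when you ask a new chat window about something you said in a different conversation.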

Conclusion

I am not an expert on human memory, and it is a field that is not well understood. In some ways our memories are vastly superior to those of LLMs, while in others LLMs are already superhuman. An LLM’s long-term memory covers a huge share of the world’s written common knowledge, while an average human’s long-term memory is vastly smaller. LLMs are able to recall a long-term memory near instantly, while humans might take longer, need an association, or not be able to recall a long-term memory at all.

Humans are able to convert short-term memories into long-term memories on their own, while LLMs, as far as I know, cannot: what a model knows long term stays fixed after training. If a frontier model company is able to figure out a way to consolidate short-term memories into long-term ones the way humans do, it will be a breakthrough that will make LLMs vastly more efficient and useful, getting us closer to model generality (the ability for an AI to perform any intellectual task a human can).

I am glad experts are publicly asking themselves similar questions, and I am confident that researchers at all the frontier labs are trying to solve this key memory problem.
