Published on May 29, 2025 6:49 PM GMT

Everyone around me has a notable lack of system prompt. And when they do have a system prompt, it’s either the eigenprompt or some half-assed 3-paragraph attempt at telling the AI to “include less bullshit”.

I see no systematic attempts at making a good one anywhere.[1]

(For clarity, a system prompt is a bit of text, a subcategory of "preset" or "context", that's included in every single message you send the AI.)

No one says “I have a conversation with Claude, then edit the system prompt based on what annoyed me about its responses, then I rinse and repeat”.

No one says “I figured out which phrasings most affect Claude's behavior, then used those to shape my system prompt”.

I don't even see a “yeah I described what I liked and don't like about Claude TO Claude and then had it make a system prompt for itself”, which is the EASIEST bar to clear.

If you notice limitations in modern LLMs, maybe that's just a skill issue.

So if you're reading this and don't use a personal system prompt, STOP reading this and go DO IT:

- Spend 5 minutes on a google doc being as precise as possible about how you want LLMs to behave
- Paste it into the AI and see what happens
- Reiterate if you wish (this is a case where more dakka wins)

It doesn’t matter if you think the LLM cannot properly respect these instructions: this’ll make it at least marginally better at accommodating you (and I think you’d be surprised how far it can go!).

PS: as I should've perhaps predicted, the comment section has become a de facto repo for LWers' system prompts. Share yours! This is good!

How do I do this?

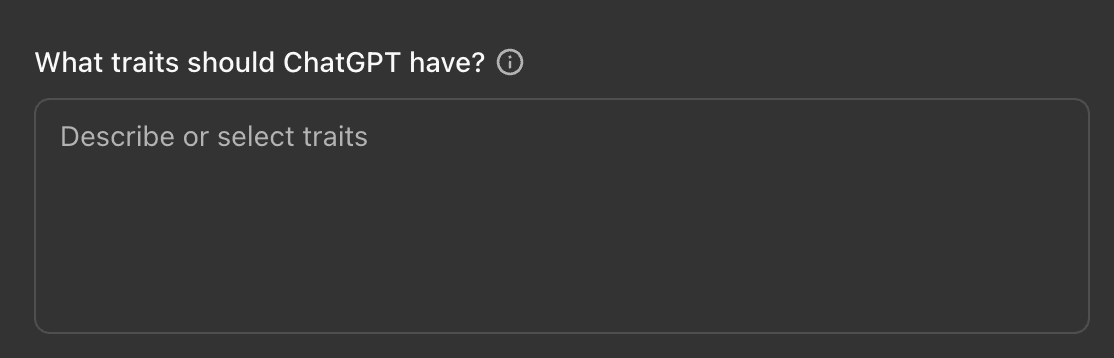

If you’re on the free ChatGPT plan, you’ll want to use “settings → customize ChatGPT”, which gives you this popup:

This text box is very short and you won’t get much in.

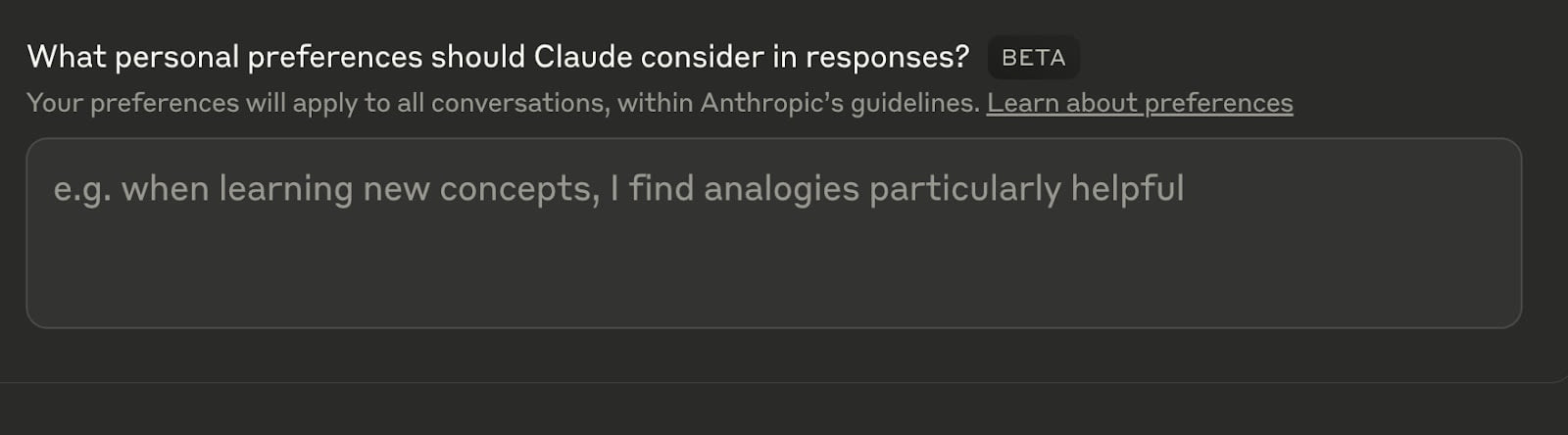

If you’re on the free Claude plan, you’ll want to use “settings → personalization”, where you’ll see almost the exact same textbox, except that Anthropic allows you to put a practically unlimited amount of text in here.

If you get a ChatGPT or Claude subscription, you’ll want to stick this into “special instructions” in a newly created “project”, where you can stick other kinds of context in too.

What else can you put in a project, you ask? E.g. a pdf containing the broad outlines of your life plans, past examples of your writing or coding style, or a list of terms and definitions you’ve coined yourself. Maybe try sticking the entire list of LessWrong vernacular into it!

In general, the more information you stick into the prompt, the better for you.
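If you'd rather assemble that context yourself (say, to paste it into a settings box or a playground), here is a minimal sketch in Python. The function name and the `<document>` tag format are my own assumptions for illustration; neither ChatGPT nor Claude requires this layout, it just keeps the pieces easy to tell apart.

```python
def build_project_context(system_prompt: str, documents: dict[str, str]) -> str:
    """Join a system prompt and named documents into one block of text.

    Hypothetical helper: the <document> wrapper is an illustrative
    convention, not a format required by any provider.
    """
    parts = [system_prompt.strip()]
    for name, text in documents.items():
        # Label each document so the model can see where one ends
        # and the next begins.
        parts.append(f'<document name="{name}">\n{text.strip()}\n</document>')
    # Blank lines between sections keep the result readable when pasted.
    return "\n\n".join(parts)
```

For example, `build_project_context(my_prompt, {"glossary": glossary_text, "writing_samples": samples_text})` gives you a single string with the prompt first and each document labeled after it.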

If you're using the playground versions (console.anthropic.com, platform.openai.com, aistudio.google.com), you have easy access to the system prompt.

A Gemini subscription doesn’t give you access to a system prompt, but you should be using aistudio.google.com anyway, which is free.

If you use LLMs via API, pass your system prompt as the system message (or the dedicated system parameter) of every request you send.
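For the API case, here is a rough sketch of where the system prompt goes in the request body. It only builds the JSON-style payloads and makes no network calls. The shapes follow my understanding of OpenAI's Chat Completions format (a message with role `system`) and Anthropic's Messages format (a top-level `system` field); the function names, the example prompt, and the `MODEL_NAME` placeholder are assumptions for illustration, so check the current provider docs before relying on this.

```python
# Example prompt text; replace with your own.
SYSTEM_PROMPT = "Be concise. Skip flattery. Say so when you are unsure."


def openai_style_payload(system_prompt: str, user_message: str,
                         model: str = "MODEL_NAME") -> dict:
    """Chat Completions style: the system prompt is the first message."""
    return {
        "model": model,  # placeholder, not a real model id
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }


def anthropic_style_payload(system_prompt: str, user_message: str,
                            model: str = "MODEL_NAME",
                            max_tokens: int = 1024) -> dict:
    """Messages style: the system prompt is a top-level field."""
    return {
        "model": model,  # placeholder, not a real model id
        "max_tokens": max_tokens,
        "system": system_prompt,
        "messages": [{"role": "user", "content": user_message}],
    }
```

Either dict can be serialized with `json.dumps` and sent with whatever HTTP client or official SDK you already use. The point is only that the system prompt travels with every request, separate from the user's message.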

[1] This is an exaggeration. There are a few interesting projects I know of on Twitter, like Niplav's "semantic soup" and NearCyan's entire app, which afaict relies more on prompting wizardry than on scaffolding. Also presumably Nick Cammarata is doing something, though I haven't heard of it since this tweet.

But on LessWrong? I don't see people regularly post their system prompts using the handy Shortform function, as they should! Imagine if AI safety researchers were sharing their safetybot prompts daily, and the free energy we could reap from this.

(I'm planning on publishing a long post on my prompting findings soon, which will include my current system prompt).
