Google I/O 2025 was basically an AI-fest. There were a ton of updates to Gemini, AI Studio, and the Gemini API that make building with AI much easier for you.

Here’s the list of announcements you should be aware of.

Veo 3 can generate videos with audio

Veo 3 is the first video generation model with native audio generation, which means a single prompt can create a video with accompanying audio.

The lack of native audio has been a barrier for applications of AI video generation. Veo 2 was really only good for B-roll or social media posts due to its duration limit and lack of native audio. With Veo 3, I wouldn’t be surprised if we see high-quality feature films entirely generated with AI within the next year.

Flow: An AI-powered filmmaking tool

The big blocker now is consistency between generated videos, but it seems Google is solving that because they’ve released Flow, an AI-powered filmmaking product. It’s gimmicky in its current form but will definitely get better with time.

Google AI Ultra is $250/mo

You might have to pay quite a bit to use Flow’s full feature set, though, because Flow is part of the Google AI Ultra plan, priced at $250/mo. At that price the plan is clearly aimed at businesses rather than typical consumers, and many Gemini fans are upset at the high price tag.

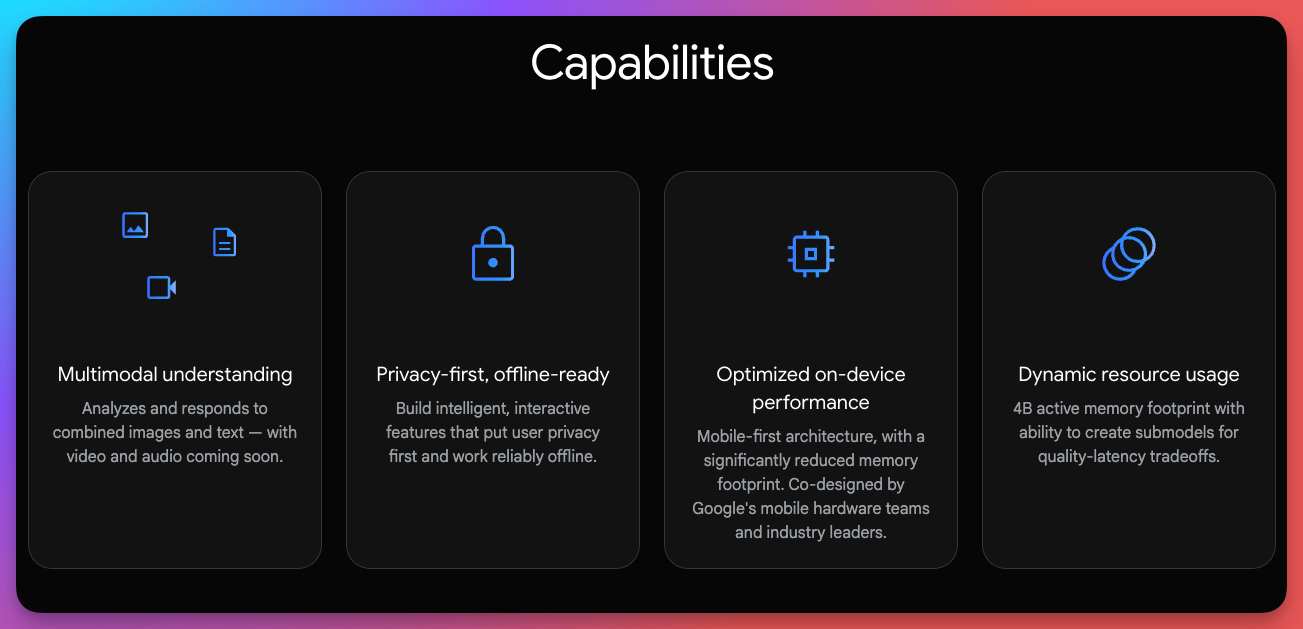

Gemma 3n is an open model that can run locally with just 2GB of RAM

Google I/O also saw the announcement of Gemma 3n, Google’s state-of-the-art mobile-first open model. It requires only 2GB of RAM to run on-device with only 4B active parameters, and its performance is similar to Gemini Nano. It’s already available for developers to start building via Google’s Gen AI SDK.

This is huge: on-device LLMs are the future once performance for smaller models can be improved. The vast majority of users don’t need anything more powerful than an on-device model, so they shouldn’t have to pay for anything better or send their data off-device to have access to an LLM.
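To get a feel for why a number like 2GB matters, here is a rough back-of-the-envelope sketch of how much memory model weights take at different precisions. The function name and the bit widths are my own assumptions for illustration. It only counts weights (no activations, no KV cache) and it is not a description of how Gemma 3n actually reaches its footprint.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes.

    Hypothetical helper for illustration: ignores activations, KV cache,
    and any architecture-specific memory savings.
    """
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    # 4e9 matches the "4B active parameters" figure quoted above.
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: {weight_memory_gb(4e9, bits):.1f} GB")
```

The takeaway is simply that precision and parameter count together decide whether a model fits on a phone, which is why small, aggressively optimized models are the ones that can run on-device.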

Gemini 2.5 Flash is more transparent

Gemini 2.5 Flash is now the default model in the Gemini app, and thought summaries have been added for greater transparency.

One of the chief complaints about the Gemini app is how much thinking happens without being shared with the user. Users have noticed (with all LLMs, not just Gemini) that thinking models tend to go in circles or reach conclusions through reasoning that doesn’t make sense. Thought summaries let users see when this happens.

Gemma gets two new variants

Gemma has two more variants: MedGemma for medical image and text comprehension and SignGemma (I couldn’t find a link for this, so if you have one let me know!) for translating sign language into spoken language.

Open models for both of these purposes are very important. This increases both transparency and accessibility.

Gemini Diffusion makes coding with AI 5x faster

Gemini Diffusion is a text diffusion model that is 5x as fast as Flash while matching coding performance. You have to sign up to get access. It seems this is the direction Google is going for coding models. It makes a ton of sense.

If you’ve used Cursor, you know how slow the agentic mode with Gemini 2.5 Pro can get with large projects or even a single large text file. Making this much faster will make AI-assisted coding a much better experience.

Google Colab is going agentic

Google Colab will soon have a fully agentic experience.

I’ve wondered when Google would get into the AI code editor competition, and Colab seems to be the logical entry point. I use an AI-assisted editor to code each day during my job at Google, but Google currently doesn’t offer it externally. I’m guessing this will be extended to a fully outward-facing AI editor in the future, especially given Google’s focus on creating better coding models (see section above).

Gemini Code Assist and Code Review now powered by Gemini 2.5 Pro

Gemini Code Assist is now powered by Gemini 2.5 Pro with a 2 million token context window. Both Code Assist and Gemini Code Assist for GitHub (a code review assistant) are now generally available to everyone.

As far as I know, both of these integrate with GitHub repos and are free.

Jules is an AI programmer that will code alongside you

Jules is a code assistant that tackles your backlog while you work on the code you actually want to write. You can try it now!

Jules integrates directly with GitHub, clones your code to a cloud VM, and gives you a PR to review and submit based on its changes. This reminds me of Devin, but it seems like a more fleshed-out experience. If you try it out, let me know how it goes!

Generative media models have been added to AI Studio

Generative media models have been added to Google’s AI Studio, making it easier to prototype with those models. Gemini 2.5 Pro has also been integrated into AI Studio’s code editor to enable faster prototyping.

Gemini is introducing a computer use API

A computer use API has been added to the Gemini API (frustratingly, I can’t find a link to this; let me know if you do and I’ll add it here). This tool enables developers to build apps that can browse the web. It’s currently only available to trusted testers but will roll out further later this year.

Gemini gets access to URL context

A URL context tool has been added to the API allowing Gemini to extract the content of a webpage at a URL. This is something I’ve desperately been waiting for because it seems like such a no-brainer for an LLM offering from the world’s greatest Search company.
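For a sense of what this saves you, here is a minimal sketch of the do-it-yourself alternative: stripping a page down to its visible text with Python’s standard library before pasting it into a prompt. This is not the Gemini API and not how the URL context tool works internally. `VisibleText` and `page_text` are hypothetical names I made up for illustration.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip_depth:
            self.chunks.append(text)


def page_text(html: str) -> str:
    """Return the visible text of an HTML document as one string."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)
```

You would still have to fetch the page yourself (for example with `urllib.request.urlopen`) and deal with redirects, encodings, and JavaScript-rendered content, which is exactly the kind of plumbing a built-in tool should take off your plate.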

Gemini supports MCP

The Gemini API and SDK will support MCP (Model Context Protocol; check out this article if you want to know what it is). Standards like MCP (when implemented properly) make development easier for engineers. This means Gemini will support and integrate with many more open source tools.
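If you haven’t looked at MCP yet, the wire format is plain JSON-RPC 2.0, which is a big part of why it’s easy to adopt. Here is a small sketch of what a tool call looks like at that level. The helper names (`make_tool_call`, `parse_tool_result`) and the `get_weather` tool are hypothetical. This only builds and parses messages; it is not the Gemini SDK’s MCP integration and it leaves out the transport and the initialization handshake.

```python
import json
from itertools import count

_ids = count(1)  # each JSON-RPC request needs its own id


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


def parse_tool_result(raw: str) -> list:
    """Return the text parts of a `tools/call` response; raise on a JSON-RPC error."""
    message = json.loads(raw)
    if "error" in message:
        raise RuntimeError(message["error"].get("message", "unknown error"))
    return [
        part["text"]
        for part in message["result"]["content"]
        if part.get("type") == "text"
    ]


if __name__ == "__main__":
    # "get_weather" is a made-up tool name for illustration.
    print(make_tool_call("get_weather", {"city": "Paris"}))
```

Because every MCP server speaks this same shape, a client that can send `tools/call` to one server can talk to any of them, which is the whole appeal of a shared standard.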

For all updates for developers, check out Google’s blog post here.

Thanks for reading!

Always be (machine) learning,

Logan