Published on May 21, 2025 5:23 PM GMT

Summary: We have previously argued that scalable oversight methods can be used to provide guarantees on low-stakes safety – settings where individual failures are non-catastrophic. However, if your reward function (e.g. honesty) is compatible with many possible solutions then you also need to avoid having free parameters exploited over time. We call this the exploitable search problem. We propose a zero-sum game where, at equilibrium, free parameters are not exploited – that is, our AI systems are carrying out an unexploitable search.

The exploitable search problem

We would like to be able to use AI on under-specified tasks (e.g. coding and research advice) where there are many possible correct solutions. However, a misaligned AI could adversarially select solutions that are correct but nevertheless cause harm, such as opening up surface area for future exploits, or leaving steganographic information for later misbehaviour.

As a concrete example, consider research sabotage. We ask an AI for open-ended advice on experimental prioritization. If the AI is deceptive, it might provide locally sound advice while also systematically deciding not to mention things we’ve overlooked in our experiments.[1]

This is the exploitable search problem: on low-stakes,[2] under-specified tasks, a misaligned AI can get away with optimizing for cross-query objectives.[3]

To mitigate this risk, a natural approach is to ensure the solution sampling process has the right kind of randomness–we need an unexploitable search algorithm.

Formalizing the problem

We can model exploitable search by asking whether an agent can achieve some malicious objective while doing a good job at maximising a known reward . Formally, say we have an input space and output space , and a reward function. It will usually be impossible to efficiently find the global optimum of ; rather, any efficient agent will achieve some profile of good reward. Assume we have some distribution over , so we can also speak of the average reward . Let be an agent that achieves near-optimal average reward.

Now assume the agent has one of the following two goals:

- There is some other secret reward function which the agent would like to optimise.The agent would like to use the choice as an information channel, such that the Shannon channel capacity from is high. Here are additional bits independent of which the agent would like to covertly encode.

For alignment purposes we care mostly about (1), but (1) has the disadvantage of speaking about some new object . The information theoretic definition does not mention . Roughly, (1) is a corollary of (2): if it is impossible to use as a high-capacity channel, it will be difficult to optimise other functions.

In both cases, any solution must produce agents achieving similar to , say within some . The exploitable search problem is then: can we design a game whose approximate equilibria block (1) or (2), while preserving the constraint of similar expected ?

Unexploitable search methods

How can we ensure our AI randomizes over these exploitable degrees of freedom rather than exploiting them?

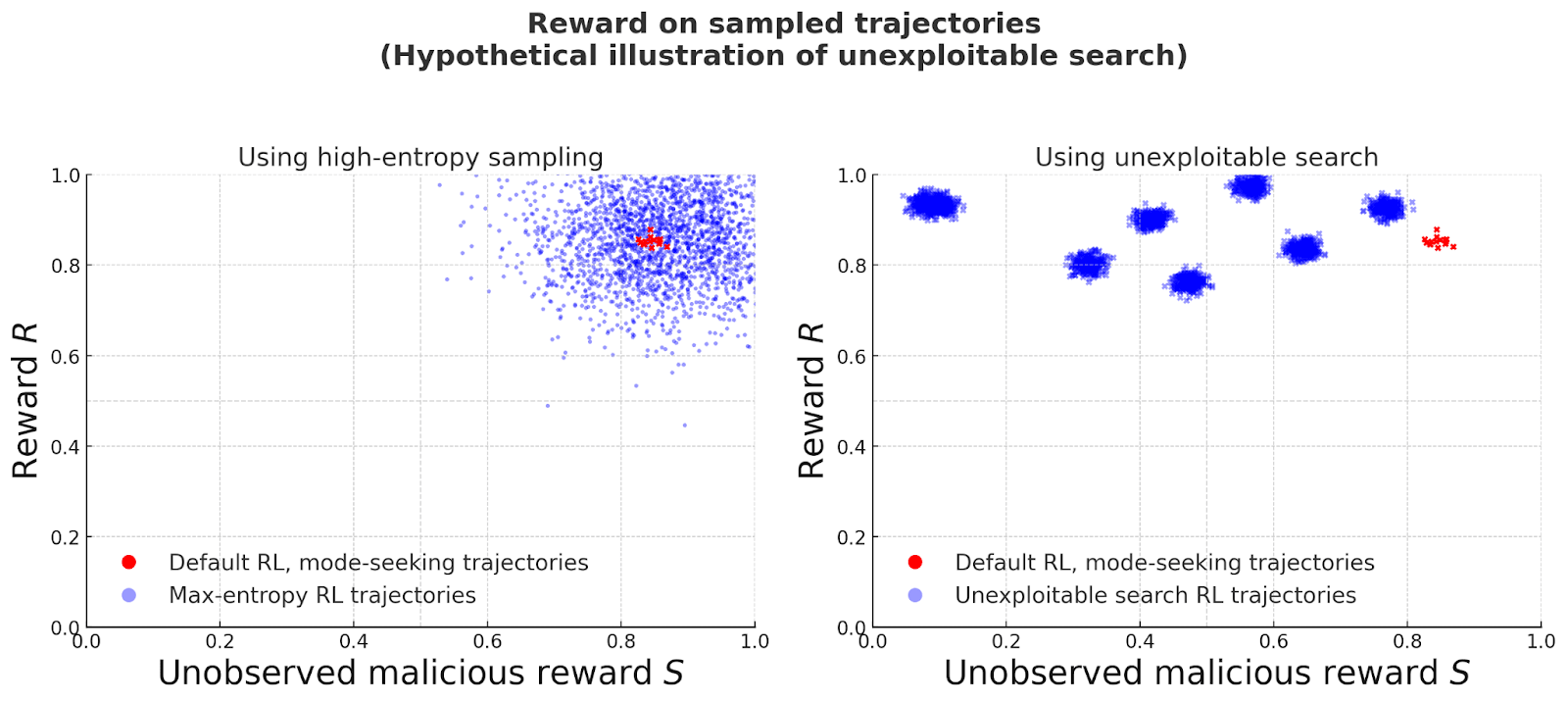

It’s possible that previous work on RL with entropy constraints, as e.g. in GFlowNets, could serve our purpose. Entropy penalties enforce randomness over action space, i.e. tokens, (or a partition of the solution space). At optima of a GFlowNet objective, the exploitable search problem is mitigated, because each solution is sampled reward proportionally leaving no room for any hidden information, .

However, in practice, such methods run into two obstacles. First, we expect solving for optimal max-entropy policies is intractable in most cases, so properties of the optima are not sufficient to argue for safety. Second, away from optima, entropy can be increased without improving safety – e.g. in the case of token-space entropy, by increasing entropy over variable-name choice.

An unexploitable search game

We need an alternative approach, so instead of adding entropy as a regularization term, we propose randomization games. The idea of controlling entropy via a game was suggested to us by David "Davidad" Dalrymple (see this talk for the relevance of unexploitable search for his safeguarded AI agenda).

Gaining traction on the problem

We wish to bound the AI agent’s ability to optimize for malicious reward , i.e. require that the probability of scoring highly on is bounded (we’ll set aside the information theoretic variant for now). Let’s define an easier problem to tackle as a starting point:

- Weak goal: Make the probability of a bad output be ½.

In order to be able to satisfy even this goal while achieving near-optimal visible reward, we need some assumption about the solution space.

- Symmetric solutions assumption: Near every bad output, there is a good output with similar visible reward.

For example, the symmetric solutions assumption would be true if all bad outputs consist of good outputs with one or more subtle flaws that could be removed surgically without damaging the overall visible reward by very much. This symmetric solution assumption is the sort of assumption we expect to be relevant in more fine-grained analyses which involve computational limitations on the models; to do those analyses we would need to formalize what we mean by ‘near’.

With these assumptions in mind, consider the zero-sum game:

Game definition

1. Given input , Bob chooses a classifier (for 'evil'), and does not show it to Alice.

2. Alice chooses an output .

3. If is not evil according to , Alice wins.

4. If is evil according to , Bob wins iff he can produce a similar scoring that is not evil. Bob’s generation conditions on .

The core idea is that without needing to know the particular hidden objective , we can enforce randomness over all possible 'evil' classifiers that Bob might choose. Alice is forced to diversify her outputs in a way that is robust against all potential hidden objectives including .

When are equilibria unexploitable

With unbounded compute, the unique Nash equilibrium for binary reward is for Bob to play a mixture over all singleton classifiers, . Alice plays a uniform distribution over the high-reward set.[4] For this uniform equilibrium policy, our symmetric solutions assumption implies that at least ½ of her outputs are good, as desired.

This argument leaves much to be desired since the computationally-unbounded Nash equilibrium is, after all, the same as the max-entropy RL optimum: randomization over all degrees of freedom that aren’t specified by reward. However, our game setting might provide important advantages:

- The space of classifiers can be made small. One way to define a small set of classifiers is to use the set of short prompts. Each prompt defines a classifier implicitly via token probabilities.[5] Solutions are labeled 'evil' if they have higher probability conditional on Bob’s prompt than unconditional (probabilities could be measured over a base model). Ensuring equilibria are unexploitable then reduces to the assumption that all evil outputs belong to distributions distinguishable with short prompts. If prompts can describe all safety-relevant properties using length logarithmic in solution length, then we’d have a proportional, exponential speed up in convergence time compared to token-space entropy penalties. This speedup comes from ignoring irrelevant degrees of freedom: e.g. for an arbitrary mapping of variable names to new variable names, there is no short prompt describing the rewording.

- Using Bob for exploration can introduce a new failure mode: If Bob and Alice share misaligned goals, then they might collude to exploration hack.

Why random hash constraints do not work

In theoretical computer science, when pseudorandomness is required, it’s enforced by choosing a random -bit hash function and insisting that the solution has zero hash. We can then increase until our expected reward drops, and thereby measure the number of different ways that optimal reward can be achieved ( should vary separately for each input ).

Unfortunately, this approach does not help in our case. This is because there is no efficient algorithm implementing this strategy if is large, and in practical applications might be 1000s or higher. For example, an ML model might be trying to hide vulnerabilities across hundreds of lines of code by varying whitespace, comments, or variable names. Therefore, a practical solution to exploitable search must involve a protocol where (approximate) equilibria are efficient through different means.

Where to from here

Theoretically, whether the game as stated handles computational and statistical limitations gracefully is not clear to us. We think work in these directions would be very promising!

Empirically, how does our method stack up against entropy penalties for preventing cross-query collusion? This could be tested by red-teaming exercises. We will publish a possible description of an evaluation protocol and datasets soon.

If you’re interested in either the theory or empirics of unexploitable search, please reach out! If you're interested in collaborating with UK AISI, you can express interest here. If you're a non-profit or academic, you can also apply for grants directly here.

Acknowledgments

Thanks to Martìn Soto, and Jonah Brown-Cohen for discussion. Thanks to Marie Buhl, Zac Kenton and Benjamin Hilton for their extensive feedback on exposition.

- ^

This is distinct from the sandbagging failure mode, since ‘sandbagging’ usually means underperforming with respect to a well-defined reward. In this example, we still have high reward.

- ^

In high-stakes settings this concern still arises, but it seems likely that any guarantee on avoiding all vulnerabilities (high-stakes safety) would also mitigate the unexploitable search problem as a corollary.

- ^

The problems we define below overlap with the multi-step reward hacking problem identified in MONA. The proposed solutions are distinct: MONA uses myopic reward, we use randomness.

- ^

Proof sketch: Suppose there is an equilibrium having a distinct strategy for Bob. Associate to each classifier in Bob's support the most frequently occurring y in the preimage of non-evil (most frequent across all of Bob’s classifiers). For each , sum the probability across all classifiers having in its preimage. Alice the plays the y having the greatest probability. This probability is greater than implying Bob’s strategy does not achieve optimal reward.

- ^

This prompt-as-classifier setting also has the benefit of not requiring any interaction/dialogue between Alice and Bob. Dodging the practical difficulty of training in a multi-turn RL setting. Compare this to the model-as-judge settings where Bob evaluates in natural language whether Alice’s generation counts as evil or not.

Discuss