Generative AI will take a larger share of the world’s power consumption as the hefty hardware needed to run AI applications keeps growing. “AI chips represent 1.5% of electricity use over the next five years, a substantial part of the world’s energy,” said semiconductor research firm TechInsights in a research note last month.

TechInsights used a baseline projection of global electricity consumption totaling 153,000 TWh between 2025 and 2029, sourced from the US Energy Information Administration.

The research firm estimated that AI accelerators will consume 2,318 TWh over the same period, or a 1.5% share of global electricity consumption.

The estimate assumes each GPU draws 700W, the power consumption of Nvidia’s flagship Hopper GPU. Nvidia’s upcoming Blackwell GPUs are faster but draw 1,200W.
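The headline share is simple arithmetic on TechInsights’ two totals. The Python sketch below reproduces it and, as an added illustration that is not taken from the research note, shows how much energy one accelerator would draw in a year at the two quoted wattages. It assumes 100% utilization over a full 8,760-hour year, and the function names are hypothetical.

```python
# Back-of-envelope check of the TechInsights figures.
# The 153,000 TWh baseline, the 2,318 TWh AI estimate, and the per-GPU wattages
# come from the article; full utilization over an 8,760-hour year is an assumption.

HOURS_PER_YEAR = 8_760  # 365 days x 24 hours; ignores leap years


def share_of_total(part_twh: float, total_twh: float) -> float:
    """Return part_twh as a percentage of total_twh."""
    return 100.0 * part_twh / total_twh


def annual_energy_mwh(watts: float, utilization: float = 1.0) -> float:
    """Energy one accelerator draws in a year, in MWh, at the given utilization."""
    return watts * utilization * HOURS_PER_YEAR / 1_000_000  # Wh -> MWh


baseline_twh = 153_000  # global consumption, 2025-2029
ai_twh = 2_318          # AI accelerators, same period

share = share_of_total(ai_twh, baseline_twh)
hopper_mwh = annual_energy_mwh(700)
blackwell_mwh = annual_energy_mwh(1_200)

print(f"AI share: {share:.2f}%")
print(f"Hopper at 700 W: {hopper_mwh:.2f} MWh/year")
print(f"Blackwell at 1,200 W: {blackwell_mwh:.2f} MWh/year")
print(f"Blackwell/Hopper power ratio: {1_200 / 700:.2f}x")
```

This prints a share of about 1.52%, roughly 6.13 MWh per year for a 700W Hopper GPU and 10.51 MWh for a 1,200W Blackwell GPU, a 1.71x increase per chip. Lower real-world utilization would scale the per-chip figures down proportionally.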

TechInsights’ estimate covers only the power consumed by the chips themselves; it excludes storage, memory, networking, and other components used for generative AI.

Defending the high GPU utilization assumed in the estimate, TechInsights analyst Owen Rogers said in the research note: “Such high utilization is feasible considering the huge demand for GPU capacity and the need to sweat these expensive assets to provide a return.”

Green or Not?

A McKinsey AI survey found that 65% of respondents intended to adopt generative AI.

To meet the demand, cloud providers and hyperscalers are investing billions of dollars to grow GPU capacity. Microsoft relies on Nvidia GPUs to run its AI infrastructure, and Meta has said it will have compute equivalent to “nearly 600,000 H100” GPUs in its arsenal.

Nvidia shipped roughly 3.76 million GPUs in 2023, up from about 2.6 million GPUs in 2022, according to TechInsights.

Last year, Gartner provided a more aggressive forecast, saying AI “could consume up to 3.5% of the world’s electricity.” Gartner’s methodology isn’t clear, but its figure could include networking, storage, and memory.

In the AI race, companies compete to offer the fastest infrastructure and the best results. The rush to implement AI in business has disrupted long-established corporate sustainability plans.

Microsoft, Google, and Amazon are spending billions to build mega-data centers with GPUs and AI chips to train and serve larger models, which has increased the power burden.

The Cost Challenges

Servers can be acquired for $20,000, but companies need to account for the growing cost of electricity and challenges facing energy grids, Rogers said in the research note.

Data centers also need to be designed to handle the power requirements of AI. Whether that demand can be met may depend on grid capacity and the availability of backup power.

The onus is also on energy providers to prepare for the AI era by building out electricity infrastructure, including power stations, solar farms, and transmission lines.

“If demand can’t be met, energy suppliers will take market-based approaches to manage capacity – namely increasing prices to reduce consumption – rather than denying capacity. Again, this could have cost implications for users of AI technology,” Rogers said.

The US government has set a goal of 100% carbon pollution-free electricity by 2035, which could ease the burden on the grid. That would also open the door for more AI data centers.

Productive Use of Energy

AI’s power consumption echoes the early days of cryptocurrency mining, which also burdened power grids. Crypto mining accounts for as much as 2.3% of US electricity consumption, according to a February report issued by the US Energy Information Administration.

However, energy industry observers agree that AI is a more productive use of energy than bitcoin mining.

Nvidia is also focused on productive energy use. To reduce power consumption, the company builds its own chip technologies into its GPUs, and it is shifting its Hopper GPUs from air cooling to liquid cooling.

“The opportunity… here is to help them get the maximum performance through a fixed megawatt datacenter and at the best possible cost,” said Ian Buck, vice president and general manager of Nvidia’s hyperscale and HPC computing business, at an investor event last month.

HPC Providers, AI, and Sustainability

Panelists at the recent ISC 24 supercomputing conference poked fun at Nvidia’s claims that its 1,000-watt GPUs are “sustainable.”

Still, government labs said GPUs and direct liquid cooling have delivered better performance scaling than CPUs did in the past.

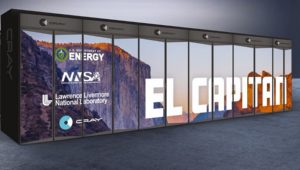

Lawrence Livermore National Laboratory, which is building the upcoming 2-exaflop supercomputer El Capitan, added 18,000 tons of cooling capacity, bringing the total to 28,000 tons, and raised its power supply to 85 megawatts for current and future systems.

“El Capitan … will be under 40 megawatts, about 30 megawatts, but that is a lot of electricity,” said Bronis de Supinski, chief technology officer at LLNL, in a breakout session.
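Cooling tonnage and megawatts are easier to compare in the same units. This Python sketch uses the standard conversion of one ton of refrigeration to 12,000 BTU/h (about 3.517 kW of heat removal) and assumes, for illustration only, that El Capitan draws a constant 30 MW all year.

```python
# Unit conversions for the LLNL and El Capitan figures.
# 1 ton of refrigeration = 12,000 BTU/h, about 3.517 kW of heat removal.
# Treating the ~30 MW draw as constant all year is an assumption.

KW_PER_TON = 3.517      # kW of heat removal per ton of refrigeration
HOURS_PER_YEAR = 8_760  # 365 days x 24 hours


def cooling_capacity_mw(tons: float) -> float:
    """Heat-removal capacity in MW for a given tonnage of cooling."""
    return tons * KW_PER_TON / 1_000


def annual_energy_gwh(megawatts: float) -> float:
    """Energy used in a year by a constant load, in GWh."""
    return megawatts * HOURS_PER_YEAR / 1_000


total_cooling_mw = cooling_capacity_mw(28_000)  # total after the upgrade
added_cooling_mw = cooling_capacity_mw(18_000)  # the 18,000-ton addition
el_capitan_gwh = annual_energy_gwh(30)          # "about 30 megawatts"

print(f"Total cooling: ~{total_cooling_mw:.1f} MW of heat removal")
print(f"Added cooling: ~{added_cooling_mw:.1f} MW")
print(f"El Capitan at 30 MW: ~{el_capitan_gwh:.1f} GWh/year")
print(f"Share of 85 MW supply: {30 / 85:.0%}")
```

By this arithmetic, the 28,000-ton plant can remove roughly 98.5 MW of heat, more than the 85 MW power supply, and a 30 MW machine running flat out for a year uses about 262.8 GWh, or about 35% of the lab’s power envelope at any moment.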

He admitted that El Capitan may not be considered environmentally friendly, but said the focus should also be on the results achieved within that performance-power envelope. For example, the energy a supercomputer uses may be well worth it if it helps solve climate problems.

“A 30-megawatt supercomputer? I’m not going to tell you that that’s a sustainable resource, but it could do a lot to address the societal problems that we want to get addressed,” de Supinski said.

Labs are also moving to renewable energy and liquid cooling. For example, liquid cooling is “saving about 50% of the energy for cooling,” said Dieter Kranzlmüller, chairman of the Leibniz Supercomputing Centre (LRZ), at the ISC 24 session.
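A 50% cut in cooling energy does not halve a facility’s total consumption, because the IT equipment still draws most of the power. This Python sketch shows how the saving flows through to power usage effectiveness (PUE) and total facility power. The 30 MW IT load and the cooling overhead of 30% of IT load are hypothetical inputs, not LRZ figures, and other overheads such as power conversion are ignored.

```python
# Illustrative effect of halving cooling energy on total facility power.
# The 30 MW IT load and the 0.30 cooling-to-IT ratio are hypothetical inputs;
# non-cooling overheads (power conversion, lighting) are ignored.


def facility_power_mw(it_mw: float, cooling_ratio: float) -> float:
    """Total facility power when cooling draws cooling_ratio x the IT load."""
    return it_mw * (1 + cooling_ratio)


def pue(it_mw: float, cooling_ratio: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    return facility_power_mw(it_mw, cooling_ratio) / it_mw


it_load_mw = 30.0
air_ratio = 0.30              # hypothetical air-cooled overhead
liquid_ratio = air_ratio / 2  # "saving about 50% of the energy for cooling"

before = facility_power_mw(it_load_mw, air_ratio)
after = facility_power_mw(it_load_mw, liquid_ratio)

print(f"PUE: {pue(it_load_mw, air_ratio):.2f} -> {pue(it_load_mw, liquid_ratio):.2f}")
print(f"Facility power: {before:.1f} MW -> {after:.1f} MW")
print(f"Total saving: {100 * (before - after) / before:.1f}%")
```

Under these assumptions PUE drops from 1.30 to 1.15 and facility power falls from 39.0 MW to 34.5 MW, a total saving of about 11.5%. The larger the original cooling overhead, the larger the share of total energy that liquid cooling recovers.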

Carbon offsetting, capturing and reusing wasted heat, and reusing materials are also being considered in sustainable computing environments.

HPC Past Driving Future

Techniques developed to make supercomputers more energy efficient are now being applied to get more out of every watt consumed in AI processing.

At last month’s HPE Discover conference, CEO Antonio Neri said the company was porting energy efficiency technologies used in Frontier and El Capitan to AI systems with Nvidia GPUs.

“HPE has one of the largest water-cooling manufacturing capabilities on the planet. Why? Because we have to do it for supercomputers,” Neri said.

Nvidia CEO Jensen Huang, who was also on stage, said “the future of liquid cooling is going to result in everything from better performance, lower infrastructure costs and lower operating cost.”

Offloading AI

Consumer device makers are touting PCs and mobile devices with neural chips for on-device AI. The neural chips can run AI models locally, reducing the strain on GPUs in the cloud.

Apple laid out its on-device and cloud AI strategy: if an iPhone or Mac determines that an AI task cannot be done on-device, it reroutes the query to a cloud server in Apple’s data center.

Apple users can also select whether to run AI on-device or via the cloud.
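The hybrid model Apple describes amounts to a per-request routing decision. A minimal Python sketch of that idea follows, assuming a single hypothetical criterion (whether the model fits in the memory available to the device’s neural chip) plus an optional user preference. The `Device`, `Task`, and `route` names are illustrative and do not reflect Apple’s actual decision logic.

```python
# Toy model of hybrid on-device/cloud routing.
# The memory-fit criterion, field names, and preference handling are
# hypothetical simplifications, not Apple's implementation.
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Target(Enum):
    DEVICE = "on-device"
    CLOUD = "cloud"


@dataclass
class Device:
    npu_memory_gb: float  # memory available to the neural chip


@dataclass
class Task:
    name: str
    model_memory_gb: float  # memory the model needs to run


def route(task: Task, device: Device, preference: Optional[Target] = None) -> Target:
    """Pick where to run a task.

    With no preference, run locally when the model fits in device memory,
    otherwise reroute to the cloud. An explicit preference wins, except that
    a task that does not fit cannot be forced onto the device.
    """
    fits = task.model_memory_gb <= device.npu_memory_gb
    if preference is Target.DEVICE and not fits:
        raise ValueError(f"{task.name} does not fit on this device")
    if preference is not None:
        return preference
    return Target.DEVICE if fits else Target.CLOUD


phone = Device(npu_memory_gb=4.0)
print(route(Task("summarize", 3.0), phone).value)                # on-device
print(route(Task("large-rewrite", 12.0), phone).value)           # cloud
print(route(Task("summarize", 3.0), phone, Target.CLOUD).value)  # cloud
```

Raising an error instead of silently falling back to the cloud keeps an explicit on-device choice from being overridden without the user knowing.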

Microsoft is encouraging the use of AI chips in Windows devices. Qualcomm’s AI Hub allows users to run benchmarks to see how AI models will perform on-device, which lets them decide whether to run inference on-device or in the cloud.

However, AI PCs still lack a killer application that offers a tangible example of PCs offloading AI work from GPUs in the cloud.