Published on May 6, 2025 11:05 PM GMT

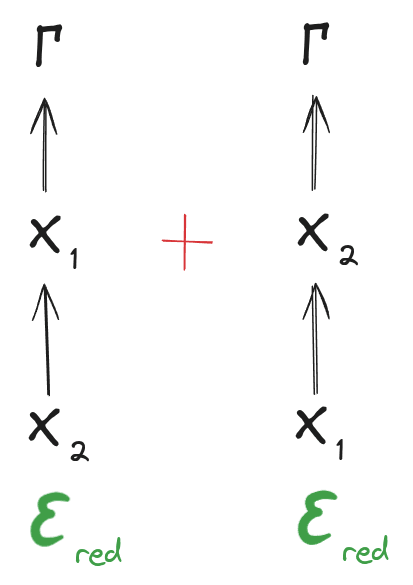

A lot of our work involves "redunds".[1] A random variable is a(n exact) redund over two random variables exactly when both

Conceptually, these two diagrams say that gives exactly the same information about as all of , and gives exactly the same information about as all of ; whatever information contains about is redundantly represented in and . Unpacking the diagrammatic notation and simplifying a little, the diagrams say for all such that .

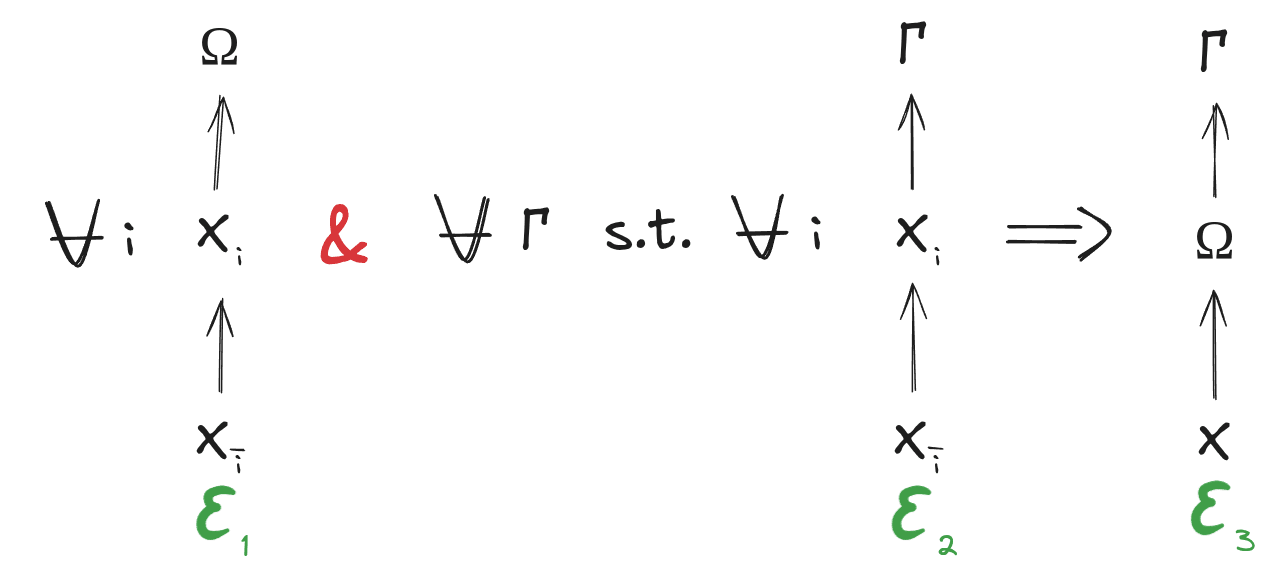

The exact redundancy conditions are too restrictive to be of much practical relevance, but we are more interested in approximate redunds. Approximate redunds are defined by approximate versions of the same two diagrams:

Unpacking the diagrammatic notation, these two diagrams say

This bounty problem is about the existence of a(n approximate) maximal redund : a redund which contains (approximately) all the information about contained in any other (approximate) redund. Diagrammatically, a maximal redund satisfies:

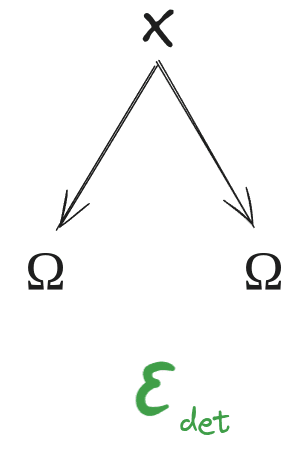

Finally, we'd like our maximal redund to be an approximately deterministic function of , i.e. , which is equivalent to the diagram

What We Want For The Bounty

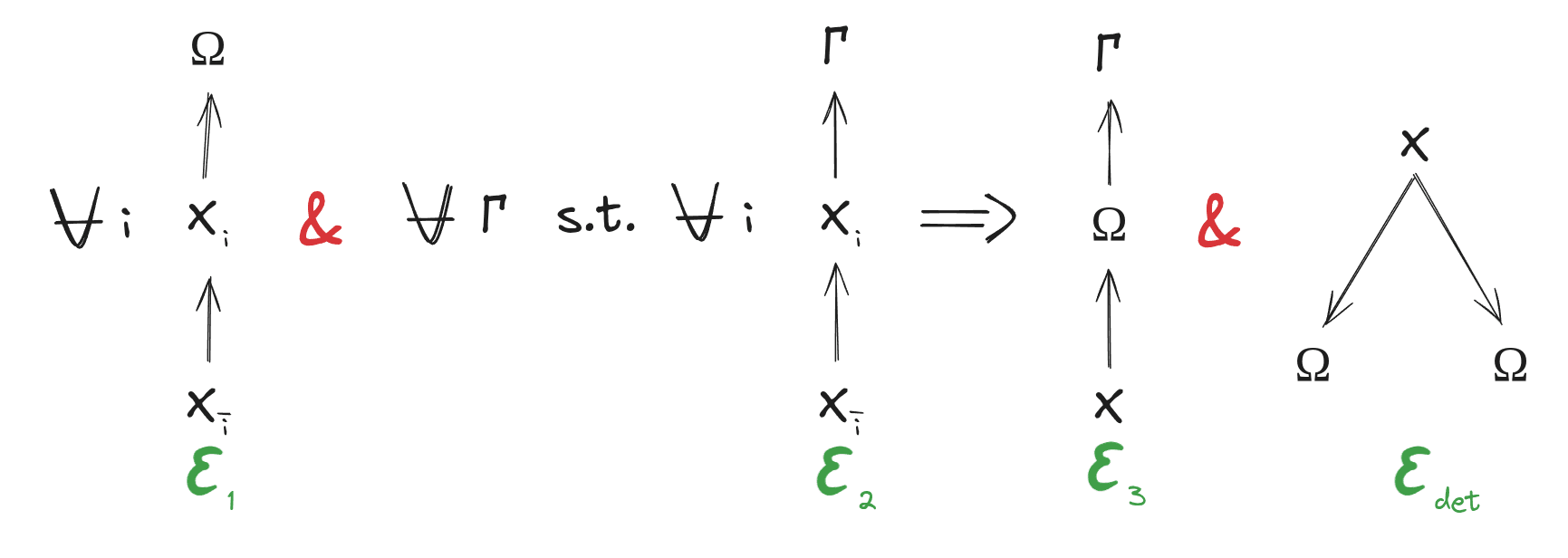

This bounty pays out $500 for establishing that there always, over any two random variables , , exists an satisfying the requirements above, i.e.

... with reasonable error bounds.

What counts as reasonable error bounds? We're expecting all the other 's to scale with in the diagrams above, and ideally be small constant multiples of . In other words: the higher the approximation error on allowable redunds , the more approximation error we allow for all the conditions on . Reasonableness of error bound is partially a judgement call on our part, but small constant (like e.g. 2 or 3) multiples of would definitely suffice.

Bounds should be global, i.e. apply even when is large. We're not interested in e.g. first or second order approximations for small unless they provably apply globally.

If the error bounds are sufficiently reasonable, a solution to this problem will also solve our other open bounty problem, paying out another $500, for a total of $1000.

If people post major intermediate steps which are load-bearing for an eventual solution, we might allocate the bounty based on our judgement of different peoples' relative contributions; the hope is to incentivize sharing intermediate results.

We might also partially pay out for counterexamples. That's a judgement call depending on how thoroughly the counterexample kills hope of any theorem vaguely along the lines intended.

In terms of rigor and allowable assumptions, roughly the level of rigor and assumptions in the posts linked above is fine.

Some Intuition From The Exact Case

A proof in the exact case is straightforward; we'll walk through it here to build some intuition.

The exact redundancy conditions say

wherever . So, we can form a bipartite graph, where each vertex represents a value of or a value of , and two vertices are connected iff . Then the definition of redundancy says that, for ANY redund , and are the same for ANY values of and (respectively) in the same connected component.

So, let be defined as the connected component in which falls. For any redund , must be a function of (since two -values in the same connected component have the same ). Thus

is therefore a(n exactly) deterministic function of , and (exactly) mediates between X and any redund . Thus, satisfies our requirements, in the exact case (i.e. when ).

Alas, this proof does not easily generalize to the approximate case.

Why We Want This

This is a conjecture which we've had on the back burner for a while, and it would be relevant in many places. It would solve our other open bounty problem, it would likely be our starting point for a new version of the Telephone Theorem which more explicitly connects to the natural latents framework, and it would directly simplify the problem of computing natural latents numerically. Probably there are other use-cases which we're not thinking about right at the moment.

- ^

A lot of our work involves "redunds".

Discuss