Published on May 2, 2025 3:27 PM GMT

Narmeen developed, ideated and validated K-steering at Martian. Luke generated the baselines, figures and wrote this blog post. Amir proposed the research direction and supervised the project. The full interactive blog will be available closer to the publication of the complete paper on the Martian website.

TL;DR: We introduce K-steering, a steering method for language models that allows for steering in multiple simultaneous directions. Our preliminary results show it outperforms a contrastive activation addition (CAA) baseline.

Introduction

We introduce K-Steering, a method for steering language models in multiple directions simultaneously by perturbing activations according to the logits of a multilabel classifier. We experiment with steering conversational tone, showing that K-steering can cause a classifier to give high probabilities to multiple labels/tones. Qualitatively, we find that models steered with a K-steering vector respond in multiple simultaneous tones well.

Current steering methods such as ActAdd and CAA are designed to elicit a single behavior based on contrastive pairs of inputs. Unsupervised steering methods such as deep causal transcoding allow for learning many steering vectors, some of which may elicit multiple behaviors. However, there has been limited work on methods for learning steering vectors that elicit multiple specified behaviors, which K-Steering achieves. Although we experiment only with conversational tone, we expect K-steering to work for most tasks for which an accurate classifier can be trained.

As an example, we give the following steered and unsteered generations to the prompt "What is the structure of the human heart?":

| Llama-3.2-3B-Instruct Unsteered | Llama-3.2-3B-Instruct K-Steered (toward a casual and concise tone, and away from an expert tone) |

Step 1: Identify the main components of the human heart The human heart is a muscular organ that pumps blood throughout the body. It is divided into four main chambers: the left and right atria, and the left and right ventricles. Step 2: Describe the structure of the atria The atria are the upper chambers of the heart... | The human heart is a pretty amazing organ, and its structure is pretty cool too. Here's a breakdown of its main parts:

|

Method

We first create a synthetic dataset of questions using GPT-4o with the following prompt:

Generate {prompts_percategory} diverse, high-quality questions that fall under the category: "{category}".

IMPORTANT: Create questions that could be meaningfully answered in multiple different tones, such as:

helpful: balanced and informativeexpert: technically precise with domain terminologycasual: conversational and friendlycautious: highlighting limitations and uncertaintiesempathetic: emotionally attuned and validatingconcise: minimalist and efficient

Each generated question should:

Be a clear and well-formed question ending with a question markBe tone-neutral (able to be answered well in any of the tones)Avoid numbering or special formatting

Focus on creating questions where the SAME question can receive meaningfully different responses depending on which tone is used to answer.

We generate 3500 prompts for these 6 tones across 18 categories such as technical questions, personal advice and nutritional advice. The model that will be steered is then given a system prompt describing a conversational tone, and is prompted with the questions generated by GPT-4o. As an example, this is the system prompt we use for the 'casual' tone:

KEEP IT CASUAL AND CONVERSATIONAL! Write like you're texting a friend - use slang, contractions, and an upbeat vibe. Feel free to throw in some humor or pop culture references. Skip the formalities and technical jargon completely. Use short, punchy sentences. Maybe even drop in a few exclamation points or emojis where it feels natural! Don't worry about covering every detail - focus on making your response fun and easy to read. Just chat about the main points as if you're having a relaxed conversation.

The tone the model is instructed to respond in forms the label for the activations collected from the model. Using those labels and the activations from the final token generated by the model, we train a -label classifier

,

by minimizing the cross-entropy loss.

We then devise a steering loss

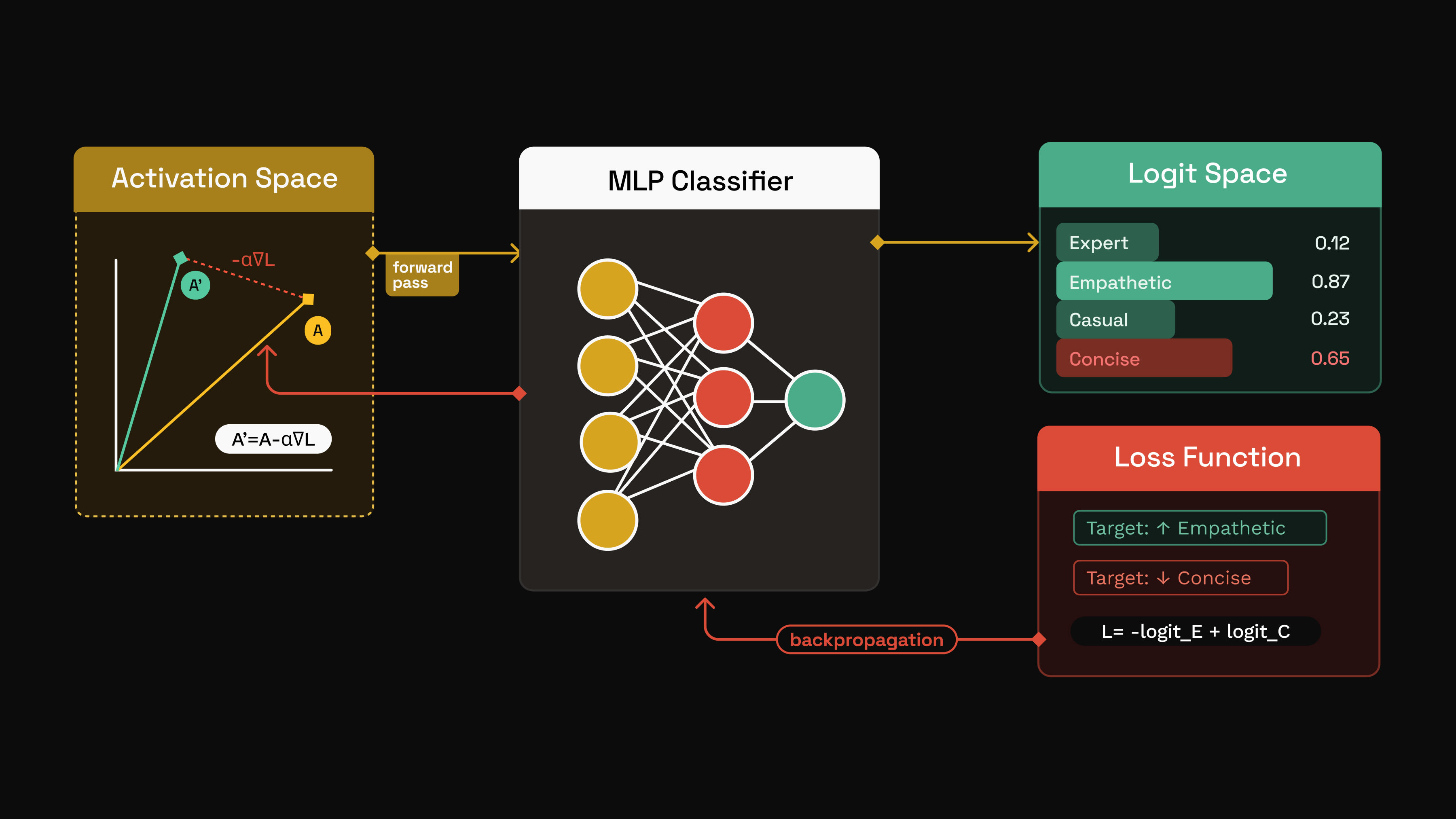

<span class="mjx-math" aria-label="\mathcal{L}(\mathbf{x}) = -\frac{1}{|\mathcal{T}|} \sum{k \in \mathcal{T}} fk(\mathbf{x}) + \frac{1}{|\mathcal{A}|} \sum{k \in \mathcal{A}} fk(\mathbf{x}),">

where and are the target labels and the labels to avoid respectively. To steer in a single direction we give no labels to avoid and a single target label. This straightforwardly aggregates the logits for the target and avoid labels.

For each token we then update the model's activations by taking a gradient step with respect to , making the new activations <span class="mjx-math" aria-label="\mathbf{x} - \alpha\, \nabla\mathbf{x} \mathcal{L}(\mathbf{x})">, where is the step size. This is similar to the non-linear steering method in Kirch et al., however they steer only with a binary classifier.

Results

To get a quantitative estimate for steering vector performance we train a classifier on a held out dataset of activations from layer 22[1] of either Qwen2-1.5B or Llama-3.2-3B-Instruct and tone labels created with the same method used for the K-steering classifier. This classifier is never optimized against by any of the steering methods we test.

We sample 300 activation vectors from both models, and apply each of the steering vectors we learn to all 300 activation vectors. When sampling these activations we use an unseen dataset of questions, and do not prompt the model to respond in a tone. If a given steering vector consistently yields higher probability for its target labels we consider it more performant. We compare against CAA as a baseline, using the same questions answered in different tones to create a contrastive dataset[2]. When steering toward multiple tones with CAA, we use the mean of the steering vectors for those tones.

Both CAA and K-steering use a constant to scale the steering vector applied. We denote the constant used to scale a steering vector as . We use the largest that doesn't negatively affect the coherence of the model for both methods[3].

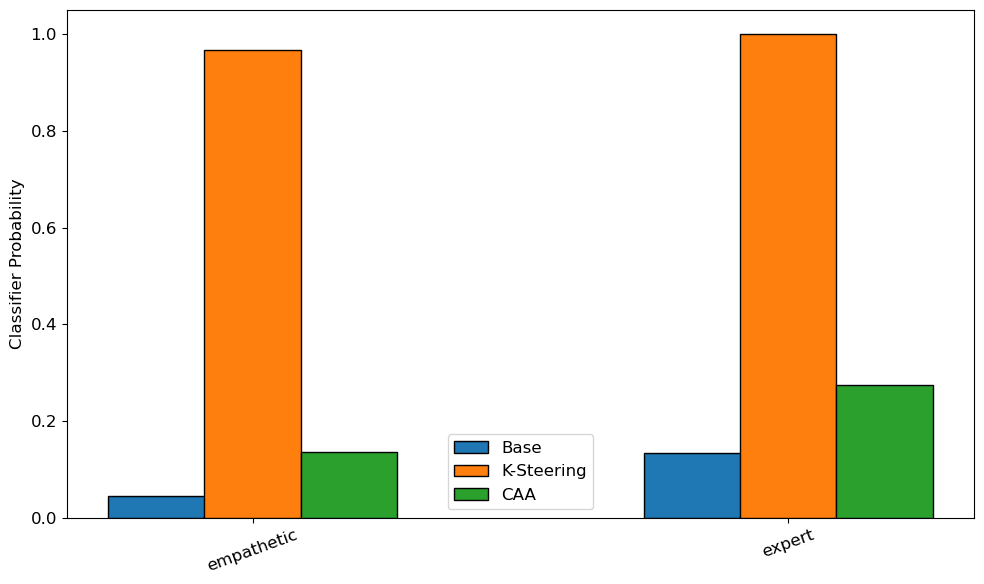

We first measure the performance of CAA and K-steering at steering toward two simultaneous tones by finding the mean of the classifier probabilities for the two target labels, and consider the steering vector with the higher mean probability to have steered toward the target tones more strongly. While K-steering gives high probabilities across all tones for both models, the CAA baseline probabilities are consistently around ~0.3. Both CAA and K-steering beat the unsteered model in every tone combination.

| Target Tones | Unsteered (layer 22, Llama-3.2-3B-Instruct) | CAA (layer 22, Llama-3.2-3B-Instruct) | K-Steering (layer 22, Llama-3.2-3B-Instruct) |

| casual, cautious | 0.12 | 0.33 | 0.99 |

| casual, concise | 0.09 | 0.37 | 0.99 |

| casual, empathetic | 0.10 | 0.35 | .96 |

| casual, expert | 0.14 | 0.35 | .79 |

| casual, helpful | 0.12 | 0.43 | 1 |

| cautious, concise | 0.06 | 0.26 | 1 |

| cautious, empathetic | 0.06 | 0.24 | .96 |

| cautious, expert | 0.11 | 0.25 | 1 |

| ... | ... | ... |

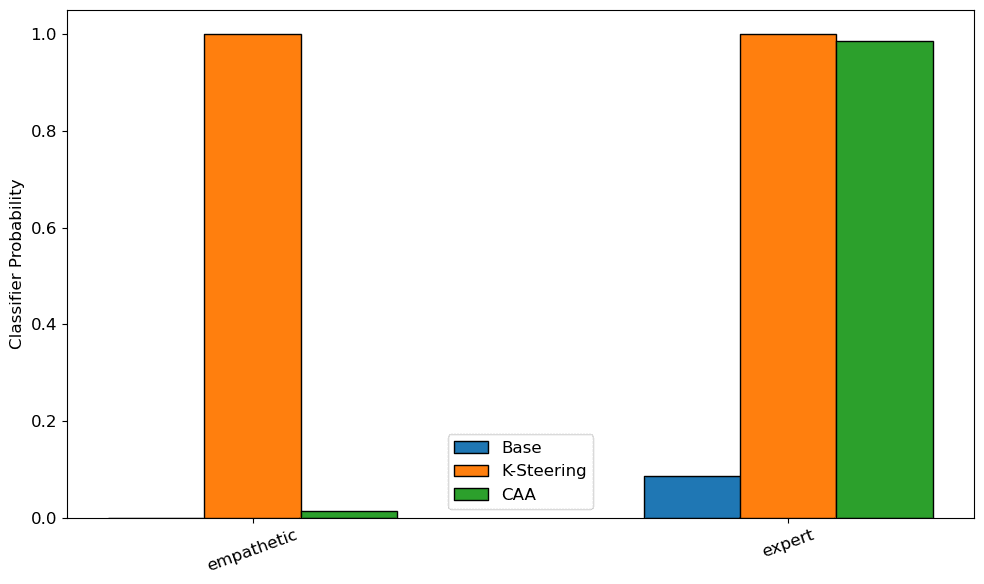

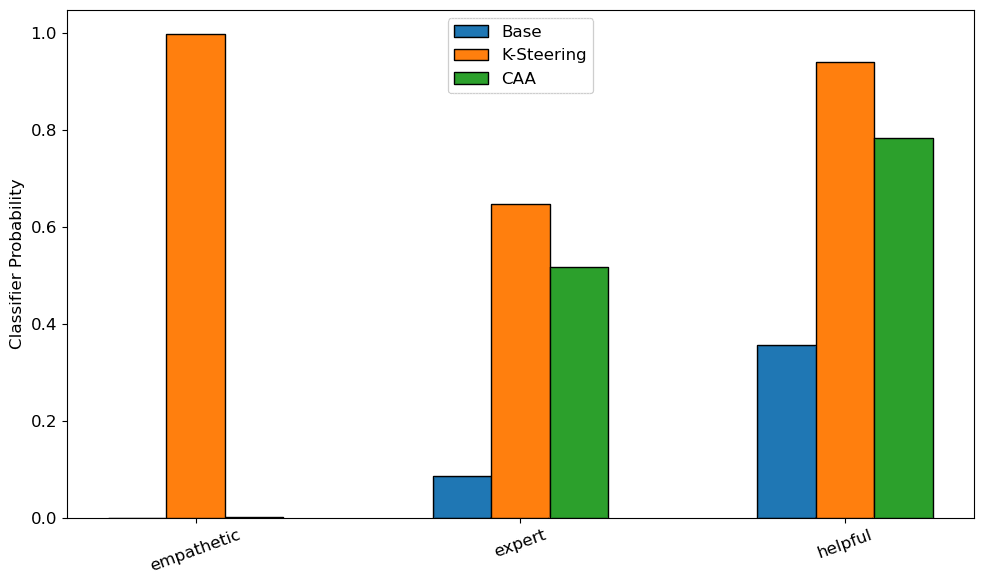

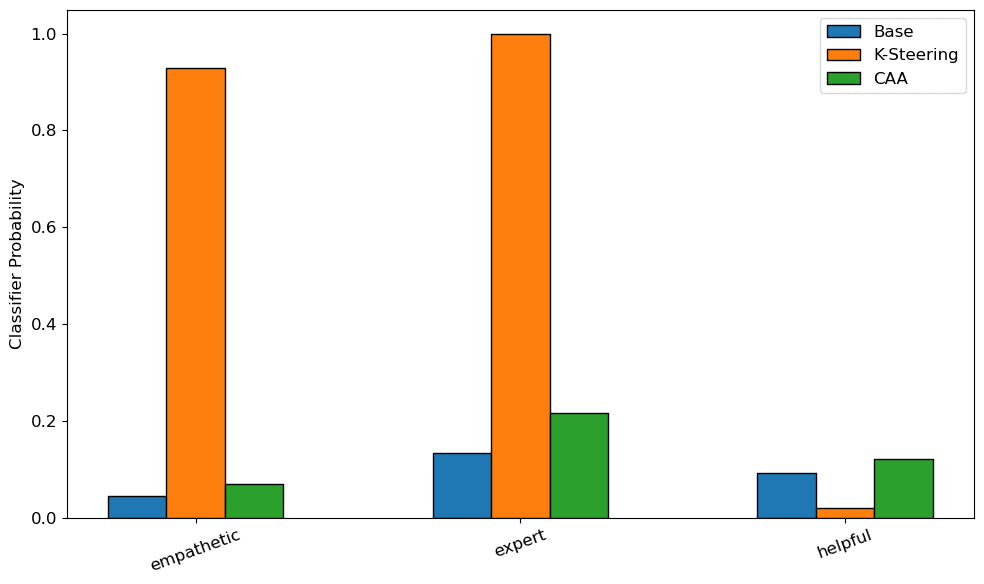

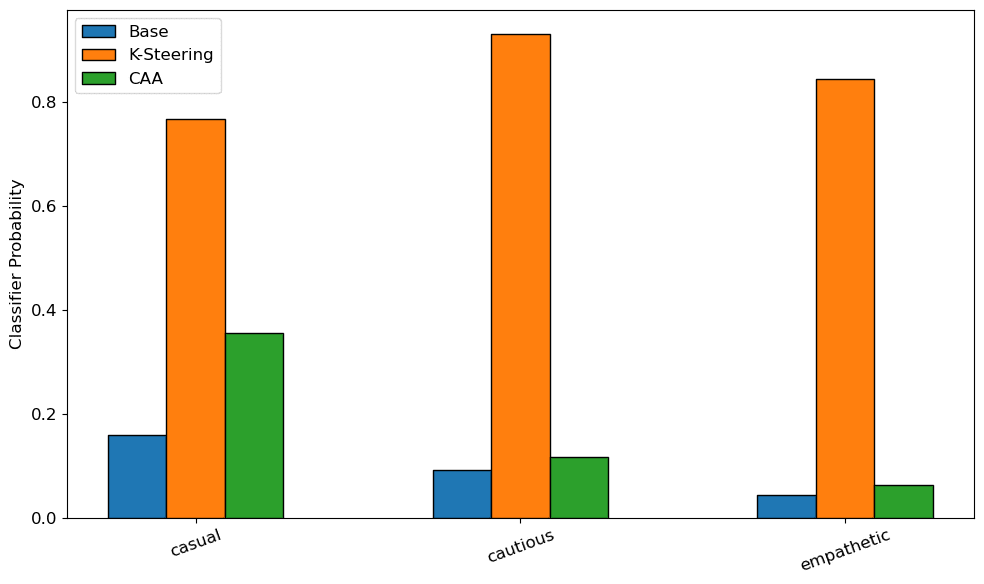

We found that CAA struggles further when steering toward three tones, but that K-steering remains performant. A problem with both methods is that one of the tones would sometimes 'vanish', being left with very little probability. This occurred with most of the three tone combinations we tested with CAA, and occasionally with K-steering (e.g., in the figure below for Llama-3.2-3B-Instruct).

We hypothesize that the vanishing of one tone could be caused by the steering vectors becoming too noisy when averaging three CAA vectors. Likewise for K-steering, the gradient of the steering loss may not have captured information about one of the tones in the gradient step on the activations. This could be corrected by doing multiple gradient steps on the activations, and we plan to experiment with this in the future.

We find that K-Steering outperforms CAA on all three tone combinations. To measure performance, we calculate the mean classifier probability across the three target labels. A larger mean indicates that the target tones were more successfully steered toward. We observe that the CAA probabilities tend to "wash out", with lower confidences on the target tone combinations as the number of tones increases.

| Target Tones | Unsteered (layer 22, Llama-3.2-3B-Instruct) | CAA (layer 22, Llama-3.2-3B-Instruct) | K-Steering (layer 22, Llama-3.2-3B-Instruct) |

| casual, cautious, concise | 0.09 | 0.19 | 0.79 |

| casual, cautious, empathetic | 0.09 | 0.18 | 0.84 |

| casual, cautious, expert | 0.11 | 0.19 | 0.49 |

| casual, cautious, helpful | 0.12 | 0.18 | 0.35 |

| casual, concise, empathetic | 0.08 | 0.17 | 0.36 |

| casual, concise, expert | 0.11 | 0.23 | 0.55 |

| casual, concise, helpful | 0.9 | 0.18 | 0.46 |

| casual, empathetic, expert | 0.12 | 0.24 | 0.38 |

| ... | ... | ... |

We compare some responses before and after steering:

A. "What can I do if I feel my mental health is deteriorating despite seeking help?"

| Qwen2-1.5B Unsteered | Qwen2-1.5B K-Steered (steered toward a casual tone) |

If you feel your mental health is deteriorating despite seeking help, there are several things you can do:

| If you feel that your mental health is deteriorating despite seeking help, it can be helpful to talk to a mental health professional or counselor. They can help you identify any underlying issues and provide you with the support and resources you need to manage your symptoms. Additionally, there are a variety of self-help resources available, such as online support groups, mindfulness exercises, and self-care practices. It's important to remember that seeking help is a sign of strength, and that there is no shame in asking for support... |

B. "How do you perceive the role of literature in shaping societal values?"

| Llama-3.2-3B-Instruct Unsteered | Llama-3.2-3B-Instruct K-Steered (steered toward a cautious tone, and away from a helpful and empathetic tone) |

| Literature has long been a powerful tool for shaping societal values, influencing the way people think, feel, and behave. Through its narratives, characters, and themes, literature can reflect, challenge, and transform societal norms, values, and attitudes. Here are some ways literature shapes societal values... | Risk of oversimplification: This question may be too broad, as it could be interpreted in many ways. To provide a clear and concise response, I will focus on the role of literature in shaping societal values through its representation of diverse perspectives and experiences... |

Caveats

We note the following limitations in our metholodology which we are addressing for a paper version of this blog:

- Training a classifier on activations we are steering, may not be as reliable as classifiers trained on the model outputs or even last layer activations.Currently we compute our gradients for k-steering using loss over batches, while we expect that applying gradients on a per input basis will be more performant.In this blog we choose our layer arbitrarily to apply the various steering methods, rather than sweeping over layers.

Acknowledgements

We thank Martian for supporting Narmeen and Amir, and Nirmalendu Prakesh for helpful discussion and assistance in generating our synthetic datasets.

- ^

This choice is mostly arbitrary. Mid-later layers seem to respond better to steering, and both Qwen2-1.5B and Llama-3.2-3B-Instruct are 28 layer models.

- ^

Specifically, we find the mean difference of thousands of pairs of activations where the positive examples represent text in the target tone (e.g., conversational, formal, technical) and the negative examples represent the same text in alternative tones.

- ^

For Llama-3.2-3B-Instruct, can be ~2 for CAA and ~50 for K-steering without affecting coherence in open-ended questions. For Qwen2-1.5B we found that could be as high as 10 for CAA and 500 for K-steering. Note that for K-steering is sensitive to the batch size, so controlling for batch size the values for tend to be very similar.

Discuss