A few weeks ago, I stumbled across AI 2027, a forecast of near-term AI progress that predicts we'll reach AGI around 2027, and (in the worst-case scenario) human extinction by 2030.

My reaction was the same as it has been to other AGI timelines I’ve read: it seemed to fall somewhere between science fiction and delusion.

But as I dug deeper into the project, I learned something interesting about its lead author, Daniel Kokotajlo. It turns out that nearly four years ago, he wrote a similar post that correctly predicted the major trends leading to ChatGPT, GPT-4o, and o1.

With that in mind, I started to take AI 2027 more seriously, but it still strained my credulity. I have a hard time believing that we’re going to automate 90% of jobs and cure all diseases by the next presidential election. And yet, there are many, many smart people who seem to believe this (or at least some close variant of it).

So I wanted to articulate precisely why I’m skeptical of not just AI 2027 but nearly all AGI timelines that I’ve encountered.

I don’t know whether I’m right - sharper minds than mine have spent far more hours pondering this - but I'd like to finally get my arguments down on paper, for posterity if nothing else.

What Is AI 2027?

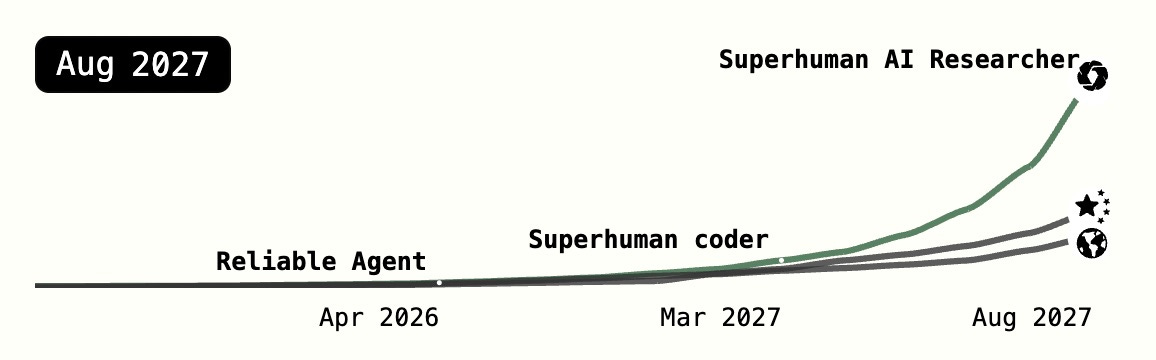

For a bit of background, let’s recap the main ideas in AI 2027. The research project presents a detailed, month-by-month scenario forecasting the development of AGI. It lays out a series of specific technical milestones and their corresponding societal impacts, ultimately presenting two divergent futures - a "green" ending and a "red" ending.

The document resembles other AGI write-ups like Leopold Aschenbrenner's "Situational Awareness," which measured AI progress in orders of magnitude (OOMs) across data center capacity, chip development, and algorithmic breakthroughs. Like other AI safety discussions, it attempts to quantify existential risk, though it goes further than most in providing specific predictions about AI capabilities and deployment.

If you haven't seen it, it's certainly a fascinating read. Some of the major milestones mentioned include:

Late 2025: The world's most expensive AI

Early 2026: AI starts to speed up AI research itself

Mid 2026: China begins nationalizing AI research

Early 2027:

New agent architecture that continuously learns and trains

China steals state-of-the-art weights from US companies

Leading AI companies sign a DoD contract for national security reasons

Mid 2027:

SOTA AI begins self-improving (and most of the humans don't meaningfully contribute anymore)

AGI is reached; agents are more capable than a human remote worker

The White House begins planning geopolitical moves around AGI

Late 2027:

AI can conduct AI research faster and better than humans

The latest AGI is misaligned and attempts to subvert its creators

Government oversight kicks in

There's much, much more in the full forecast, but I also want to touch on the two endings. In the slowdown ("green") ending, humanity successfully coordinates a slowdown in AI development, and AI systems remain under human control. The US and China broker a deal on mutually aligned AGI, and by the 2028 presidential election, we're ushering in a new AI era - by 2029, we've developed "fusion power, quantum computers, and cures for many diseases."

In the race ("red") ending, competitive pressures between the US and China drive rapid AI development without adequate safety measures. We create ever more intelligent AGIs, each as misaligned as the last (China does the same, and eventually our AGI and China's AGI negotiate their own agreement). Each country invests more and more into new robotics factories and labs to keep up with the arms race - by late 2028, these factories are creating "a million new robots per month." And by 2030, our AGI overlord finally ends the human race in its quest to become ever more advanced.

What Kokotajlo Got Right

As I mentioned, I was initially skeptical of AI 2027's predictions. But one thing made me - at least partially - change my mind about how much weight to give it: the lead author, Daniel Kokotajlo.

In August 2021 (ChatGPT came out in November 2022, if you're counting at home), he wrote a very similar blog post titled "What 2026 Looks Like." In it, he outlined his predictions for the next five years of AI research.

Here are some of the things he got right:

2022

GPT-3 would become obsolete and be replaced by chatbots (ChatGPT arrived in late November of that year)

Moreover, chatbots would be fun to talk to, but ultimately considered shallow in their knowledge

2023

Multimodal transformers become the next big thing

LLMs are using a significant fraction of NVIDIA's chip output

An explosion of apps using new models, and a cascade of VC money flowing into new startups

2024

AI progress feels like it's plateaued, despite the spending

The US places export controls on AI chips (this happened in late 2022)

2025

RL delivers a new breakthrough in fine-tuning large models (this is, at a very high level, how "reasoning" models work, which arrived in late 2024 rather than 2025)

AI can play Diplomacy as well as the best humans (this happened in late 2022)

To be clear: he also got several things wrong, and a number of other predictions have yet to materialize. And yet - these predictions were laid out over a year before ChatGPT even existed! From someone who, as far as I can tell, wasn't even an AI researcher at the time[1].

To quote Scott Alexander (who would come to collaborate on AI 2027):

If you read Daniel's [2021] blog post without checking the publication date, you could be forgiven for thinking it was a somewhat garbled but basically reasonable history of the last four years.

I wasn't the only one who noticed. A year later, OpenAI hired Daniel to their policy team. While he worked for them, he was limited in his ability to speculate publicly. "What 2026 Looks Like" promised a sequel about 2027 and beyond, but it never materialized.

It’s because of this impressive track record that I think it's worth considering what AI 2027 likely gets right. And this time around, he's partnered with forecasting experts and is coming to the table after a stint behind the scenes at OpenAI.

Several short-term predictions in AI 2027 seem likely to me based on current trends:

China's AI competitiveness is real, and DeepSeek has become central to its strategy. China's history of appropriating American research makes the possibility of state-sponsored model weight theft entirely plausible.

Near-term AI development predictions through 2026 are likely already on roadmaps at major AI labs. Given the months or years between conception and deployment, many capabilities Kokotajlo describes are probably in various stages of development.

AI coding tools are already providing daily value despite limitations. Dismissing their impact feels shortsighted when many developers already leverage these tools productively.

The specificity of Kokotajlo's predictions is also commendable. Few AI analysts will make concrete forecasts over multi-year timescales, preferring vague trends to testable claims. I very much appreciate the courage this requires - the best I've done is predict a year in advance, with much higher-level analysis than AI 2027.

My Problem(s) with AGI Timelines

But despite some of the strengths of AI 2027, I can't help but be skeptical of its long-term predictions, for the same reasons that I feel other AGI timelines fall apart. Yes, I understand that humans are incredibly bad at appreciating exponential curves. And yes, I do think we're on a very rapid curve when it comes to model benchmark performance.

But there are three points on which I have yet to be convinced:

Reality has a surprising amount of detail

Models are not the same as products

Decisions are made by people, not computers

Let's go through each one.