Published on April 23, 2025 12:01 PM GMT

Dear policymakers,

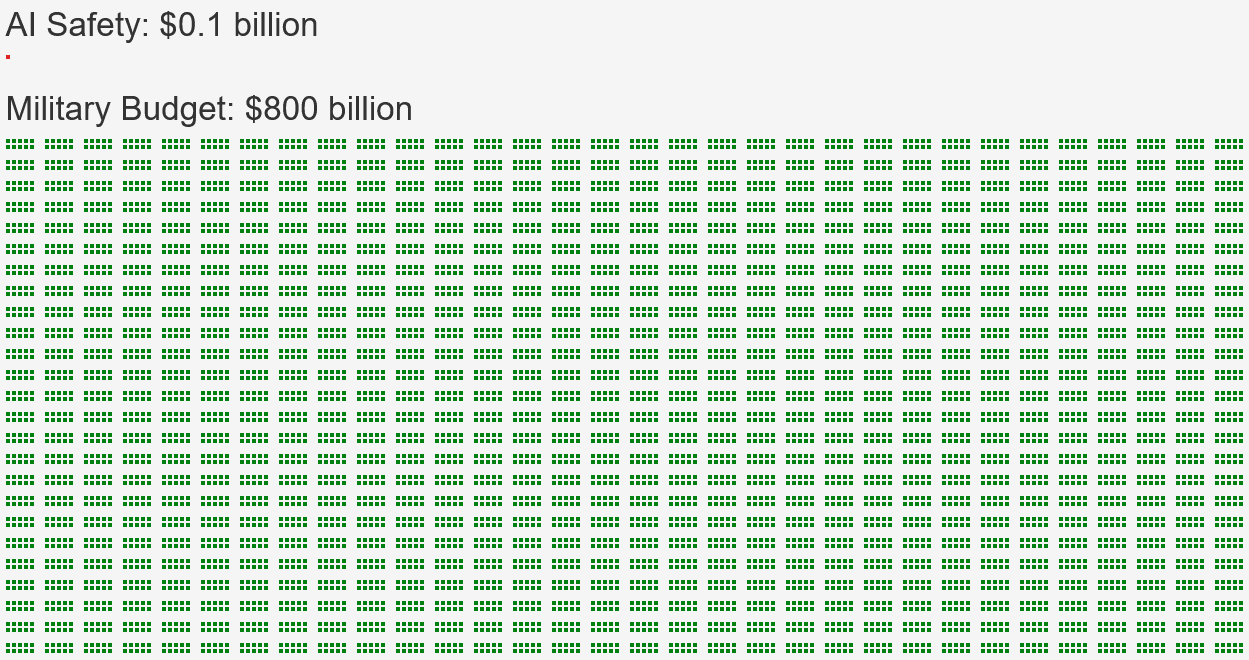

We demand that the AI alignment budget be Belief-Consistent with the military budget.

Belief-Consistency is a simple yet powerful idea:

- If you spend 8000 times less on AI alignment than on the military, you must also believe that AI risk is 8000 times smaller than military risk.
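The 8000x figure is the ratio of the two rounded budgets in the infographic. Here is a minimal Python sketch of that arithmetic. It assumes the figures given in the References: $800 billion for the US military, and $0.1 billion, the low end of the worldwide AI safety estimate. The function name `spending_ratio` is hypothetical.

```python
# Rounded figures from the letter's infographic (see References).
MILITARY_BUDGET_USD = 800e9   # US military budget, ~$820 billion in 2024
AI_SAFETY_BUDGET_USD = 0.1e9  # low end of the $0.1-0.2 billion worldwide estimate

def spending_ratio(military_usd: float, alignment_usd: float) -> float:
    """How many times more is spent on the military than on AI alignment."""
    return military_usd / alignment_usd

ratio = spending_ratio(MILITARY_BUDGET_USD, AI_SAFETY_BUDGET_USD)
print(f"{ratio:,.0f}x")  # 8,000x
```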

Yet the only way to reach Belief-Consistency is to

Greatly increase AI alignment spending,

or

Become 99.95% certain that you are right, and that the majority of AI experts, other experts, superforecasters, and the general public are all wrong.

Let us explain:

In order to believe that AI risk is 8000 times less than military risk, you must believe that an AI catastrophe (killing 1 in 10 people) is less than 0.001% likely.

This clearly contradicts the median AI expert, who sees a 5% to 12% chance of an AI catastrophe (killing 1 in 10 people); the median superforecaster, who sees 2.1%; other experts, who see 5%; and the general public, who sees 5%.

In fact, assigning only a 0.001% chance contradicts the median expert so profoundly that you need to be 99.95% certain you will never realize you were wrong and the majority of experts were right!

If there were more than a 0.05% chance that, after studying the disagreement further, you would conclude the risk exceeds 2% just as most experts say, then the laws of probability forbid you from believing in a 0.001% risk (because 0.05% × 2% = 0.001%).
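The "laws of probability" here are conservation of expected evidence: your credence today must equal the expected value of the credence you would hold after further study. If some outcome would leave you at 2% or more, that outcome alone contributes at least its probability times 2%. Here is a minimal Python sketch of that bound and its rearrangement. The helper names `min_current_credence` and `required_certainty` are hypothetical, and the inputs are the letter's numbers.

```python
# Conservation of expected evidence: today's credence equals the expected
# value of the credence you would hold after studying the question further.

def min_current_credence(p_update: float, threshold: float) -> float:
    """Lowest credence you can coherently hold today if there is a chance
    `p_update` that further study would raise it to at least `threshold`.
    All other outcomes contribute a non-negative amount, so the bound is
    p_update * threshold."""
    return p_update * threshold

def required_certainty(credence: float, threshold: float) -> float:
    """How sure you must be that you will NOT end up at `threshold` or above,
    given a current credence of `credence` (the bound above, rearranged)."""
    return 1 - credence / threshold

# The letter's numbers: a 0.05% chance of updating to a 2% risk estimate.
print(f"{min_current_credence(0.0005, 0.02):.3%}")  # 0.001%
# So holding 0.001% requires 99.95% certainty of never updating to 2% or more.
print(f"{required_certainty(0.00001, 0.02):.2%}")   # 99.95%
```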

We believe that concern about foreign invasion has decreased over the last century and concern about AGI has increased over the last decade, yet budgets have stayed at the status quo, creating a massive inconsistency between belief and behaviour.

Do not let humanity's story be so heartbreaking.

Version 1.1us

Please add your signature by asking in a comment (or emailing me). Thank you so much.

Signatories:

- Knight Lee

References

Claude 3.5 drew the infographic for me.

“Military Budget: $800 billion”

- [1] says it's $820 billion in 2024. $800 billion is an approximate number.

“AI Safety: $0.1 billion”:

- The US AI Safety Institute (AISI) is the most notable US government-funded AI safety organization. It does not focus on ASI takeover risk, though it may partially focus on other catastrophic AI risks. AISI's budget is $10 million according to [2]. Worldwide AI safety funding is between $0.1 billion and $0.2 billion according to [3].

“the median AI expert, who sees a 5%-12% chance of an AI catastrophe (killing 1 in 10 people), other experts, who see 5%, the median superforecaster, who sees 2.1%, and the general public, who sees 5%.”

- [4] says: median superforecaster: 2.13%; median “domain experts,” i.e. AI experts: 12%; median “non-domain experts”: 6.16%; public survey: 5%. These are predictions for 2100. However, they were made before ChatGPT was released, so it's possible these forecasters now expect the same risk sooner than 2100.

- [5] says the median AI expert sees a 5% chance of “future AI advances causing human extinction or similarly permanent and severe disempowerment of the human species” and a 10% chance of “human inability to control future advanced AI systems causing human extinction or similarly permanent and severe disempowerment of the human species.”

[1] USAFacts Team. (August 1, 2024). “How much does the US spend on the military?” USAFacts. https://usafacts.org/articles/how-much-does-the-us-spend-on-the-military/

[2] Wiggers, Kyle. (October 22, 2024). “The US AI Safety Institute stands on shaky ground.” TechCrunch. https://techcrunch.com/2024/10/22/the-u-s-ai-safety-institute-stands-on-shaky-ground/

[3] McAleese, Stephen, and NunoSempere. (July 12, 2023). “An Overview of the AI Safety Funding Situation.” LessWrong. https://www.lesswrong.com/posts/WGpFFJo2uFe5ssgEb/an-overview-of-the-ai-safety-funding-situation/?commentId=afv74rMgCbvirFdKp

[4] Karger, Ezra, Josh Rosenberg, Zachary Jacobs, Molly Hickman, Rose Hadshar, Kayla Gamin, and P. E. Tetlock. (August 8, 2023). “Forecasting Existential Risk: Evidence from a Long-Run Forecasting Tournament.” Forecasting Research Institute. p. 259. https://static1.squarespace.com/static/635693acf15a3e2a14a56a4a/t/64f0a7838ccbf43b6b5ee40c/1693493128111/XPT.pdf#page=260

[5] Stein-Perlman, Zach, Benjamin Weinstein-Raun, and Katja Grace. (August 3, 2022). “2022 Expert Survey on Progress in AI.” AI Impacts. https://aiimpacts.org/2022-expert-survey-on-progress-in-ai/

Why this open letter might succeed even if previous open letters did not

Politicians don't reject pausing AI because they are evil and want a misaligned AI to kill their own families! They reject pausing AI because their P(doom) honestly is low, and they genuinely believe that if the US pauses then China will race ahead, building even more dangerous AI.

But however low their P(doom) is (maybe it's 1%), it may still be high enough that they have to agree with this argument.

It is very easy to believe that "we cannot let China win," or "if we don't do it someone else will," and reject pausing AI. But it may be much harder to be 99.999% sure there will be no AI catastrophe, and thus 99.95% sure that the majority of experts are wrong, which is what rejecting this letter requires.

Also remember that AI capabilities spending is at least 1000x greater than alignment spending.

This makes it far easier for me to make a quantitative argument for increasing alignment funding than for increasing regulation.

I am not against asking for regulation! I just think we are dropping the ball when it comes to asking for alignment funding.

PS: I feel this letter is better than my previous draft because, although it is longer, its gist is easier to understand and memorize: "make the AI alignment budget Belief-Consistent with the military budget."

Why I feel almost certain this open letter is a net positive

Delaying AI capabilities alone isn't enough. If you wished for AI capabilities to be delayed by 1000 years, one way to grant your wish would be for the Earth to have formed 1000 years later, which delays all of history by the same 1000 years.

Clearly, that's not very useful. AI capabilities have to be delayed relative to something else.

That something else is either:

Progress in alignment (according to optimists like me)

or

Progress towards governments freaking out about AGI and going nuclear to stop it (according to LessWrong's pessimist community)

Either way, the AI Belief-Consistency Letter speeds up that progress many times more than it speeds up capabilities. Let me explain.

Case 1:

Case 1 assumes we have a race between alignment and capabilities. From first principles, the relative funding of alignment and capabilities matters in this case.

Increasing alignment funding by 2x ought to have a similar effect to decreasing capability funding by 2x.

Various factors may make the relationship inexact. For example, someone who believes capabilities depend more heavily on funding might argue that increasing alignment funding by 4x is only equivalent to decreasing capabilities funding by 2x.

But so long as one doesn't assume insane differences, the AI Belief-Consistency Letter is a net positive in Case 1.

This is because alignment funding is only at $0.1 to $0.2 billion, while capabilities funding is at $200+ billion to $600+ billion.

If the AI Belief-Consistency Letter increases both by $1 billion, alignment funding grows 6x to 11x (an increase of 5 to 10 times its current level), while capabilities funding grows only 1.002x to 1.005x. That would clearly be a net positive.
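The Case 1 arithmetic can be checked with a short Python sketch. It assumes the funding ranges quoted above ($0.1 to $0.2 billion for alignment, $200 to $600 billion for capabilities) and a hypothetical $1 billion added to both budgets. `funding_multiplier` is an illustrative name, and it reports the new budget divided by the old one.

```python
def funding_multiplier(current_billion: float, added_billion: float) -> float:
    """Factor by which a budget grows when a fixed sum is added to it."""
    return (current_billion + added_billion) / current_billion

ADDED_BILLION = 1.0  # hypothetical: $1 billion added to both budgets

# Current funding ranges quoted above, in billions of US dollars.
for label, budgets in (("alignment", (0.1, 0.2)), ("capabilities", (200.0, 600.0))):
    for current in budgets:
        m = funding_multiplier(current, ADDED_BILLION)
        print(f"{label:<12} ${current:g}B -> {m:.3f}x")
```

It prints about 11x and 6x for alignment against roughly 1.005x and 1.002x for capabilities.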

Case 2:

Even if the wildest dreams of the AI pause movement come true, and the US, China, and EU all agree to halt all capabilities above a certain threshold, the rest of the world still exists, so capabilities funding is effectively reduced by only about 10x.

That would be very good, but we'll still have a race between capabilities and alignment, and Case 1 still applies. The AI Belief-Consistency Letter still increases alignment funding by far more than capabilities funding.
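The same arithmetic can be rerun under Case 2's assumption. Here is a minimal Python sketch. It assumes the pause cuts the $200 to $600 billion capabilities figures by the 10x mentioned above (to roughly $20 to $60 billion). As in Case 1, it adds a hypothetical $1 billion to both budgets. All names are illustrative.

```python
def funding_multiplier(current_billion: float, added_billion: float) -> float:
    """Factor by which a budget grows when a fixed sum is added to it."""
    return (current_billion + added_billion) / current_billion

PAUSE_REDUCTION = 10  # assumption from Case 2: a pause cuts capabilities funding ~10x
ADDED_BILLION = 1.0   # hypothetical $1 billion added to both budgets

alignment = [0.1, 0.2]                                        # $ billions, unchanged by a pause
capabilities = [b / PAUSE_REDUCTION for b in (200.0, 600.0)]  # ~$20B to ~$60B

# Least favourable pairing: smallest alignment boost vs largest capabilities boost.
smallest_alignment_boost = min(funding_multiplier(a, ADDED_BILLION) for a in alignment)
largest_capabilities_boost = max(funding_multiplier(c, ADDED_BILLION) for c in capabilities)

print(f"alignment grows at least {smallest_alignment_boost:.1f}x")
print(f"capabilities grows at most {largest_capabilities_boost:.3f}x")
```

Even in the least favourable pairing, alignment funding grows about 6x while capabilities funding grows about 1.05x.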

The only case where we need not worry about increasing alignment funding is if capabilities funding is reduced to zero and there is no longer a race between capabilities and alignment.

The only way to achieve that worldwide is to "solve diplomacy," which is not going to happen, or to "go nuclear," as Eliezer Yudkowsky suggests.

If your endgame is to "go nuclear" and make severe threats to other countries despite the risk, you surely can't oppose the AI Belief-Consistency Letter on the grounds that "it speeds up capabilities because it makes governments freak out about AGI," since you actually need governments to freak out about AGI.

Conclusion

Make sure you don't oppose this idea based on short-term heuristics like "the slower capabilities grow, the better," without reflecting on why you believe so. Think about what your endgame is. Is it slowing down capabilities to make time for alignment? Or is it slowing down capabilities to make time for governments to freak out and halt AI worldwide?
