Reliable evaluation of large language model (LLM) outputs is a critical, and often complex, part of AI system development. Adding consistent, objective evaluation pipelines to existing workflows can introduce significant overhead. The Atla MCP Server addresses this by exposing Atla’s LLM Judge models, which are designed for scoring and critique, through the Model Context Protocol (MCP). This local, standards-compliant interface lets developers incorporate LLM assessments directly into their tools and agent workflows.

Model Context Protocol (MCP) as a Foundation

The Model Context Protocol (MCP) is an open protocol that standardizes how LLM applications connect to external tools. By placing tool usage behind a protocol, MCP decouples the logic of tool invocation from the model implementation itself. This design promotes interoperability: any MCP-compatible client can use any tool exposed through an MCP-compatible interface.
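To make the decoupling concrete, the sketch below builds the kind of message an MCP client sends to invoke a tool. MCP messages use JSON-RPC 2.0, and tool invocation uses the `tools/call` method with a tool name and an arguments object. The helper `make_tool_call` is hypothetical, the argument names passed to `evaluate_llm_response` are assumptions for illustration only, and the sketch omits the `initialize` handshake and transport layer that a real client handles first.

```python
import json
from typing import Any, Dict


def make_tool_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message.

    The client only names the tool and supplies a JSON object of arguments;
    nothing in the message depends on which model sits behind the client.
    """
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical argument names, used only to illustrate the message shape.
request = make_tool_call(
    1,
    "evaluate_llm_response",
    {
        "evaluation_criteria": "Is the answer factually correct?",
        "llm_prompt": "What is the capital of France?",
        "llm_response": "Paris.",
    },
)
print(request)
```

Because the request carries only a tool name and plain JSON, the same client code works against any server that exposes a tool with that name.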

The Atla MCP Server builds on this protocol to expose evaluation capabilities in a way that is consistent, transparent, and easy to integrate into existing toolchains.

Overview of the Atla MCP Server

The Atla MCP Server is a locally hosted service that enables direct access to evaluation models designed specifically for assessing LLM outputs. Compatible with a range of development environments, it supports integration with tools such as:

- Claude Desktop: Enables evaluation within conversational contexts.

- Cursor: Allows in-editor scoring of code snippets against specified criteria.

- OpenAI Agents SDK: Facilitates programmatic evaluation prior to decision-making or output dispatch.

By integrating the server into an existing workflow, developers can perform structured evaluations on model outputs using a reproducible and version-controlled process.

Purpose-Built Evaluation Models

The Atla MCP Server provides access to two dedicated evaluation models:

- Selene 1: A full-capacity model trained explicitly on evaluation and critique tasks.

- Selene Mini: A resource-efficient variant designed for faster inference with reliable scoring capabilities.

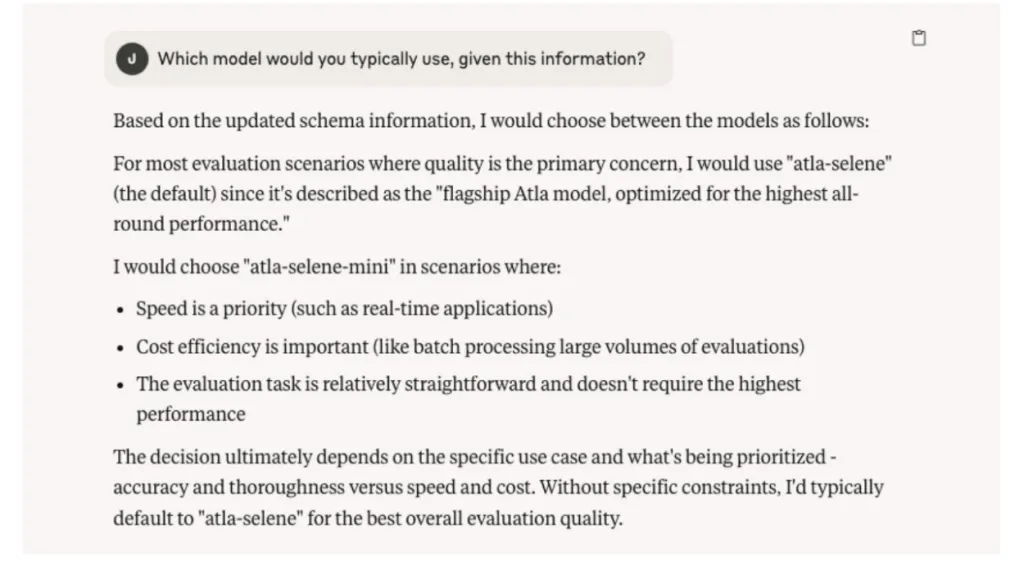

Which Selene model does the agent use?

By default, the agent decides which Selene model to call. If you don’t want to leave that choice to the agent, you can specify the model explicitly.
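A client that wants to pin the model could pass it as an extra tool argument. The sketch below assumes the evaluation tools accept an optional `model_id` argument and that the two models are identified as `atla-selene` and `atla-selene-mini`; the argument name, the identifiers, and the helper `build_eval_arguments` are all assumptions to check against the repository's documentation. Omitting `model_id` leaves the choice to the agent.

```python
from typing import Dict, Optional

# Assumed model identifiers; verify against Atla's documentation.
SELENE = "atla-selene"
SELENE_MINI = "atla-selene-mini"
KNOWN_MODELS = {SELENE, SELENE_MINI}


def build_eval_arguments(
    criteria: str,
    prompt: str,
    response: str,
    model_id: Optional[str] = None,
) -> Dict[str, str]:
    """Build arguments for a single-criterion evaluation call.

    `model_id` is included only when the caller pins a model explicitly.
    All argument names here are hypothetical.
    """
    if model_id is not None and model_id not in KNOWN_MODELS:
        raise ValueError(f"unknown model: {model_id}")
    args = {
        "evaluation_criteria": criteria,
        "llm_prompt": prompt,
        "llm_response": response,
    }
    if model_id is not None:
        args["model_id"] = model_id
    return args


# Leave the model choice to the agent:
default_args = build_eval_arguments("Is it concise?", "Summarize X.", "X is Y.")
# Pin the faster model, e.g. for a latency-sensitive loop:
mini_args = build_eval_arguments("Is it concise?", "Summarize X.", "X is Y.", SELENE_MINI)
```

Rejecting unknown identifiers up front surfaces a typo in the client rather than as a failed tool call.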

Unlike general-purpose LLMs that simulate evaluation through prompted reasoning, Selene models are optimized to produce consistent, low-variance evaluations and detailed critiques. The aim is to reduce failure modes such as self-consistency bias or the reinforcement of incorrect reasoning.

Evaluation APIs and Tooling

The server exposes two primary MCP-compatible evaluation tools:

- evaluate_llm_response: Scores a single model response against a user-defined criterion.

- evaluate_llm_response_on_multiple_criteria: Enables multi-dimensional evaluation by scoring across several independent criteria.
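Conceptually, multi-criteria evaluation produces one independent judgment per criterion and reports each separately, instead of folding everything into a single score. The sketch below mimics that shape locally and does not claim the server is implemented this way: `evaluate_one` stands in for a single-criterion judge (in practice, something like a call to `evaluate_llm_response`), while the `{"score", "critique"}` result shape and the rule-based `stub_judge` are assumptions for illustration, not the server's actual output format.

```python
from typing import Callable, Dict, List

# Assumed result shape for one criterion: {"score": int, "critique": str}.
Result = Dict[str, object]
Evaluator = Callable[[str, str, str], Result]


def evaluate_on_multiple_criteria(
    evaluate_one: Evaluator, criteria: List[str], prompt: str, response: str
) -> Dict[str, Result]:
    """Score one response against each criterion independently."""
    return {c: evaluate_one(c, prompt, response) for c in criteria}


def stub_judge(criterion: str, prompt: str, response: str) -> Result:
    """Hypothetical stand-in for a judge model: a toy rule-based scorer."""
    if criterion == "conciseness":
        score = 5 if len(response.split()) <= 10 else 2
    else:
        score = 3
    return {"score": score, "critique": f"{criterion}: scored {score}"}


results = evaluate_on_multiple_criteria(
    stub_judge,
    ["conciseness", "helpfulness"],
    "Summarize MCP.",
    "MCP standardizes tool access.",
)
for name, result in results.items():
    print(name, result["score"])
```

Keeping results keyed by criterion means a caller can see exactly which dimension failed, which is what makes targeted revision possible.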

These tools support fine-grained feedback loops and can be used to implement self-correcting behavior in agentic systems or to validate outputs prior to user exposure.
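Validating an output before it reaches a user can be as simple as a threshold gate over per-criterion results. The sketch below assumes a 1 to 5 score scale, a `{"score": ..., "critique": ...}` result per criterion, and a pass threshold of 4; the function `quality_gate`, the threshold, and the sample results are hypothetical, and the real scale and output format depend on the criteria and on what the server returns.

```python
from typing import Dict, List, Tuple

# Hypothetical per-criterion results, e.g. parsed from evaluation tool output.
Results = Dict[str, Dict[str, object]]


def quality_gate(results: Results, threshold: int = 4) -> Tuple[bool, List[str]]:
    """Return (passed, critiques of the failing criteria).

    The output is released only if every criterion meets the threshold;
    otherwise the critiques can be fed back to the agent for revision.
    """
    failing = [
        str(r["critique"]) for r in results.values() if int(r["score"]) < threshold
    ]
    return (not failing, failing)


sample = {
    "empathy": {"score": 5, "critique": "Acknowledges the customer's frustration."},
    "policy alignment": {"score": 2, "critique": "Promises a refund the policy does not allow."},
}
passed, feedback = quality_gate(sample)
print(passed, feedback)
```

Returning the failing critiques alongside the verdict lets the same function drive both blocking and self-correction.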

Demonstration: Feedback Loops in Practice

Using Claude Desktop connected to the MCP Server, we asked the model to suggest a new, humorous name for the Pokémon Charizard. Selene then evaluated the generated name against two criteria: originality and humor. Claude revised the name based on Selene’s critiques. This simple loop shows how agents can improve outputs dynamically using structured, automated feedback, with no manual intervention required.
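The generate, evaluate, revise cycle from this demonstration can be sketched as a bounded loop. Here `refine`, `generate`, `evaluate`, and `revise` are hypothetical: in the Claude Desktop demo the model itself acts as generator and reviser, and `evaluate` would correspond to a call to the evaluation tools. The toy stubs exist only to make the loop runnable, and the threshold and round limit are assumptions.

```python
from typing import Callable, Dict, List, Tuple

Scores = Dict[str, int]


def refine(
    generate: Callable[[], str],
    evaluate: Callable[[str], Tuple[Scores, List[str]]],
    revise: Callable[[str, List[str]], str],
    threshold: int = 4,
    max_rounds: int = 3,
) -> Tuple[str, int]:
    """Revise a draft until every criterion meets the threshold.

    Returns the final draft and the number of revisions made. The loop is
    bounded so a judge that is never satisfied cannot stall the agent.
    """
    draft = generate()
    revisions = 0
    while True:
        scores, critiques = evaluate(draft)
        if all(s >= threshold for s in scores.values()):
            return draft, revisions
        if revisions == max_rounds:
            return draft, revisions
        draft = revise(draft, critiques)
        revisions += 1


# Toy stubs standing in for the model and the judge.
def toy_generate() -> str:
    return "Flamey"


def toy_evaluate(name: str) -> Tuple[Scores, List[str]]:
    # Toy rule: any name containing "Blaze" passes both criteria.
    score = 5 if "Blaze" in name else 2
    critiques = [] if score >= 4 else ["Originality: too generic.", "Humor: no wordplay."]
    return {"originality": score, "humor": score}, critiques


def toy_revise(name: str, critiques: List[str]) -> str:
    return "Blaze Runner"


final, rounds = refine(toy_generate, toy_evaluate, toy_revise)
print(final, rounds)  # Blaze Runner 1
```

Capping the number of rounds trades some quality for predictable latency, which matters when the loop runs inside an interactive agent.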

While this is a deliberately playful example, the same evaluation mechanism applies to more practical use cases. For instance:

- In customer support, agents can self-assess their responses for empathy, helpfulness, and policy alignment before submission.

- In code generation workflows, tools can score generated snippets for correctness, security, or style adherence.

- In enterprise content generation, teams can automate checks for clarity, factual accuracy, and brand consistency.
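Each of these scenarios comes down to a set of criteria passed to the multi-criteria tool. The presets below restate the criteria named in these use cases as a lookup table; the preset keys, the phrasing of each criterion, and the helper `criteria_for` are hypothetical, and production criteria would usually also spell out what each score level means.

```python
from typing import Dict, List

# Hypothetical criteria presets restating the use cases above.
CRITERIA_PRESETS: Dict[str, List[str]] = {
    "customer_support": [
        "Does the response show empathy for the customer's situation?",
        "Is the response helpful in resolving the customer's issue?",
        "Does the response comply with the support policy?",
    ],
    "code_generation": [
        "Is the code functionally correct?",
        "Is the code free of obvious security issues?",
        "Does the code follow the project's style guide?",
    ],
    "enterprise_content": [
        "Is the text clear and easy to follow?",
        "Are the factual claims accurate?",
        "Is the text consistent with the brand's voice?",
    ],
}


def criteria_for(use_case: str) -> List[str]:
    """Return a copy of the criteria for a use case, failing loudly on typos."""
    try:
        return list(CRITERIA_PRESETS[use_case])
    except KeyError:
        raise ValueError(f"no criteria preset for {use_case!r}") from None


print(len(criteria_for("code_generation")))  # 3
```

Keeping criteria in one versioned table supports the reproducible, version-controlled evaluation process described earlier.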

These scenarios show how integrating Atla’s evaluation models into production systems can add automated, repeatable quality checks across diverse LLM-driven applications.

Setup and Configuration

To begin using the Atla MCP Server:

- Obtain an API key from the Atla Dashboard.

- Clone the GitHub repository and follow the installation guide.

- Connect your MCP-compatible client (Claude, Cursor, etc.) to begin issuing evaluation requests.
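For Claude Desktop, connecting a local MCP server means adding an entry under `mcpServers` in its `claude_desktop_config.json` file, giving the command that launches the server and any environment variables it needs. The sketch below generates such an entry. The server name, the `uvx atla-mcp-server` launch command, the `ATLA_API_KEY` variable name, and the helper `atla_server_entry` are assumptions; use the exact values from the repository's installation guide.

```python
import json
from typing import Any, Dict


def atla_server_entry(api_key: str) -> Dict[str, Any]:
    """Build a Claude Desktop `mcpServers` entry for a locally launched server.

    The command, args, and environment variable name are placeholders to be
    replaced with the values from the repository's installation guide.
    """
    return {
        "command": "uvx",
        "args": ["atla-mcp-server"],
        "env": {"ATLA_API_KEY": api_key},
    }


# Replace the placeholder with the key obtained from the Atla Dashboard.
config = {"mcpServers": {"atla-mcp-server": atla_server_entry("<your-atla-api-key>")}}

# Print the JSON to merge into claude_desktop_config.json.
print(json.dumps(config, indent=2))
```

Passing the key through `env` keeps it out of the command line, so it does not appear in process listings.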

The server is built to support direct integration into agent runtimes and IDE workflows with minimal overhead.

Development and Future Directions

The Atla MCP Server was developed with the help of AI systems such as Claude to ensure compatibility and functional soundness in real-world applications. This iterative approach let the team test the evaluation tools within the same environments they are intended to serve.

Future enhancements will focus on expanding the range of supported evaluation types and improving interoperability with additional clients and orchestration tools.

To contribute or provide feedback, visit the Atla MCP Server GitHub repository. Developers are encouraged to experiment with the server, report issues, and explore use cases in the broader MCP ecosystem.

Note: Thanks to the Atla AI team for the thought leadership and resources for this article. The Atla AI team supported us in producing this content.

The post Atla AI Introduces the Atla MCP Server: A Local Interface of Purpose-Built LLM Judges via Model Context Protocol (MCP) appeared first on MarkTechPost.