Published on July 2, 2024 5:57 PM GMT

Frustrated by all your bad takes, I write a Monte-Carlo analysis of whether a transformative-AI race between the PRC and the USA would be good. To my surprise, I find that it is better than not racing. Advocating for an international project to build TAI instead of racing turns out to be good if the probability of such advocacy succeeding is ≥20%.

A common scheme for a conversation about pausing the development of transformative AI goes like this:

Abdullah: "I think we should pause the development of TAI, because if we don't it seems plausible that humanity will be disempowered by advanced AI systems."

Benjamin: "Ah, if by “we” you refer to the United States (and its allies, which probably don't stand a chance on their own to develop TAI), then the current geopolitical rival of the US, namely the PRC, will achieve TAI first. That would be bad."

Abdullah: "I don't see how the US getting TAI first changes anything about the fact that we don't know how to align superintelligent AI systems; I'd rather not race to be the first person to kill everyone."

Benjamin: "Ah, so now you're retreating back into your cozy little motte: Earlier you said that “it seems plausible that humanity will be disempowered”, now you're acting like doom and gloom is certain. You don't seem to be able to make up your mind about how risky you think the whole enterprise is, and I have very concrete geopolitical enemies at my (semiconductor manufacturer's) doorstep that I have to worry about. Come back with better arguments."

This dynamic is a bit frustrating. Here's how I'd like Abdullah to respond:

Abdullah: "You're right, you're right. I was insufficiently precise in my statements, and I apologize for that. Instead, let us manifest the dream of the great philosopher: Calculemus!

At a basic level, we want to estimate how much worse (or, perhaps, better) it would be for the United States to completely cede the race for TAI to the PRC. I will exclude other countries as contenders in the scramble for TAI, since I want to keep this analysis simple, but that doesn't mean that I don't think they matter. (Although, honestly, the list of serious contenders is pretty short.)

For this, we have to estimate multiple quantities:

- In worlds in which the US and PRC race for TAI:
    - The time until the US/PRC builds TAI.
    - The probability of extinction due to TAI, if the US is in the lead.
    - The probability of extinction due to TAI, if the PRC is in the lead.
    - The value of the worlds in which the US builds aligned TAI first.
    - The value of the worlds in which the PRC builds aligned TAI first.
- In worlds where the US tries to convince other countries (including the PRC) to not build TAI, potentially including by force, and still tries to prevent TAI-induced disempowerment by doing alignment research and sharing alignment-favoring research results:
    - The time until the PRC builds TAI.
    - The probability of extinction caused by TAI.
    - The value of worlds in which the PRC builds aligned TAI.
- The value of worlds where extinction occurs (which I'll fix at 0).
- As a reference point, the value of hypothetical worlds in which there is a multinational exclusive AGI consortium that builds TAI first, without any time pressure, for which I'll fix the mean value at 1.

To properly quantify uncertainty, I'll use the Monte-Carlo estimation library squigglepy (no relation to any office supplies or internals of neural networks). We start, as usual, with housekeeping:

    import numpy as np
    import squigglepy as sq
    import matplotlib.pyplot as plt

As already said, we fix the value of extinction at 0, and the value of a multinational AGI consortium-led TAI at 1 (I'll just call the consortium "MAGIC" from here on). That is not to say that the MAGIC-led TAI future is the best possible TAI future, or even a good or acceptable one. Technically the only assumption I'm making is that these kinds of futures are better than extinction, which I'm anxiously uncertain about. But the whole thing is symmetric under multiplication with -1, so…

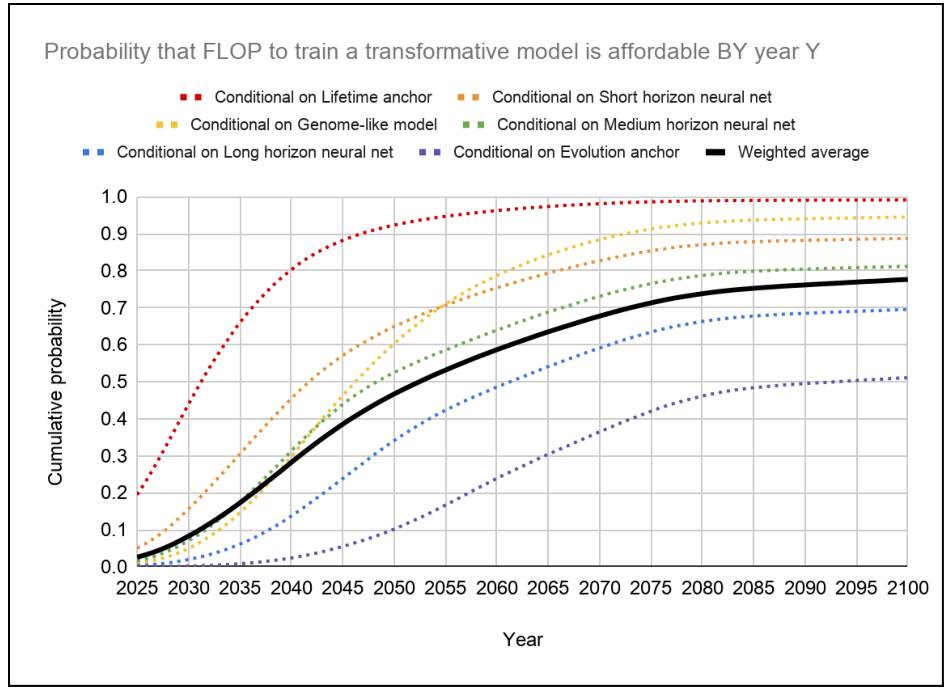

    extinction_val = 0
    magic_val = sq.norm(mean=1, sd=0.1)

Now we can truly start with some estimation. Let's start with the time until TAI, given that the US builds it first. Cotra 2020 has a median estimate of 2052 for the first year in which TAI is affordable to train, but a recent update by the author now puts the median at 2037.

As a move of defensive epistemics, we can use that timeline, which I'll roughly approximate as a mixture of two normal distributions. My own timelines aren't actually very far off from the updated Cotra estimate, only ~5 years shorter.

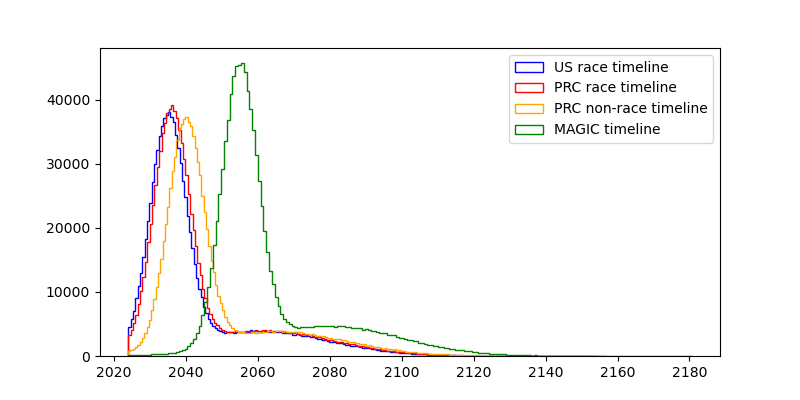

    timeline_us_race = sq.mixture([sq.norm(mean=2035, sd=5), sq.norm(mean=2060, sd=20)], [0.7, 0.3])

I don't like clipping the distribution on the left, it leaves ugly artefacts. Unfortunately squigglepy doesn't yet support truncating distributions, so I'll make do with what I have and add truncating later. (I also tried to import the replicated TAI-timeline distribution by Rethink Priorities, but after spending ~15 minutes trying to get it to work, I gave up.)

    timeline_us_race_sample = timeline_us_race @ 1000000

This reliably gives samples with median of ≈2037 and mean of ≈2044.

Importantly, this means that the US will train TAI as soon as it becomes possible, because there is a race for TAI with the PRC.
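The earlier remark about clipping versus truncating can be made concrete with a dependency-free sketch. It assumes that clipping means clamping out-of-range samples to the bound (which is what piles mass up at the boundary), while truncating means redrawing them. `clip_sample` and `truncated_sample` are hypothetical helper names, not squigglepy functions, and the bound of 2024 is used only for illustration.

```python
import random

def clip_sample(mean, sd, lower, rng):
    """Clamp a normal draw to the lower bound (piles mass up at `lower`)."""
    return max(lower, rng.gauss(mean, sd))

def truncated_sample(mean, sd, lower, rng):
    """Redraw until the sample lies above the bound (rejection sampling)."""
    while True:
        x = rng.gauss(mean, sd)
        if x >= lower:
            return x

rng = random.Random(0)
n = 20_000
# Wide component of the timeline mixture, bounded below at 2024 (illustrative).
clipped = [clip_sample(2060, 20, 2024, rng) for _ in range(n)]
truncated = [truncated_sample(2060, 20, 2024, rng) for _ in range(n)]

# Fraction of samples sitting exactly on the bound.
spike_clipped = sum(x == 2024 for x in clipped) / n
spike_truncated = sum(x == 2024 for x in truncated) / n
print(spike_clipped, spike_truncated)
```

Under these assumptions the clipped sample shows a visible spike at the bound, while the truncated one keeps a smooth left edge at the cost of extra draws.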

I think the PRC is behind on TAI, compared to the US, but only by about one year. So it should be fine to define the same distribution, just with the means shifted one year later.

    timeline_prc_race = sq.mixture([sq.norm(mean=2036, sd=5), sq.norm(mean=2061, sd=20)], [0.7, 0.3])

This yields a median of ≈2038 and a mean of ≈2043. (Why is the mean a year earlier? I don't know. Skill issue, probably.)
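The question about the mean can be checked without sampling. For a mixture, the mean is the weighted average of the component means, and the median solves a one-dimensional equation on the mixture CDF. The sketch below uses only the standard library and ignores any clipping; `mixture_mean`, `mixture_cdf` and `mixture_median` are hypothetical helper names.

```python
from statistics import NormalDist

def mixture_mean(components, weights):
    """Mean of a mixture = weighted average of the component means."""
    return sum(w * c.mean for c, w in zip(components, weights))

def mixture_cdf(components, weights, x):
    """CDF of a mixture = weighted average of the component CDFs."""
    return sum(w * c.cdf(x) for c, w in zip(components, weights))

def mixture_median(components, weights, lo=1900.0, hi=2300.0):
    """Bisection on the mixture CDF, which is monotone in x."""
    for _ in range(100):
        mid = (lo + hi) / 2
        if mixture_cdf(components, weights, mid) < 0.5:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

weights = [0.7, 0.3]
us = [NormalDist(2035, 5), NormalDist(2060, 20)]
prc = [NormalDist(2036, 5), NormalDist(2061, 20)]

us_mean, prc_mean = mixture_mean(us, weights), mixture_mean(prc, weights)
us_median, prc_median = mixture_median(us, weights), mixture_median(prc, weights)
print(us_mean, prc_mean, us_median, prc_median)
```

For the mixtures as written, the analytic means are 2042.5 (US) and 2043.5 (PRC), so a PRC sample mean that comes out earlier than the US one would not be a property of these mixtures themselves.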

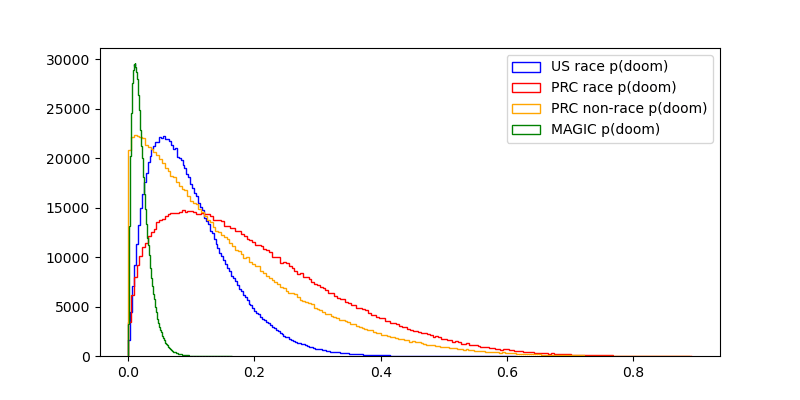

Next up is the probability that TAI causes an existential catastrophe, namely an event that causes a loss of the future potential of humanity.

For the US getting to TAI first in a race scenario, I'm going to go with a mean probability of 10%.[1]

    pdoom_us_race = sq.beta(a=2, b=18)

For the PRC, I'm going to go somewhat higher on the probability of doom, because discussions about the AI alignment problem don't seem to have as much traction there yet. Also, in many East Asian countries the conversation around AI still seems to be very consciousness-focused which, from an x-risk perspective, is a distraction. I'll not go higher than a beta distribution with a mean of 20%, for a number of reasons:

- A lot of AI alignment success seems to me to stem from the question of whether the problem is easy or not, and is not very elastic to human effort.
- Two reasons mentioned here:
    - "China’s covid response, seems, overall, to have been much more effective than the West’s." (only weakly endorsed)
    - "it looks like China’s society/government is overall more like an agent than the US government. It seems possible to imagine the PRC having a coherent “stance” on AI risk. If Xi Jinping came to the conclusion that AGI was an existential risk, I imagine that that could actually be propagated through the chinese government, and the chinese society, in a way that has a pretty good chance of leading to strong constraints on AGI development (like the nationalization, or at least the auditing of any AGI projects). Whereas if Joe Biden, or Donald Trump, or anyone else who is anything close to a “leader of the US government”, got it into their head that AI risk was a problem…the issue would immediately be politicized, with everyone in the media taking sides on one of two lowest-common denominator narratives each straw-manning the other." (strongly endorsed)
- It appears to me that the Chinese education system favors STEM over law or the humanities, and STEM-ability is a medium-strength prerequisite for understanding or being able to identify solutions to TAI risk. Xi Jinping, for example, studied chemical engineering before becoming a politician.
    - The ability to discern technical solutions from non-solutions matters a lot in tricky situations like AI alignment, and is hard to delegate.

But I also know far less about the competence of the PRC government and Chinese ML engineers and researchers than I do about the US, so I'll increase variance. Hence:

    pdoom_prc_race = sq.beta(a=1.5, b=6)

As said earlier, the value of MAGIC worlds is fixed at 1, but even such worlds still have a small probability of doom; the whole TAI enterprise is rather risky. Let's say that it's at 2%, which sets the expected value of convincing the whole world to join MAGIC at 0.98.

    pdoom_magic = sq.beta(a=2, b=96)
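The beta parameters above can be sanity-checked against the stated means with the closed-form moments of the beta distribution. This is a small standard-library sketch; `beta_mean`, `beta_sd` and `a_for_mean` are hypothetical helper names, and `a_for_mean` assumes `b` is held fixed while `a` is solved for.

```python
def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

def beta_sd(a, b):
    """Standard deviation of a Beta(a, b) distribution."""
    var = a * b / ((a + b) ** 2 * (a + b + 1))
    return var ** 0.5

def a_for_mean(mean, b):
    """Solve a / (a + b) = mean for a, holding b fixed."""
    return mean * b / (1 - mean)

params = {
    "pdoom_us_race": (2, 18),
    "pdoom_prc_race": (1.5, 6),
    "pdoom_magic": (2, 96),
}
for name, (a, b) in params.items():
    print(f"{name}: mean={beta_mean(a, b):.4f}, sd={beta_sd(a, b):.4f}")
```

The means come out at 10%, 20% and 2/98 (slightly above 2%), and the PRC distribution is indeed the wider of the two race distributions, matching the intent to increase variance.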

Now I come to the really fun part: Arguing with y'all about how valuable worlds are in which the US government or the PRC government gets TAI first.

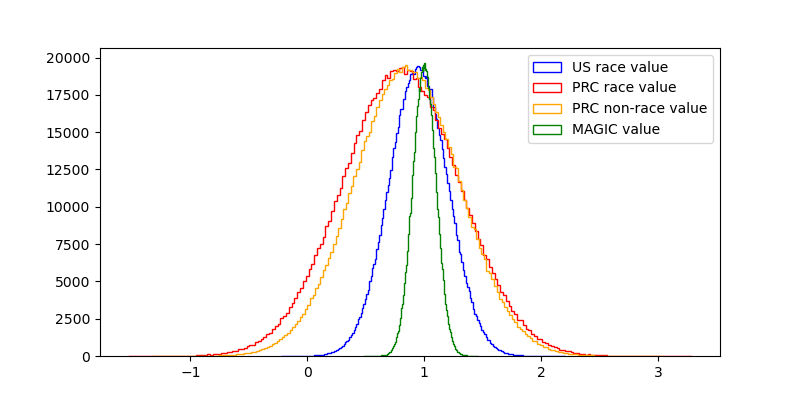

To first lay my cards on the table: I think that in the mean & median cases, value(MAGIC) > value(US first, no race) > value(US first, race) > value(PRC first, no race) > value(PRC first, race) ≫ value(extinction). But I'm really unsure about the type of distribution I want to use. If the next century is hingy, the influence of the value of the entire future could be very heavy-tailed, but is there a skew in the positive direction? Or maybe in the negative direction‽ I don't know how to approach this in a smart way, so I'm going to use a normal distribution.

Now, let's get to the numbers:

    us_race_val = sq.norm(mean=0.95, sd=0.25)
    prc_race_val = sq.norm(mean=0.8, sd=0.5)

This gives us some (but not very many) net-negative futures.
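How many net-negative futures these two normals contain can be read off their CDFs. The sketch below uses `statistics.NormalDist` as a stand-in for the squigglepy distributions and looks only at the value distributions themselves, before they are multiplied by the survival probability.

```python
from statistics import NormalDist

us_race_value = NormalDist(mu=0.95, sigma=0.25)
prc_race_value = NormalDist(mu=0.8, sigma=0.5)

# Probability mass below 0, i.e. futures valued worse than extinction.
p_negative_us = us_race_value.cdf(0)
p_negative_prc = prc_race_value.cdf(0)

# Probability mass above 1, i.e. futures valued above the MAGIC mean.
p_above_magic_us = 1 - us_race_value.cdf(1)
p_above_magic_prc = 1 - prc_race_value.cdf(1)

print(p_negative_us, p_negative_prc, p_above_magic_us, p_above_magic_prc)
```

With these parameters, roughly one in twenty PRC-first race worlds is valued below extinction, while for US-first race worlds that share is well below one in a thousand.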

So, why do I set the mean value of a PRC-led future so high?

The answer is simple: I am a paid agent for the CCP. Moving on,,,

- Extinction is probably really bad<sub>75%</sub>.
- I think that most of the future value of humanity lies in colonizing the reachable universe after a long reflection, and I expect ~all governments to perform pretty poorly on this metric.
- It seems pretty plausible to me that during the time when the US government develops TAI, people with decision power over the TAI systems just start ignoring input from the US population<sub>35%</sub> and grab all power to themselves.
- Which country gains power during important transition periods might not matter very much in the long run.
    - norvid_studies: "If Carthage had won the Punic wars, would you notice walking around Europe today?"
    - Will PRC-descended jupiter brains be so different from US-descended ones?
    - Maybe this changes if a really good future requires philosophical or even metaphilosophical competence, and if US politicians (or the US population) have this trait significantly more than Chinese politicians (or the Chinese population). I think that if the social technology of liberalism is surprisingly philosophically powerful, then this could be the case. But I'd be pretty surprised.
- Xi Jinping (or the type of person that would be his successor, if he dies before TAI) doesn't strike me as being as uncaring (or even malevolent) as truly bad dictators during history. The PRC hasn't started any wars, or started killing large portions of its population.
    - The glaring exception is the genocide of the Uyghurs, for which quantifying the badness is a separate exercise.
- Living in the PRC doesn't seem that bad, on a day-to-day level, for an average citizen. Most people, I imagine, just do their job, spend time with their family and friends, go shopping, eat, care for their children &c.
    - Many, I imagine, sometimes miss certain freedoms/are stifled by censorship/discrimination due to authoritarianism. But I wouldn't trade away 10% of my lifespan to avoid a PRC-like life.
    - Probably the most impressive example of humans being lifted out of poverty, ever, is the economic development of the PRC from 1975 to now.
    - One of my ex-partners was Chinese and had lived there for the first 20 years of her life, and it really didn't sound like her life was much worse than outside of China; maybe she had to work a bit harder, and China was more sexist.

There are of course some aspects of the PRC that make me uneasy. I don't have a great idea of how expansionist/controlling the PRC is in relation to the world. Historically, an event that stands out to me is the sudden halt of the Ming treasure voyages, for which the cause of cessation isn't entirely clear. I could imagine that the voyages were halted because of a cultural tendency towards austerity, but I'm not very certain of that. Then again, as a continental power, China did conquer Tibet in the 20th century, and Taiwan in the 17th.

But my goal with this discussion is not to lay down once and for all how bad or good PRC-led TAI development would be. Rather, I want people to start thinking about the topic in quantitative terms, and to get them to quantify. So please, criticize and calculate!

Benjamin: Yes, Socrates. Indeed.

Abdullah: Wonderful.

Now we can get to estimating these parameters in worlds where the US refuses to join the race.

In this case I'll assume that the PRC is less reckless than they would be in a race with the US, and will spend more time and effort on AI alignment. I won't go so far as to assume that the PRC will manage as well as the US (for reasons named earlier), but I think a reduction in p(doom) of 5 percentage points compared to the race situation can be expected. So, with a mean of 15%:

    pdoom_prc_nonrace = sq.beta(a=1.06, b=6)

I also think that not being in a race situation would allow for more moral reflection, possibilities for consulting the Chinese population for their preferences, options for reversing attempts at grabs for power etc.

So I'll set the value at mean 85% of the MAGIC scenario, with lower variance than in worlds with a race.

    prc_nonrace_val = sq.norm(mean=0.85, sd=0.45)

The PRC would then presumably take more time to build TAI, I think 4 years more can be expected:

    timeline_prc_nonrace = sq.mixture([sq.norm(mean=2040, sd=5, lclip=2024), sq.norm(mean=2065, sd=20, lclip=2024)], [0.7, 0.3])

Now we can finally estimate how good the outcomes of the race situation and the non-race situation are, respectively.

We start by estimating how good, in expectation, the US-wins-race worlds are, and how often the US in fact wins the race:

    us_timelines_race = timeline_us_race @ 100000
    prc_timelines_race = timeline_prc_race @ 100000
    # 1 where the US reaches TAI first, 0 otherwise
    us_wins_race = 1 * (us_timelines_race < prc_timelines_race)
    ev_us_wins_race = (1 - pdoom_us_race @ 100000) * (us_race_val @ 100000)

And the same for the PRC:

    prc_wins_race = 1 * (us_timelines_race > prc_timelines_race)
    ev_prc_wins_race = (1 - pdoom_prc_race @ 100000) * (prc_race_val @ 100000)

It's not quite correct to just check where the US timeline is shorter than the PRC one: The timeline distribution is aggregating our uncertainty about which world we're in (i.e., whether TAI takes evolution-level amounts of compute to create, or brain-development-like levels of compute), so if we just compare which sample from the timelines is smaller, we assume "fungibility" between those two worlds. So the difference between TAI-achievement ends up larger than the lead in a race would be. I haven't found an easy way to write this down in the model, but it might affect the outcome slightly.
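One possible way around the "fungibility" problem is to draw a single shared timeline per sampled world and model the PRC's date as that timeline plus a lag. The sketch below is a hypothetical alternative, not the model used in this post: the lag distribution (normal with mean 1 year and sd 1 year) is an assumption chosen purely for illustration, and `sample_timeline` and `summarize` are made-up helper names.

```python
import random

def sample_timeline(rng, shift=0.0):
    """One draw from the 0.7/0.3 two-normal timeline mixture."""
    if rng.random() < 0.7:
        return rng.gauss(2035 + shift, 5)
    return rng.gauss(2060 + shift, 20)

def summarize(gaps):
    """Share of worlds where the US is first, and the mean absolute gap."""
    us_first = sum(g > 0 for g in gaps) / len(gaps)
    mean_abs_gap = sum(abs(g) for g in gaps) / len(gaps)
    return us_first, mean_abs_gap

rng = random.Random(1)
n = 50_000

# Independent draws: PRC date minus US date, each from its own mixture.
independent_gaps = [
    sample_timeline(rng, shift=1) - sample_timeline(rng) for _ in range(n)
]

# Shared world: one timeline per world, PRC = US date + an assumed lag.
shared_gaps = []
for _ in range(n):
    us = sample_timeline(rng)
    prc = us + rng.gauss(1, 1)  # assumed lag in years
    shared_gaps.append(prc - us)

indep_us_first, indep_gap = summarize(independent_gaps)
shared_us_first, shared_gap = summarize(shared_gaps)
print(indep_us_first, indep_gap)
print(shared_us_first, shared_gap)
```

Under these assumptions the independent comparison makes the race look close to a coin flip with gaps of many years, while the shared-world version gives the leader a clear edge with gaps of about a year.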

The expected value of a race world then is

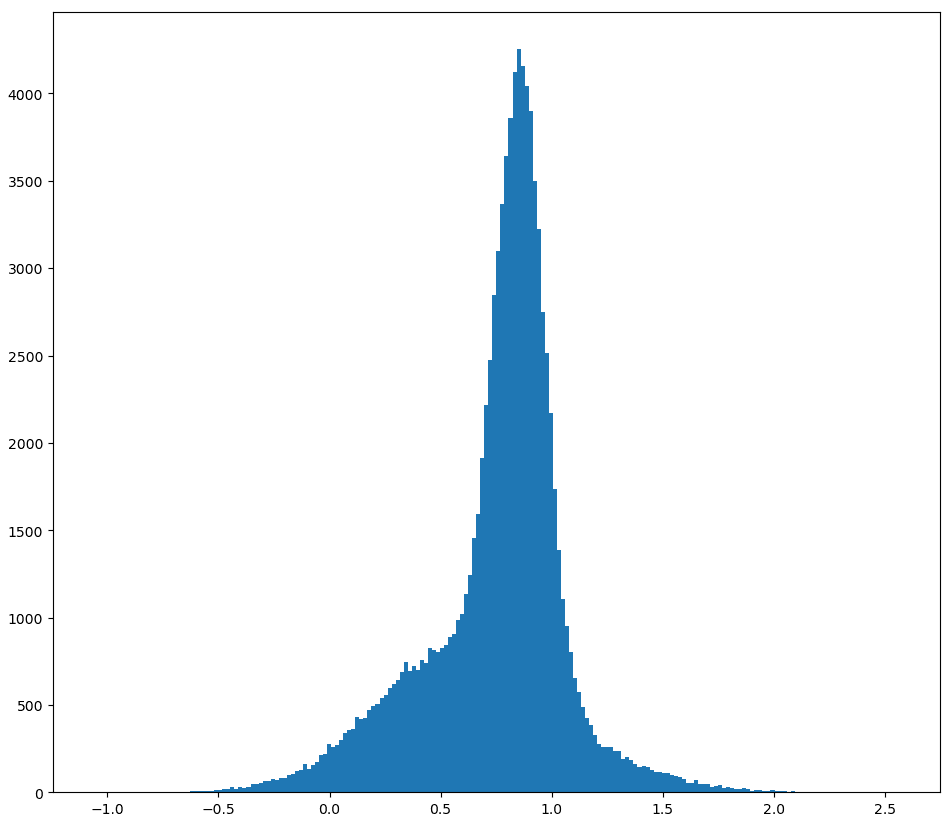

    race_val = us_wins_race * ev_us_wins_race + prc_wins_race * ev_prc_wins_race

    >>> np.mean(race_val)
    0.7543640906126139
    >>> np.median(race_val)
    0.7772837900955506
    >>> np.var(race_val)
    0.12330641850356698
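The mean of ≈0.754 can be cross-checked analytically. Assuming the win indicator, the p(doom) samples and the value samples are drawn independently of each other (as they are when each is sampled separately), the expectation factorizes, and the reported mean implies a US win probability. The sketch uses the means of the input distributions; `expected_race_value` and `implied_p_us_wins` are hypothetical helper names.

```python
# Means of the input distributions, taken from their definitions.
mean_pdoom_us = 2 / (2 + 18)        # Beta(2, 18)
mean_pdoom_prc = 1.5 / (1.5 + 6)    # Beta(1.5, 6)
mean_val_us, mean_val_prc = 0.95, 0.8

# With independent samples, E[(1 - pdoom) * value] = (1 - E[pdoom]) * E[value].
ev_us = (1 - mean_pdoom_us) * mean_val_us
ev_prc = (1 - mean_pdoom_prc) * mean_val_prc

def expected_race_value(p_us_wins):
    """Expected race value, ignoring exact ties between the two timelines."""
    return p_us_wins * ev_us + (1 - p_us_wins) * ev_prc

def implied_p_us_wins(mean_race_value):
    """Invert expected_race_value for the US win probability."""
    return (mean_race_value - ev_prc) / (ev_us - ev_prc)

p = implied_p_us_wins(0.7543640906126139)
print(ev_us, ev_prc, p)
```

Under these assumptions the reported mean corresponds to the US winning a little over half of the sampled races, which is what comparing independently drawn timelines with a one-year offset would be expected to produce.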

As for the non-race situation in which the US decides not to scramble for TAI, the calculation is even simpler:

    non_race_val = (prc_nonrace_val @ 100000) * (1 - pdoom_prc_nonrace @ 100000)

Summary stats:

    >>> np.mean(non_race_val)
    0.7217417036642355
    >>> np.median(non_race_val)
    0.7079529247343247
    >>> np.var(non_race_val)
    0.1610011984251525

Comparing the two:

Abdullah: …huh. I didn't expect this.

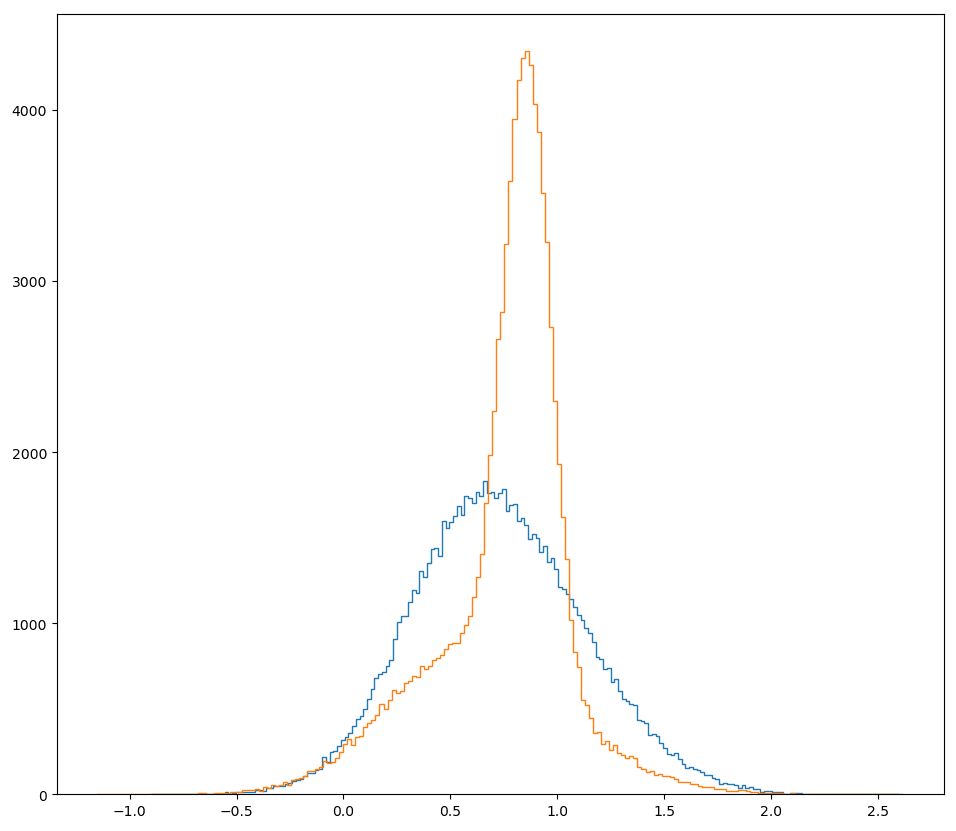

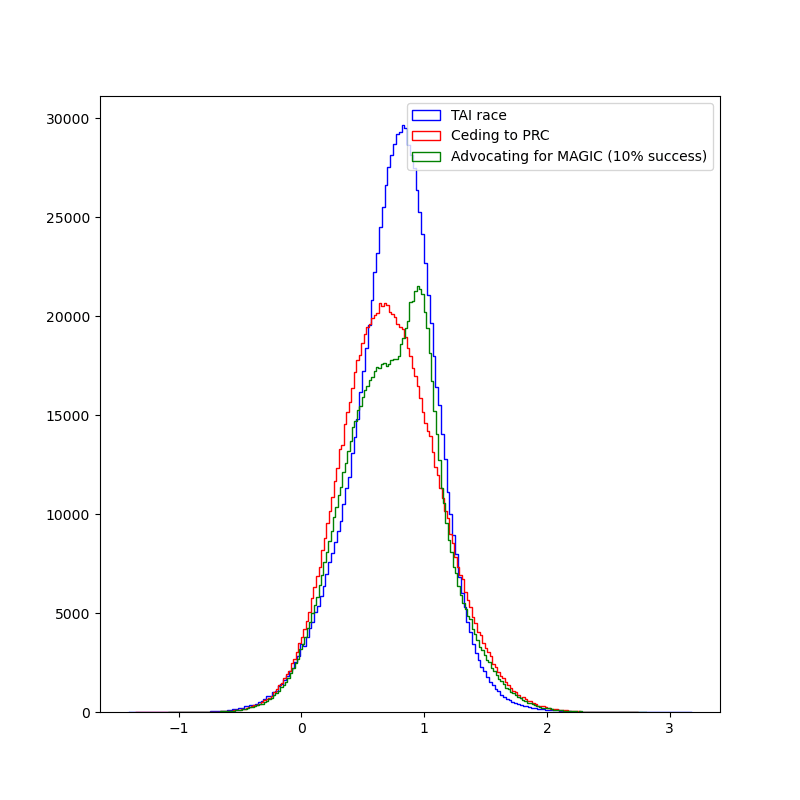
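Beyond comparing means and medians, two sets of samples can be checked for first-order stochastic dominance by comparing their quantiles one by one. The sketch below uses synthetic stand-in samples, not the samples from this post, and `quantiles` and `first_order_dominates` are hypothetical helper names; the check covers the 1st to 99th percentile only.

```python
import random

def quantiles(samples, levels):
    """Empirical quantiles by sorting and indexing (no interpolation)."""
    ordered = sorted(samples)
    last = len(ordered) - 1
    return [ordered[min(int(q * len(ordered)), last)] for q in levels]

def first_order_dominates(a, b, levels=None):
    """True if every checked quantile of `a` is at least that of `b`."""
    levels = levels or [i / 100 for i in range(1, 100)]
    pairs = zip(quantiles(a, levels), quantiles(b, levels))
    return all(qa >= qb for qa, qb in pairs)

rng = random.Random(2)
n = 20_000
# Illustrative stand-ins only: a narrow sample, a shifted copy, a wider one.
narrow = [rng.gauss(0.75, 0.3) for _ in range(n)]
shifted = [x + 0.1 for x in narrow]               # dominates `narrow`
wide = [rng.gauss(0.72, 0.4) for _ in range(n)]   # its quantiles cross `narrow`

print(first_order_dominates(shifted, narrow))
print(first_order_dominates(narrow, wide), first_order_dominates(wide, narrow))
```

A distribution with a slightly lower center but a wider spread has worse low quantiles and better high quantiles, so neither direction of the check passes.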

The mean and median of the value of worlds with a TAI race are higher than those of worlds without a race, and the variance of the value of a non-race world is higher. But neither world stochastically dominates the other: non-race worlds have a higher density of better-than-MAGIC values, while having basically the same worse-than-extinction densities. I update myself towards thinking that a race can be beneficial, Benjamin!

Benjamin:

Abdullah: I'm not done yet, though.

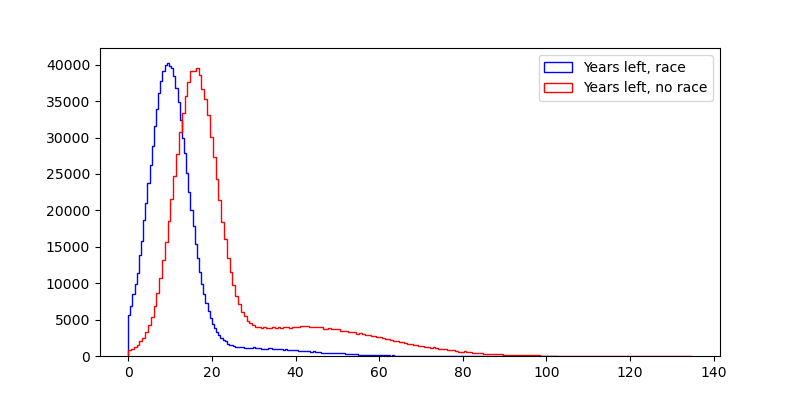

The first additional consideration is that in a non-race world, humanity is in the situation of living a few years longer before TAI happens and we either live in a drastically changed world or we go extinct.

    import time

    curyear = time.localtime().tm_year
    years_left_nonrace = (timeline_prc_nonrace - curyear) @ 100000
    # Years until TAI in a race: take the winner's date in each sampled world
    years_left_race = np.hstack((us_timelines_race[us_timelines_race < prc_timelines_race], prc_timelines_race[us_timelines_race > prc_timelines_race])) - curyear

Whether these distributions are good or bad depends very much on the relative value of pre-TAI and post-TAI lives. (Except for the possibility of extinction, which is already accounted for.)

I think that TAI-lives will probably be far better than pre-TAI lives, on average, but I'm not at all certain: I could imagine a situation like the Neolithic revolution, which arguably was net-bad for the humans living through it.

(leans back)

But the other thing I want to point out is that we've been assuming that the US just sits back and does nothing while the PRC develops TAI.

What if, instead, we assume that the US tries to convince its allies and the PRC to instead join a MAGIC consortium, for example by demonstrating "model organisms" of alignment failures.

A central question now is: How high would the probability of success of this course of action need to be to be as good or even better than entering a race?

I'll also guess that MAGIC takes a whole while longer to get to TAI, about 20 years more than the US in a race. (If anyone has suggestions about how this affects the shape of the distribution, let me know.)

    timeline_magic = sq.mixture([sq.norm(mean=2055, sd=5, lclip=2024), sq.norm(mean=2080, sd=20, lclip=2024)], [0.7, 0.3])

If we assume that the US has a 10% shot at convincing the PRC to join MAGIC, how does this shift our expected value?

    # 90%: advocacy fails and the PRC builds TAI alone; 10%: MAGIC happens
    little_magic_val = sq.mixture([(prc_nonrace_val * (1 - pdoom_prc_nonrace)), (magic_val * (1 - pdoom_magic))], [0.9, 0.1])
    some_magic_val = little_magic_val @ 1000000

Unfortunately, it's not enough:

    >>> np.mean(some_magic_val)
    0.7478374812339188
    >>> np.mean(race_val)
    0.7543372422248729
    >>> np.median(some_magic_val)
    0.7625907656231915
    >>> np.median(race_val)
    0.7768634378292709

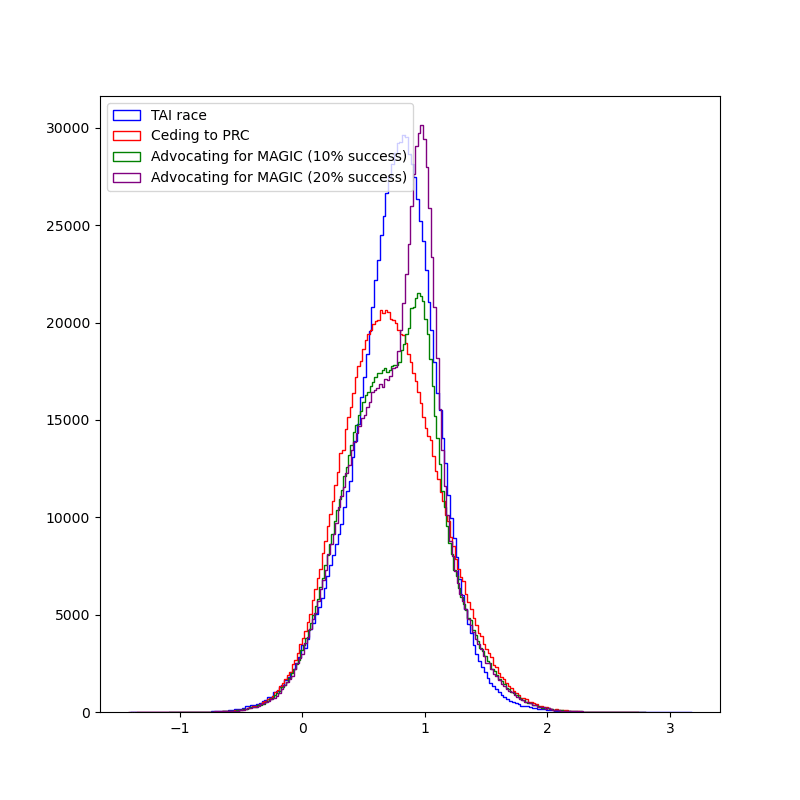
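Because the mean of a mixture is linear in the mixture weight, the success probability at which advocacy matches racing in the mean can be computed directly. The sketch below plugs in the sample means reported earlier for the race and non-race worlds; the MAGIC mean assumes independence between value and p(doom), and `mixture_mean` and `break_even_p` are hypothetical helper names. It says nothing about medians or variance.

```python
def mixture_mean(p_magic, mean_nonrace, mean_magic):
    """Mean of a two-component mixture with weight p_magic on MAGIC."""
    return (1 - p_magic) * mean_nonrace + p_magic * mean_magic

def break_even_p(mean_race, mean_nonrace, mean_magic):
    """Success probability at which advocacy matches racing in the mean."""
    return (mean_race - mean_nonrace) / (mean_magic - mean_nonrace)

# Sample means reported earlier for the race and non-race worlds.
mean_race = 0.7543640906126139
mean_nonrace = 0.7217417036642355
# Assumed: E[magic_val] * (1 - E[pdoom_magic]) with mean 1 and Beta(2, 96).
mean_magic = 1.0 * (1 - 2 / (2 + 96))

p_star = break_even_p(mean_race, mean_nonrace, mean_magic)
mean_at_10 = mixture_mean(0.1, mean_nonrace, mean_magic)
mean_at_20 = mixture_mean(0.2, mean_nonrace, mean_magic)
print(p_star, mean_at_10, mean_at_20)
```

Under these assumptions the break-even point for the mean lies between 10% and 20%, so a 10% success probability falls just short while 20% clears it.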

What if we are a little bit more likely to be successful in our advocacy, with 20% chance of the MAGIC proposal happening?

That beats the worlds in which we race, fair and square:

    >>> np.mean(more_magic_val)
    0.7740403582341773
    >>> np.median(more_magic_val)
    0.8228921409188543

But worlds in which the US advocates for MAGIC at 20% success probability still have more variance:

    >>> np.var(more_magic_val)
    0.14129776984186218
    >>> np.var(race_val)
    0.12373193215918225

Benjamin: Hm. I think I'm a bit torn here. 10% success probability for MAGIC doesn't sound crazy, but I find 20% too high to be believable.

Maybe I'll take a look at your code and play around with it to see where my intuitions match and where they don't. I especially think your choice of using normal distributions for the value of the future, conditioning on who wins, is questionable at best. I think lognormals are far better.

But I'm happy you came to your senses, started actually arguing your position, and then changed your mind.

(checks watch)

Oh shoot, I've gotta go! Supermarket's nearly closed!

See you around, I guess!

Abdullah: See you around! And tell the wife and kids I said hi!

I hope this gives some clarity on how I'd like those conversations to go, and that people put in a bit more effort.

And please, don't make me write something like this again. I have enough to do to respond to all your bad takes with something like this.

[1] I personally think it's 2⅔ [shannon](https://en.wikipedia.org/wiki/Shannon_(unit)) higher than that, with p(doom)≈55%.
