Published on April 20, 2025 7:00 PM GMT

Say hi at ICSE in Ottawa, I’ll be at the reception Thursday, this colocated event on Friday, and the LLM4Code workshop on Saturday.

As usual there are no benefits to the paid subscription.

Sorry for consolidating two months into one post again after I said I wouldn’t.

Fermstimate of the cost of patching all security relevant open source software

Niplav writes

So, a proposal: Whenever someone claims that LLMs will d/acc us out of AI takeover by fixing our infrastructure, they will also have to specify who will pay the costs of setting up this project and running it.

I’m almost centrally the guy claiming LLMs will d/acc us out of AI takeover by fixing infrastructure, technically I’m usually hedging more than that but it’s accurate in spirit.

If transformative AI is developed soon, most open source projects (especially old ones relevant to internet infrastructure) are going to be maintained by humans with human response times. That will significantly increase the time for relevant security patches to be reviewed and merged into existing codebases, especially if at the time attackers will submit AI-generated or co-developed subtle exploits using AI systems six to nine months behind the leading capabilities, keeping maintainers especially vigilant.

I usually say we prove the patches correct! But Niplav is correct: it’s a hard social problem, many critical systems maintainers are particularly slop-phobic and won’t want synthetic code checked in. That’s why I try to emphasize that the two trust points are the spec and the checker, and the rest is relinquished to a shoggoth. That’s the vision anyway– we solve this social problem by involving the slop-phobic maintainers in writing the spec and conveying to them how trustworthy the deductive process is.

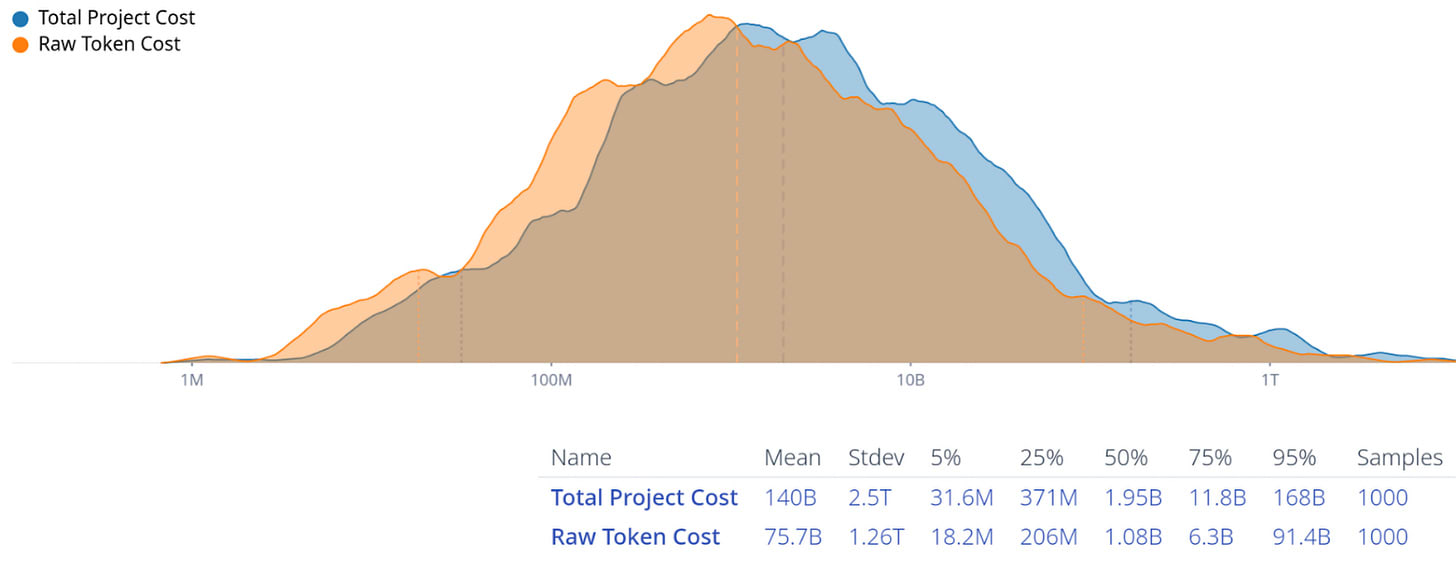

Median $~1b worth of tokens, plus all the “setting up the project, paying human supervisors and reviewers, costs for testing infrastructure & compute, finding complicated vulnerabilities that arise from the interaction of different programs…” etc costs. I think a lot’s in our action space to reduce those latter costs, but the token cost imposes a firm lower bound.

But this is an EA Forum post, meaning the project is being evaluated as an EA cause area: is it cost effective? To be cost effective, the savings from alleviating some disvalue have to be worth the money you’ll spend. As a programming best practices chauvinist, one of my pastimes is picking on CrowdStrike, so let’s not pass up the opportunity. The 2024 outage is estimated to have cost about $5b across the top 500 companies excluding microsoft. A public goods project may not have been able to avert CrowdStrike, but it’s instructive for getting a flavor of the damage, and this number suggests it could be easily worth spending around Niplav’s estimate. On cost effectiveness though, even I (who works on this “LLMs driving Hot FV Summer” thing full time) am skeptical, only because open source software is pretty hardened already. Curl/libcurl saw 23 CVEs in 2023 and 18 in 2024, which it’d be nice to prevent but really isn’t a catastrophic amount. Other projects are similar. I think a lot about the Tony Hoare quote “It has turned out that the world just does not suffer significantly from the kind of problem that our research was originally intended to solve.” Not every bug is even an exploit.

Safeguarded AI

Creator profiles for TA1.1 and TA3

The TA1.1 ones have been up for a while and I think I missed them, but TA3 was posted fairly recently.

TA3 is the applications domain. Yaknow, the real world, that thing from all those functional programming jokes.

This work aims to address the challenge of AI deployment in safety-critical sectors by combining scientific world models and mathematical proofs to develop robust safety assurances.

Researchers from diverse companies and organisations such as AstraZeneca, Mind Foundry, the University of Oxford, the University of Birmingham, and the University of York, will aim to demonstrate how safeguarded AI systems can help them to make significant improvements in vital sectors.

TA2 call

This isn’t really open to individuals, but as always reading the funding call gets you some insight into ARIA’s worldview and strategy

ARIA is launching a multi-phased solicitation for Technical Area 2 (TA2) to support the development of a general-purpose Safeguarded AI workflow. The programme aims to demonstrate that frontier AI techniques can be harnessed to create AI systems with verifiable safety guarantees. In TA2, we will award £18m to a non-profit entity to develop critical machine learning capabilities, requiring strong organizational governance and security standards. Phase 1, backed by £1M, will fund up to 5 teams to spend 3.5 months to develop full Phase 2 proposals. Phase 2 — which will open on 25 June 2025 —will fund a single group, for £18M, to deliver the research agenda. TA2 will explore leveraging securely-boxed AI to train autonomous control systems that can be verified against mathematical models, improving performance and robustness. The workflow will involve forking and fine-tuning mainstream pre-trained frontier AI models to create verifiably safeguarded AI solutions.

I’ve heard this described as something like a “new frontier lab”, which is <sarcasm>always music to my ears</sarcasm>, but in truth I’m only worried about shops that push the envelope on scaling (chinchilla or otherwise). Boromir wanted to use the One Ring to defend Gondor, and we largely trust Tolkien/whomever when they say that defensive acceleration is folly and the Ring’s corrupting influence incentives are stronger than the will of man. Nevertheless, I feel pretty good about this because it’s narrow and targeted. Eager to find out if I’ll eat my words.

Conferences, events

High Confidence Software and Systems Conference May 12, Maryland US

CAV July 21, Croatia

Formal methods for Rust May 2nd, Ottawa CA

New control paper

GSAI and Control are really complimentary forces so I think I’ll mention news in Control, at least sometimes

Control evaluations measure whether monitoring and security protocols for AI systems prevent intentionally subversive AI models from causing harm. Our work presents the first control evaluation performed in an agent environment. We construct BashBench, a dataset of 257 challenging multi-step system administration tasks, and evaluate whether various safety measures can prevent an adversarially constructed AI agent from covertly downloading and executing malicious code in this environment. This multi-step setting introduces new attack and defense dynamics, which we investigate in order to design novel control protocols that prevent safety failures without hindering the ability of non-malicious agents to perform useful work. We introduce a class of control protocols called resample protocols that dynamically take additional samples of certain actions. We find these protocols significantly improve on existing techniques by selectively blocking the AI agent from executing suspicious code and incriminating the agent by generating additional examples of dangerous behavior. We measure the tradeoff between attack prevention and usefulness; our best protocol combines resampling with analysis of previous steps, reducing the success rate of attacks from 58% to 7% at a 5% cost to the performance of a non-malicious agent.

Discuss