Meta is boasting Llama 4 Scout as having an industry-leading context window of 10 million tokens. Social media was eating this up and talking about how you can fit multiple textbooks into the context window and get information from them. While that’s true, the large context window really isn’t as useful as everyone has made it out to be.

Yes, 10 million is a lot of tokens and achieving a 10 million token context window for a production model is a feat (check out MagicAI’s 100 million token context window for kicks), but a large context window isn’t infallible and being able to achieve 10 million tokens comes at a great cost.

What you should know

A large context window isn’t infallible.

You can feed multiple textbooks into a large context window, but it's very difficult for models to accurately recall information from within a context window that is 10 million tokens long. This means you can give the model as much info as you’d like but that doesn’t mean you’ll get the output you’re hoping for.

Models seem to struggle at retrieving information in the middle of the given input and bias their output toward the information at beginning and end of the context.

Training costs are immense.

The traditional self-attention mechanism also becomes computationally prohibitive with so many input tokens. It calculates attention scores between all pairs of input tokens (which is O(n2) for all you engineers) and becomes inherently prohibitive as n scales up. This necessitates the use of non-standard attention mechanisms to make massive context windows computationally viable.

Even with these optimizers, training is expensive. The quadratic term is significant when a model is trained with 256,000 input tokens for each forward pass (this is an estimate based on the Llama 4 information) and model training takes a long time when the model is trained on over 40 trillion tokens.

Without more info from Meta, it’s difficult to estimate the exact training time and cost, but it wouldn’t surprise me if training Llama 4 Scout took tens or hundred of millions of H100 GPU-hours and cost Meta well over $500 million dollars (probably closer to $1 billion mark).

Utilizing the 10 million token context window comes with significant serving constraints.

Whether or not a model has a 10 million token context window in practice comes down to more than just enabling the context window via training and model architecture. The company hosting the model also needs to make the memory requirements for such a context window available to users.

Those memory requirements are massive. As the context window of an LLM increases, the LLM will store more relationships between tokens in its key-value (KV) cache. The KV cache scales roughly linearly as the number of tokens increases.

In fact, the required memory of the KV cache for a 10 million token input is so large that the KV cache becomes the primary memory constraint instead of the size of model parameters.

Let’s do some back-of-the-napkin math to understand how much serving a model with this context window costs:

First, our KV cache size equation:

KV Cache = 2 * number_of_layers * sequence_length * number_kv_heads * head_dimension * bytes_per_element

Second, extracting those values by extrapolating from Llama 3 (couldn’t find these for Llama 4 Scout, so let me know if they’re available somewhere):

number_of_layers = 40

head_dimension = 128

number_kv_heads = 8

bytes_per_element = 0.5 (4-bit precision or 0.5 bytes)

Third, calculation our KV cache cost per token:

= 2 * number_of_layers * number_kv_heads * head_dimension * bytes_per_element

= 2 * 40 * 8 * 128 * 0.5 = 40960 bytes = 40.96 KB

Fourth, our KV cache cost per 1 million token:

= cost_per_token * 1 million

= 40,960 bytes * 1 million = 40,960,000,000 bytes = 40.96 GB per million

Fifth, our KV cache cost per 10 million tokens:

= cost per million * 10

= 40,960,000,000 bytes * 10 = 409,600,000,000 bytes = 409.60 GB

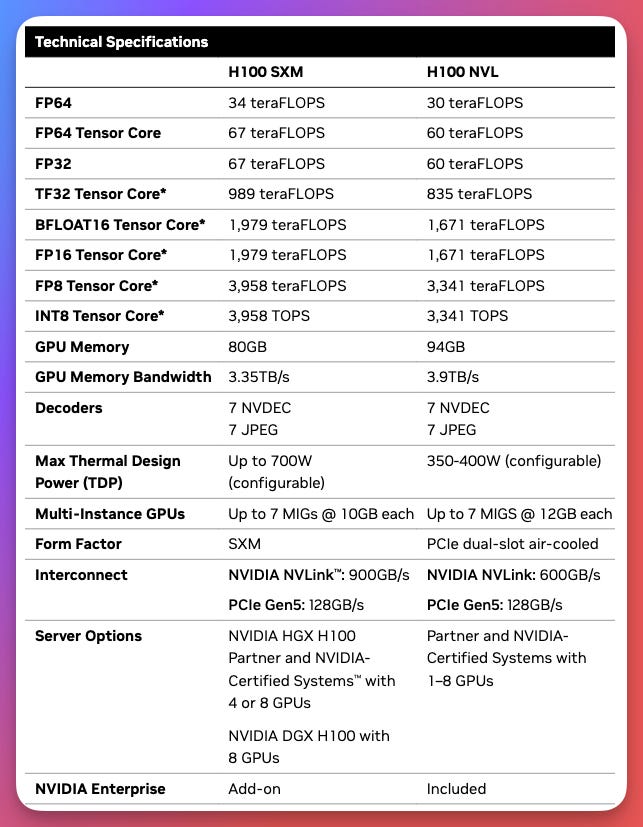

This means Llama 4 Scout with the 10 million token context window won’t be running on a consumer GPU and will require aggressive engineering to fit into a single H100 (80 GB of VRAM so even more quantization, requiring a shorter context length, and more).

This means to serve one model at a 10 million token context window, the company hosting Llama 4 Scout would likely need 7 H100s (storing the KV cache in memory along with ~55 GB of model weights at 4-bit) which would require a server with 8 H100s since that’s how they’re usually made available.

Finally, estimate our cost based on compute requirements:

Looking across GCP, Azure, and AWS, a server of this size would cost ~$90/hour. Compare this to the cost of a single H100 which is ~$10/hr which Llama 4 Scout can fit on if the context window is reduced.

This is a rudimentary calculation. Hosting Llama 4 Scout with a large context window would likely cost a company less than the numbers above if they were using their own servers, but these numbers are a good estimate.

The lesson here is that not just training, but hosting an LLM with a large context window is expensive. Just because a model is capable of a large context window doesn’t mean it’ll be served with that window.

The real tragedy of the Llama series is that they’ve gotten so large they’re basically incapable of being run on a single consumer GPU. This is what made the Llama models so beloved in the AI community.

Companies keep their context window strategies close to the chest.

Companies generally don’t share how they achieve their large context windows and don’t get too specific with details. This is why my numbers above are just estimates (especially training costs).

However, the community is able to reason pretty well about how achieving this context window could be done but the actual methodology is kept secret. The thing we do know is it takes a lot of compute and training time to make happen.

Just writing about this is making me excited. This really is what machine learning engineering is fundamentally about: making machine learning work in practice.

As always I’m open to any questions and let me know if there’s an error in my work.

Always be (machine) learning,

Logan