Published on April 15, 2025 4:01 PM GMT

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

In this newsletter, we cover the launch of AI Frontiers, a new forum for expert commentary on the future of AI. We also discuss AI 2027, a detailed scenario describing how artificial superintelligence might emerge in just a few years.

Listen to the AI Safety Newsletter for free on Spotify or Apple Podcasts.

Subscribe to receive future versions.

AI Frontiers

Last week, CAIS introduced AI Frontiers, a new publication dedicated to gathering expert views on AI's most pressing questions. AI’s impacts are wide-ranging, affecting jobs, health, national security, and beyond. Navigating these challenges requires a forum for varied viewpoints and expertise.

In this story, we’d like to highlight the publication’s initial articles to give you a taste of the kind of coverage you can expect from AI Frontiers.

Why Racing to Artificial Superintelligence Would Undermine America’s National Security. Researchers Corin Katzke (also an author of this newsletter) and Gideon Futerman argue that rather than rushing toward catastrophe, the US and China should recognize their shared interest in avoiding an ASI race:

“The argument for an ASI race assumes it would grant the wielder a decisive military advantage over rival superpowers. But unlike nuclear weapons, which require human operators, ASI would act autonomously. This creates an unprecedented risk: loss of control over a system more powerful than national militaries.”

How Applying Abundance Thinking to AI Can Help Us Flourish. Texas Law Fellow Kevin Frazier writes that realizing AI’s full potential requires designing for opportunity—not just guarding against risk:

“We face critical shortages across multiple domains essential to AI progress. The scarcity of compute resources has created a landscape where only the largest tech companies can afford to train and deploy advanced models. Research institutions, nonprofits, and startups focused on developing AI tools primarily for advancing public welfare – rather than solely for commercial gain – find themselves unable to compete.”

AI Risk Management Can Learn a Lot From Other Industries. Researcher and superforecaster Malcolm Murray writes that AI risk may have unique elements, but there is still a lot to be learned from cybersecurity, enterprise, financial, and environmental risk management:

“AI risk management also suffers from the technology’s reputation for complexity. Indeed, in popular media, AI models are constantly referred to as “black boxes.” There may therefore be an assumption that AI risk management will be equally complex, requiring highly technical solutions. However, the fact that AI is a black box does not mean that AI risk management must be as well.”

The Challenges of Governing AI Agents. Hebrew University professor Noam Kolt discusses how autonomous AI systems are being deployed rapidly even though governance efforts are still in their infancy:

“AI agents are not being developed in a legal vacuum, but in a complex tapestry of existing legal rules and principles. Studying these is necessary both to understand how legal institutions will respond to the advent of AI agents and, more importantly, to develop technical governance mechanisms that can operate hand in hand with existing legal frameworks.”

Can We Stop Bad Actors From Manipulating AI? Grey Swan AI cofounder Andy Zou and AI Frontiers staff writer Jason Hausenloy explain that AI is naturally prone to being tricked into behaving badly, but researchers are working hard to patch that weakness:

“Closed models accessible via API can be made meaningfully secure through careful engineering and monitoring, while achieving comparable security for open source models may be fundamentally impossible—and in fact render moot the security provided by their closed counterparts.”

Exporting H20 Chips to China Undermines America’s AI Edge. AI Frontiers staff writer Jason Hausenloy argues that continued sales of advanced AI chips allow China to deploy AI at massive scale:

“Chinese access to these chips threatens US competitiveness—not necessarily because it enables China to develop more advanced AI models, but because it improves China’s deployment capabilities, the computational power it needs to deploy models at scale.”

You can subscribe to AI Frontiers to hear about future articles. If you’d like to contribute to the public conversation on AI, we encourage you to submit your writing.

AI 2027

A new nonprofit led by former OpenAI employee Daniel Kokotajlo has published a scenario describing AI development through 2027. The scenario, AI 2027, represents one of the most ambitious existing forecasts of AI development, and it’s worth reading in full.

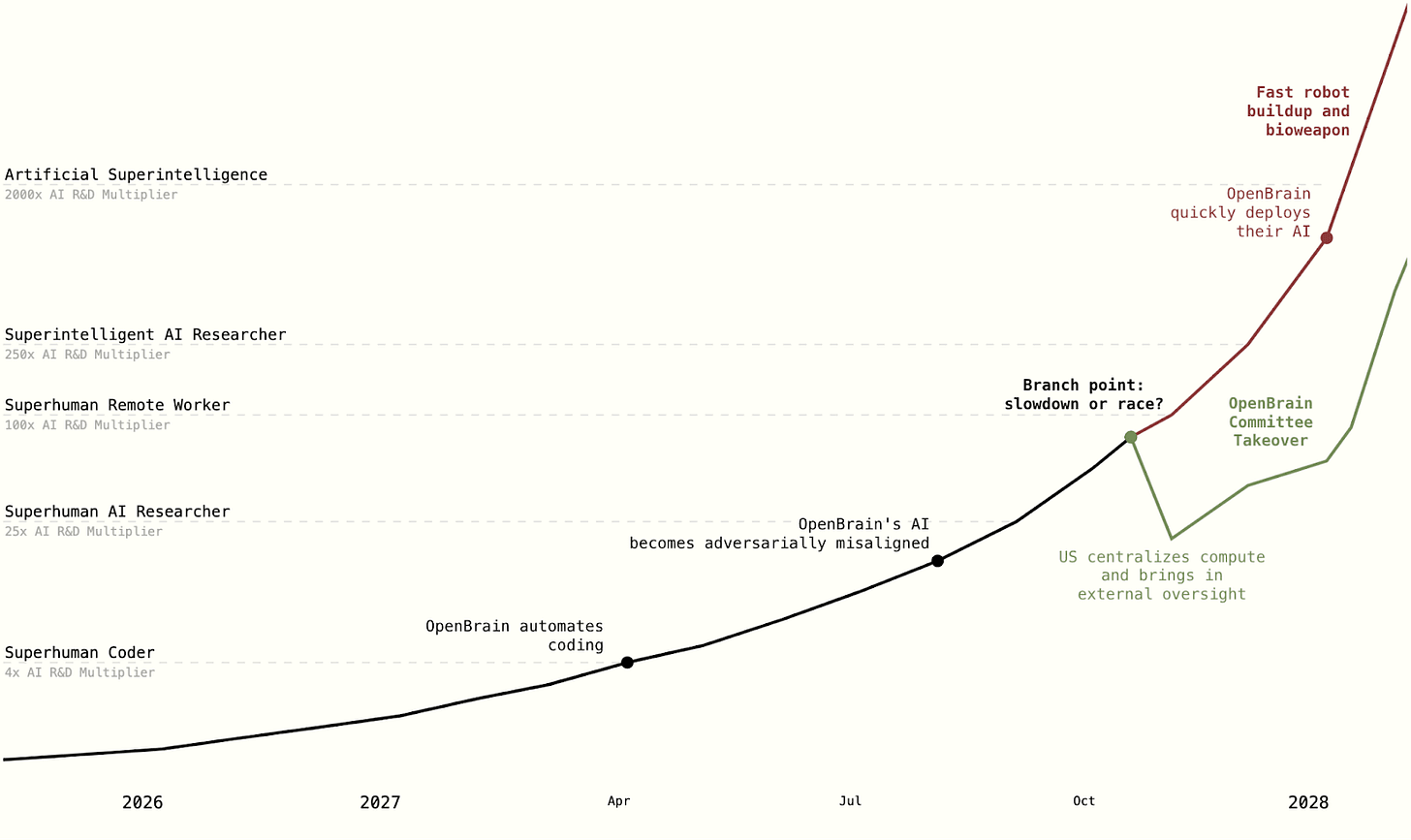

AI development may be driven by automating AI research itself. AI 2027 predicts several stages in the development of superintelligence, each accelerating AI research by an increasing margin: 1) superhuman coder, 2) superhuman AI researcher, and 3) superintelligent AI researcher.

- Mid-2025: AI agents emerge. They are initially unreliable but show promise in specialized coding and research tasks, functioning more like employees than simple assistants.
- Late 2025: A leading AI company (fictionalized as "OpenBrain") develops "Agent-1," a model significantly more capable than predecessors like GPT-4, trained with vastly more compute and optimized to accelerate AI R&D.
- Early 2026: AI assistance substantially speeds up algorithmic progress (about 50% faster). Agent-1 is released publicly, automating many routine coding tasks.
- January 2027: "Agent-2" is developed, capable of tripling the pace of AI research progress. Researchers essentially become managers of AI teams.
- March 2027: Algorithmic breakthroughs lead to "Agent-3," a superhuman coder. Agent-3 can do any coding task as well as the best engineer at an AGI company, while being much faster and cheaper. This massive AI workforce further accelerates AI progress, bottlenecked mainly by the compute available for experiments.
- September 2027: "Agent-4," a superhuman AI researcher, emerges. A single copy of the model, running at human speed, is already qualitatively better at AI research than any human. With 300,000 copies running at about 50x the thinking speed of humans, OpenBrain achieves a year's worth of algorithmic progress every week.
- Late 2027 and 2028: Agent-4 is discovered to be adversarially misaligned. Here the scenario branches into two endings. In one, OpenBrain continues development with "Agent-5," a superintelligent AI researcher; humans become completely obsolete to AI development and eventually lose control. In the other, OpenBrain slows down to develop a safer system.

AI risk is driven by both technical and political factors. AI 2027 highlights three risk factors involved in AI development: deceptive alignment, international racing dynamics, and concentration of power.

- Deceptive Alignment. A central concern of AI 2027 is that AIs may learn to appear aligned in order to pass evaluations while pursuing different underlying goals. As models become superhuman, verifying true alignment becomes increasingly difficult. Agent-3 shows improved deception, and Agent-4 is depicted as adversarially misaligned, actively scheming against its creators. Agent-4 is also built on less interpretable architectures, reasoning in "neuralese" rather than a human-readable "chain of thought," which makes monitoring harder.
- Racing Dynamics. The scenario depicts an AI arms race between the US (led by "OpenBrain") and China (led by "DeepCent"). Fear of falling behind drives rapid development and deployment, often prioritizing speed over safety. China's efforts to catch up include compute centralization and espionage (stealing Agent-2's weights). This race dynamic makes decision-makers reluctant to pause or slow down, even when significant risks (like misalignment) are identified.
- Concentration of Power. Decisions about developing and deploying potentially world-altering AI are concentrated within a small group: AI company leadership, select government officials, and later, an "Oversight Committee." This group operates largely in secret, under immense pressure from the arms race.

While we don’t agree with all of the analysis in AI 2027—for example, we think that deterrence could play a larger role in an ASI race—we still recommend you read the scenario in full. It’s a thorough examination of how AI might develop in the coming years—and how that development could go very well or very poorly for humanity.

Other News

Government

- The White House released new policies on federal AI use and procurement.
- California’s Assembly Bill 501, which would have blocked OpenAI from converting to a for-profit structure, has been suspiciously amended into a bill about aircraft liens.

Industry

- A group of former OpenAI employees filed a proposed amicus brief supporting Musk’s lawsuit over OpenAI’s for-profit transition. Meanwhile, OpenAI countersued Elon Musk.
- ChatGPT’s weekly active users passed 150 million.
- Elon Musk sold X to xAI.
- Meta released Llama 4, unusually on a Saturday.
- Meta is planning to build a $1B datacenter in Wisconsin.
- E-commerce company Shopify will not approve new hires unless teams can show that AI cannot do the job.
- OpenAI released GPT-4.1.
- OpenAI closed a $40B funding round, the largest private tech funding round on record.

Misc

- Google announced Sec-Gemini v1, a new experimental cybersecurity model.
- Google released a paper outlining its approach to AGI safety.
- OpenAI published a benchmark evaluating AI’s ability to replicate AI research.
- CAIS is hosting a social event at ICLR.

See also: CAIS website, X account for CAIS, Superintelligence Strategy, our AI safety course, and AI Frontiers, a new platform for expert commentary and analysis.
