Published on April 10, 2025 5:15 PM GMT

This is cross-posted from my blog.

We need more people on board with life extension in order to hit longevity escape velocity in our lifetimes. But most people have never heard of life extension, and even those who have often raise the same predictable objections. “What if it doesn't work?” “What if bad people live forever?” “What if humanity needs to refresh its stock every so often in order to make progress?” “What about heaven?”

We need more people working on AI safety so we don't all end up dead.

We need more people to understand the coordination problem as the central problem in politics and economics.

For those of us in the business of spreading ideas, it can be a tough row to hoe (I had to look up this phrase to make sure I was saying it right). It can feel like an uphill battle, but the way you model it can help guide you.

This is my model when thinking about spreading ideas, especially ones outside the Overton window. It applies less in the later stages of a movement when the Overton window has shifted.

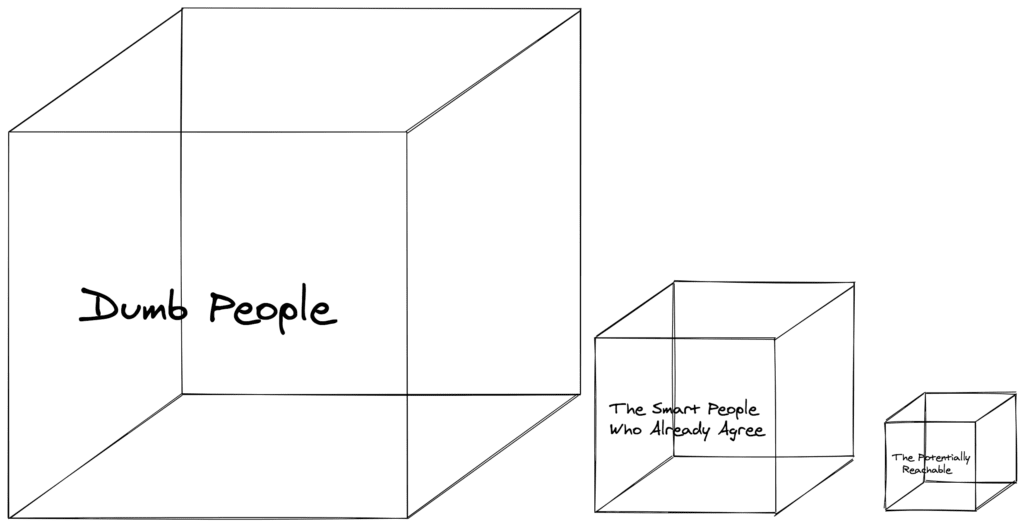

It’s about three boxes. I like buckets, but I already have an important post about three buckets, so boxes it is.

There’s the giant box. This box has the people that don’t agree with you. In fact, they probably think you’re a terrible person just for talking about these ideas.

There’s the small box. This box has the people that already agree with you.

Then there’s the Tiffany-sized box. These are the people who you may be able to reach with your ideas. The box is much smaller than the giant box.

Why is this?

Four reasons:

1. Efficient market hypothesis. The people who are open to these ideas already believe them.

The obvious caveat is that the EMH applies less to new, radical, or uncommon ideas, since people are less likely to have heard of them. Think transhumanism vs. democracy, or AI safety research vs. Christianity.

2. The number of people who are smart enough, high enough in openness, and reasonable enough to understand and accept a new idea is small.

3. More often than not, the truth is something we don’t want to hear or don’t want to be true. I would love it if there were an eternal paradise, but there isn’t. It would be wonderful if everyone were born smart and beautiful, but they aren’t. I would love it if we knew for certain that cryonics would work, but we don’t.

4. Rationality people can be worse at selling ideas than non-rationality people for a number of reasons:

a. Autism and other forms of neurodivergence are overrepresented in the rationality community, which can make people less socially skilled than normies.

b. Many are uncomfortable using the so-called "Dark Arts" of persuasion/influence.

c. Rationality people promote epistemic awareness and humility and recognize that things are often shades of grey. They often provide confidence levels instead of blanket statements, e.g. “I’m very confident that my model of Covid-19 is right.” or “I have low confidence in my model of what’s going on with AlphaZero.” They also recognize that most issues involve pros, cons, and nuance. This can be less of a sell than “This is how it works! Big Pharma is evil!”

So how should we act based on all this? What do we do with this model?

Here are some implications:

1. Try not to be discouraged by the giant box.

As a cynic, I know this can be hard. There are some things we can do to alleviate discouragement:

a. Surround yourself with the people in the small box, the good and smart people who are on the same page, so you can find relief in them. “I’m not the only sane one!” The trick (besides finding such people) is to avoid surrounding yourself with yes men or letting your group turn into an echo chamber.

b. Avoid spending a lot of time reading annoying shit on the internet. Reading flame wars on Twitter can be addictive, but it’s mostly a waste of time that gets us worked up and not much else.

c. Keep a list of people who inspire you. I’m starting to do this by writing about my heroes.

2. Put your effort into the Tiffany-sized box: the people who are likely to be open to your ideas and smart enough to understand them.

Libertarians would love it if there were more women among them. But focusing just on recruiting more women might be a huge waste of resources, in the same way that it would be a waste of effort to get more women to become loggers (99.1% men in 2016) or more men to become kindergarten teachers (97.5% women in 2016).

Yes, this can result in overrepresentation, but sometimes there’s not much you can do about it, even if you make large attempts at being more “inclusive”.

To use your resources effectively in spreading ideas, it’s better to focus on more fertile ground first.

a. Notice which beliefs and traits correlate with being open to the idea, and focus on people who have them.

In the libertarian example, they might focus more effort on converting women gun enthusiasts vs women at liberal arts colleges.

Now, if you’re promoting veganism, you’d want to switch that and focus on the liberal arts colleges vs gun enthusiasts.

I wish it weren’t like this and we could all have the best beliefs instead of these strong, irrational constellations of beliefs, but we don’t currently live in that world.

3. Remember that it can get easier and that breakneck change is eventually possible.

As your numbers, and in turn your resources, grow, you’ll be in a better position to shift the Overton window. Or at least to be a major player in the future Culture Wars.

Once the Overton window has shifted, change can happen very fast! Support can grow only modestly for a while, and then it hits a tipping point where things change, metaphorically, overnight. Support for, and legalization of, gay marriage is the poster child for this.

Major politicians of all parties (including Obama and the Clintons) were against gay marriage. While Bernie wasn’t 100% out and proud in supporting gay marriage, he was light years ahead of everyone else.

It’s a good thing to celebrate people changing their minds to better and more accurate beliefs. But when people just flip-flop because their tribe did or it’s politically expedient, that is less of a cause for celebration.

Nerdy liberal girls who were tripping over themselves to identify as Ravenclaws now think that J.K. Rowling deserves death.

Culture is weird and volatile. (Even if you think Rowling is worthy of death, it still is weird and sad that people’s beliefs change that fast.)

Capriciousness just means they’ll give up a reasonable and rational belief for whichever one is in vogue. That’s bad.

This kind of capriciousness makes me feel like there should be a different word for beliefs that have been worked out and evaluated and then supported or not, vs this kind of tribal emotional bullshit, but that’s for another post.

A tipping point can also come when a new technology becomes available instead of just being theoretical. The old chestnut “If I had asked people what they wanted, they would have said faster horses” comes to mind.

A future example is longevity treatment. Very few people today support longevity research, despite supporting things that point in the same direction but are much less effective, like breast cancer research. This is because people are stupid.

But as Aubrey de Grey and others point out, the second we get real anti-aging treatment, you’ll have people overwhelmingly switch to wanting it. And bitching that they can’t have it despite actively not supporting it before, the fuckers...

What’s the point of getting people to change their minds now, then, if they’ll just end up changing them on their own in the future?

The point is to get support in the form of money, people working on research, or public policy changes. Every day we remain lax on things like AI safety research increases the risk that we die from AGI. Every day we don’t have longevity tech is 100,000+ people dying. 99% of the things people purport to care about absolutely pale in comparison to causes like these. That’s why we work to spread ideas.

So in summary:

There are three boxes:

- The giant box: the people that don’t agree with you.
- The small box: the people that already agree with you.
- The Tiffany-sized box: the people who you may be able to reach with your ideas.

The lessons from the three boxes model are:

- Try not to be discouraged.
- Surround yourself with people who inspire you and make you feel less cynical.
- Write about the people who inspire you.
- Sow your seeds in fertile ground.
- Remember that it can get easier and rapid change is possible.
- Don’t forget the stakes matter. Keep up the good fight.
